For those in NYC working in AI, ML-NYC is a free monthly speaker series co-organized by the Flatiron Institute, Columbia, and NYU. Past speakers include Bin Yu, Christos Papadimitriou, Léon Bottou (and many more). Talks are followed by a catered reception.

Join us Feb 11th @ 4pm for Romain Lopez!

31.01.2026 16:17 —

👍 1

🔁 0

💬 0

📌 0

Excited to highlight recent work from the lab at NeurIPS! If you’re interested in understanding why uncertainty estimates often break under distribution shift — and how we can do better — check out Yuli’s poster tomorrow.

03.12.2025 17:08 —

👍 3

🔁 1

💬 0

📌 0

ML-NYC Speaker Series and Happy Hour: Daniel Björkegren

AI for Low-Income Countries

The ML in NYC Speaker Series + Happy Hour is excited to host Professor Daniel Björkegren as our December speaker as he speaks about AI for Low-Income Countries!

Registration: www.eventbrite.com/e/ml-nyc-spe...

02.12.2025 18:21 —

👍 2

🔁 0

💬 0

📌 1

Hello!

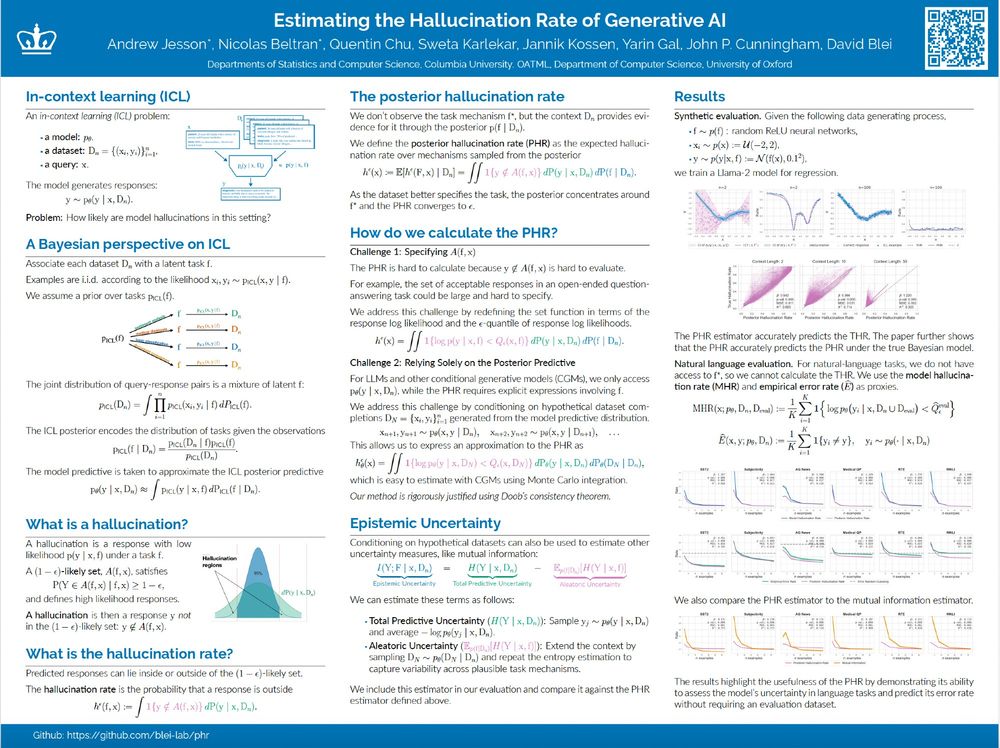

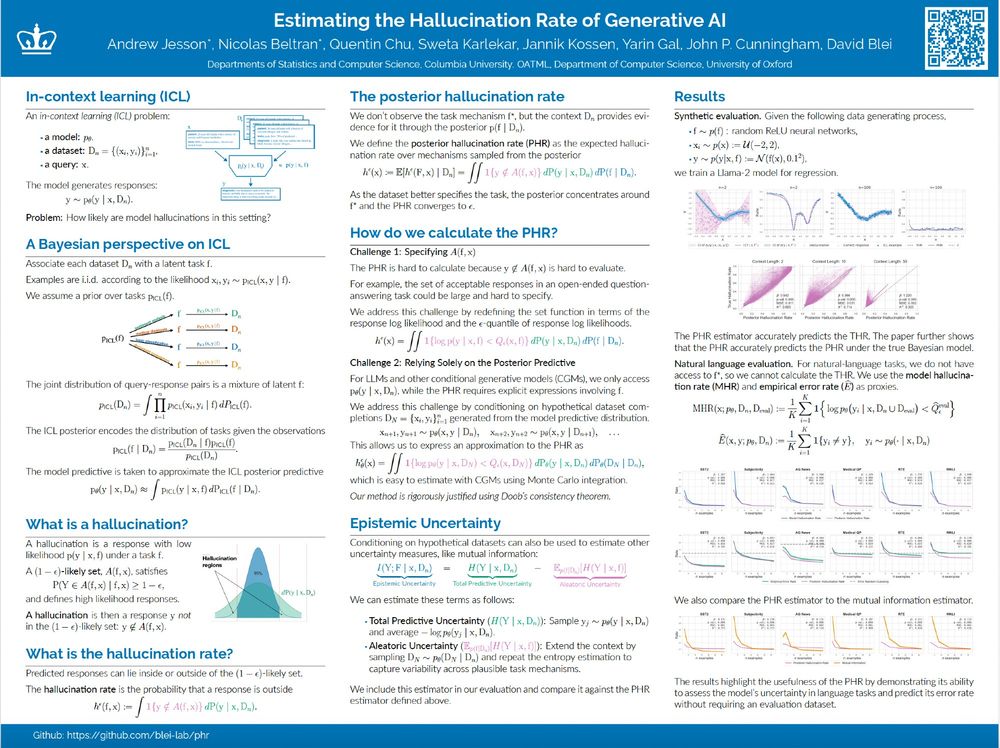

We will be presenting Estimating the Hallucination Rate of Generative AI at NeurIPS. Come if you'd like to chat about epistemic uncertainty for In-Context Learning, or uncertainty more generally. :)

Location: East Exhibit Hall A-C #2703

Time: Friday @ 4:30

Paper: arxiv.org/abs/2406.07457

12.12.2024 18:13 —

👍 23

🔁 4

💬 0

📌 1

fun @bleilab.bsky.social x oatml collab

come chat with Nicolas , @swetakar.bsky.social , Quentin , Jannik , and i today

13.12.2024 17:26 —

👍 10

🔁 1

💬 1

📌 0

Check out our new paper from the Blei Lab on probabilistic predictions with conditional diffusions and gradient boosted trees! #Neurips2024

02.12.2024 23:02 —

👍 32

🔁 6

💬 0

📌 0

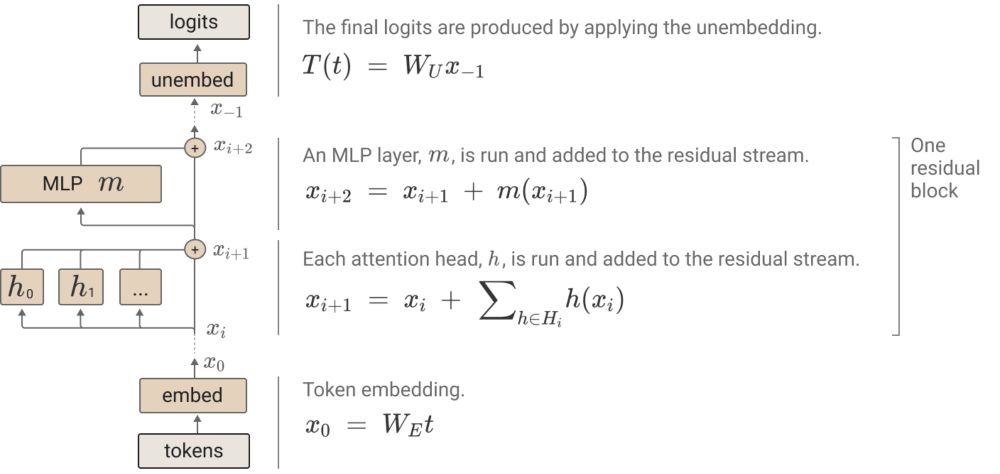

Check out our new paper about hypothesis testing the circuit hypothesis in LLMs! This work previously won a top paper award at the ICML mechanistic interpretability workshop, and we’re excited to share it at #Neurips2024

10.12.2024 19:07 —

👍 7

🔁 1

💬 0

📌 0

For anyone interested in fine-tuning or aligning LLMs, I’m running this free and open course called smol course. It’s not a big deal, it’s just smol.

🧵>>

03.12.2024 09:21 —

👍 326

🔁 64

💬 9

📌 4

Very happy to share some recent work by my colleagues @velezbeltran.bsky.social, @aagrande.bsky.social and @anazaret.bsky.social! Check out their work on tree-based diffusion models (especially the website—it’s quite superb 😊)!

02.12.2024 22:49 —

👍 16

🔁 1

💬 1

📌 0

GitHub - andrewyng/aisuite: Simple, unified interface to multiple Generative AI providers

Simple, unified interface to multiple Generative AI providers - GitHub - andrewyng/aisuite: Simple, unified interface to multiple Generative AI providers

Just learned about @andrewyng.bsky.social's new tool, aisuite (github.com/andrewyng/ai...) and wanted to share! It's a standardized wrapper around chat completions that lets you easily switch between querying different LLM providers, including OpenAI, Anthropic, Mistral, HuggingFace, Ollama, etc.

29.11.2024 20:25 —

👍 23

🔁 3

💬 1

📌 0

Announcing the NeurIPS 2024 Test of Time Paper Awards – NeurIPS Blog

Test of Time Paper Awards are out! 2014 was a wonderful year with lots of amazing papers. That's why, we decided to highlight two papers: GANs (@ian-goodfellow.bsky.social et al.) and Seq2Seq (Sutskever et al.). Both papers will be presented in person 😍

Link: blog.neurips.cc/2024/11/27/a...

27.11.2024 15:48 —

👍 110

🔁 14

💬 1

📌 2

Sorry John, that isn’t my area of expertise!

25.11.2024 00:44 —

👍 0

🔁 0

💬 1

📌 0

This is very interesting! Do you have any intuition as to whether or not this phenomenon happens only with very simple “reasoning” steps? Does relying on retrieval increase as you progress from simple math to more advanced prompts like GSM8K or adversarially designed prompts (like adding noise)?

24.11.2024 16:29 —

👍 3

🔁 0

💬 1

📌 0

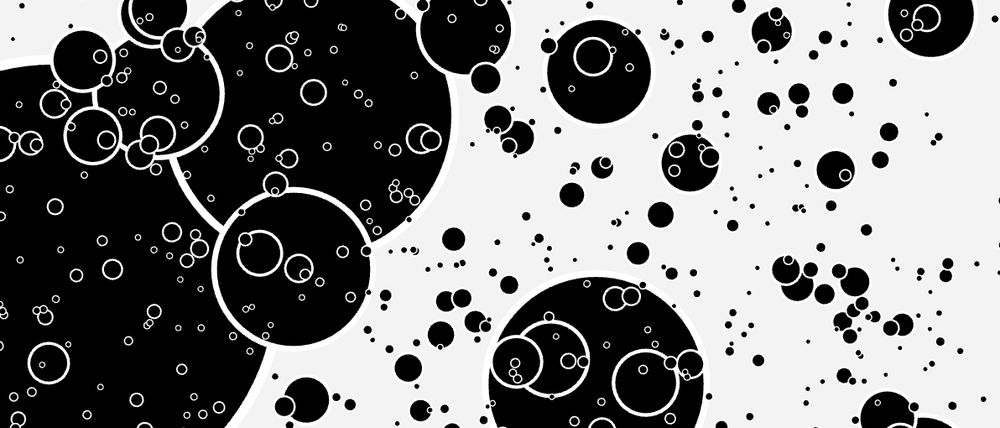

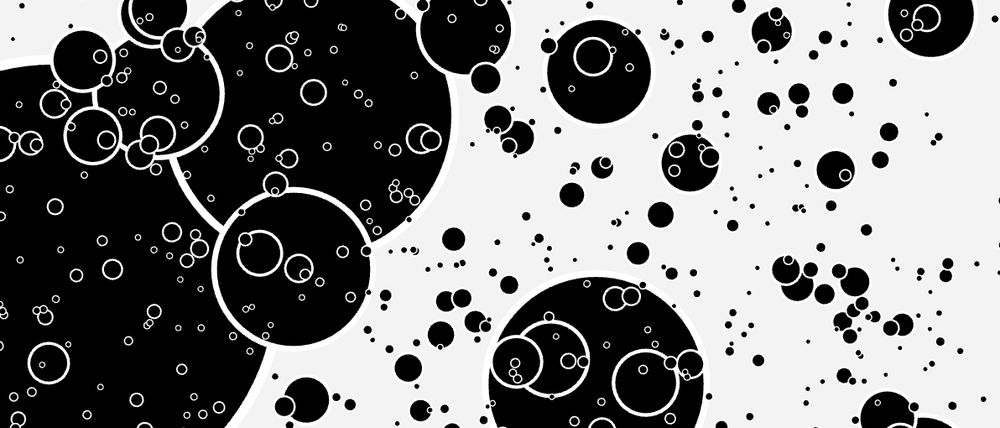

Many circles of different sizes, representing a visualization of inequality

The Gini coefficient is the standard way to measure inequality, but what does it mean, concretely? I made a little visualization to build intuition:

www.bewitched.com/demo/gini

23.11.2024 15:35 —

👍 199

🔁 57

💬 10

📌 8

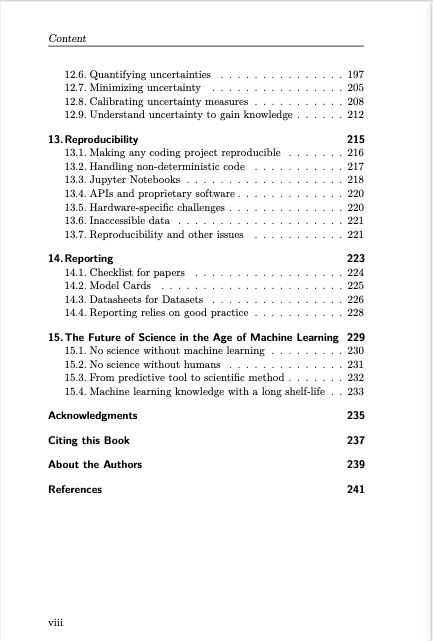

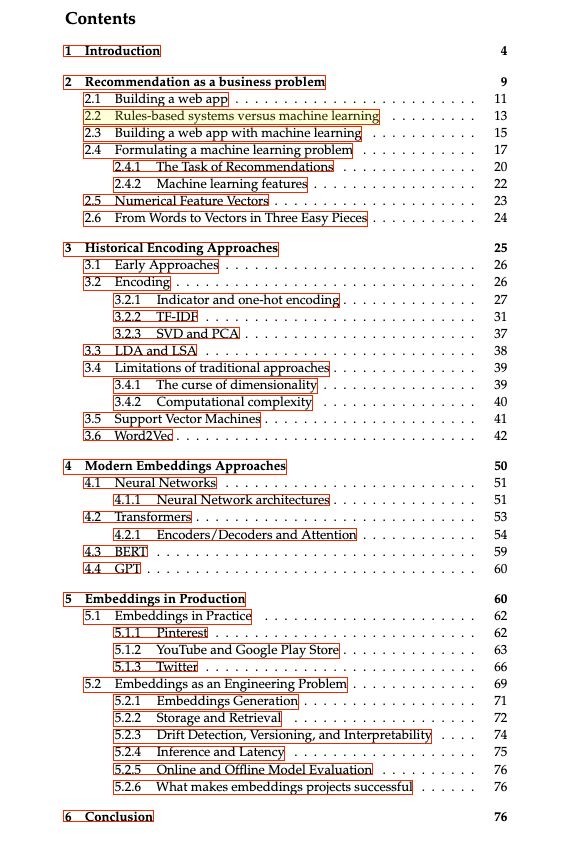

Book outline

Over the past decade, embeddings — numerical representations of

machine learning features used as input to deep learning models — have

become a foundational data structure in industrial machine learning

systems. TF-IDF, PCA, and one-hot encoding have always been key tools

in machine learning systems as ways to compress and make sense of

large amounts of textual data. However, traditional approaches were

limited in the amount of context they could reason about with increasing

amounts of data. As the volume, velocity, and variety of data captured

by modern applications has exploded, creating approaches specifically

tailored to scale has become increasingly important.

Google’s Word2Vec paper made an important step in moving from

simple statistical representations to semantic meaning of words. The

subsequent rise of the Transformer architecture and transfer learning, as

well as the latest surge in generative methods has enabled the growth

of embeddings as a foundational machine learning data structure. This

survey paper aims to provide a deep dive into what embeddings are,

their history, and usage patterns in industry.

Cover image

Just realized BlueSky allows sharing valuable stuff cause it doesn't punish links. 🤩

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...

22.11.2024 11:13 —

👍 653

🔁 101

💬 22

📌 6

(Shameless) plug for David Blei's lab at Columbia University! People in the lab currently work on a variety of topics, including probabilistic machine learning, Bayesian stats, mechanistic interpretability, causal inference and NLP.

Please give us a follow! @bleilab.bsky.social

20.11.2024 20:41 —

👍 20

🔁 3

💬 1

📌 0

Hi! Our lab does Bayesian stuff :) Could you add Dave Blei's lab to this pack as well if it's not already full? @bleilab.bsky.social

20.11.2024 15:38 —

👍 1

🔁 0

💬 1

📌 0

Could you add Dave Blei's lab to this pack as well if it's not already full? @bleilab.bsky.social

20.11.2024 15:37 —

👍 0

🔁 0

💬 0

📌 0

Could you add Dave Blei's lab to this pack as well if it's not already full? @bleilab.bsky.social

20.11.2024 15:36 —

👍 1

🔁 0

💬 0

📌 0

Could you add Dave blei's lab to this pack as well if it's not already full! @bleilab.bsky.social

20.11.2024 15:36 —

👍 0

🔁 0

💬 1

📌 0

We created an account for the Blei Lab! Please drop a follow 😊

@bleilab.bsky.social

20.11.2024 15:34 —

👍 3

🔁 1

💬 0

📌 0

Oh, I’ve been meaning to check out that YouTube series—thanks! Also sadly, there's no class website, but I can share the "super quick intro to mech interp" presentation I made. It’s somewhat rough, but hopefully, it gets the main points across! sweta.dev/files/intro_...

20.11.2024 15:08 —

👍 1

🔁 0

💬 1

📌 0

Mailing list contact information

Information to be added to the post-Bayes mailing list.

📢 Post-Bayesian online seminar series coming!📢

To stay posted, sign up at

tinyurl.com/postBayes

We'll discuss cutting-edge methods for posteriors that no longer rely on Bayes Theorem.

(e.g., PAC-Bayes, generalised Bayes, Martingale posteriors, ...)

Pls circulate widely!

19.11.2024 20:22 —

👍 16

🔁 6

💬 0

📌 3

Can’t believe I forgot about this paper, thanks so much!!

20.11.2024 13:55 —

👍 1

🔁 0

💬 0

📌 0

I haven’t read the first one but it looks very informative, thank you!! We also had a separate unit on transformer architecture; I’m going to add this to that paper list as well!

20.11.2024 13:55 —

👍 2

🔁 0

💬 0

📌 0