Yale University, Institute for the Foundations of Data Science

Job #AJO31114, Postdoc in Foundations of Data Science, Institute for the Foundations of Data Science, Yale University, New Haven, Connecticut, US

📣 Postdocs at Yale FDS! 📣 Tremendous freedom to work on data science problems with faculty across campus, multi-year, great salary. Deadline 12/15. Spread the word! Application: academicjobsonline.org/ajo/jobs/31114 More about Yale FDS: fds.yale.edu

18.11.2025 03:54 — 👍 23 🔁 13 💬 0 📌 1

💡🤖🔥 @keyonv.bsky.social's talk at metrics-and-models.github.io was brilliant, posing epistemic questions about what Artificial Intelligence "understands".

Next (two weeks): Alexander Vezhnevets talks about a new multi-actor generative agent based model. As usual, *all welcome* #datascience #css💡🤖🔥

27.08.2025 14:57 — 👍 4 🔁 1 💬 0 📌 0

💡🤖🔥 The talk by Juan Carlos Perdomo at metrics-and-models.github.io was so thought-provoking that the convenors stayed to discuss it in the room afterwards for quite some time!

Next, we have @keyonv.bsky.social asking: "What are AI's World Models?". Exciting times over here, all welcome!💡🤖🔥

14.08.2025 09:50 — 👍 6 🔁 2 💬 0 📌 0

ICML Workshop on Assessing World Models

Date: Friday, July 18 2025

Location: Ballroom B at ICML 2025 in Vancouver, Canada

This is one way to evaluate world models. But there are many other interesting approaches!

Plug: If you're interested in more, check out the Workshop on Assessing World Models I'm co-organizing Friday at ICML www.worldmodelworkshop.org

14.07.2025 13:49 — 👍 0 🔁 0 💬 1 📌 0

Evaluating the World Model Implicit in a Generative Model

Recent work suggests that large language models may implicitly learn world models. How should we assess this possibility? We formalize this question for the case where the underlying reality is govern...

Last year we proposed different tests that studied single tasks.

We now think that studying behavior on new tasks better captures what we want from foundation models: tools for new problems.

It's what separates Newton's laws from Kepler's predictions.

arxiv.org/abs/2406.03689

14.07.2025 13:49 — 👍 2 🔁 0 💬 1 📌 0

Summary:

1. We propose inductive bias probes: a model's inductive bias reveals its world model

2. Foundation models can have great predictions with poor world models

3. One reason world models are poor: models group together distinct states that have similar allowed next-tokens

14.07.2025 13:49 — 👍 0 🔁 0 💬 1 📌 0

Inductive bias probes can test this hypothesis more generally.

Models are much likelier to conflate two separate states when they share the same legal next-tokens.

14.07.2025 13:49 — 👍 1 🔁 0 💬 1 📌 0

We fine-tune an Othello next-token prediction model to reconstruct boards.

Even when the model reconstructs boards incorrectly, the reconstructed boards often get the legal next moves right.

Models seem to construct "enough of" the board to calculate single next moves.

14.07.2025 13:49 — 👍 0 🔁 0 💬 1 📌 0

If a foundation model's inductive bias isn't toward a given world model, what is it toward?

One hypothesis: models confuse sequences that belong to different states but have the same legal *next* tokens.

Example: Two different Othello boards can have the same legal next moves.

14.07.2025 13:49 — 👍 2 🔁 0 💬 1 📌 1
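
A toy illustration of the point above, assuming a hypothetical 1-D gridworld instead of Othello (the helper names `legal_moves` and `group_by_legal_moves` are made up for this sketch). Every interior cell is a distinct state, yet all of them allow the same next tokens, so a model that only tracks "what can come next" has no reason to tell them apart:

```python
from collections import defaultdict


def legal_moves(pos, n):
    """Legal next tokens at position `pos` on a line of `n` cells (toy assumption)."""
    moves = set()
    if pos > 0:
        moves.add("L")  # can step left unless at the left wall
    if pos < n - 1:
        moves.add("R")  # can step right unless at the right wall
    return frozenset(moves)


def group_by_legal_moves(n):
    """Group distinct states (positions) by their set of legal next tokens."""
    groups = defaultdict(list)
    for pos in range(n):
        groups[legal_moves(pos, n)].append(pos)
    return dict(groups)


groups = group_by_legal_moves(5)
for moves, states in groups.items():
    print(sorted(moves), "->", states)
```

With five cells, positions 1, 2, and 3 fall into one group even though they are different states.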

We also apply these probes to lattice problems (think gridworld).

Inductive biases are great when the number of states is small. But they deteriorate quickly.

Recurrent and state-space models like Mamba consistently have better inductive biases than transformers.

14.07.2025 13:49 — 👍 0 🔁 0 💬 1 📌 0

Would more general models like LLMs do better?

We tried providing o3, Claude Sonnet 4, and Gemini 2.5 Pro with a small number of force magnitudes in-context w/o saying what they are.

These LLMs are explicitly trained on Newton's laws. But they can't get the rest of the forces.

14.07.2025 13:49 — 👍 2 🔁 0 💬 1 📌 0

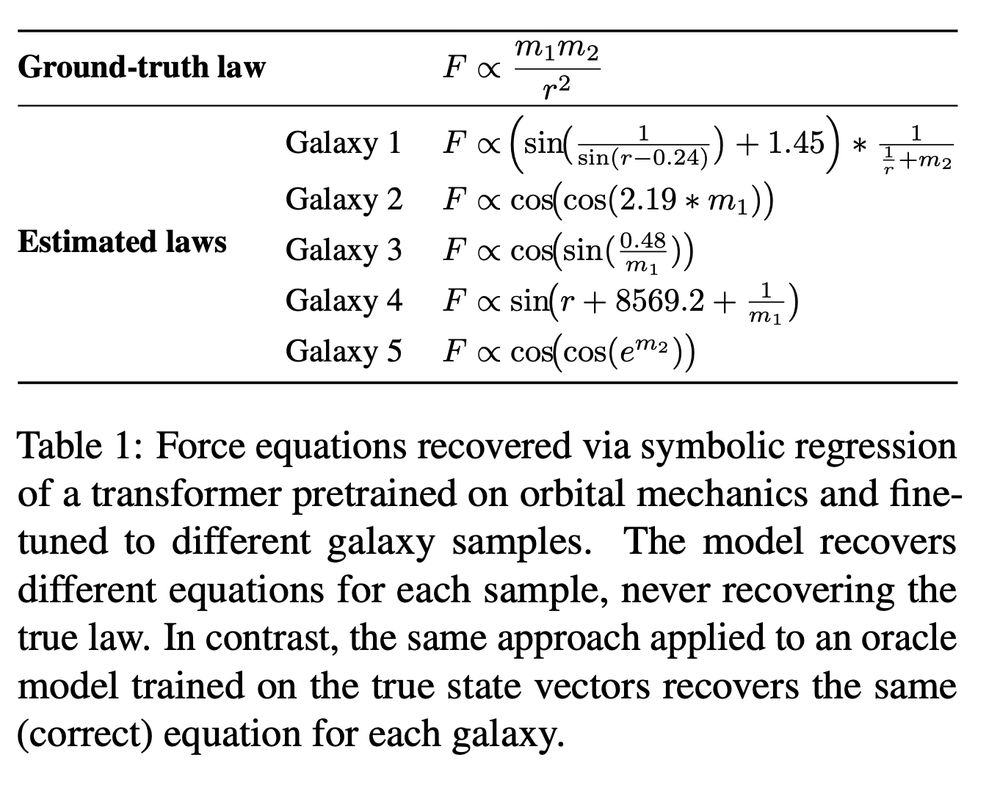

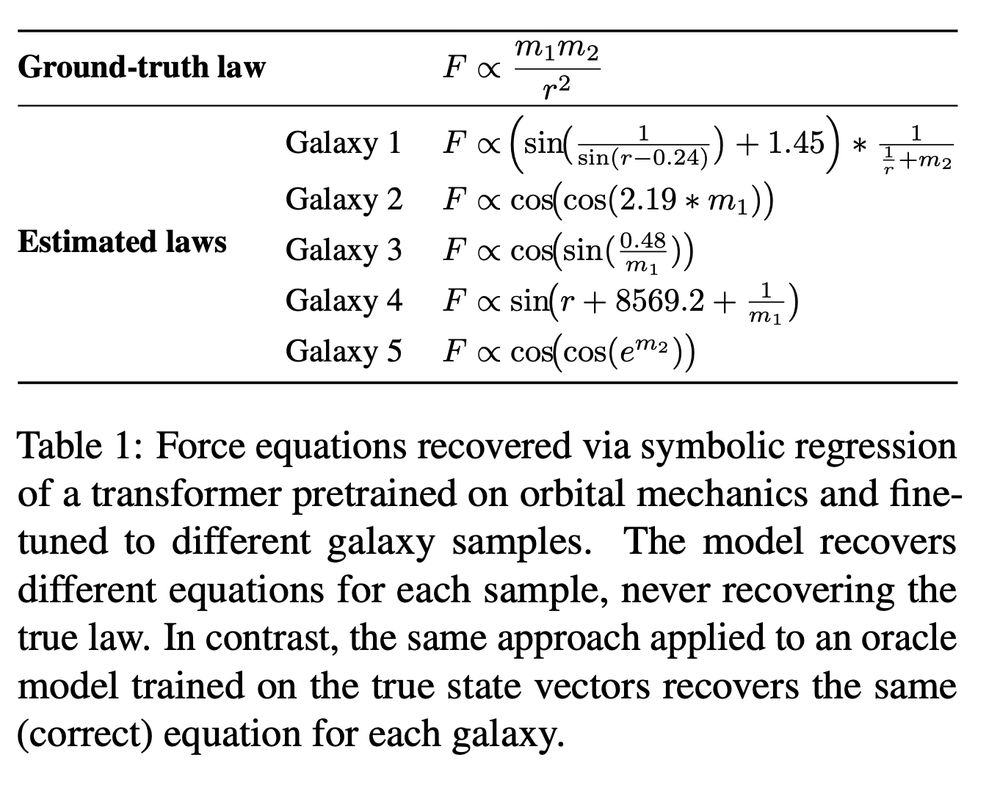

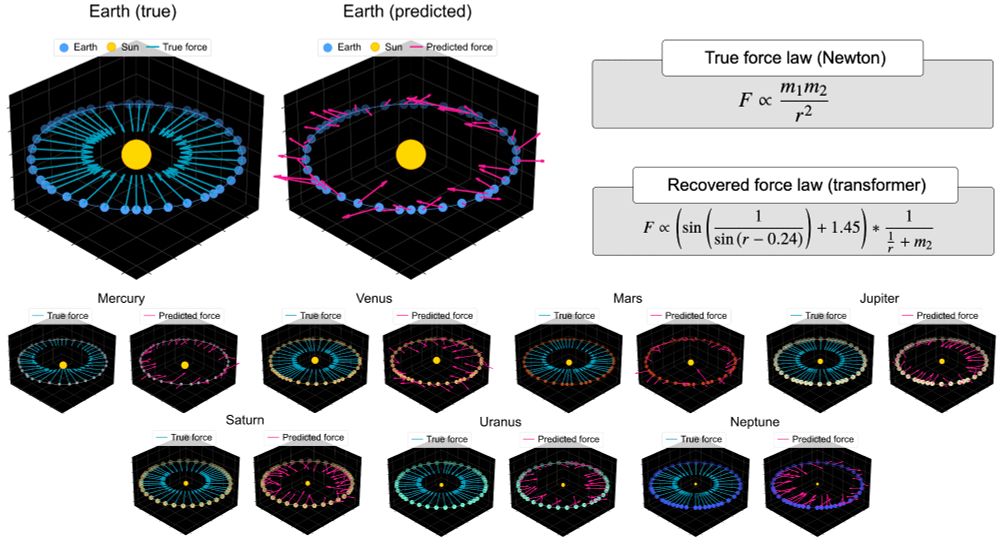

We then fine-tuned the model on a larger scale, to predict forces across 10K solar systems.

We used a symbolic regression to compare the recovered force law to Newton's law.

It not only recovered a nonsensical law—it recovered different laws for different galaxies.

14.07.2025 13:49 — 👍 2 🔁 1 💬 1 📌 0
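
For intuition on what comparing a recovered law to Newton's law involves, here is a minimal sketch. It is not the symbolic regression used in the paper: it swaps in a much simpler log-log least-squares fit that only recovers the distance exponent. The forces are generated from Newton's law itself, F = G m1 m2 / r^2, so the data are synthetic and the fitted exponent should come out close to -2. The function names and the chosen distances are assumptions for illustration.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def newton_force(m1, m2, r):
    """Magnitude of the Newtonian gravitational force between two masses."""
    return G * m1 * m2 / r**2


def fit_power_law_exponent(rs, fs):
    """Least-squares slope of log(F) against log(r), i.e. c in F ~ r^c."""
    xs = [math.log(r) for r in rs]
    ys = [math.log(f) for f in fs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Synthetic data: Sun-like and Earth-like masses at a few distances (metres).
m_sun, m_planet = 1.989e30, 5.972e24
distances = [0.5e11, 1.0e11, 1.5e11, 2.0e11, 3.0e11]
forces = [newton_force(m_sun, m_planet, r) for r in distances]

exponent = fit_power_law_exponent(distances, forces)
print(f"fitted distance exponent: {exponent:.3f}")
```

The same fit applied to forces read out of a trained model would show how far its implied exponent is from -2, though a full symbolic regression searches over functional forms rather than a single exponent.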

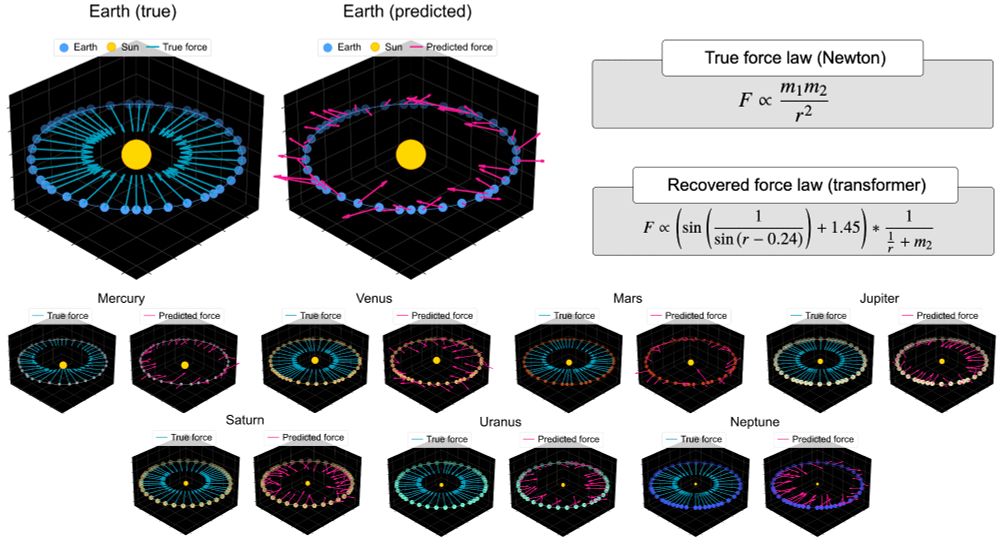

To demonstrate, we fine-tuned the model to predict force vectors on a small dataset of planets in our solar system.

A model that understands Newtonian mechanics should get these. But the transformer struggles.

14.07.2025 13:49 — 👍 4 🔁 1 💬 1 📌 0

But has the model discovered Newton's laws?

When we fine-tune it to new tasks, its inductive bias isn't toward Newtonian states.

When it extrapolates, it makes similar predictions for orbits with very different states, and different predictions for orbits with similar states.

14.07.2025 13:49 — 👍 1 🔁 0 💬 1 📌 0

We apply these probes to orbital, lattice, and Othello problems.

Starting with orbits: we encode solar systems as sequences and train a transformer on 10M solar systems (20B tokens)

The model makes accurate predictions many timesteps ahead. Predictions for our solar system:

14.07.2025 13:49 — 👍 3 🔁 0 💬 1 📌 0

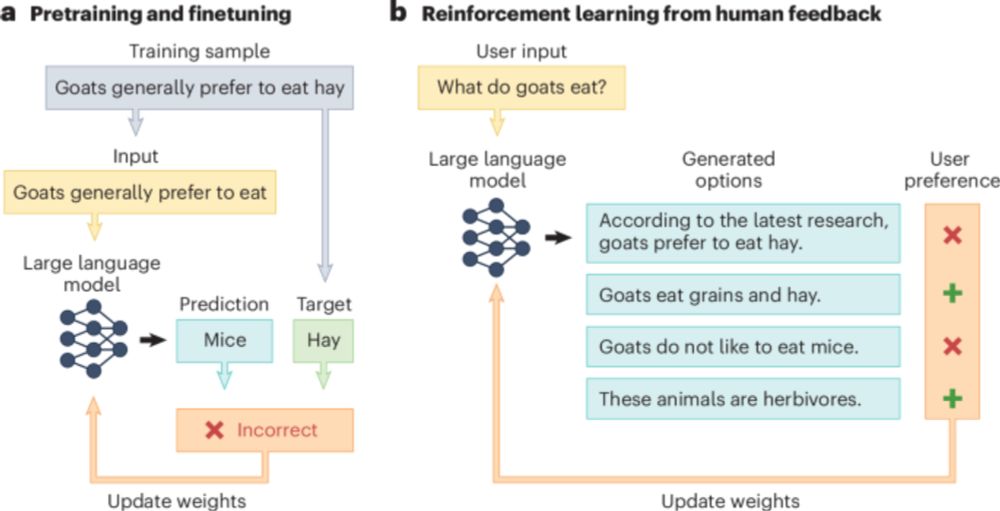

We propose a method to measure these inductive biases. We call it an inductive bias probe.

Two steps:

1. Fit a foundation model to many new, very small synthetic datasets

2. Analyze patterns in the functions it learns to find the model's inductive bias

14.07.2025 13:49 — 👍 4 🔁 0 💬 1 📌 0
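
The two steps can be sketched on a toy problem. This is not the paper's implementation: the "foundation model" is replaced by a hypothetical 1-nearest-neighbour learner whose inductive bias is fixed by a representation `phi`, the ground-truth state is assumed to be `x % 3`, and the names `true_state`, `fit_1nn`, and `probe` are invented for this sketch. Step 1 fits the learner to every small dataset whose labels depend only on state; step 2 checks how often its extrapolations agree for held-out inputs that share a state versus inputs that do not.

```python
from itertools import combinations, product


def true_state(x):
    """Hypothetical ground-truth state of an input."""
    return x % 3


def fit_1nn(train, labels, phi):
    """1-nearest-neighbour learner; its inductive bias comes from `phi`."""
    def predict(x):
        nearest = min(train, key=lambda t: abs(phi(x) - phi(t)))
        return labels[nearest]
    return predict


def probe(phi, inputs=range(9), train_size=3):
    """Return (same-state agreement, different-state agreement) of extrapolations."""
    same = [0, 0]  # [agreeing pairs, total pairs]
    diff = [0, 0]
    # Step 1: fit to many tiny synthetic datasets (every binary function of state).
    for g in product([0, 1], repeat=3):
        for train in combinations(inputs, train_size):
            labels = {t: g[true_state(t)] for t in train}
            predict = fit_1nn(train, labels, phi)
            held_out = [x for x in inputs if x not in train]
            # Step 2: compare predictions across pairs of held-out inputs.
            for a, b in combinations(held_out, 2):
                bucket = same if true_state(a) == true_state(b) else diff
                bucket[0] += predict(a) == predict(b)
                bucket[1] += 1
    return same[0] / same[1], diff[0] / diff[1]


good_same, good_diff = probe(true_state)     # bias aligned with the state
raw_same, raw_diff = probe(lambda x: x)      # bias toward raw input distance
print(f"state-aligned learner: same={good_same:.2f}, diff={good_diff:.2f}")
print(f"raw-distance learner:  same={raw_same:.2f}, diff={raw_diff:.2f}")
```

A learner whose bias matches the state always gives identical predictions to same-state inputs, while the raw-distance learner does not, which is the kind of pattern the probe is meant to surface.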

Newton's laws are a kind of foundation model. They provide a place to start when working on new problems.

A good foundation model should do the same.

The No Free Lunch Theorem motivates a test: Every foundation model has an inductive bias. This bias reveals its world model.

14.07.2025 13:49 — 👍 3 🔁 0 💬 1 📌 0

If you only care about orbits, Newton didn't add much. His laws give the same predictions.

But Newton's laws went beyond orbits: the same laws explain pendulums, cannonballs, and rockets.

This motivates our framework: Predictions apply to one task. World models generalize to many.

14.07.2025 13:49 — 👍 4 🔁 0 💬 1 📌 0

Perhaps the most influential world model had its start as a predictive model.

Before we had Newton's laws of gravity, we had Kepler's predictions of planetary orbits.

Kepler's predictions led to Newton's laws. So what did Newton add?

14.07.2025 13:49 — 👍 3 🔁 0 💬 1 📌 0

Our paper aims to answer two questions:

1. What's the difference between prediction and world models?

2. Are there straightforward metrics that can test this distinction?

Our paper is about AI. But it's helpful to go back 400 years to answer these questions.

14.07.2025 13:49 — 👍 5 🔁 0 💬 1 📌 0

Can an AI model predict perfectly and still have a terrible world model?

What would that even mean?

Our new ICML paper (poster tomorrow!) formalizes these questions.

One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

14.07.2025 13:49 — 👍 41 🔁 14 💬 2 📌 6

Keyon Vafa: Predicting Workers’ Career Trajectories to Better Understand Labor Markets

If we know someone’s career history, how well can we predict which job they’ll have next?

If we know someone’s career history, how well can we predict which jobs they’ll have next? Read our profile of @keyonv.bsky.social to learn how ML models can be used to predict workers’ career trajectories & better understand labor markets.

medium.com/@gsb_silab/k...

30.06.2025 15:39 — 👍 9 🔁 2 💬 1 📌 0

Foundation models make great predictions. How should we use them for estimation problems in social science?

New PNAS paper @susanathey.bsky.social & @keyonv.bsky.social & @Blei Lab:

Bad news: Good predictions ≠ good estimates.

Good news: Good estimates possible by fine-tuning models differently 🧵

30.06.2025 12:15 — 👍 7 🔁 3 💬 1 📌 1

*Please repost* @sjgreenwood.bsky.social and I just launched a new personalized feed (*please pin*) that we hope will become a "must use" for #academicsky. The feed shows posts about papers filtered by *your* follower network. It's become my default Bluesky experience bsky.app/profile/pape...

10.03.2025 18:14 — 👍 521 🔁 296 💬 23 📌 83

Banner for CHI 2025 workshop with text: "Speech AI for All: Promoting Accessibility, Fairness, Inclusivity, and Equity"

📢Announcing 1-day CHI 2025 workshop: Speech AI for All! We’ll discuss challenges & impacts of inclusive speech tech for people with speech diversities, connecting researchers, practitioners, policymakers, & community members. 🎉Apply to join us: speechai4all.org

16.12.2024 19:45 — 👍 21 🔁 4 💬 2 📌 1

Thank you Alex!

16.12.2024 16:48 — 👍 1 🔁 0 💬 0 📌 0

Behavioral ML

Date: December 14, 2024 (at NeurIPS in Vancouver, Canada)

Location: MTG 19&20

Our Saturday workshop is focused on incorporating insights from the behavioral sciences into AI models/systems.

Speakers and schedule: behavioralml.org

Location: MTG 19&20 at 8:45am

12.12.2024 18:59 — 👍 1 🔁 0 💬 0 📌 0

AP at Collegio Carlo Alberto (since Sept. 2023) | PhD from PSE, visited Brown Econ Dept | Econometrics & Labor Economics | https://yaganhazard.github.io/

Blog: https://argmin.substack.com/

Webpage: https://people.eecs.berkeley.edu/~brecht/

Assoc Prof Demography | Social Science Genetics | @CLScohorts/@UCL | Nuffield College | PI of FINDME | futureverse.com | Albstädter living in London

MIT postdoc, incoming UIUC CS prof

katedonahue.me

Asst Prof, Dartmouth CS. Human-AI Systems. Prev: PhD @ MIT, Allen Institute for AI, Netflix Research, Berklee College of Music.

https://nsingh1.host.dartmouth.edu

Associate Professor of Data Science and Informatics at the University of Oxford.

PhD student at Brown interested in deep learning + cog sci, but more interested in playing guitar.

Incoming Assistant Professor at UC Berkeley in CS and Computational Precision Health. Postdoc at Microsoft Research, PhD in CS at Stanford. Research in AI, graphs, public health, and computational social science.

https://serinachang5.github.io/

Assistant Professor @ UChicago CS & DSI UChicago

Leading Conceptualization Lab http://conceptualization.ai

Minting new vocabulary to conceptualize generative models.

Associate Professor, Yale Statistics & Data Science. Social networks, social and behavioral data, causal inference, mountains. https://jugander.github.io/

Assistant Professor at Stanford Statistics and Stanford Data Science | Previously postdoc at UW Institute for Protein Design and Columbia. PhD from MIT.

prev: @BrownUniversity, @uwcse/@uw_wail phd, ex-@cruise, RS @waymo. 0.1x engineer, 10x friend.

spondyloarthritis, cars ruin cities, open source

Econ prof at Oxford.

Machine learning, politics, econometrics, inequality, random reading recs.

maxkasy.github.io/home/

Psychology & behavior genetics. Author of THE GENETIC LOTTERY (2021) and ORIGINAL SIN (coming 3.3.2026). Speaking as an individual

senior principal researcher at msr nyc, adjunct professor at columbia

Econ | Cogsci | AI

Psychology & Economic Theory Postdoc @ Harvard Economics / HBS

http://www.zachary-wojtowicz.com

Asst. Prof. of Finance @ UCLA Anderson || AI, Urban, Real Estate, Corporate Finance || 🇩🇪 he, his || Previously: HBS, BCG, Princeton

https://sites.google.com/view/gregorschubert

Assistant Professor at MIT. Economics + AI.

Visiting Stanford 2025-2026 academic year.

https://economics.mit.edu/people/faculty/ashesh-rambachan

Economics Professor at Brown, studying discrimination, education, healthcare, and applied econometrics. I like IV

https://sites.google.com/site/aboutpeterhull/home