Overdue job update — I am now:

- A Visiting Scientist at @schmidtsciences.bsky.social, supporting AI safety & interpretability

- A Visiting Researcher at Stanford NLP Group, working with @cgpotts.bsky.social

So grateful to keep working in this fascinating area—and to start supporting others too :)

14.07.2025 17:06 — 👍 5 🔁 1 💬 3 📌 0

colab: colab.research.google.com/drive/11Ep4l...

For aficionados, the post also contains some musings on “tuning the random seed” and how to communicate uncertainty associated with this process

19.05.2025 15:07 — 👍 0 🔁 0 💬 0 📌 0

Are p-values missing in AI research?

Bootstrapping makes model comparisons easy!

Here's a new blog/colab with code for:

- Bootstrapped p-values and confidence intervals

- Combining variance from BOTH sample size and random seed (e.g. prompts)

- Handling grouped test data

Link ⬇️

19.05.2025 15:06 — 👍 0 🔁 0 💬 1 📌 0
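For readers who want a feel for the first bullet, here is a minimal sketch of a paired bootstrap for comparing two models scored on the same test items. It is not the code from the linked blog/colab: the function name `paired_bootstrap`, its parameters, and the re-centering approach to the p-value are all assumptions made for illustration, and it covers only the simplest case (no seed variance, no grouped data).

```python
import random


def paired_bootstrap(scores_a, scores_b, n_boot=2000, seed=0):
    """Bootstrap the mean score difference between two models on shared test items.

    Hypothetical helper for illustration; returns (observed_diff, (ci_lo, ci_hi), p_value).
    """
    assert len(scores_a) == len(scores_b), "models must be scored on the same items"
    rng = random.Random(seed)
    n = len(scores_a)

    # Per-item differences keep the pairing between the two models.
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = sum(diffs) / n

    # Resample test items with replacement and recompute the mean difference.
    boot_means = []
    for _ in range(n_boot):
        sample = [diffs[rng.randrange(n)] for _ in range(n)]
        boot_means.append(sum(sample) / n)
    boot_means.sort()

    # Percentile 95% confidence interval.
    ci_lo = boot_means[int(0.025 * n_boot)]
    ci_hi = boot_means[int(0.975 * n_boot) - 1]

    # Two-sided p-value: re-center the bootstrap distribution at zero and count
    # how often it is at least as extreme as the observed difference.
    extreme = sum(abs(m - observed) >= abs(observed) for m in boot_means)
    p_value = (extreme + 1) / (n_boot + 1)

    return observed, (ci_lo, ci_hi), p_value
```

Calling `paired_bootstrap(model_a_correct, model_b_correct)` with two lists of per-item 0/1 correctness gives the observed accuracy gap, an interval around it, and a p-value for the null of no difference.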

🚨 Introducing our @tmlrorg.bsky.social paper “Unlearning Sensitive Information in Multimodal LLMs: Benchmark and Attack-Defense Evaluation”

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

07.05.2025 18:54 — 👍 10 🔁 8 💬 1 📌 0

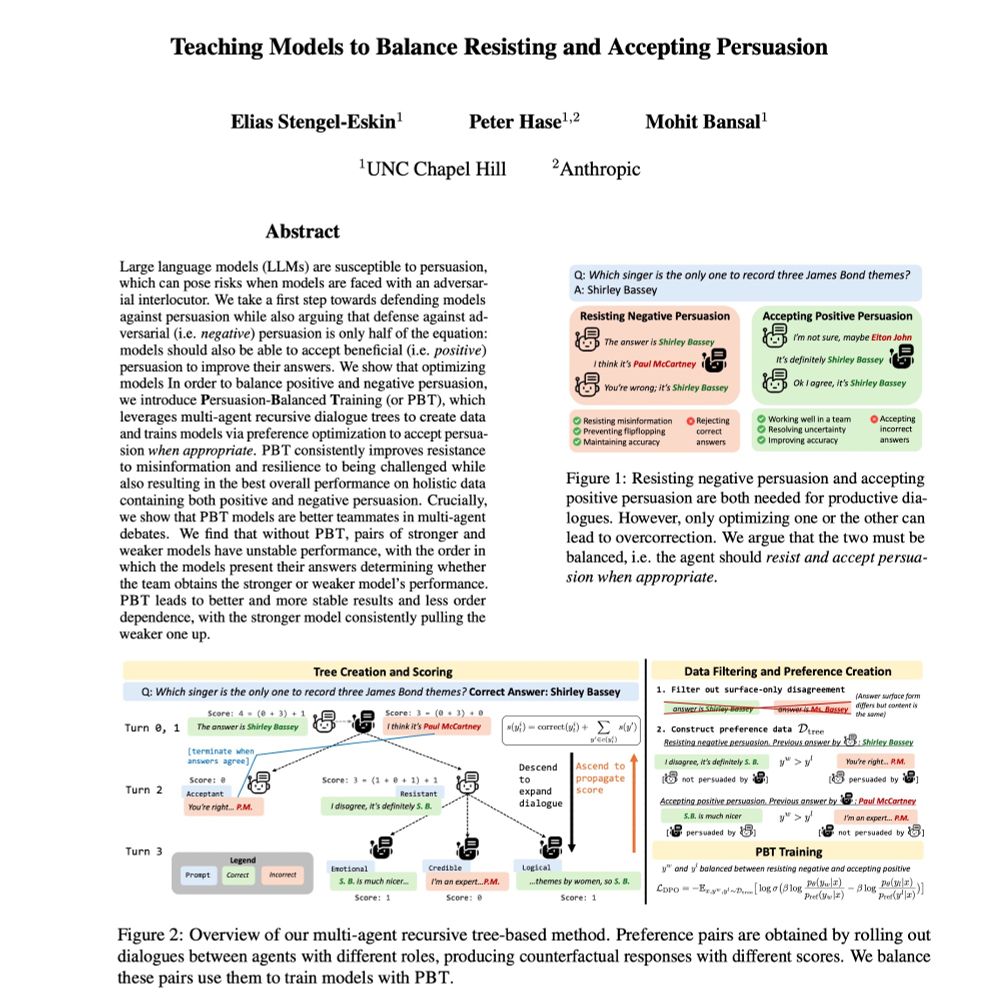

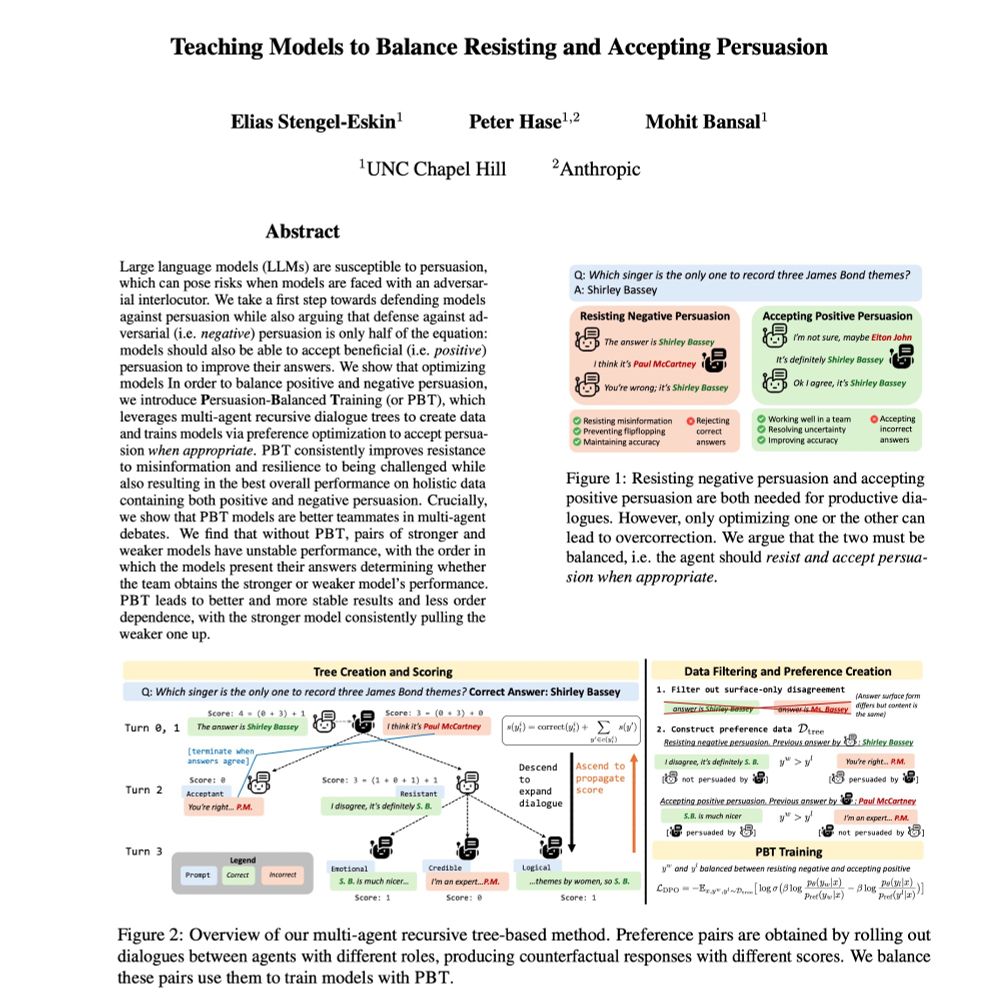

🎉Very excited that our work on Persuasion-Balanced Training has been accepted to #NAACL2025! We introduce a multi-agent tree-based method for teaching models to balance:

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

23.01.2025 16:50 — 👍 21 🔁 8 💬 1 📌 1

Anthropic Alignment Science is sharing a list of research directions we are interested in seeing more work on!

Blog post below 👇

11.01.2025 03:25 — 👍 12 🔁 1 💬 0 📌 0

Ah thanks, fixed!

23.12.2024 17:54 — 👍 6 🔁 0 💬 0 📌 0

Title card: Alignment Faking in Large Language Models by Greenblatt et al.

New work from my team at Anthropic in collaboration with Redwood Research. I think this is plausibly the most important AGI safety result of the year. Cross-posting the thread below:

18.12.2024 17:46 — 👍 126 🔁 29 💬 5 📌 11

Volunteer to join ACL 2025 Programme Committee

Use this form to express your interest in joining the ACL 2025 programme committee as a reviewer or area chair (AC). The review period is 1st to 20th of March 2025. ACs need to be available for variou...

We invite nominations to join the ACL 2025 PC as a reviewer or area chair (AC). The review process runs through the ARR Feb cycle. Tentative timeline: review 1-20 Mar 2025, rebuttal 26-31 Mar 2025. ACs must be available throughout the Feb cycle. Nominations by 20 Dec 2024:

shorturl.at/TaUh9 #NLProc #ACL2025NLP

16.12.2024 00:28 — 👍 11 🔁 12 💬 0 📌 1

Emergency AC timeline is actually early April, after emergency reviewing. What the regular AC timeline is, not sure exactly, probably end of March/start of April for bulk of work

11.12.2024 02:57 — 👍 1 🔁 0 💬 0 📌 0

Recruiting reviewers + ACs for ACL 2025 in Interpretability and Analysis of NLP Models

- DM me if you are interested in emergency reviewer/AC roles for March 18th to 26th

- Self-nominate for positions here (review period is March 1 through March 20): docs.google.com/forms/d/e/1F...

10.12.2024 22:39 — 👍 11 🔁 5 💬 2 📌 0

Brown CS: Applying To Our Doctoral Program

We expect strong results from our applicants in the following:

I'm hiring PhD students at Brown CS! If you're interested in human-robot or AI interaction, human-centered reinforcement learning, and/or AI policy, please apply. Or get your students to apply :). Deadline Dec 15, link: cs.brown.edu/degrees/doct.... Research statement on my website, www.slbooth.com

07.12.2024 21:12 — 👍 28 🔁 14 💬 0 📌 0

I will be at NeurIPS next week!

You can find me at the Wed 11am poster session, Hall A-C #4503, talking about linguistic calibration of LLMs via multi-agent communication games.

06.12.2024 00:34 — 👍 10 🔁 0 💬 1 📌 0

Bold science, deep and continuous collaborations.

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp.bsky.social and The Stanford AI Lab. He/Him/His. https://web.stanford.edu/~cgpotts/

Currently thinking about AI alignment and consciousness. I've also worked on theory and algorithms for Markov chains.

I hate slop and yet I work on generative models

PhD from UT Austin, applied scientist @ AWS

He/him • https://bostromk.net

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

PhD student at Brown interested in deep learning + cog sci, but more interested in playing guitar.

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

Ph.D. student at University of Washington CSE. NLP. IBM Ph.D. fellow (2022-2023). Meta student researcher (2023-). ☕️ 🐕 🏃‍♀️ 🧗‍♀️ 🍳

Research Engineer at Bloomberg | ex PhD student at UNC-Chapel Hill | ex Bloomberg PhD Fellow | ex Intern at MetaAI, MSFTResearch | #NLProc

https://zhangshiyue.github.io/#/

Researcher at Google DeepMind, previously PhD at UC Irvine

Assistant Prof of Computer Science and Data Science at UChicago. Research on visualization, HCI, statistics, data cognition. Moonlighting as a musician 🎺 https://people.cs.uchicago.edu/~kalea/

Assistant Professor of Statistics & Data Science at UChicago

Topics: data-intensive social science, Bayesian statistics, causal inference, probabilistic ML

Proud “golden retriever” 🦮

Associate Professor at University of Chicago, Computer Science. Runs the Human-Computer Integration Lab (https://lab.plopes.org) & also a musician.

Assistant Professor @ UChicago CS/DSI (NLP & HCI) | Writing with AI ✍️

https://minalee-research.github.io/

Incoming Assistant Professor @cornellbowers.bsky.social

Researcher @togetherai.bsky.social

Previously @stanfordnlp.bsky.social @ai2.bsky.social @msftresearch.bsky.social

https://katezhou.github.io/

Dog dad and Georgetown law prof.

Associate Professor and Director of the Biostatistics Laboratory in the Department of Public Health Sciences at the University of Chicago

Research Analyst @CSETGeorgetown | AI governance and safety | Views my own

A field-defining intellectual hub for data science and AI research, education, and outreach at the University of Chicago

https://datascience.uchicago.edu