Exploring AI legislation in Congress with AGORA: Risks, Harms, and Governance Strategies – Emerging Technology Observatory

Using AGORA to explore AI legislation enacted by U.S. Congress since 2020

In other words, Congress is still in the early days of governing AI but so far seems more focused on understanding and harnessing AI’s potential than addressing its downsides. Make sure to take a deeper dive into our analysis here 🧵6/6 eto.tech/blog/ai-laws...

29.07.2025 18:15

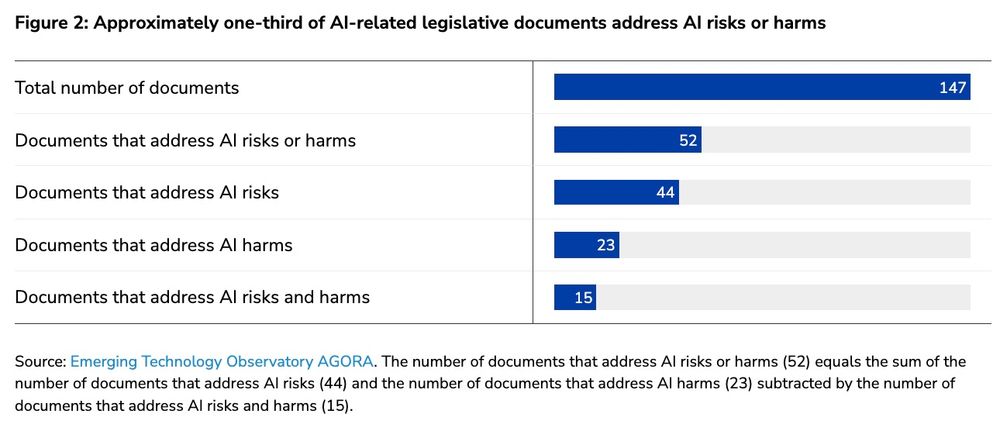

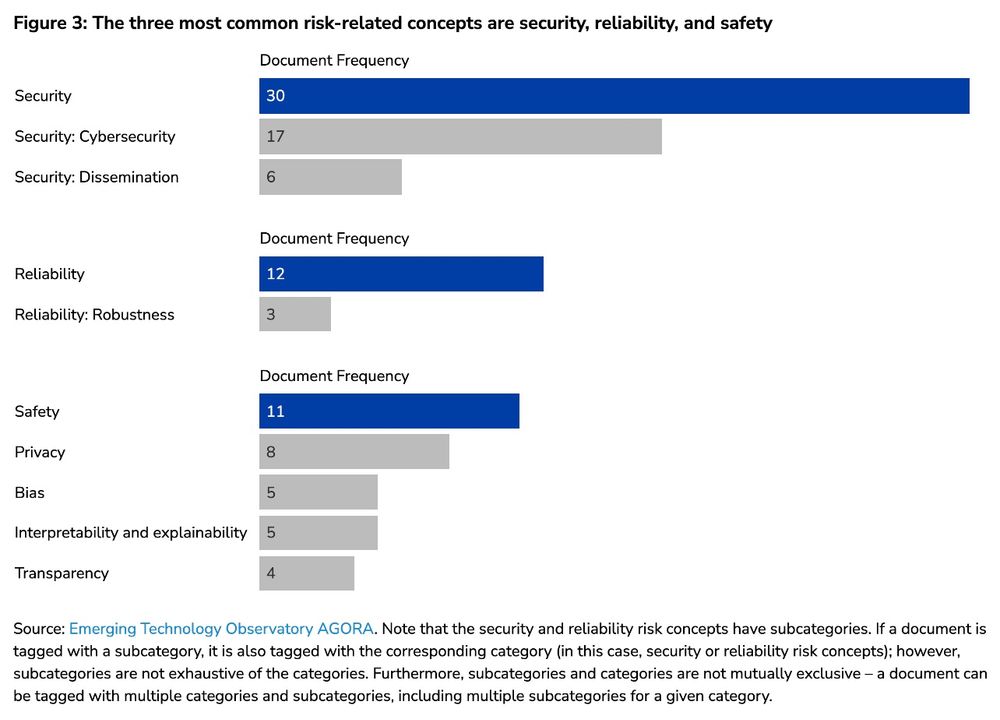

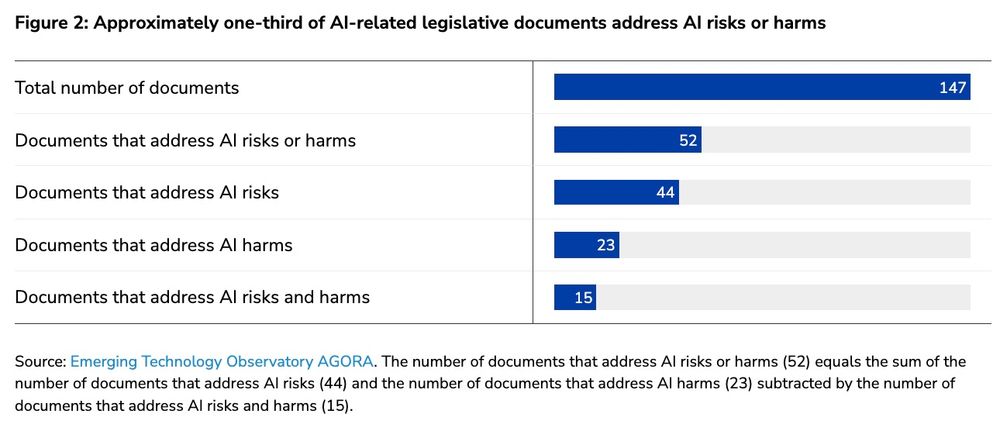

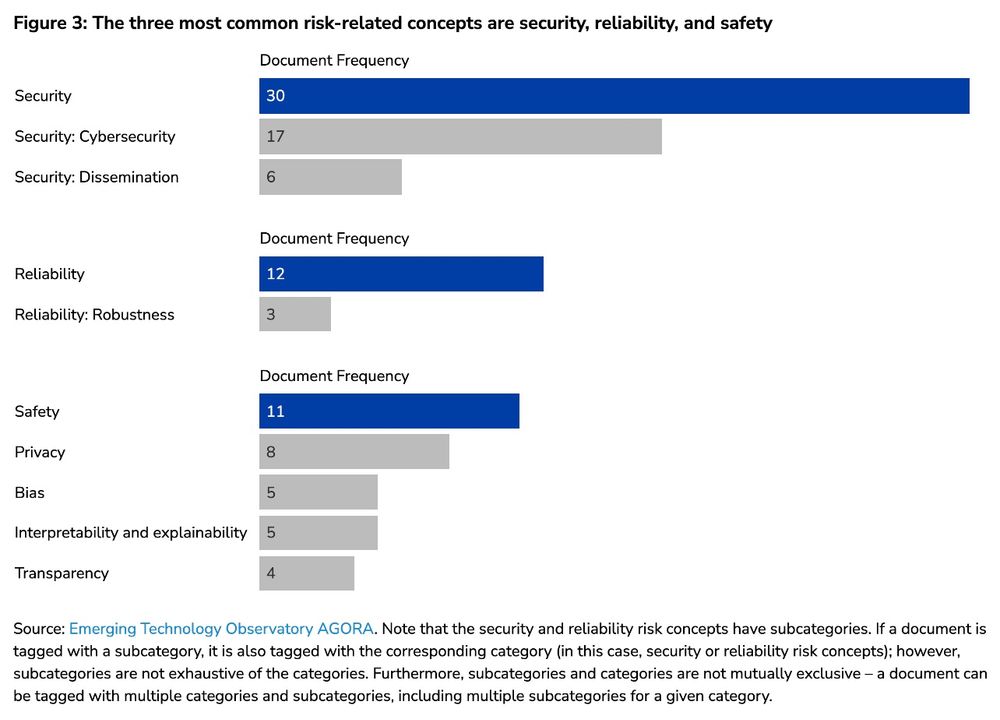

Fewer legislative docs directly tackle risks or undesirable consequences from AI (such as harm to infrastructure) than propose strategies such as government support, convening, or institution-building 🧵5/6

29.07.2025 18:15

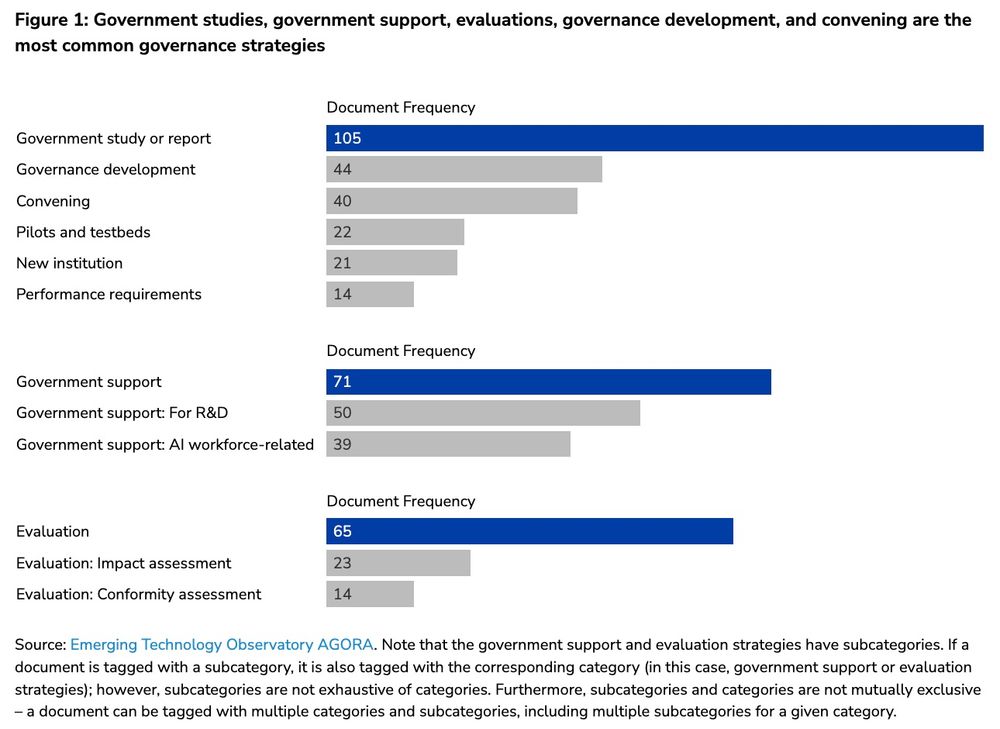

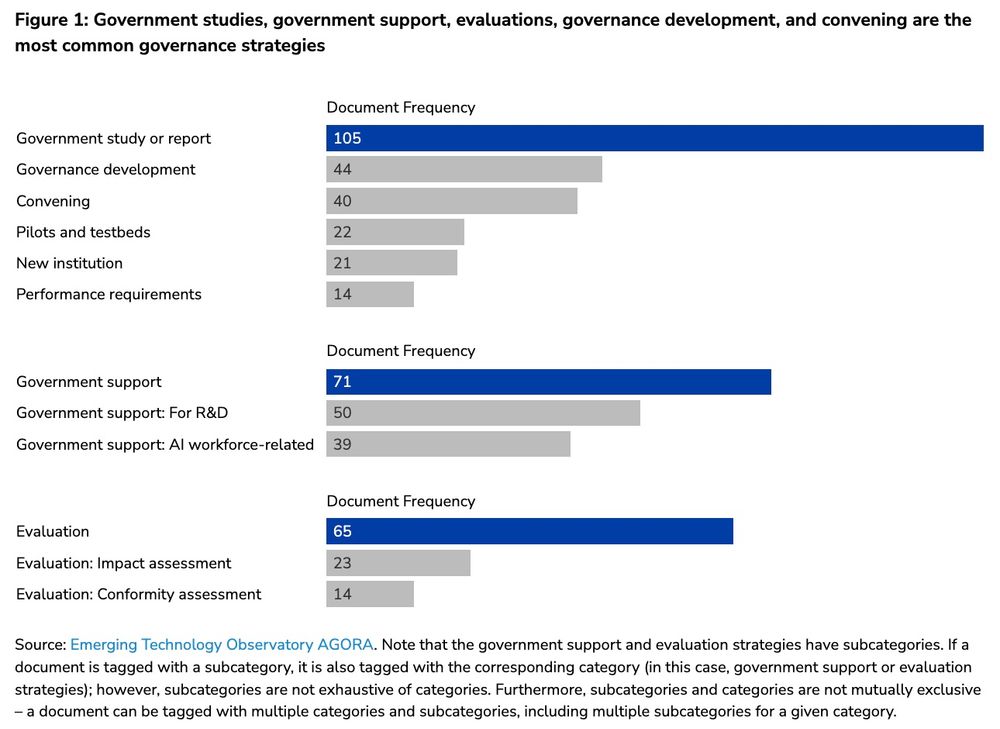

Very few enactments leverage performance requirements, pilots, new institutions, or other governance strategies that place concrete requirements on AI systems or represent investments in maturing or scaling up AI capabilities 🧵4/6

29.07.2025 18:15

Most of Congress’s 147 enactments focus on commissioning studies of AI systems, assessing their impacts, providing support for AI-related activities, convening stakeholders, & developing additional AI-related governance docs 🧵3/6

29.07.2025 18:15

We find that Congress has enacted many AI-related laws & provisions that are focused more on laying the groundwork to harness AI’s potential – often in nat'l sec contexts – than placing concrete demands on AI or directly tackling its specific, undesirable consequences 🧵2/6

29.07.2025 18:15

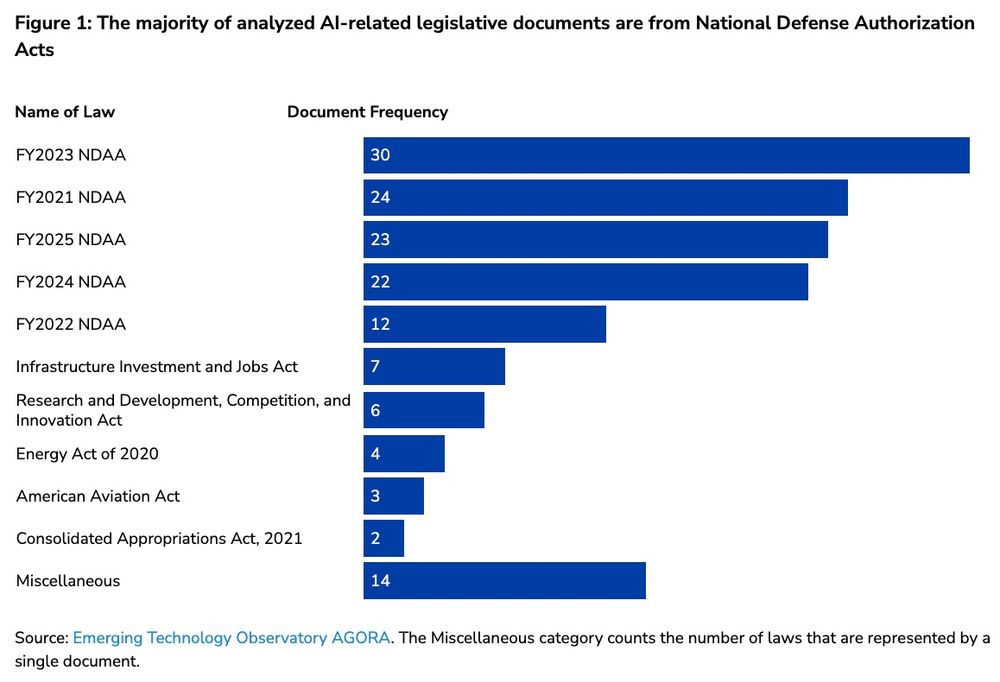

Check out the second @csetgeorgetown.bsky.social @emergingtechobs.bsky.social blog from @sonali-sr.bsky.social and me, where we explore the strategies, risks, and harms addressed by AI-related laws enacted by Congress between Jan 2020 and March 2025 🧵1/6 eto.tech/blog/ai-laws...

29.07.2025 18:15

Yesterday's new AI Action Plan has a lot worth discussing!

One interesting aspect is its statement that the federal government should withhold AI-related funding from states with "burdensome AI regulations."

This could be cause for concern.

24.07.2025 18:55

Stay tuned for the second blog, which examines the governance strategies, risk-related concepts, and harms covered by this legislation! 🧵3/3

23.07.2025 13:39

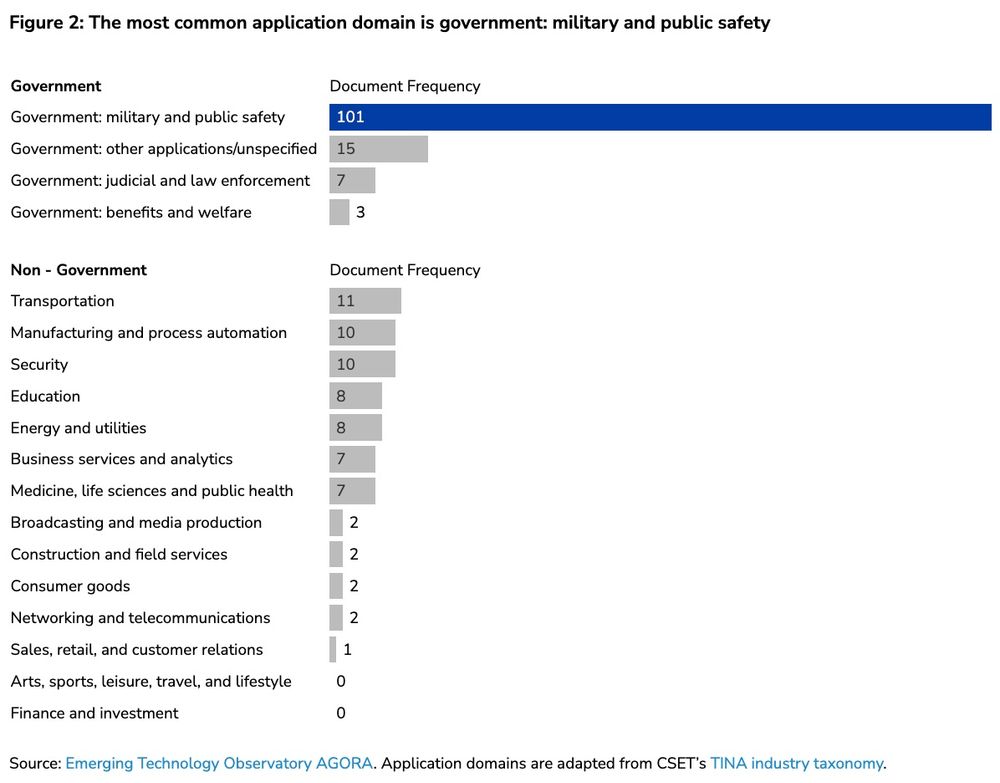

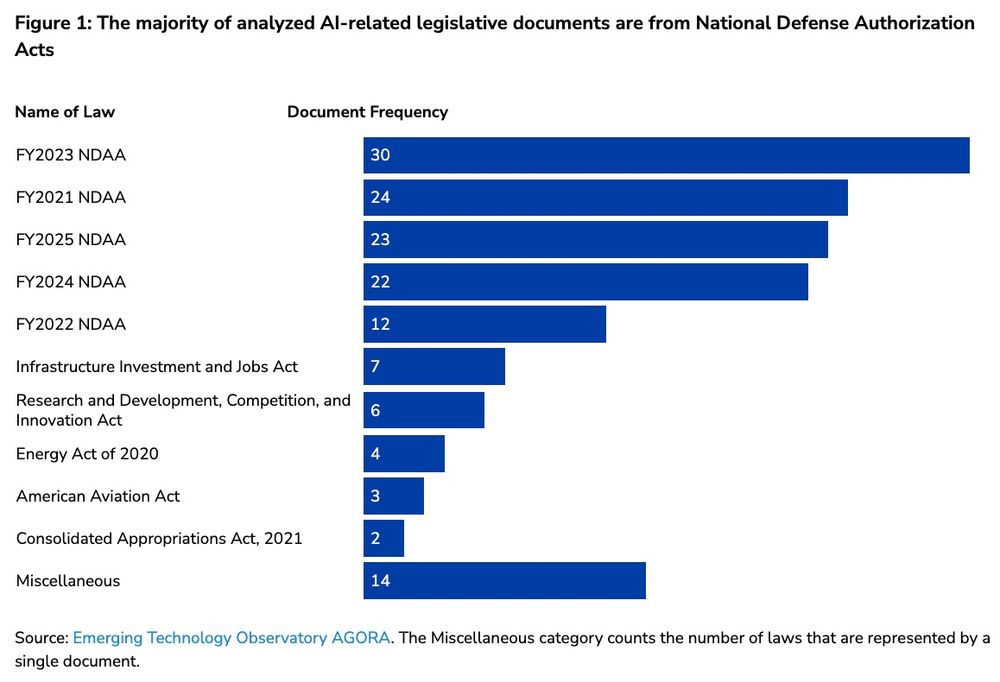

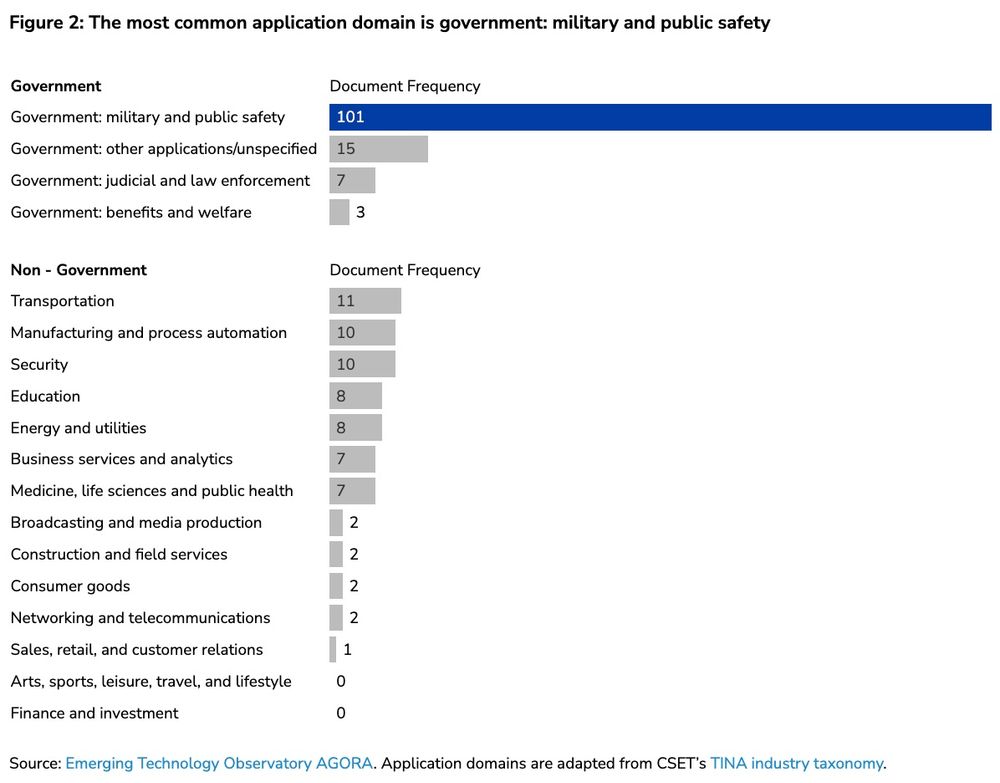

We find that, contrary to conventional wisdom, Congress has enacted many AI-related laws and provisions — most of which apply to military and public safety contexts 🧵2/3

23.07.2025 13:39

Check out the first blog in a 2-part series from @sonali-sr.bsky.social and me, where we use data from @csetgeorgetown.bsky.social @emergingtechobs.bsky.social AGORA to explore ✨AI-related legislation that was enacted by Congress between January 2020 and March 2025✨

eto.tech/blog/ai-laws... 🧵1/3

23.07.2025 13:39

Check out the latest AGORA roundup from @emergingtechobs.bsky.social, which highlights some overlooked AI provisions in the Big Beautiful Bill!

02.07.2025 19:52

The 10 yr moratorium on state AI laws will hurt U.S. nat'l security & innovation if enacted. In our piece in @thehill.com, @jessicaji.bsky.social, @vikramvenkatram.bsky.social, & I argue that states support the very infrastructure needed for a vibrant U.S. AI ecosystem

thehill.com/opinion/tech...

19.06.2025 22:33

Banning state-level AI regulation is a bad idea!

One crucial reason is that states play a critical role in building AI governance infrastructure.

Check out this new op-ed by @jessicaji.bsky.social, myself, and @minanrn.bsky.social on this topic!

thehill.com/opinion/tech...

18.06.2025 18:52

Amidst all the discussion about AI safety, how exactly do we figure out whether a model is safe?

There's no perfect method, but safety evaluations are the best tool we have.

That said, different evals answer different questions about a model!

28.05.2025 14:31

AI Action Plan Database

A database of recommendations for OSTP's AI Action Plan.

@ifp.bsky.social recently published a searchable database of all AI Action Plan submissions, many of which cover topics that overlap with CSET's submission! Check out CSET's recs here: cset.georgetown.edu/publication/... and compare it to others here: www.aiactionplan.org

19.05.2025 17:14

Trump Should Not Abandon March-In Rights

Moving forward with the Biden administration’s guidance could deliver lower drug prices and allow more Americans to reap the benefits of public science. In late 2023, the federal government published ...

Have you heard of the Bayh-Dole Act? It's niche, but an incredibly important factor in the U.S. innovation ecosystem!

For the National Interest, @jack-corrigan.bsky.social and I discuss a potential change that could benefit public access to medical drugs.

nationalinterest.org/blog/techlan...

28.04.2025 18:08

What does the EU's shifting strategy mean for AI?

CSET's @miahoffmann.bsky.social & @ojdaniels.bsky.social have a new piece out for @techpolicypress.bsky.social.

Read it now 👇

10.03.2025 14:17

Check out our paper on the quality of interpretability evaluations of recommender systems:

cset.georgetown.edu/publication/...

Led by @minanrn.bsky.social and Christian Schoeberl!

@csetgeorgetown.bsky.social

19.02.2025 20:45

[6/6] Our findings suggest the importance of standards for AI evaluations and a capable workforce to assess the efficacy of these evaluations. If researchers understand & measure facets of AI trustworthiness differently, policies for building trusted AI systems may not work

20.02.2025 19:52

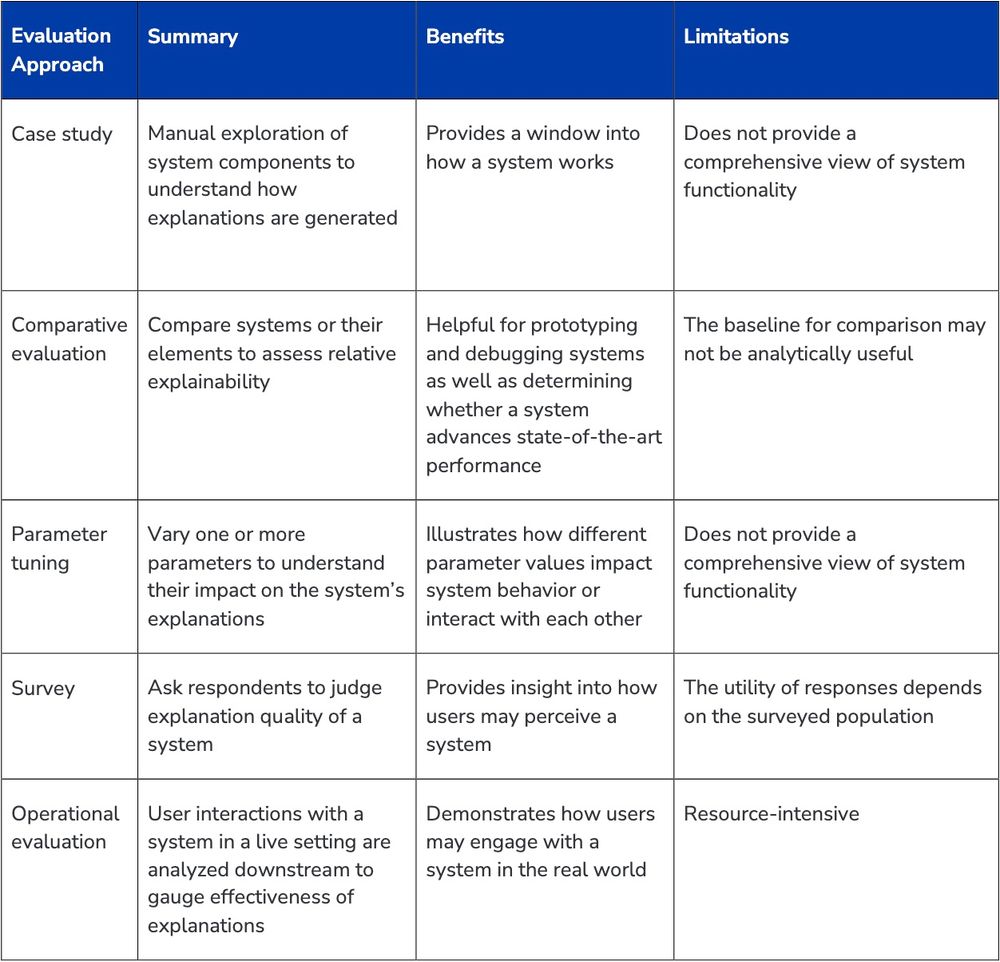

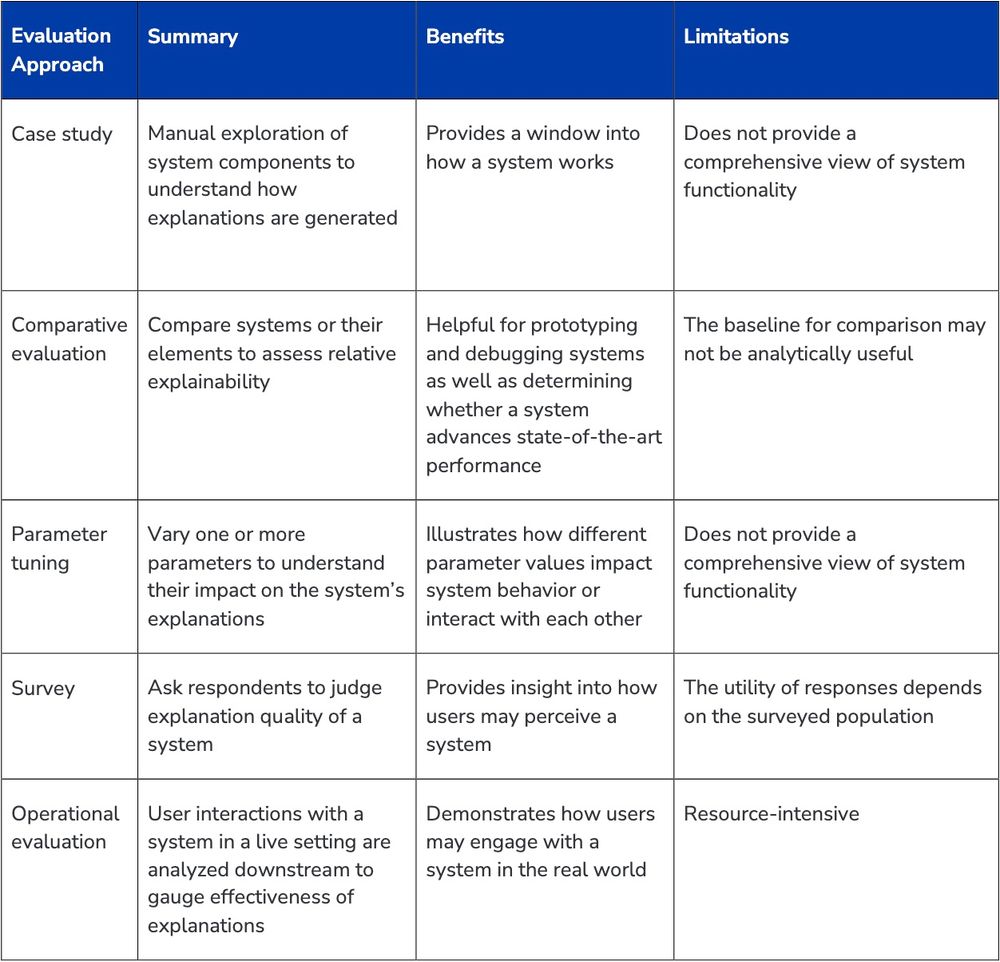

[5/6] Here are the evaluation approaches we identified:

20.02.2025 19:52

[4/6] We find that research papers (1) do not clearly differentiate explainability from interpretability, (2) contain combinations of five evaluation approaches, & (3) more often test if systems are built according to design criteria than if systems work in the real world

20.02.2025 19:52

[3/6] Our new report examines how researchers evaluate claims about the explainability & interpretability of recommender systems – a type of AI system that often uses explanations

20.02.2025 19:52

[2/6] Building trust in AI & understanding why & how AI systems work are key to adopting and building better models

20.02.2025 19:52
