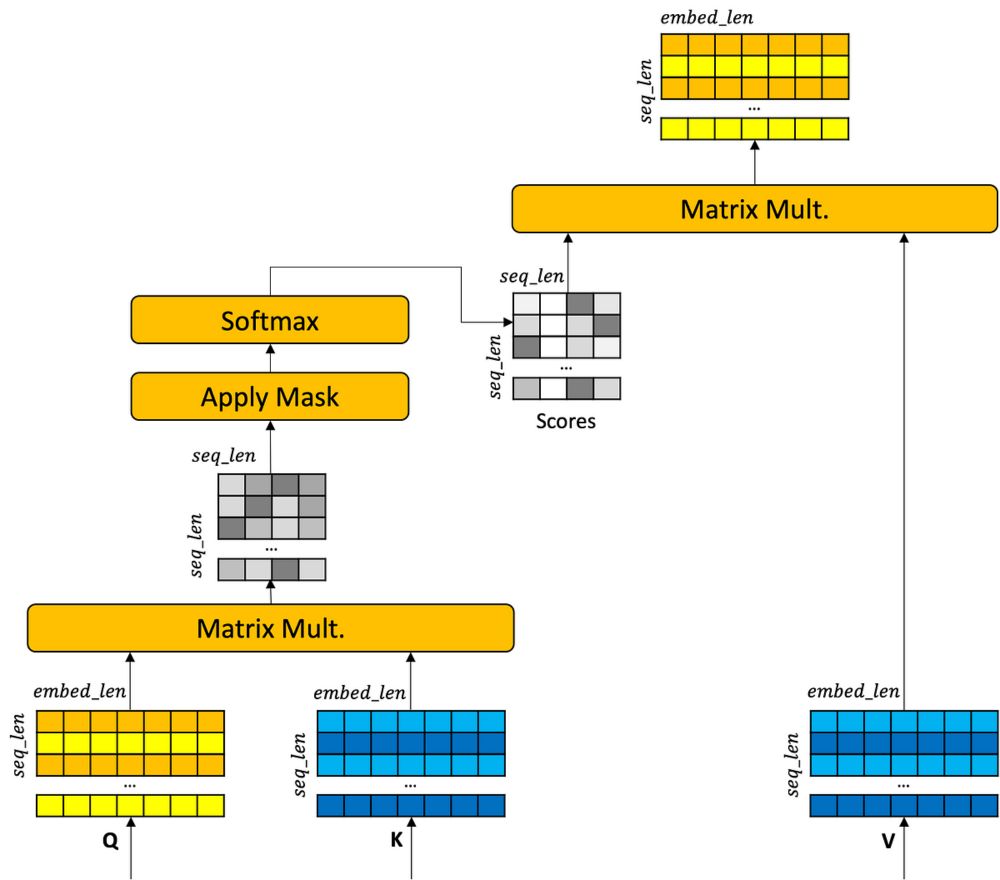

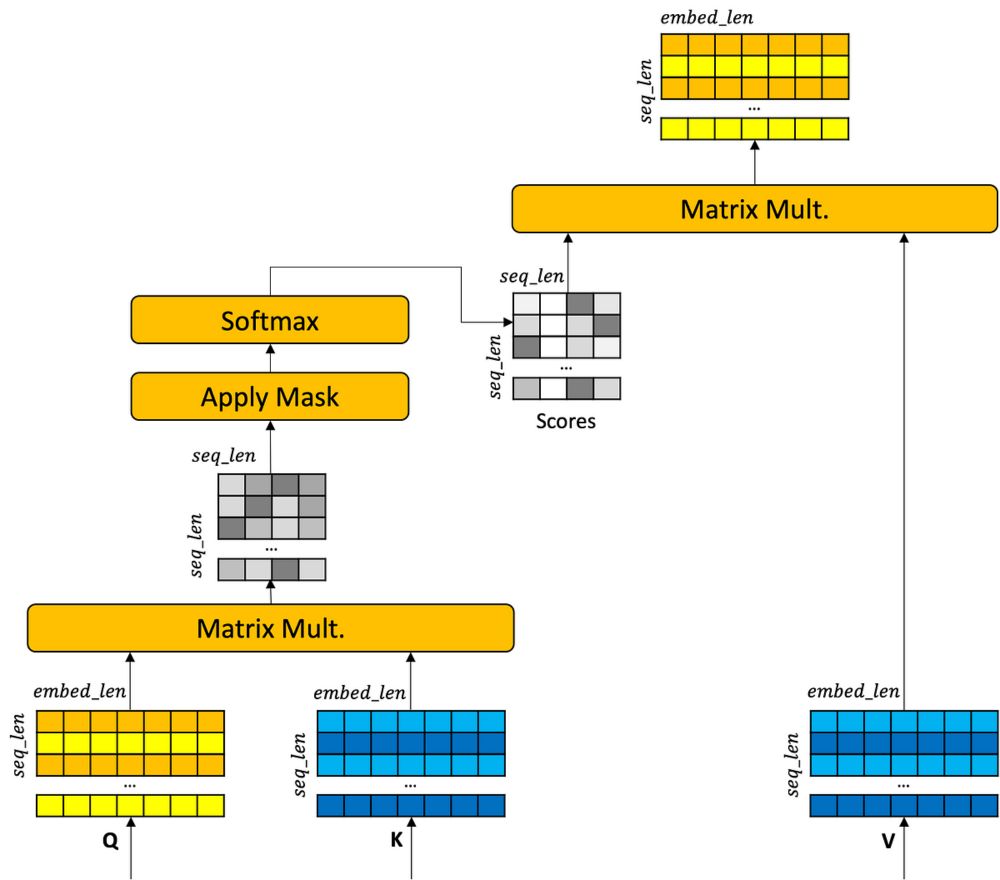

Transformers: Origins

An unofficial origin story of the transformer neural network architecture.

I have converted a portion of my NLP Online Master's course into blog form. It follows the progression I present in the course, from recurrent neural networks to seq2seq with attention to transformers. mark-riedl.medium.com/transformers...

26.11.2024 02:15

ALTA: Compiler-Based Analysis of Transformers

We propose a new programming language called ALTA and a compiler that can map ALTA programs to Transformer weights. ALTA is inspired by RASP, a language proposed by Weiss et al. (2021), and Tracr (Lin...

I’m pretty excited about this one!

ALTA is A Language for Transformer Analysis.

Because ALTA programs can be compiled to transformer weights, ALTA provides constructive proofs of transformer expressivity. It also offers new analytic tools for *learnability*.

arxiv.org/abs/2410.18077

24.10.2024 03:31

A tweet from Tim van der Zee, from August 10, 2017, that reads: "Academia is a bunch of people emailing 'sorry for the late response' back and forth until one of them gets tenure."

This was seven years ago. I think about this often.

14.11.2024 06:58

The AI Interdisciplinary Institute at the University of Maryland (AIM) is hiring 40 new faculty members in all areas of AI, particularly:

- accessibility,

- sustainability,

- social justice, and

- learning;

building on computational, humanistic, or social scientific approaches to AI.

13.11.2024 12:37

Humanities and AI Virtual Institute - Schmidt Sciences

Schmidt Sciences is outlining the timeline for a new program to support research at the intersection of artificial intelligence and the humanities. Open call for proposals to come Dec 15. www.schmidtsciences.org/humanities-a...

13.11.2024 18:00

This one is a study by independent researcher Nick Ryan that applies voting-based evaluation to model comparisons on the LMSYS Chatbot Arena leaderboard. Simulations show that two Condorcet-consistent methods (Copeland and Ranked Pairs) can be robust to uncertain or noisy evals.

nickcdryan.com/2024/09/06/u...

13.11.2024 10:22
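To make the Copeland idea concrete, here is a minimal Python sketch. It is not Nick Ryan's code: it assumes the votes arrive as hypothetical `(winner, loser)` pairs from pairwise battles, with ties dropped. A model earns one point for each opponent it beats by head-to-head majority and half a point for each drawn matchup; Ranked Pairs is not shown.

```python
from collections import Counter
from itertools import combinations


def copeland(battles):
    """Copeland scores from pairwise votes.

    battles: iterable of (winner, loser) pairs (hypothetical input format).
    Returns {model: score}, where a model gets 1 point per head-to-head
    majority win and 0.5 per tied head-to-head record.
    """
    wins = Counter(battles)  # wins[(a, b)] = number of times a beat b
    models = sorted({m for pair in wins for m in pair})
    score = {m: 0.0 for m in models}
    for a, b in combinations(models, 2):
        ab, ba = wins[(a, b)], wins[(b, a)]
        if ab == 0 and ba == 0:
            continue  # never compared: no head-to-head result
        if ab > ba:
            score[a] += 1
        elif ba > ab:
            score[b] += 1
        else:
            score[a] += 0.5
            score[b] += 0.5
    return score


if __name__ == "__main__":
    battles = [("A", "B"), ("A", "B"), ("B", "A"), ("A", "C"),
               ("B", "C"), ("C", "B"), ("B", "C")]
    print(copeland(battles))  # {'A': 2.0, 'B': 1.0, 'C': 0.0}
```

Because only the direction of each head-to-head majority counts, a few noisy votes change a score only when they flip a majority. A model that beats every other model head-to-head (a Condorcet winner) always gets the top score. In a three-way cycle such as `[("A", "B"), ("B", "C"), ("C", "A")]`, every model scores 1.0.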

Honestly very disappointed since joining Bluesky, this is not the weather app I was hoping for

13.11.2024 21:34

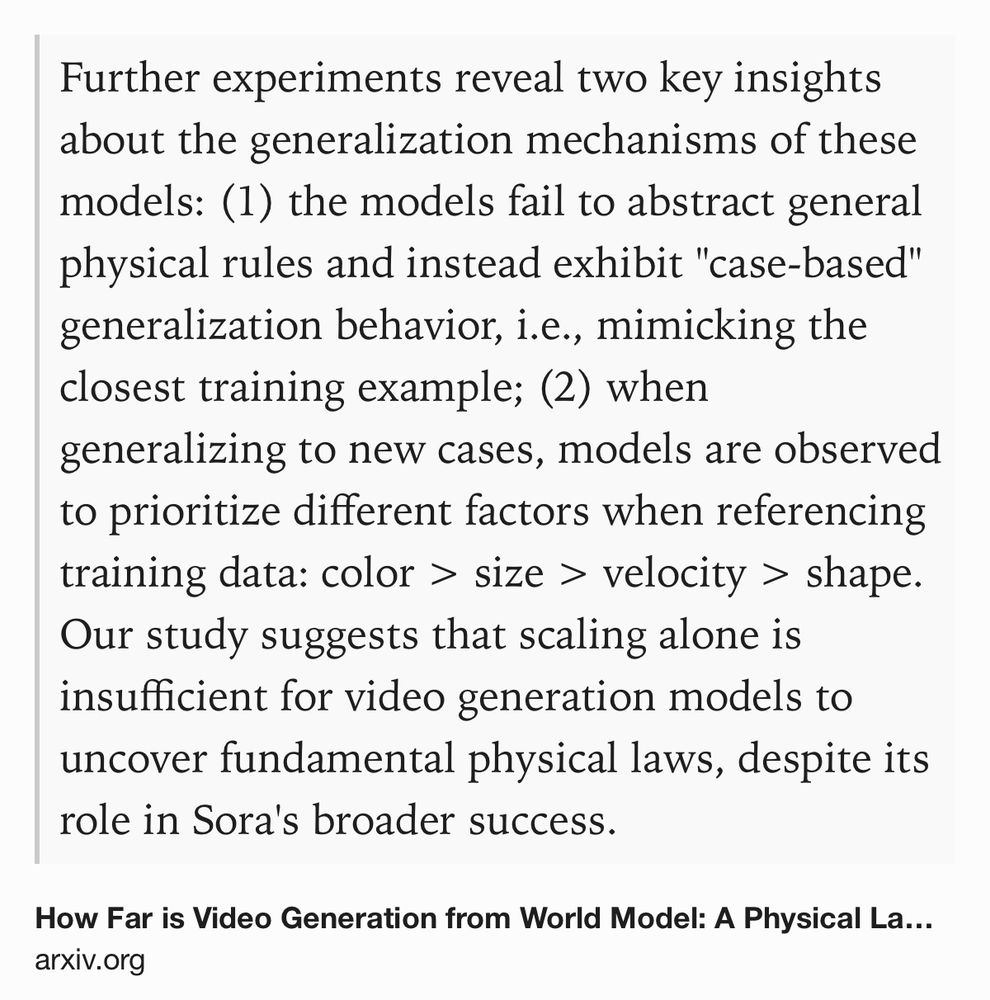

Text Shot: Further experiments reveal two key insights about the generalization mechanisms of these models: (1) the models fail to abstract general physical rules and instead exhibit "case-based" generalization behavior, i.e., mimicking the closest training example; (2) when generalizing to new cases, models are observed to prioritize different factors when referencing training data: color > size > velocity > shape. Our study suggests that scaling alone is insufficient for video generation models to uncover fundamental physical laws, despite its role in Sora's broader success.

How Far is Video Generation from World Model: A Physical Law Perspective https://arxiv.org/abs/2411.02385v1 #AI #video

10.11.2024 01:30

NSF COA | Jordan Matelsky

NSF makes you list everyone you have conflicts with (e.g., coauthors). We (really just Jordan Matelsky) just built you a tool for that. Literally one click: bib.experiments.kordinglab.com/nsf-coa

11.11.2024 20:11

New York Theory Day finally returns on December 6, 2024, after being put on hiatus during COVID.

Will be held at @nyutandon.bsky.social in Brooklyn. Registration is free!

Featuring stellar speakers Amir Abboud, Sanjeev Khanna, Rotem Oshman, and Ron Rothblum!

sites.google.com/view/nyctheo...

14.11.2024 01:06

Hello… world?

13.11.2024 20:14

San Diego, Dec 2-7, 2025, and Mexico City, Nov 30-Dec 5, 2025. Comments to this account are not monitored. Please send feedback to townhall@neurips.cc.

Postdoc in the System Neuroscience Group @University of Newcastle

Outdoorsy physicist who loves plant-based food and a liveable planet.

Abolish the value function!

AI researcher, Cooperative AI, AI for Social Good, Multi Agent Systems, Game Theory, Evolutionary Biology.

Opinions are my own.

Information and updates about RLC 2025 at the University of Alberta from Aug. 5th to 8th!

https://rl-conference.cc

Kansas State University. Neurosymbolic AI, Knowledge Graphs, Ontologies, Semantic Web. My opinions are my own. https://people.cs.ksu.edu/~hitzler/

Assistant Professor at UW and Staff Research Scientist at Google DeepMind. Social Reinforcement Learning in multi-agent and human-AI interactions. PhD from MIT. Check out https://socialrl.cs.washington.edu/ and https://natashajaques.ai/.

Scientist, Medical AI Researcher, Humanist

Founding Co-director of @UF_IC3 Center and director of @UFiHealLab

#AI4Health

Opinions my own.

She/her.

Searching for the numinous

Australian Canadian, currently living in the US

https://michaelnotebook.com

Robotics, AI, RL, Applied Impact Robotics, Nova Labs Metal Shop Steward, Software Engineer, Blacksmith, Maker, KZ4GD, old fart

Associate Professor @ Trinity College Dublin. Computer Science, AI, Reinforcement Learning, Intelligent Mobility

I can be described as a multi-agent artificial general intelligence.

OK, so some people pointed out that I am not in fact artificial, contradicting my bio. To them I would reply that I am likely also a cognitive gadget.

www.jzleibo.com

Assoc Prof @UWaterloo

Research:

#ArtificialIntelligence #MachineLearning #ReinforcementLearning

#causal-learning, dimensionality reduction, #ethicalAI #aimorality #aialignment

Domains: wildfires, driving, medical, lidar

Diversity is Strength.

VP Engineering at @bookcreator.com

Governor at Clevedon School

https://pho.tos.legge.tt/

NO KINGS. NO FASCISTS. FUND SCIENCE.

Professor of Computer Science @ BioFrontiers Institute at University of Colorado, Boulder and External Faculty @ Santa Fe Institute

orcid: https://orcid.org/0000-0002-3529-8746

Director, Stratification Economics at The Roosevelt Institute/Roosevelt Forward

@rooseveltinstitute.org

Sociologist, Dad, Autistic person, Bathos-Enjoyer, elitist jerk

Taxes are what we pay for civilized society

Opinions are my own

If a box on a curb with "Free Meyer Lemons" written on it were a guy