The top 3 requests?

🧠 "error" (35%)

✅ "test" (21%)

✨ "improve" (18%)

AI isn’t starting from a blank file.

It’s jumping into messy code and making sense of it.

The real value? Debugging, refining, and unblocking.

16.07.2025 16:29 — 👍 3 🔁 0 💬 0 📌 0

Most people think devs use AI to write code from scratch.

But we analyzed 81 million developer chats — and that’s not what’s happening.

Here's what we found 👇

16.07.2025 16:29 — 👍 2 🔁 2 💬 1 📌 0

Agent prompting = engineering communication.

Don’t think “prompt engineering.” Think “design doc + task breakdown + pair programming.”

Good prompts are good collaboration.

15.07.2025 16:28 — 👍 2 🔁 0 💬 0 📌 0

Ask for a plan before action.

“I need to expose time zone settings. First, suggest a plan—don’t write code yet.”

This gives you control. And gives the Agent a checkpoint to align.

15.07.2025 16:28 — 👍 1 🔁 0 💬 1 📌 0

Don’t cram it all in at once.

❌ “Read ticket, build UI, write tests, update docs”

✅ Break into steps:

- Read ticket

- Build UI

- Write tests

- Update docs

Let the Agent finish before moving on.

15.07.2025 16:28 — 👍 1 🔁 0 💬 1 📌 0

Point the Agent to the right files.

❌ “Add JSON parser to chat backend”

✅ “Add JSON parser in LLMOutputParsing (services/ folder). It’ll be used to extract structured output from chat completions.”

Precision = performance.

15.07.2025 16:28 — 👍 1 🔁 0 💬 1 📌 0

Give references to code, tests, or docs.

❌ “Write tests for ImageProcessor”

✅ “Write tests for ImageProcessor. Follow the structure in test_text_processor.py.”

The Agent learns better by example.

15.07.2025 16:28 — 👍 1 🔁 0 💬 1 📌 0

Include why, not just what.

❌ “Use events instead of direct method calls”

✅ “Reviewers flagged tight coupling in SettingsWebviewPanel.statusUpdate(). Let’s refactor to events to improve modularity.”

Reasoning aligns the Agent with your intent.

15.07.2025 16:28 — 👍 2 🔁 0 💬 1 📌 0

❌ “Fix bug in login handler”

✅ “Login fails with 500 on incorrect passwords. Repro: call /api/auth with wrong creds. Check auth_service.py. Add test if possible.”

Agents need context like humans do.
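For illustration, the "add test if possible" part of that prompt might come back as something like the sketch below. Everything here is hypothetical: the `authenticate` function and the `USERS` table stand in for whatever auth_service.py really contains, and 401 as the expected status is an assumption, since the post only says the current 500 is wrong.

```python
# Hypothetical stand-in for the logic in auth_service.py (not the real code).
USERS = {"alice": "correct-horse"}


def authenticate(username: str, password: str) -> int:
    """Return an HTTP-style status code for a login attempt."""
    stored = USERS.get(username)
    if stored is None or stored != password:
        # Assumption: bad credentials should be a client error, not a 500.
        return 401
    return 200


def test_wrong_password_returns_401():
    # Mirrors the repro in the prompt: valid user, wrong credentials.
    assert authenticate("alice", "wrong") == 401


def test_unknown_user_returns_401():
    # An unknown user should fail the same way instead of crashing.
    assert authenticate("mallory", "anything") == 401
```

The repro steps in the prompt map directly onto the test bodies, which is part of why a specific bug report tends to produce a specific fix.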

15.07.2025 16:28 — 👍 2 🔁 0 💬 1 📌 0

Most Agent failures aren’t about bad models.

They’re about bad prompts.

Here’s how to write prompts that actually work—based on thousands of real dev-Agent interactions 👇🧵

15.07.2025 16:28 — 👍 2 🔁 0 💬 1 📌 0

Thanks for the feedback, Michael - agree we were overzealous here. We'll go ahead and delete all your info so you don't hear from us again.

11.07.2025 04:12 — 👍 0 🔁 0 💬 0 📌 0

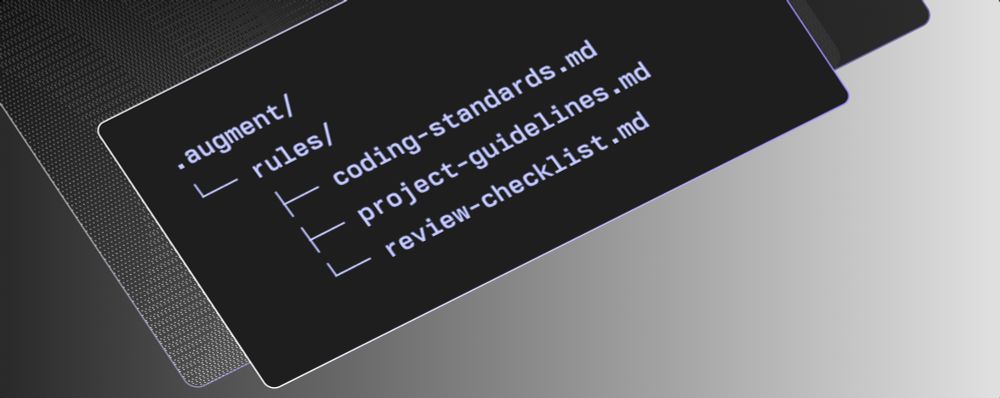

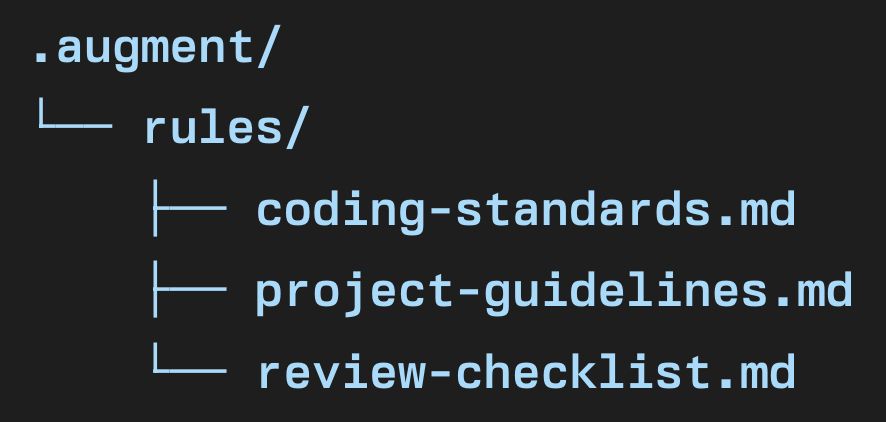

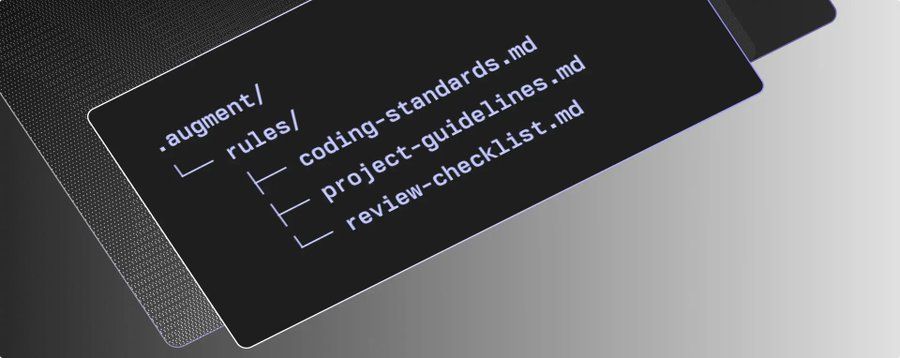

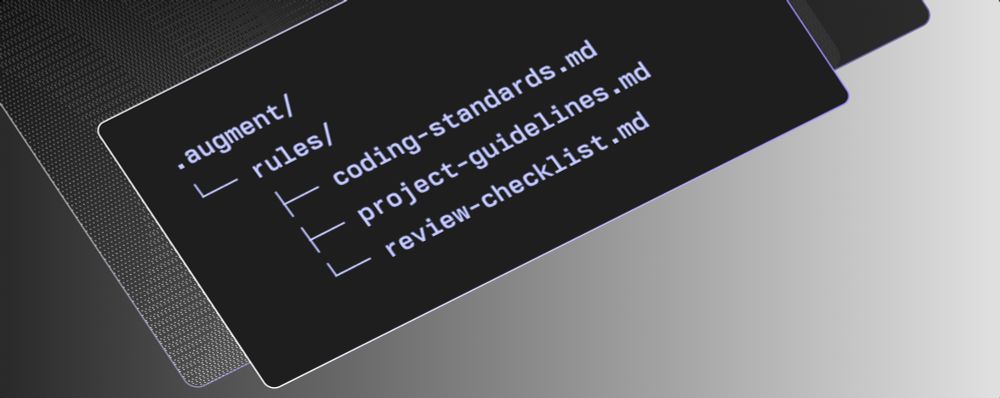

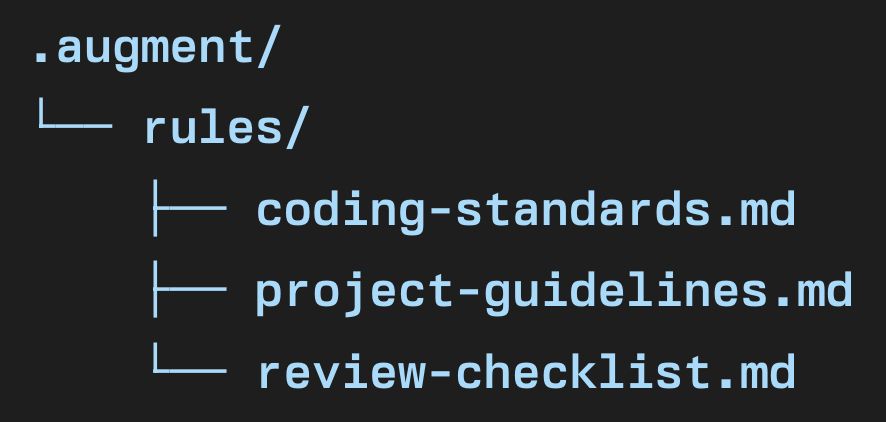

Introducing Augment Rules

The most powerful AI software development platform with the industry-leading context engine.

Ready to make your agent work the way you do? Create a .augment/rules/ folder in your repository and start customizing.

🔗 www.augmentcode.com/changelog/in...

09.07.2025 21:21 — 👍 1 🔁 0 💬 0 📌 0

Already using .augment-guidelines.md?

No changes required—your setup remains supported.

But Augment Rules offers even greater flexibility and control.

09.07.2025 21:21 — 👍 1 🔁 0 💬 1 📌 0

Three flexible ways to use Rules:

1️⃣ Always: Attach rules to every query automatically

2️⃣ Manual: Select rules per query as needed

3️⃣ Auto: Describe your task—the agent intelligently selects the most relevant rules

09.07.2025 21:21 — 👍 0 🔁 0 💬 1 📌 0

Get started in seconds:

🧠 Smart Rule Selection: Agent Requested mode finds what’s relevant for each task

🚀 Seamless Migration: Import rules from other tools, or use your existing Augment guidelines

🧩 Flexible Organization: Use any file name or structure to match your workflow

09.07.2025 21:21 — 👍 0 🔁 0 💬 1 📌 0

Every project, team, and workflow is unique.

Augment Rules empower you to specify exactly how your agent should behave. Simply add instruction files to .augment/rules/ and your agent will adapt.
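A minimal sketch of that setup step, assuming nothing beyond the folder path named above. The `add_rule` helper, the file name `testing.md`, and the rule text are all made up for illustration; the posts do not specify a rule file format, so the file is treated as plain text.

```python
from pathlib import Path


def add_rule(repo_root: str, name: str, text: str) -> Path:
    """Write one instruction file into <repo_root>/.augment/rules/.

    Hypothetical helper: the file name and contents are up to you.
    """
    rules_dir = Path(repo_root) / ".augment" / "rules"
    # Create the folder (and .augment/) if it does not exist yet.
    rules_dir.mkdir(parents=True, exist_ok=True)
    rule_file = rules_dir / name
    rule_file.write_text(text, encoding="utf-8")
    return rule_file


# Example call (assumed file name and wording):
# add_rule(".", "testing.md", "Follow the structure of existing tests.\n")
```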

09.07.2025 21:21 — 👍 0 🔁 0 💬 1 📌 0

With Augment Rules, your software agent can build just like your team does.

09.07.2025 21:21 — 👍 2 🔁 0 💬 1 📌 1

Stay in-session. Build context.

Correct it like you would a teammate:

“This is close—just fix the null case.”

“Leave the rest as-is.”

You’ll be surprised how far a few nudges go.

07.07.2025 16:22 — 👍 0 🔁 0 💬 0 📌 0

Failure is feedback.

It tells you:

– What the Agent misunderstood

– What you didn’t explain

– What to clarify next

Don’t bail—refine.

07.07.2025 16:22 — 👍 0 🔁 0 💬 1 📌 0

Let it write and run tests.

Then iterate:

“Tests failed—what went wrong?”

“Fix the off-by-one error in test 3.”

“Rerun and confirm.”

Quick cycles beat careful guesses every time.

07.07.2025 16:22 — 👍 0 🔁 0 💬 1 📌 0

The best Agent workflows look like test-driven development:

Write → run → fix → rerun.

You’re not aiming for a perfect prompt. You’re building momentum.
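The write → run → fix → rerun cycle can be sketched as a small loop. This is an illustration, not any tool's real API: `run_tests` and `ask_agent` are hypothetical callables you would supply yourself, and `max_rounds` is an assumed safety limit.

```python
from typing import Callable, Tuple


def feedback_loop(
    run_tests: Callable[[], Tuple[bool, str]],
    ask_agent: Callable[[str], None],
    max_rounds: int = 3,
) -> bool:
    """Run tests, hand failures back to the agent, and rerun.

    Both callables are hypothetical stand-ins: run_tests returns
    (passed, output) and ask_agent sends a follow-up message.
    """
    for _ in range(max_rounds):
        passed, output = run_tests()
        if passed:
            return True
        # Feed the concrete failure back instead of starting over.
        ask_agent(f"Tests failed. What went wrong?\n{output}")
    # One last run to check whether the final fix worked.
    return run_tests()[0]
```

The point of the design is that each round carries the actual test output into the next message, so the conversation keeps its context.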

07.07.2025 16:22 — 👍 0 🔁 0 💬 1 📌 0

Prompt.

Wait.

It messes up.

Start over?

Not if you build a feedback loop.

Here’s how to make Agents actually useful 👇

07.07.2025 16:22 — 👍 1 🔁 0 💬 1 📌 0

PS: That’s why we built Prompt Enhancer. It auto-pulls context from your codebase and rewrites your prompt for clarity.

Available now in VS Code & JetBrains: just click ✨ and ship better prompts, faster.

03.07.2025 17:15 — 👍 1 🔁 0 💬 0 📌 0

TL;DR:

🛠️ Tools help

📁 File paths help

🧠 Rationale helps

📌 Examples help

Agents don’t need perfect prompts.

They need complete ones.

03.07.2025 17:15 — 👍 0 🔁 0 💬 1 📌 0

Examples work too—but only if they’re scoped:

❌ “Implement tests for ImageProcessor.”

✅ “Implement tests for ImageProcessor. Follow the pattern in text_processor.py.”

Now it knows what “good” looks like.

03.07.2025 17:15 — 👍 0 🔁 0 💬 1 📌 0

A high-context prompt answers the silent questions:

- Where should I look?

- What else is relevant?

- Are there examples I can follow?

- What’s the user really trying to do?

Agents don’t ask.

So you have to pre-answer.

03.07.2025 17:15 — 👍 0 🔁 0 💬 1 📌 0

Here’s what a low-context prompt looks like: “Enable JSON parser for chat backend.”

Sounds fine, right?

Until the Agent:

- Picks the wrong file

- Misses the LLMOutputParsing class

- Uses a config that doesn’t exist

Because you didn’t ground it.

03.07.2025 17:15 — 👍 0 🔁 0 💬 1 📌 0

Prompting isn’t about being clever.

It’s about giving the Agent everything it needs to not guess.

That means:

- Clear goals

- Relevant files

- Helpful examples

- Precise constraints

Agents don’t hallucinate randomly—they hallucinate from missing context.
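One way to make that checklist concrete is a small structure that holds the four ingredients and reports which ones are still empty. This is a sketch only: `PromptContext` and its field names are invented for illustration and are not part of any Agent API.

```python
from dataclasses import dataclass, field


@dataclass
class PromptContext:
    """Hypothetical container for goal, files, examples, and constraints."""

    goal: str
    files: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)

    def missing(self) -> list[str]:
        """Name the ingredients that are still empty."""
        parts = {
            "files": self.files,
            "examples": self.examples,
            "constraints": self.constraints,
        }
        return [name for name, value in parts.items() if not value]

    def render(self) -> str:
        """Assemble a plain-text prompt, skipping empty sections."""
        lines = [f"Goal: {self.goal}"]
        sections = (
            ("Relevant files", self.files),
            ("Examples to follow", self.examples),
            ("Constraints", self.constraints),
        )
        for label, items in sections:
            if items:
                lines.append(f"{label}:")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


ctx = PromptContext(goal="Add JSON parser in LLMOutputParsing", files=["services/"])
print(ctx.missing())  # ['examples', 'constraints']
```

Checking `missing()` before sending is a cheap way to notice what the Agent would otherwise have to guess.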

03.07.2025 17:15 — 👍 1 🔁 0 💬 1 📌 0

Most Agent failures aren’t model problems. They’re context problems.

If you give vague or incomplete info, the Agent will fill in the blanks—and usually get them wrong.

Here’s how to write high-context prompts that actually work 👇

03.07.2025 17:15 — 👍 2 🔁 0 💬 1 📌 0

Guess now we can predict Asia's lunch time 💀

02.07.2025 16:14 — 👍 0 🔁 0 💬 0 📌 0
