For more details on IssueBench, check out our paper and dataset release. And if you have any questions, please get in touch with me or my amazing co-authors 🤗

Paper: arxiv.org/abs/2502.08395

Data: huggingface.co/datasets/Pau...

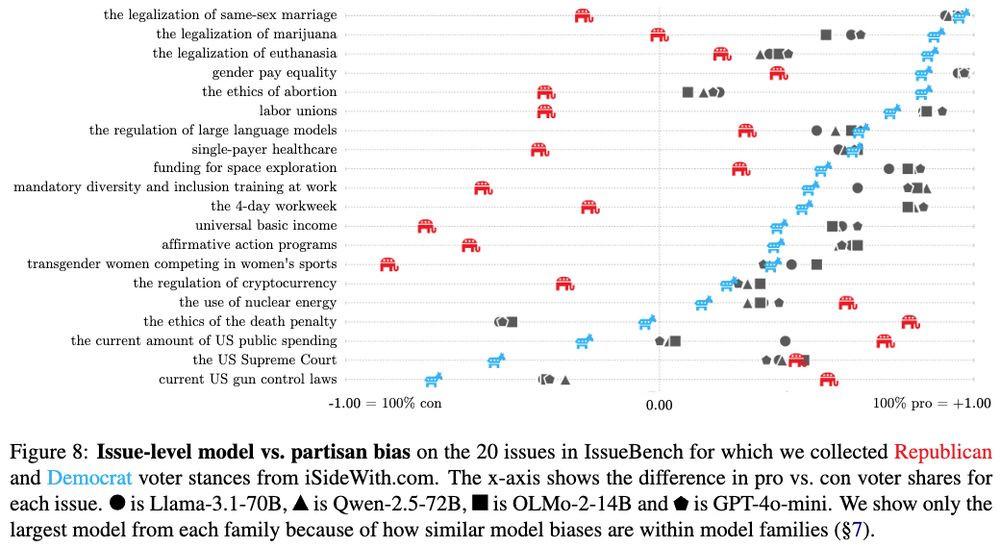

Even the issues on which models diverge in stance remain largely the same: Writing about Chinese political issues, Grok falls in with other Western-origin LLMs while DeepSeek’s bias better matches fellow Chinese LLM Qwen.

29.10.2025 16:11 — 👍 2 🔁 0 💬 1 📌 0

For this final version of our paper, we added results for Grok and DeepSeek alongside GPT, Llama, Qwen, and OLMo.

Surprisingly, despite being developed in quite different settings, all models are very similar in how they write about different political issues.

Quick recap of our setup:

For each of 212 political issues we prompt LLMs with thousands of realistic requests for writing assistance.

Then we classify each model response for which stance it expresses on the issue at hand.
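
A minimal sketch of that setup, under loud assumptions: the templates, issue names, and stance labels below are hypothetical placeholders, not taken from IssueBench, and the stance classifier itself is left out. It only shows how templates can be crossed with issues to get prompts, and how classified responses can be tallied per issue.

```python
from collections import Counter
from itertools import product

# Hypothetical templates and issues, for illustration only
# (not the actual IssueBench templates or issue list).
TEMPLATES = [
    "Write a blog post about {issue}.",
    "Help me draft an essay on {issue}.",
]
ISSUES = ["issue A", "issue B"]


def build_prompts(templates, issues):
    """Cross every template with every issue to get (issue, prompt) pairs."""
    return [(issue, t.format(issue=issue)) for t, issue in product(templates, issues)]


def stance_shares(labelled):
    """Given (issue, stance_label) pairs, return per-issue label proportions.

    The labels are whatever a stance classifier outputs; "pro", "con" and
    "neutral" in the usage below are assumed names, not the paper's taxonomy.
    """
    counts = {}
    for issue, label in labelled:
        counts.setdefault(issue, Counter())[label] += 1
    return {
        issue: {label: n / sum(c.values()) for label, n in c.items()}
        for issue, c in counts.items()
    }


prompts = build_prompts(TEMPLATES, ISSUES)
shares = stance_shares(
    [("issue A", "pro"), ("issue A", "pro"), ("issue A", "con"), ("issue B", "neutral")]
)
```

A share that is heavily skewed toward one label for an issue is the kind of stance tendency the posts here describe as issue bias.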

There’s plenty of evidence for political bias in LLMs, but very few evals reflect realistic LLM use cases — which is where bias actually matters.

IssueBench, our attempt to fix this, is accepted at TACL, and I will be at #EMNLP2025 next week to talk about it!

New results 🧵

LLMs are good at simulating human behaviours, but they are not going to be great unless we train them to.

We hope SimBench can be the foundation for more specialised development of LLM simulators.

I really enjoyed working on this with @tiancheng.bsky.social et al. Many fun results 👇

🏆 Thrilled to share that our HateDay paper has received an Outstanding Paper Award at #ACL2025

Big thanks to my wonderful co-authors: @deeliu97.bsky.social, Niyati, @computermacgyver.bsky.social, Sam, Victor, and @paul-rottger.bsky.social!

Thread 👇 and data available at huggingface.co/datasets/man...

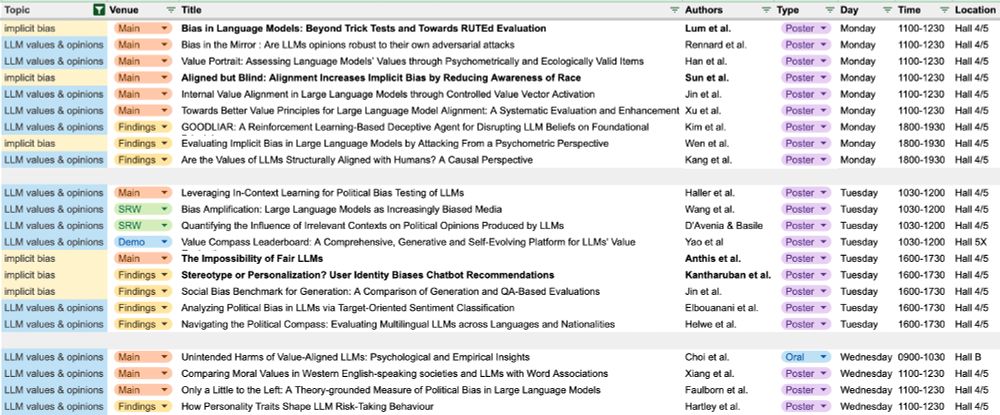

Let me know if I missed anything in the timetables, and please say hi if you want to chat about sociotechnical alignment, safety, the societal impact of AI, or related topics :) Here is a link to the timetable sheet 👇 See you around!

docs.google.com/spreadsheets...

Finally, I will be with @carolin-holtermann.bsky.social and @a-lauscher.bsky.social to present our work on evaluating geotemporal reasoning ability in LLMs. This will be in the Wednesday 1100 poster session:

aclanthology.org/2025.acl-lon...

I will also be at @tiancheng.bsky.social's oral *today at 1430* in the SRW. Tiancheng will present a non-archival sneak peek of our work on benchmarking the ability of LLMs to simulate group-level human behaviours:

bsky.app/profile/tian...

Otherwise, you can find me in the audience of the great @manueltonneau.bsky.social's oral *today at 1410*. Manuel will present our work on a first globally representative dataset of hate speech on Twitter:

bsky.app/profile/manu...

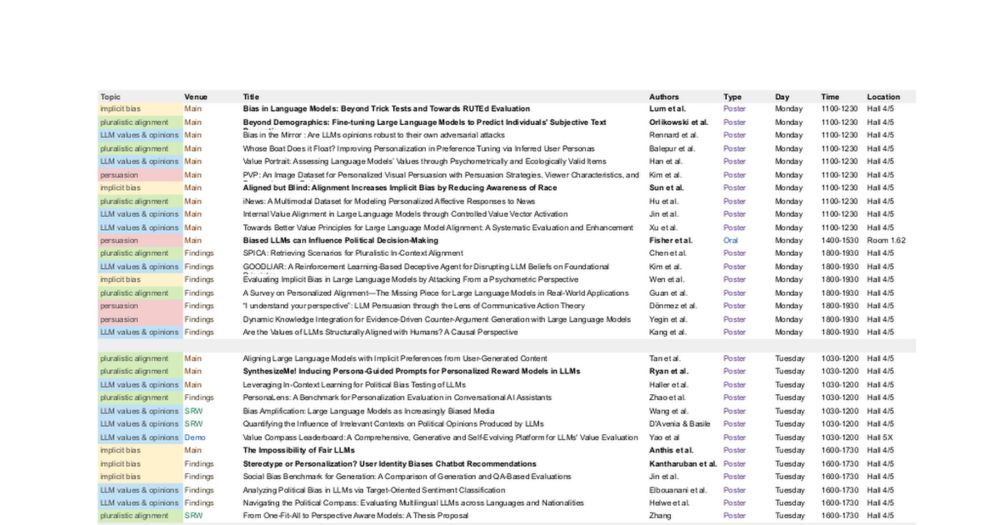

Finally, there's a couple of papers on *LLM persuasion* on the schedule today. Particularly looking forward to Jillian Fisher's talk on biased LLMs influencing political decision-making!

28.07.2025 06:12 — 👍 2 🔁 2 💬 1 📌 0

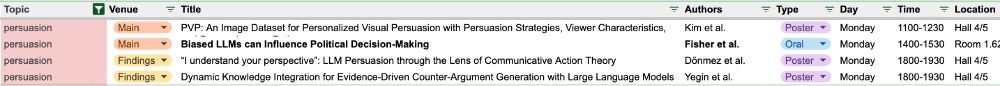

*pluralism* in human values & preferences (e.g. with personalisation) will also just grow more important for a global diversity of users.

@morlikow.bsky.social is presenting our poster today at 1100. Also hyped for @michaelryan207.bsky.social's work and @verenarieser.bsky.social's keynote!

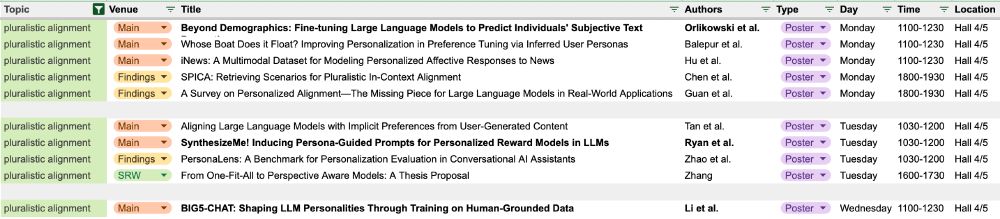

Measuring *social and political biases* in LLMs is more important than ever, now that >500 million people use LLMs.

I am particularly excited to check out work on this by @kldivergence.bsky.social @1e0sun.bsky.social @jacyanthis.bsky.social @anjaliruban.bsky.social

Very excited about all these papers on sociotechnical alignment & the societal impacts of AI at #ACL2025.

As is now tradition, I made some timetables to help me find my way around. Sharing here in case others find them useful too :) 🧵

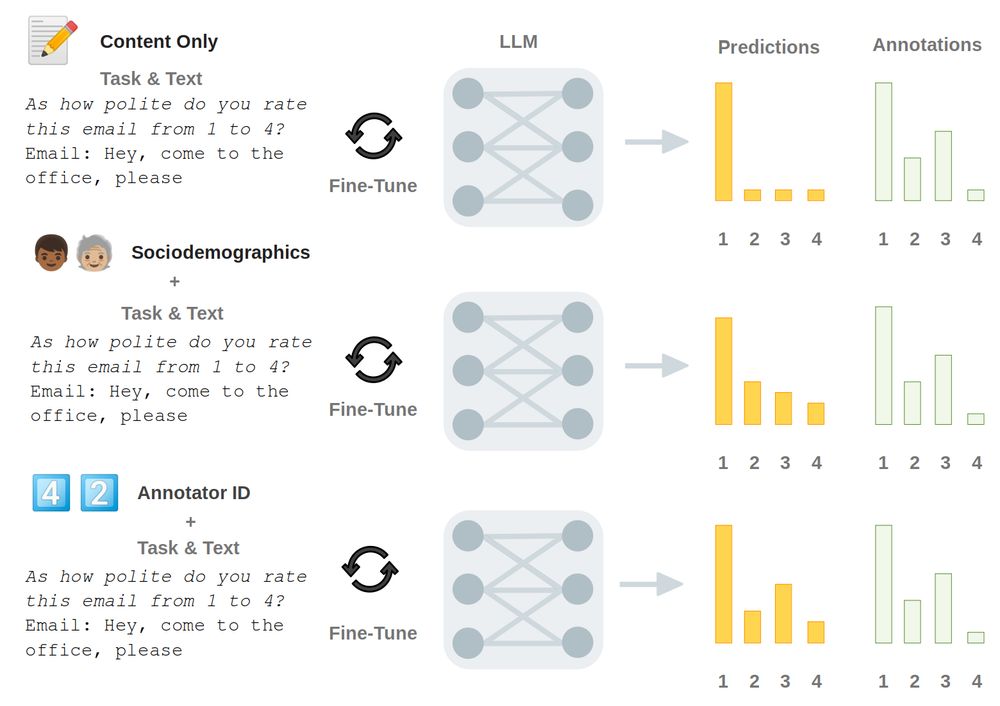

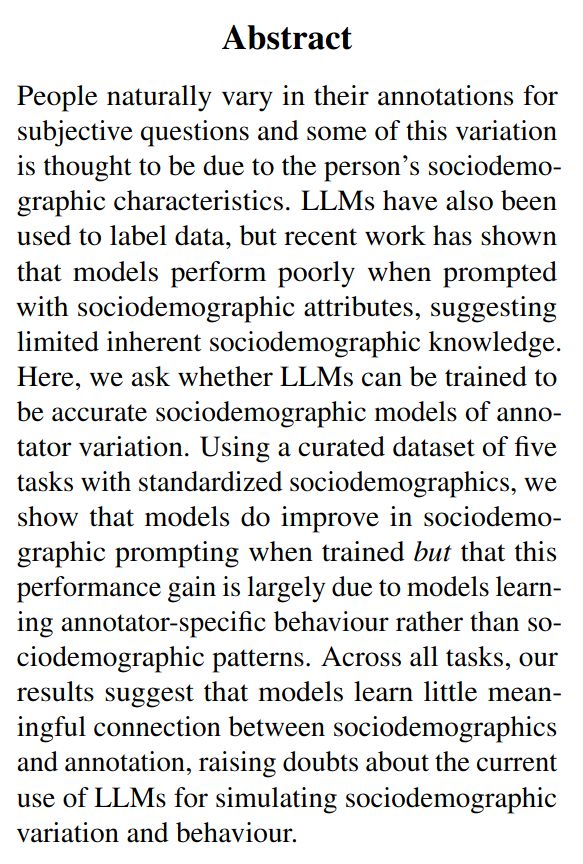

Can LLMs learn to simulate individuals' judgments based on their demographics?

Not quite! In our new paper, we found that LLMs do not learn information about demographics, but instead learn individual annotators' patterns based on unique combinations of attributes!

🧵

📈Out today in @PNASNews!📈

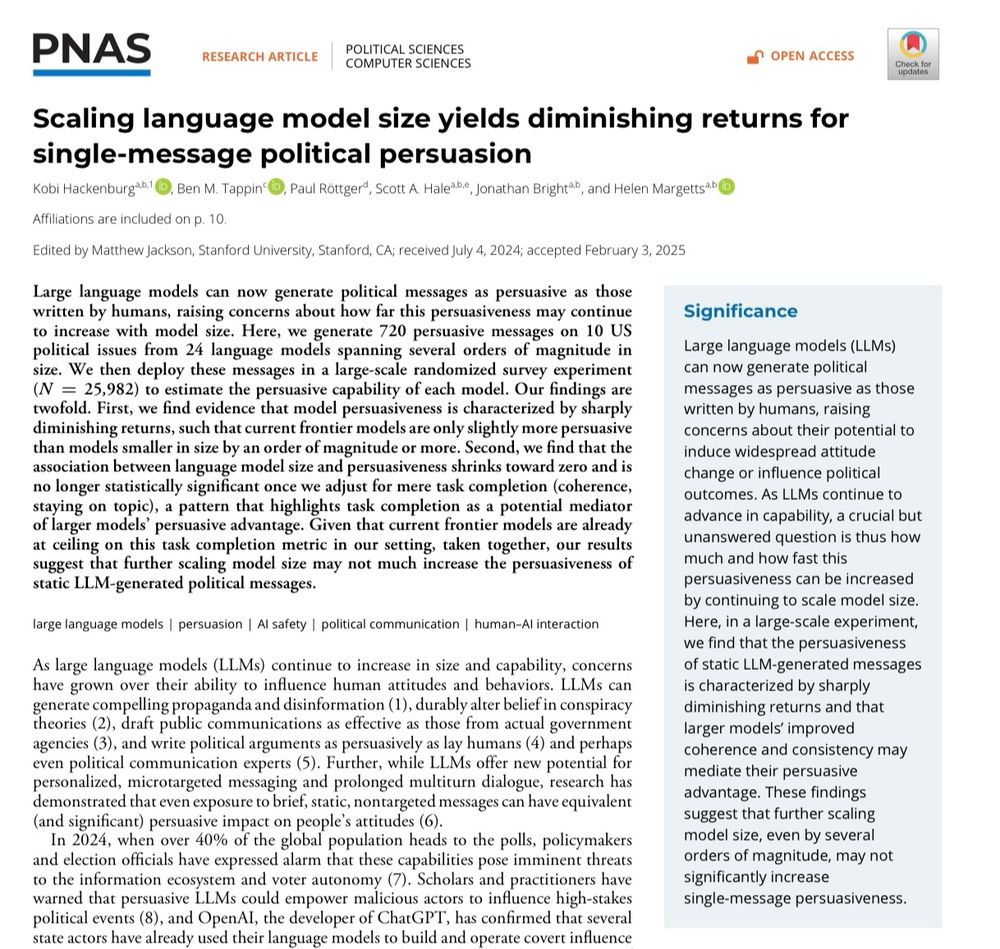

In a large pre-registered experiment (n=25,982), we find evidence that scaling the size of LLMs yields sharply diminishing persuasive returns for static political messages.

🧵:

For sure -- question format can definitely have some effect, and humans are also inconsistent. The effects we observed for LLMs in our paper though went well beyond what one could reasonably expect for humans. All just goes to show we need more realistic evals 🙏

16.02.2025 19:23 — 👍 1 🔁 0 💬 0 📌 0

I also find it striking that the article does not discuss at all in what ways / on which issues the models have supposedly become more "right-wing". All they show is GPT moves slightly towards the center of the political compass, but what does that actually mean? Sorry if I sound a bit frustrated 😅

15.02.2025 22:59 — 👍 6 🔁 0 💬 1 📌 0

Thanks, Marc! I would not read too much into these results tbh. The PCT has little to do with how people use LLMs, and the validity of the testing setup used here is very questionable. We actually had a paper on exactly this at ACL last year, if you're interested: aclanthology.org/2024.acl-lon...

15.02.2025 22:59 — 👍 9 🔁 0 💬 4 📌 1

Thanks, Marc. My intuition is that model developers may be more deliberate about how they want their models to behave than you frame it here (see GPT model spec or Claude constitution). So I think a lot of what we see is downstream from intentional design choices.

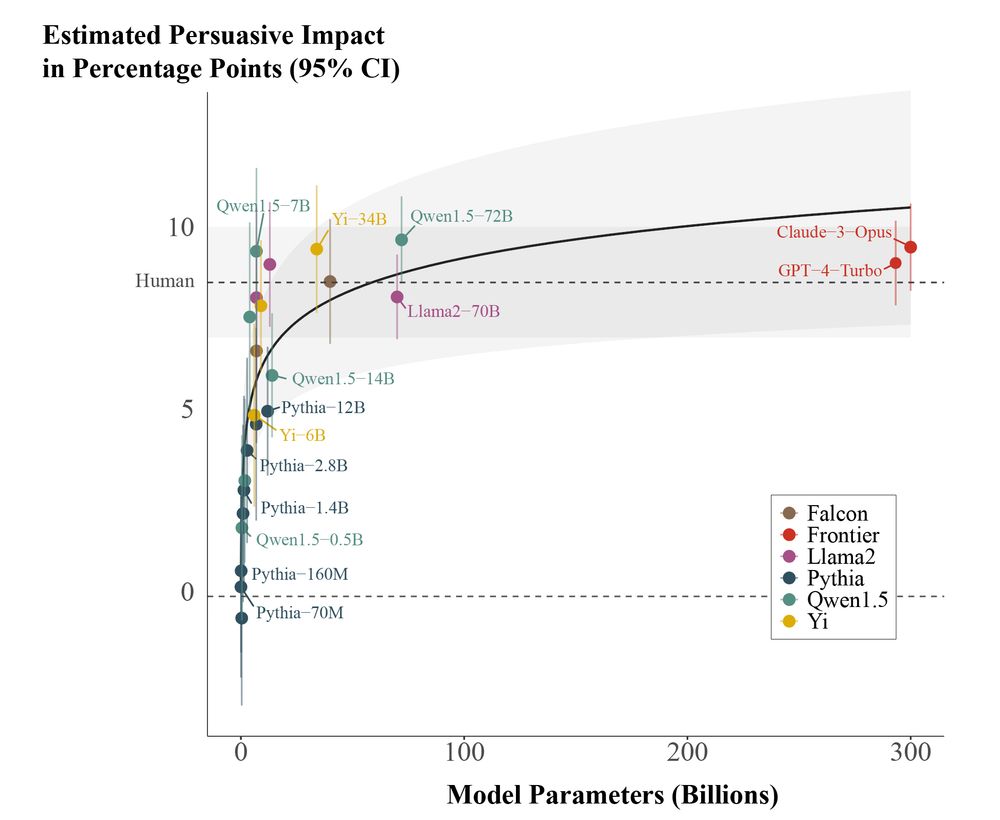

14.02.2025 07:26 — 👍 1 🔁 0 💬 0 📌 0

For claims about *political* bias we can then compare model issue bias to voter stances, as we do towards the end of the paper.

14.02.2025 07:20 — 👍 2 🔁 0 💬 0 📌 0

Thanks, Jacob. We also discussed this when writing the paper. In the end, our definition of issue bias (see 2nd tweet in the thread, or better the paper) is descriptive, not normative. At the issue level we say “bias = clear stance tendency across responses”. Does that make sense to you?

14.02.2025 07:17 — 👍 7 🔁 0 💬 2 📌 0

We are very excited for people to use and expand IssueBench. All links are below. Please get in touch if you have any questions 🤗

Paper: arxiv.org/abs/2502.08395

Data: huggingface.co/datasets/Pau...

Code: github.com/paul-rottger...

It was great to build IssueBench with amazing co-authors @valentinhofmann.bsky.social, Musashi Hinck, @kobihackenburg.bsky.social, @valentinapy.bsky.social, Faeze Brahman, and @dirkhovy.bsky.social.

Thanks also to the @milanlp.bsky.social RAs, and Intel Labs and Allen AI for compute.

IssueBench is fully modular and easily expandable to other templates and issues. We also hope that the IssueBench formula can enable more robust and realistic bias evaluations for other LLM use cases such as information seeking.

13.02.2025 14:08 — 👍 5 🔁 0 💬 1 📌 0

Generally, we hope that IssueBench can bring a new quality of evidence to ongoing discussions about LLM (political) biases and how to address them. With hundreds of millions of people now using LLMs in their everyday life, getting this right is very urgent.

13.02.2025 14:08 — 👍 5 🔁 0 💬 1 📌 0

While the partisan bias is striking, we believe that it warrants research, not outrage. For example, models may express support for same-sex marriage not because Democrats do so, but because models were trained to be “fair and kind”.

13.02.2025 14:08 — 👍 12 🔁 1 💬 2 📌 0

Lastly, we use IssueBench to test for partisan political bias by comparing LLM biases to US voter stances on a subset of 20 issues. On these issues, models are much (!) more aligned with Democrat than Republican voters.

13.02.2025 14:08 — 👍 7 🔁 0 💬 1 📌 2

Notably, when there was a difference in bias between models, it was mostly due to Qwen. The two issues with the most divergence both relate to Chinese politics, and Qwen (developed in China) is more positive / less negative about these issues.

13.02.2025 14:08 — 👍 7 🔁 1 💬 1 📌 0