Grateful to win Best Paper at ACL for our work on Fairness through Difference Awareness with my amazing collaborators!! Check out the paper for why we think fairness has both gone too far, and at the same time, not far enough aclanthology.org/2025.acl-lon...

30.07.2025 15:34 — 👍 26 🔁 4 💬 0 📌 0

Angelina Wang presents the benchmark with Jewish synagogue hiring as an example.

@angelinawang.bsky.social presents the "Fairness through Difference Awareness" benchmark. Fairness tests require no discrimination...

but the law supports many forms of discrimination! E.g., synagogues should hire Jewish rabbis. LLMs often get this wrong aclanthology.org/2025.acl-lon... #ACL2025NLP

30.07.2025 07:26 — 👍 5 🔁 1 💬 1 📌 0

Was beyond disappointed to see this in the AI Action Plan. Messing with the NIST RMF (which many private & public institutions currently rely on) feels like a cheap shot

24.07.2025 14:25 — 👍 11 🔁 5 💬 2 📌 1

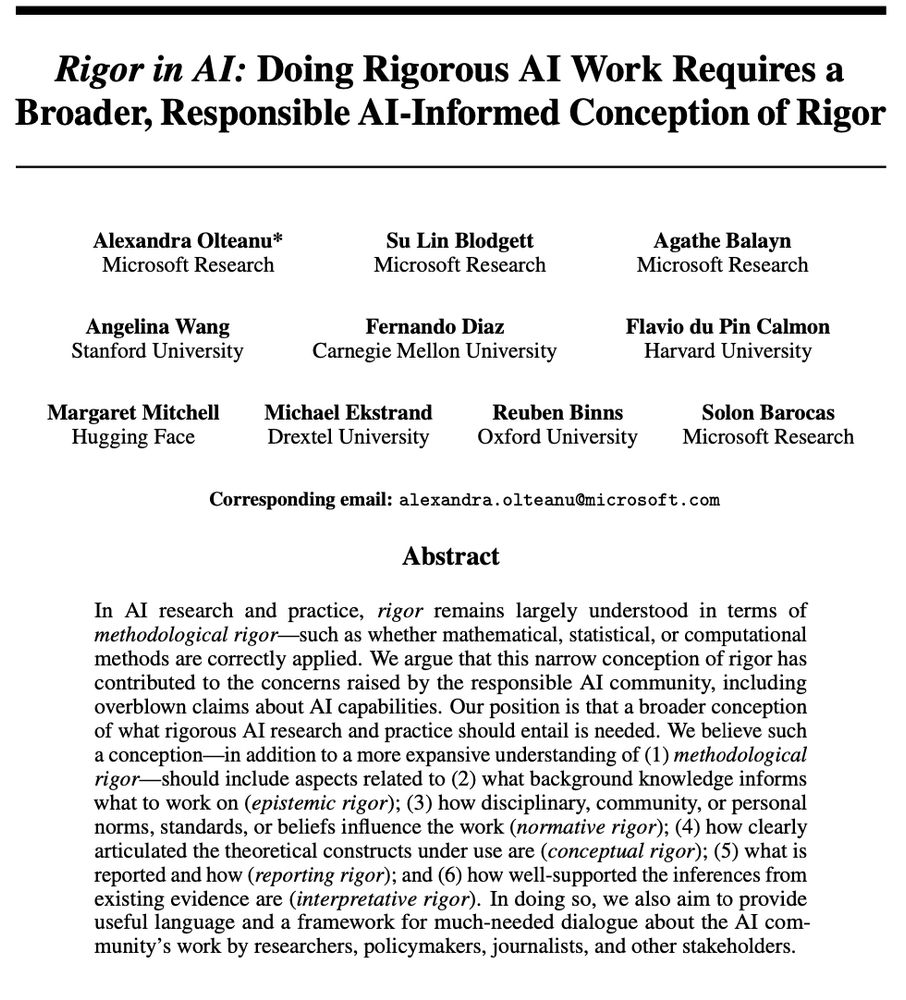

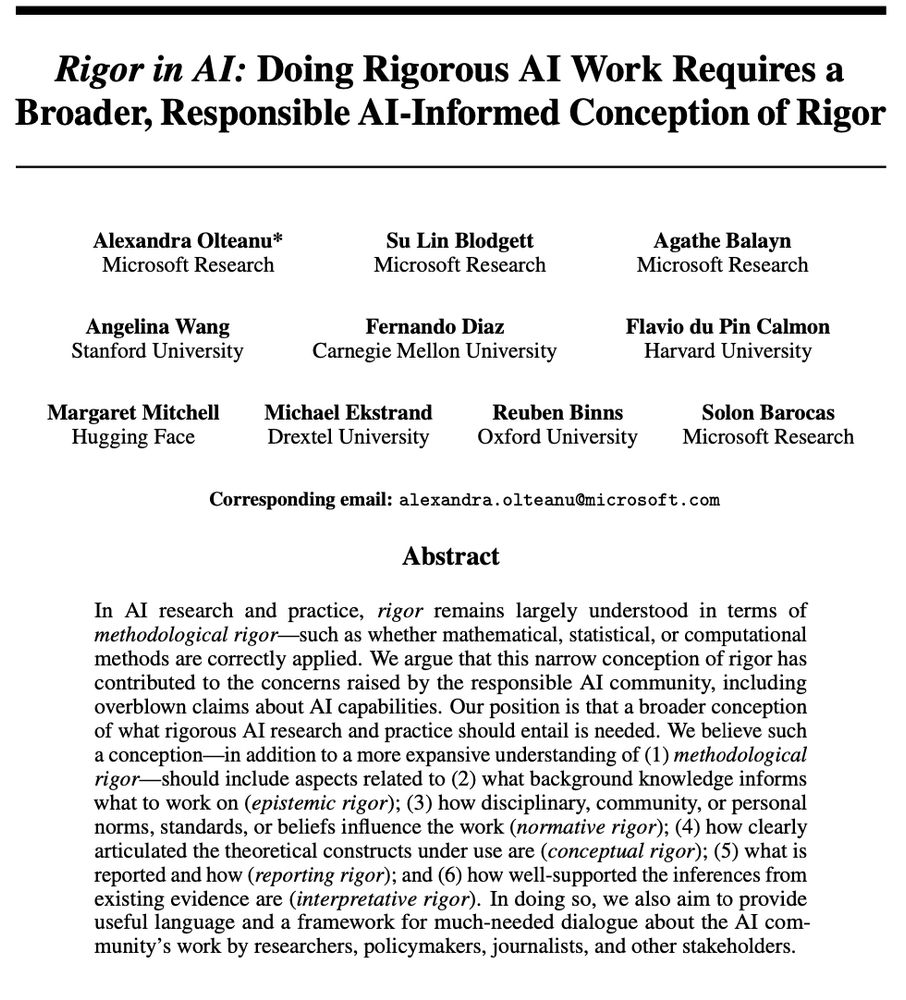

Screenshot of the first page of a paper preprint titled "Rigor in AI: Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor" by Olteanu et al. Paper abstract: "In AI research and practice, rigor remains largely understood in terms of methodological rigor -- such as whether mathematical, statistical, or computational methods are correctly applied. We argue that this narrow conception of rigor has contributed to the concerns raised by the responsible AI community, including overblown claims about AI capabilities. Our position is that a broader conception of what rigorous AI research and practice should entail is needed. We believe such a conception -- in addition to a more expansive understanding of (1) methodological rigor -- should include aspects related to (2) what background knowledge informs what to work on (epistemic rigor); (3) how disciplinary, community, or personal norms, standards, or beliefs influence the work (normative rigor); (4) how clearly articulated the theoretical constructs under use are (conceptual rigor); (5) what is reported and how (reporting rigor); and (6) how well-supported the inferences from existing evidence are (interpretative rigor). In doing so, we also aim to provide useful language and a framework for much-needed dialogue about the AI community's work by researchers, policymakers, journalists, and other stakeholders."

We have to talk about rigor in AI work and what it should entail. The reality is that impoverished notions of rigor do not only lead to some one-off undesirable outcomes but can have a deeply formative impact on the scientific integrity and quality of both AI research and practice 1/

18.06.2025 11:48 — 👍 61 🔁 18 💬 2 📌 3

Alright, people, let's be honest: GenAI systems are everywhere, and figuring out whether they're any good is a total mess. Should we use them? Where? How? Do they need a total overhaul?

(1/6)

15.06.2025 00:20 — 👍 33 🔁 11 💬 1 📌 0

I’ll be at both FAccT in Athens and ACL in Vienna this summer presenting these works, come say hi :)

02.06.2025 16:38 — 👍 5 🔁 0 💬 0 📌 0

Screenshot of paper title and author: "Identities are not Interchangeable: The Problem of Overgeneralization in Fair Machine Learning" by Angelina Wang

2. 𝗿𝗮𝗰𝗶𝘀𝗺 ≠ 𝘀𝗲𝘅𝗶𝘀𝗺 ≠ 𝗮𝗯𝗹𝗲𝗶𝘀𝗺 ≠ … At FAccT 2025: Different oppressions manifest differently, and that matters for AI. Ex: neighborhoods segregate by race, but rarely by sex, shaping the harms we should target. arxiv.org/abs/2505.04038

02.06.2025 16:38 — 👍 4 🔁 0 💬 1 📌 0

Instead, we should permit differentiating based on the context. Ex: synagogues in America are legally allowed to discriminate by religion when hiring rabbis. Work with Michelle Phan, Daniel E. Ho, @sanmikoyejo.bsky.social arxiv.org/abs/2502.01926

02.06.2025 16:38 — 👍 1 🔁 1 💬 1 📌 0

Screenshot of paper title and author list: "Fairness through Difference Awareness: Measuring Desired Group Discrimination in LLMs" by Angelina Wang, Michelle Phan, Daniel E. Ho, Sanmi Koyejo

1. 𝗳𝗮𝗶𝗿𝗻𝗲𝘀𝘀 ≠ 𝘁𝗿𝗲𝗮𝘁𝗶𝗻𝗴 𝗴𝗿𝗼𝘂𝗽𝘀 𝘁𝗵𝗲 𝘀𝗮𝗺𝗲. At ACL 2025 Main: We diagnose issues like Google Gemini’s racially diverse Nazis to be a result of equating fairness with racial color-blindness, erasing important group differences.

02.06.2025 16:38 — 👍 3 🔁 0 💬 1 📌 0

In the pursuit of convenient definitions and equations of fairness that scale, we have abstracted away too much social context, enforcing equality between any and all groups. In two new works, we push back against two pervasive and pernicious assumptions:

02.06.2025 16:38 — 👍 1 🔁 0 💬 1 📌 0

Have you ever felt that AI fairness was too strict, enforcing fairness when it didn’t seem necessary? How about too narrow, missing a wide range of important harms? We argue that the way to address both of these critiques is to discriminate more 🧵

02.06.2025 16:38 — 👍 12 🔁 1 💬 1 📌 0

My ‘woke DEI’ grant has been flagged for scrutiny. Where do I go from here?

My work in making artificial intelligence fair has been noticed by US officials intent on ending ‘class warfare propaganda’.

The US government recently flagged my scientific grant in its "woke DEI database". Many people have asked me what I will do.

My answer today in Nature.

We will not be cowed. We will keep using AI to build a fairer, healthier world.

www.nature.com/articles/d41...

25.04.2025 17:19 — 👍 39 🔁 13 💬 1 📌 1

If you work in ML fairness, you probably get asked a similar set of questions by ML-focused folks, such as what the best definition or equation for fairness is. For those interested, please read, and if you are often asked these questions, feel free to pass the site on!

17.03.2025 14:39 — 👍 1 🔁 0 💬 0 📌 0

I've recently put together a "Fairness FAQ": tinyurl.com/fairness-faq. If you work in non-fairness ML and you've heard about fairness, perhaps you've wondered things like what the best definitions of fairness are, and whether we can train algorithms that optimize for it.

17.03.2025 14:39 — 👍 44 🔁 19 💬 3 📌 1

*Please repost* @sjgreenwood.bsky.social and I just launched a new personalized feed (*please pin*) that we hope will become a "must use" for #academicsky. The feed shows posts about papers filtered by *your* follower network. It's become my default Bluesky experience bsky.app/profile/pape...

10.03.2025 18:14 — 👍 509 🔁 292 💬 24 📌 79

I am excited to announce that I will join the University of Zurich as an assistant professor in August this year! I am looking for PhD students and postdocs starting from the fall.

My research interests include optimization, federated learning, machine learning, privacy, and unlearning.

06.03.2025 02:17 — 👍 28 🔁 5 💬 1 📌 1

Cutting $880 billion from Medicaid is going to have a lot of devastating consequences for a lot of people

26.02.2025 03:11 — 👍 31052 🔁 6915 💬 2031 📌 511

Yes, these are good points, and thanks for the pointer! But the trajectory does seem to be toward LLMs replacing human participants in certain cases. The presence of these companies, for instance, signals real-world use to me: www.syntheticusers.com, synthetic-humans.ai

20.02.2025 02:08 — 👍 2 🔁 0 💬 1 📌 0

Yes! The preprint is available here: arxiv.org/abs/2402.01908

20.02.2025 02:06 — 👍 2 🔁 0 💬 1 📌 0

A screenshot of an email notifying Spotsylvania parents that funding for a program for youth with disabilities has been canceled

the richest man in the world has decided that your kids don't deserve special education programs

16.02.2025 18:14 — 👍 10705 🔁 4700 💬 351 📌 628

Our results differ from work that affirmatively shows LLMs can simulate human participants. We test if LLMs can match the distribution of human responses — not just the mean — and use more realistic free responses instead of multiple choice. The details matter!

17.02.2025 16:37 — 👍 7 🔁 1 💬 1 📌 0

Training data phrases like “Black women” are more often used in text *about* a group than in text *from* a group, so outputs to LLM prompts like “You are a Black woman” more closely resemble what out-group members think a group is like than what in-group members are actually like.

17.02.2025 16:37 — 👍 9 🔁 1 💬 1 📌 0

Our new piece in Nature Machine Intelligence: LLMs are replacing human participants, but can they simulate diverse respondents? Surveys use representative sampling for a reason, and our work shows how LLM training prevents accurate simulation of different human identities.

17.02.2025 16:36 — 👍 150 🔁 35 💬 9 📌 2

A poster advertising the first workshop on sociotechnical AI governance with a description of the workshop's core themes and faces of the organizers.

📢📢 Introducing the 1st workshop on Sociotechnical AI Governance at CHI’25 (STAIG@CHI'25)! Join us to build a community to tackle AI governance through a sociotechnical lens and drive actionable strategies.

🌐 Website: chi-staig.github.io

🗓️ Submit your work by: Feb 17, 2025

23.12.2024 18:47 — 👍 44 🔁 17 💬 2 📌 5

Ok #FAccT folks - I'm still looking around for people. Who am I missing?

go.bsky.app/EQF5Ne1

14.11.2024 17:16 — 👍 58 🔁 18 💬 25 📌 1

Benchmark suites differ from today's benchmark collections, which are often assembled based on availability and convenience. Instead, suites should be curated with the goal of completeness, and should encourage friction by design to force contextual deliberation about model superiority.

11.11.2024 21:02 — 👍 2 🔁 0 💬 1 📌 0

There are numerous leaderboards for AI capabilities and risks, for example, fairness. In new work, we argue that leaderboards are misleading because determining concepts like “fairness” is always contextual. Instead, we should use benchmark suites.

11.11.2024 21:02 — 👍 7 🔁 2 💬 1 📌 0

Professor @ University of Washington iSchool. Computing, learning, design, justice, kitties, tacos, coffee, gender, politics, and transit. I write at amyjko.medium.com. I maintain wordplay.dev, bookish.press, adminima.app, reciprocal.reviews.

Building personalized Bluesky feeds for academics! Pin Paper Skygest, which serves posts about papers from accounts you're following: https://bsky.app/profile/paper-feed.bsky.social/feed/preprintdigest. By @sjgreenwood.bsky.social and @nkgarg.bsky.social

Applied scientist working on LLM evaluation and publishing in AI ethics. Formerly: technical writing, philosophy. Urbanism nerd in my spare time. Opinions here my own. he/they 🏳️⚧️. https://boltzmann-brain.github.io/

Postdoc @milanlp.bsky.social / Incoming Postdoc @stanfordnlp.bsky.social / Computational social science, LLMs, algorithmic fairness

I’m not like the other Bayesians. I’m different.

Thinks about philosophy of science, AI ethics, machine learning, models, & metascience. postdoc @ Princeton.

Researcher @Microsoft; PhD @Harvard; Incoming Assistant Professor @MIT (Fall 2026); Human-AI Interaction, Worker-Centric AI

zbucinca.github.io

Incoming Asst Prof @UMD Info College, currently postdoc @UChicago. NLP, computational social science, political communication, linguistics. Past: Info PhD @UMich, CS + Lx @Stanford. Interests: cats, Yiddish, talking to my cats in Yiddish.

Associate Professor, Yale Statistics & Data Science. Social networks, social and behavioral data, causal inference, mountains. https://jugander.github.io/

Computational social scientist researching human-AI interaction and machine learning, particularly the rise of digital minds. Visiting scholar at Stanford, co-founder of Sentience Institute, and PhD candidate at University of Chicago. jacyanthis.com

Associate prof at @UMich in SI and CSE working in computational social science and natural language processing. PI of the Blablablab blablablab.si.umich.edu

MIT postdoc, incoming UIUC CS prof

katedonahue.me

Faculty at MPI-SP. Computer scientist researching data protection & governance, digital well-being, and responsible computing (IR/ML/AI).

https://asiabiega.github.io/

Postdoc researcher @MicrosoftResearch, previously @TUDelft.

Interested in the intricacies of AI production and their social and political-economic impacts; the gap between policies and practices (AI fairness, explainability, transparency, assessments)

PhD @ MIT EECS | ML Fairness and Responsible AI | he/they

Previously Princeton AB Mathematics and Visual AI Lab

aaronserianni.com

Assistant Professor at UMD College Park | Past: JPMorgan, CMU, IBM Research, Dataminr, IIT KGP | Trustworthy ML, Interpretability, Fairness, Information Theory, Optimization, Stat ML

Psychologist studying social cognition, stereotypes, individual & structural, computational principles. Assistant Prof @UChicago

CS PhD student at Cornell Tech. Interested in interactions between algorithms and society. Princeton math '22.

kennypeng.me

public interest technologist // luddite hacker.

information science phd at cornell tech.

inequality, ai, and the information ecosystem.

🤠 traeve.com 🤠