I hope you've found this post insightful.

Video by @VictorKaiWang1.

Follow me @_mwitiderrick for more thought-provoking posts.

Like/Retweet the first tweet below to share with your friends:

Derrick Mwiti

@mwiti.com.bsky.social

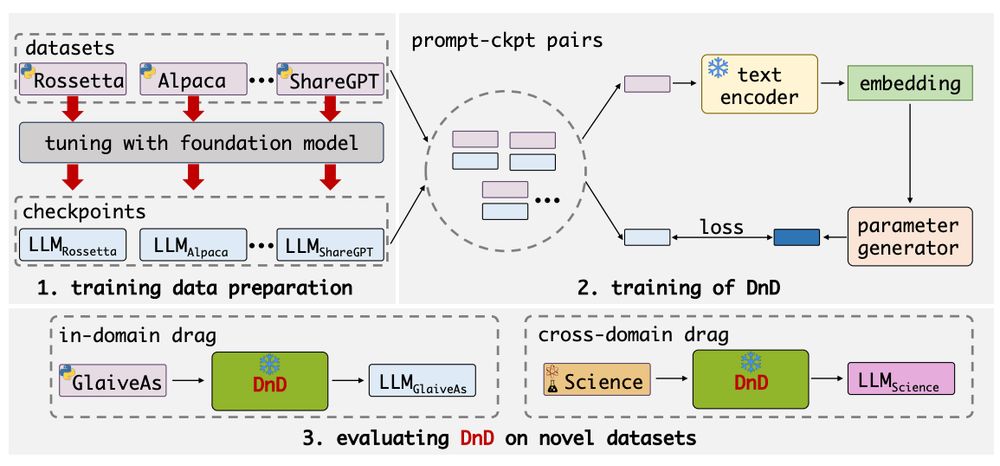

Drag-and-Drop LLMs unlock a future without fine-tuning bottlenecks.

No more hours of GPU time per task.

No more gradient descent.

Just drop in prompts and generate weights.

Get the repo: jerryliang24.github.io/DnD.

DnD uses a hyper-conv decoder, not diffusion.

It works with Qwen2.5 from 0.5B to 7B.

Uses Sentence-BERT to encode prompts, then generates full LoRA layers.

It's open-source and insanely efficient.

Instant LoRA generation is now real.
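For context on what "generating LoRA layers" means: a LoRA adapter for one weight matrix is a pair of low-rank matrices, and applying it adds their scaled product to the frozen weight. The sketch below shows only that standard merge step in plain Python. The function name `apply_lora`, the shapes, and all values are illustrative assumptions; it does not show how DnD's decoder produces the matrices.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(w, lora_b, lora_a, alpha, rank):
    """Return W + (alpha / rank) * B @ A, the usual LoRA merge."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Toy 2x2 frozen weight with a rank-1 adapter (made-up numbers).
w = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]   # shape (2, rank)
lora_a = [[0.5, -0.5]]    # shape (rank, 2)

merged = apply_lora(w, lora_b, lora_a, alpha=2.0, rank=1)
print(merged)
```

Whatever produces `lora_b` and `lora_a`, gradient descent or a generator conditioned on prompts, the merge itself stays this cheap.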

Outperforms LoRA on math, coding, reasoning, even multimodal.

Beats GPT-style baselines without seeing labels.

On HumanEval: pass@1 jumps from 17.6 to 32.7.

On GSM8K: better than fine-tuned models.

DnD generalizes across domains and model sizes.

DnD lets you customize LLMs in seconds.

Trained on prompt–checkpoint pairs, not full datasets.

It learns how prompts reshape weights, then skips training.

It’s like LoRA, but without the wait.

Zero-shot, zero-tuning, real results.

Drag-and-Drop LLMs just changed fine-tuning forever.

No training. No gradients. No labels.

Just prompts in, task-specific LoRA weights out.

12,000x faster than LoRA. Up to 30% better.

Here’s everything you need to know.

Read the full article: qdrant.tech/articles/mi...

16.06.2025 08:58

Inspired by COIL, fixed for real-world use:

✔ No expansion.

✔ Word-level, not token-level.

✔ Sparse by design.

✔ Generalizes out-of-domain.

And yes, it trains on a CPU in under a minute per word.

miniCOIL adds a semantic layer to BM25.

Each word gets a 4D vector that captures its meaning in context.

Trained per word using self-supervised triplet loss.

Fast to train. Fast to run.

Fits inside classic inverted indexes.
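A minimal sketch of a triplet loss on 4D word vectors, to make the training idea concrete. It assumes Euclidean distance and a margin of 1.0; miniCOIL's actual distance, margin, and sampling may differ, and the vectors here are made up.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Zero once the negative is at least `margin` farther away than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Hypothetical 4D vectors for the word "bat" in different sentences.
bat_cave = [1.0, 0.0, 0.0, 0.0]       # anchor: "bats sleep in caves"
bat_fruit = [1.0, 0.0, 0.0, 0.0]      # same sense (animal)
bat_baseball = [1.0, 3.0, 4.0, 0.0]   # different sense (sports)

good = triplet_loss(bat_cave, bat_fruit, bat_baseball)
bad = triplet_loss(bat_cave, bat_baseball, bat_fruit)
print(good, bad)
```

The loss is zero when same-sense usages sit closer together than different-sense ones, and grows when the order is flipped.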

BM25 can’t tell a fruit bat from a baseball bat.

Dense retrieval gets the meaning, but loses precision.

Now there’s miniCOIL: a sparse neural retriever that understands context.

It’s lightweight, explainable, and outperforms BM25 in 4 out of 5 domains.

Here’s how it works.

Read the full article: qdrant.tech/documentati...

12.06.2025 09:00

Use Qdrant’s `hnsw_config=models.HnswConfigDiff(m=0)` to skip HNSW for rerank vectors.

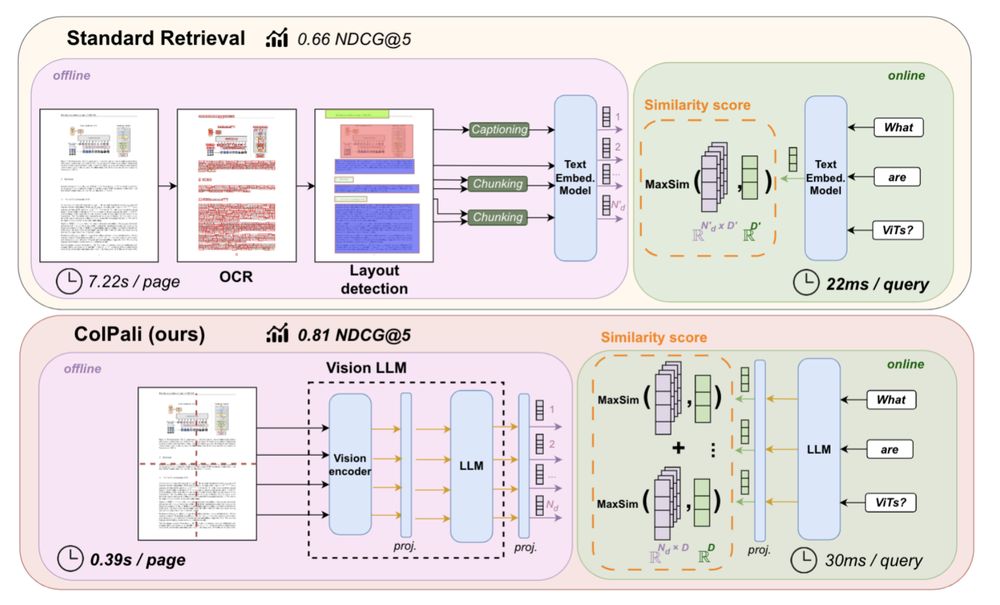
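A hedged sketch of what such a collection definition can look like, written as the JSON body for Qdrant's create-collection request and built as a plain Python dict. The vector names `dense` and `colbert` and the sizes 384 and 128 are assumptions for illustration; check the field names against the current Qdrant docs before relying on them.

```python
import json

# Assumed names and sizes; swap in the ones your embedding models use.
create_collection_body = {
    "vectors": {
        # Single dense vector per document: HNSW stays on for the fast first pass.
        "dense": {
            "size": 384,
            "distance": "Cosine",
        },
        # Token-level multivector used only for reranking.
        "colbert": {
            "size": 128,
            "distance": "Cosine",
            "multivector_config": {"comparator": "max_sim"},
            "hnsw_config": {"m": 0},  # m=0 turns off HNSW graph building
        },
    }
}

print(json.dumps(create_collection_body, indent=2))
```

With `m` set to 0, the rerank vectors are stored but never indexed, which is where the RAM savings come from.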

Dense vectors = fast first pass.

Multivectors = precise rerank.

Both live in the same Qdrant collection.

One API call handles it all.

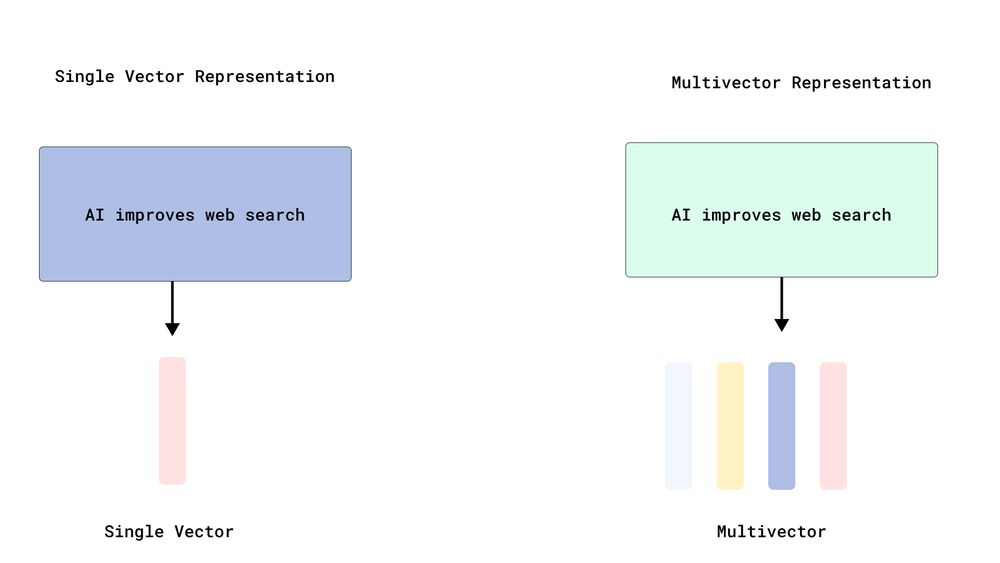

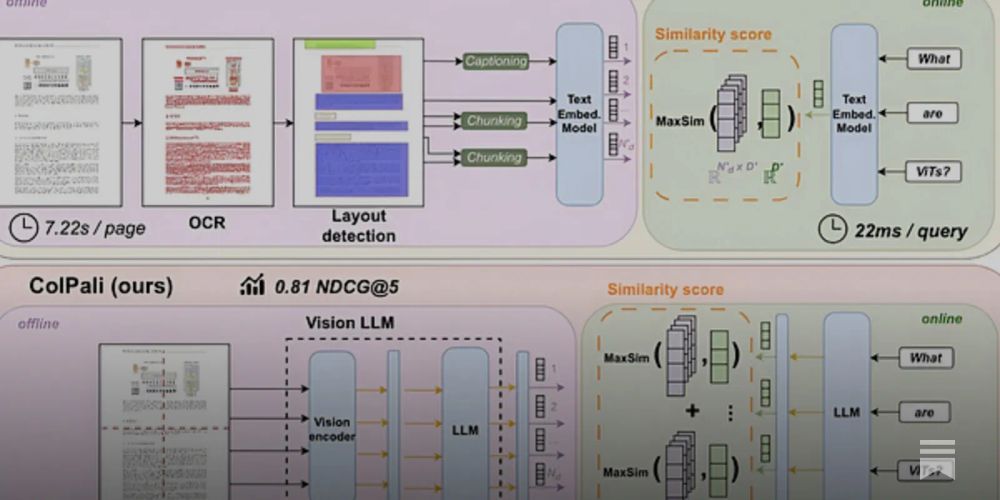

Most retrieval uses one vector per doc.

Multivector search? Hundreds.

Token-level embeddings let you match query phrases exactly.

Perfect for reranking with ColBERT or ColPali.

But don't index everything or you'll regret it.
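The late-interaction score used by ColBERT-style models (MaxSim) can be sketched in a few lines of plain Python: each query token takes its best match among the document tokens, and the best matches are summed. The toy 2D vectors are made up; real models use around 128 dimensions.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def max_sim(query_vectors, doc_vectors):
    """Sum, over query tokens, of the best dot product against any doc token."""
    return sum(max(dot(q, d) for d in doc_vectors) for q in query_vectors)


query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # has an exact match for each query token
doc_b = [[0.5, 0.5], [0.5, 0.5]]              # only partial matches

score_a = max_sim(query, doc_a)
score_b = max_sim(query, doc_b)
print(score_a, score_b)
```

Every query token is compared against every document token, so the cost grows with both counts. That is why this scoring fits reranking a short candidate list better than searching the whole collection.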

Everyone loves multivector search.

But most are doing it wrong.

Too many vectors. Too much RAM. Too slow.

@qdrant_engine + ColBERT can be blazing fast, if you optimize right.

Here’s how to rerank like a pro without blowing up your memory:

TLDR: Visual RAG works.

But don’t scale it naïvely.

Pooling + rerank = fast, accurate, scalable document search.

This is the cheat code for ColPali at scale.

Start here before your vector DB explodes.

Full guide: qdrant.tech/documentati...

Qdrant supports multivector formats natively.

Use MAX_SIM with mean-pooled + original vector fields.

Enable HNSW only for pooled vectors.

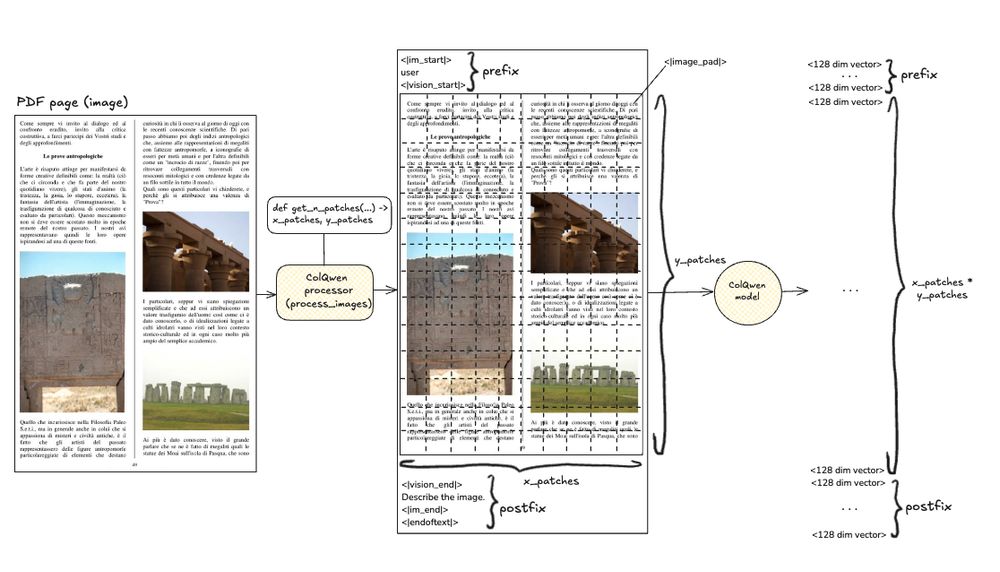

ColPali’s row-column patch structure makes this easy to implement.

Step 1: Use mean-pooled vectors for first-pass retrieval.

Step 2: Rerank results with full multivectors from ColPali/ColQwen.

Result: 10x faster indexing.

Same retrieval quality.

No hallucinated tables, no dropped layout info.
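The two steps can be sketched end to end in plain Python. This is a toy, brute-force version under stated assumptions: `two_stage_search`, the `pooled`/`full` keys, and the data are hypothetical, and in Qdrant the first stage would run over an HNSW index rather than a loop.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def max_sim(query_vectors, doc_vectors):
    """Late-interaction score: best doc match per query vector, summed."""
    return sum(max(dot(q, d) for d in doc_vectors) for q in query_vectors)


def two_stage_search(query_vectors, pages, prefetch_limit, final_limit):
    """Stage 1 scores the small pooled vectors; stage 2 reranks with full ones."""
    candidates = sorted(
        pages,
        key=lambda pid: max_sim(query_vectors, pages[pid]["pooled"]),
        reverse=True,
    )[:prefetch_limit]
    reranked = sorted(
        candidates,
        key=lambda pid: max_sim(query_vectors, pages[pid]["full"]),
        reverse=True,
    )
    return reranked[:final_limit]


# Made-up pages: "full" is the per-patch multivector, "pooled" its compressed form.
pages = {
    "p1": {"full": [[1.0, 0.0], [0.0, 1.0]], "pooled": [[0.5, 0.5]]},
    "p2": {"full": [[1.0, 0.0], [1.0, 0.0]], "pooled": [[1.0, 0.0]]},
    "p3": {"full": [[-1.0, 0.0], [0.0, -1.0]], "pooled": [[-0.5, -0.5]]},
}
query = [[1.0, 0.0], [0.0, 1.0]]

result = two_stage_search(query, pages, prefetch_limit=2, final_limit=2)
print(result)
```

The pooled pass only needs to keep the right pages in the candidate list; the full multivectors settle the final order.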

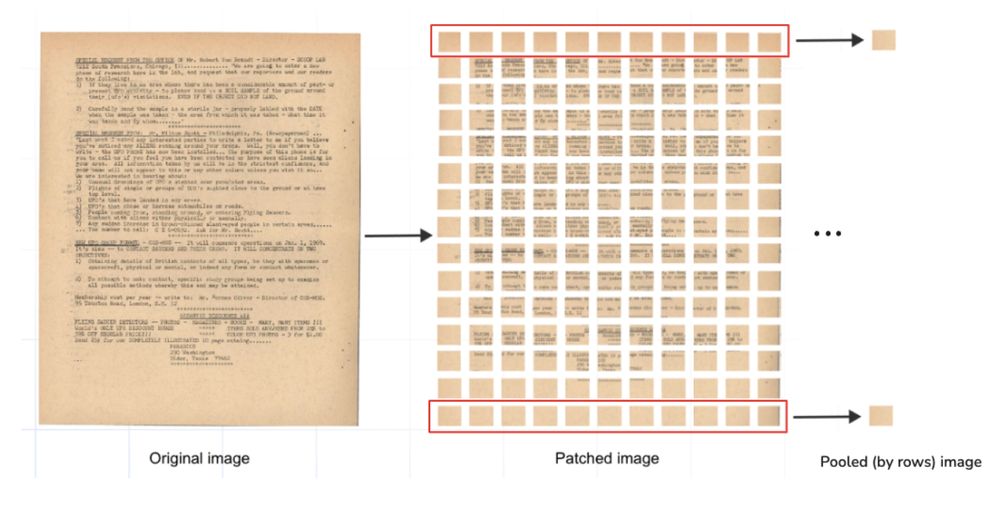

ColPali generates ~1,000 vectors per page.

ColQwen brings it down to ~700.

But that’s still too heavy for HNSW indexing at scale.

The fix: mean pooling.

Compress multivectors by rows/columns before indexing.
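A minimal sketch of row-wise mean pooling, assuming the patch embeddings arrive in row-major order for an `n_rows` x `n_cols` grid. Real ColPali/ColQwen outputs also carry special tokens and use model-specific grid sizes, which this toy ignores; `mean_pool_rows` is a hypothetical helper name.

```python
def mean_pool_rows(patch_vectors, n_rows, n_cols):
    """Average the patch embeddings in each grid row, giving n_rows vectors."""
    if len(patch_vectors) != n_rows * n_cols:
        raise ValueError("expected n_rows * n_cols patch vectors")
    dim = len(patch_vectors[0])
    pooled = []
    for r in range(n_rows):
        row = patch_vectors[r * n_cols:(r + 1) * n_cols]
        pooled.append([sum(vec[i] for vec in row) / n_cols for i in range(dim)])
    return pooled


# Toy 2x3 patch grid with 2D embeddings (made-up values).
patches = [
    [1.0, 0.0], [3.0, 0.0], [2.0, 0.0],   # row 0
    [0.0, 2.0], [0.0, 4.0], [0.0, 6.0],   # row 1
]

pooled = mean_pool_rows(patches, n_rows=2, n_cols=3)
print(pooled)
```

Six patch vectors become two row vectors here. Pooling a real page's patch grid by rows or columns shrinks the indexed multivector from roughly a thousand vectors to a few dozen.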

ColPali is cool.

But scaling it to 20,000+ PDF pages? Brutal.

VLMs like ColPali and ColQwen create thousands of vectors per page.

@qdrant_engine solves this, but only if you optimize smart.

Here’s how to scale visual PDF retrieval without melting your GPU:

See the full implementation here: decodingml.substack.com/p/the-king-...

Why this matters:

ColPali unlocks true page-level understanding.

No more hallucinated rows or broken figures.

Text-only RAG is obsolete.

The pipeline:

PDF ➝ image ➝ ColQwen 2.5 ➝ vector embeddings ➝ @qdrant_engine.

Then an LLM reads the actual page images to answer questions.

It’s like ChatGPT, but with eyes.

Tables and diagrams are no longer black boxes.

ColPali skips text extraction.

It feeds document images into a vision-language model.

Every table, chart, and layout element is preserved.

Embeddings capture not just content, but spatial relationships.

Visual memory for documents is finally real.

RAG is broken for complex docs.

Tables? Lost.

Layouts? Scrambled.

Now there's ColPali, a breakthrough visual RAG system that sees like a human.

It doesn’t read documents. It looks at them.

Here’s how it changes everything:

Anthropic’s move is strategic and could reshape AI defense norms.

Their partnership with Palantir and AWS is a game-changer.

This isn't just business.

It's about staying ahead in AI safety.

This isn’t just about Anthropic – the trend is widespread.

OpenAI, Meta, and Google are also eyeing defense.

The intersection of AI and national security is heating up.

Powerhouses are aligning their models with defense needs.

Both AI and national security are evolving fast.

Anthropic’s new models focus on security applications.

They've added Fontaine to boost strategic decisions.

His past includes advising Senator McCain.

This move strengthens Anthropic’s governance and safety focus.