The Principles of Diffusion Models

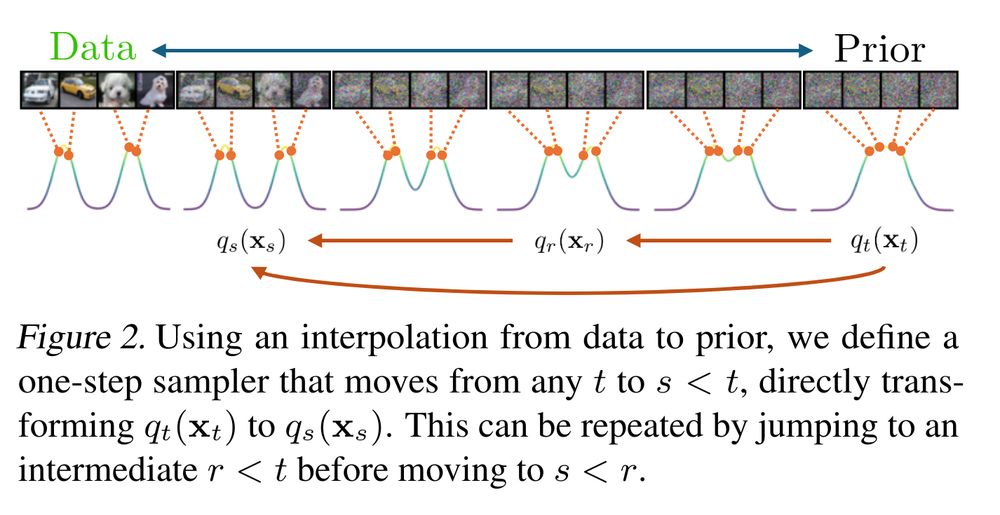

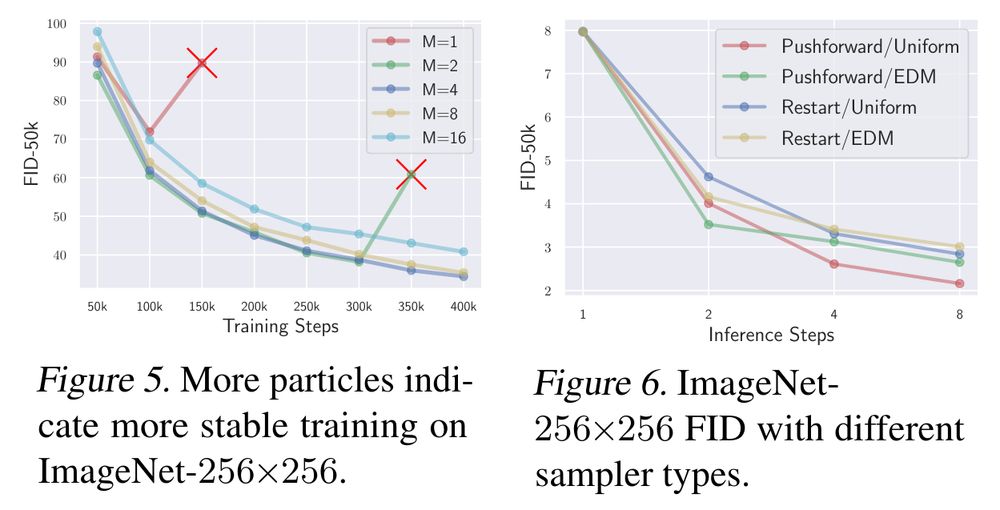

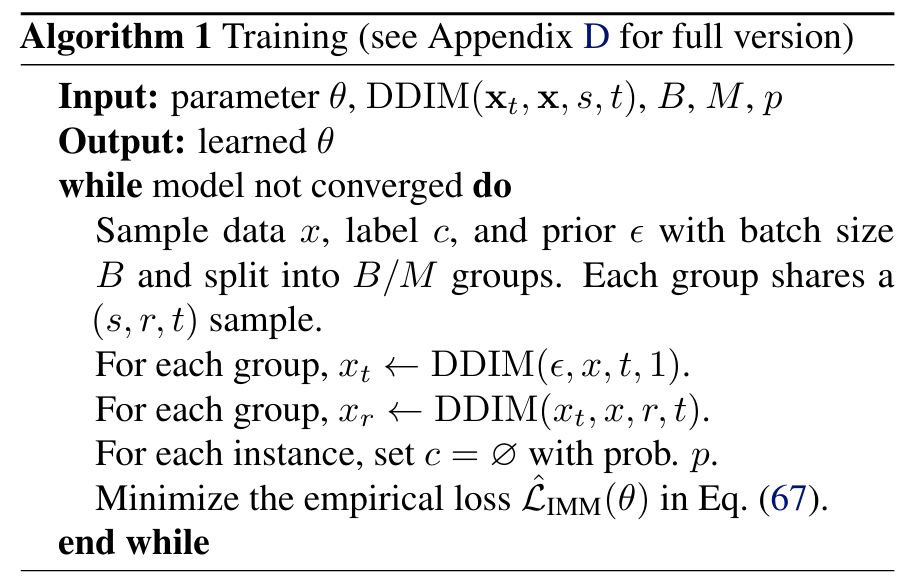

This monograph presents the core principles that have guided the development of diffusion models, tracing their origins and showing how diverse formulations arise from shared mathematical ideas. Diffu...

"The Principles of Diffusion Models" by Chieh-Hsin Lai, Yang Song, Dongjun Kim, Yuki Mitsufuji, Stefano Ermon. arxiv.org/abs/2510.21890

It might not be the easiest intro to diffusion models, but this monograph is an amazing deep dive into the math behind them and all their nuances.

28.10.2025 08:35 — 👍 36 🔁 12 💬 1 📌 1

Video models are zero-shot learners and reasoners

Fascinating new paper from Google DeepMind which makes a very convincing case that their Veo 3 model - and generative video models in general - serve a similar role in …

Made some notes on the new DeepMind paper "Video models are zero-shot learners and reasoners" - it makes a convincing case that generative video models are to vision problems what LLMs were to NLP problems: single models that can solve a wide array of challenges simonwillison.net/2025/Sep/27/...

28.09.2025 00:29 — 👍 89 🔁 15 💬 1 📌 3

As AI agents face increasingly long and complex tasks, decomposing them into subtasks becomes increasingly appealing.

But how do we discover such temporal structure?

Hierarchical RL provides a natural formalism, yet many questions remain open.

Here's our overview of the field🧵

27.06.2025 20:15 — 👍 34 🔁 10 💬 1 📌 3

What is the probability of an image? What do the highest and lowest probability images look like? Do natural images lie on a low-dimensional manifold?

In a new preprint with Zahra Kadkhodaie and @eerosim.bsky.social, we develop a novel energy-based model in order to answer these questions: 🧵

06.06.2025 22:11 — 👍 70 🔁 23 💬 1 📌 1

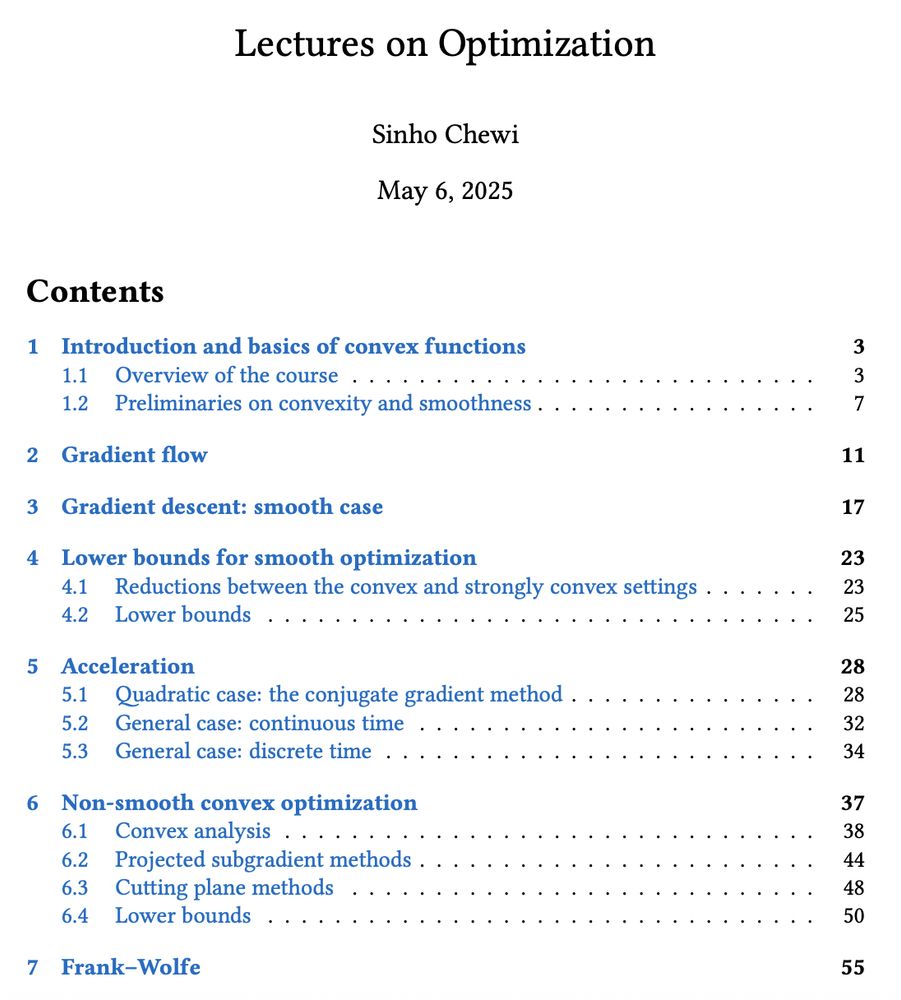

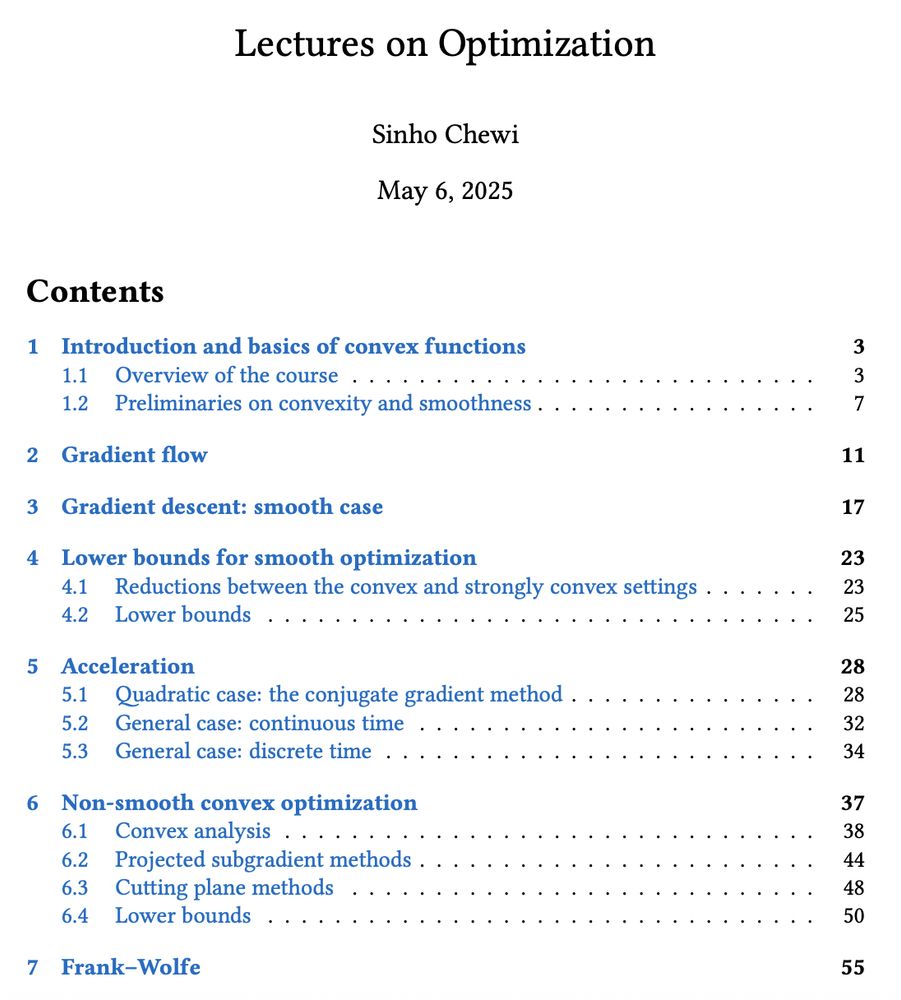

pretty sweet:

chewisinho.github.io/opt_notes_fi...

23.05.2025 15:33 — 👍 54 🔁 11 💬 1 📌 0

Happy to be recognized among outstanding reviewers 🙂

10.05.2025 15:16 — 👍 1 🔁 0 💬 0 📌 0

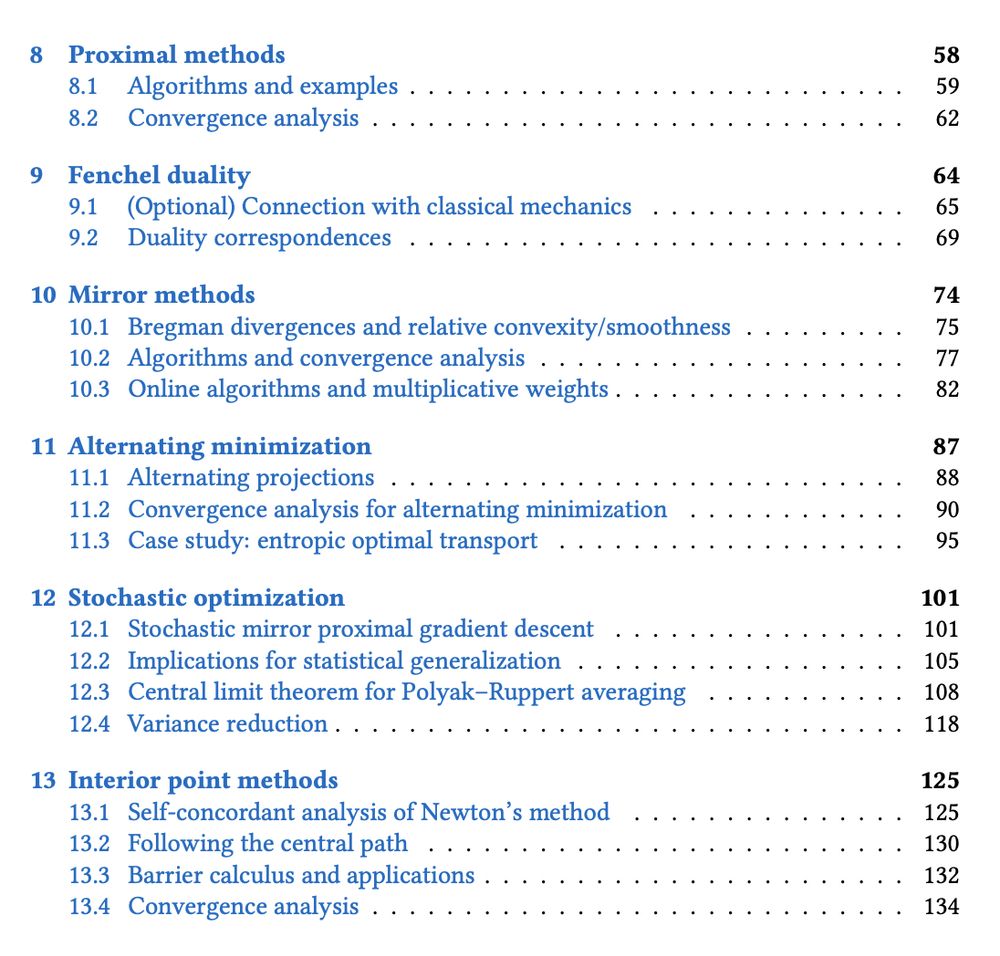

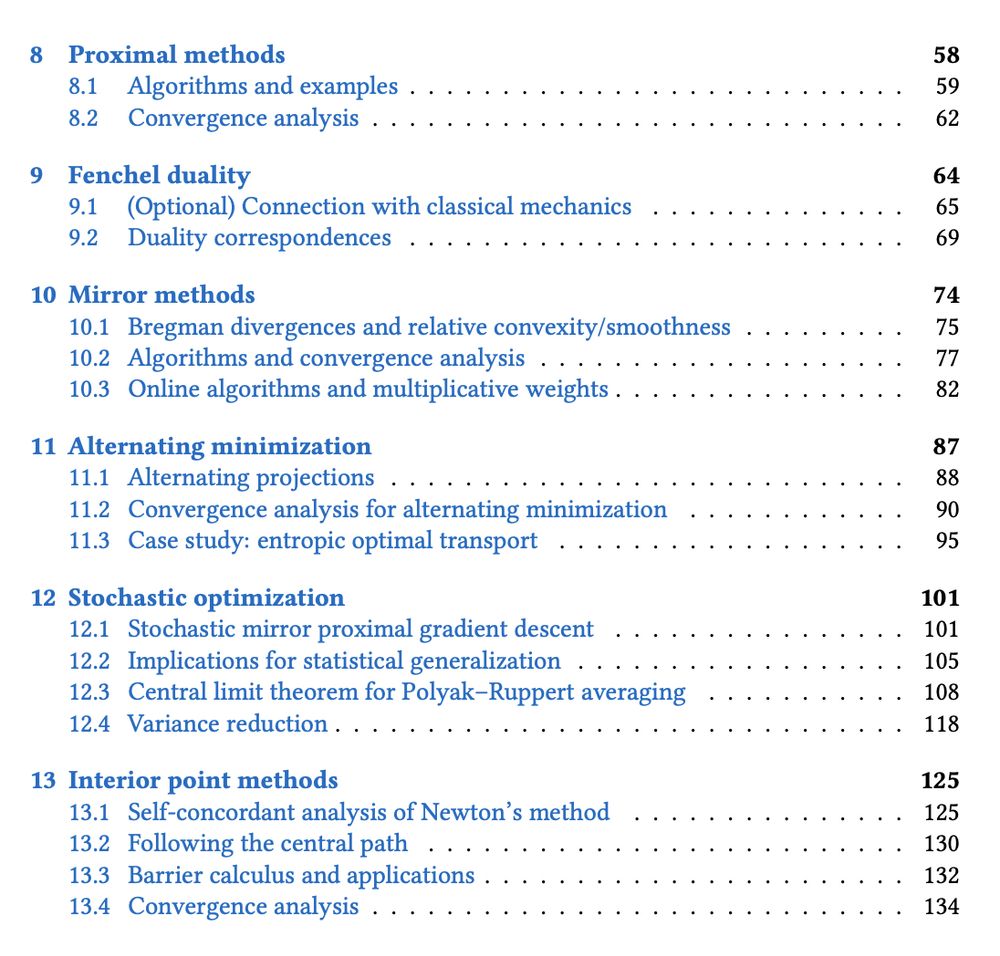

Enjoying reading this. Clarifies some nice connections between scoring rules, probabilistic divergences, convex analysis, and so on. Should read it even more closely, to be honest!

01.05.2025 22:22 — 👍 32 🔁 3 💬 6 📌 1

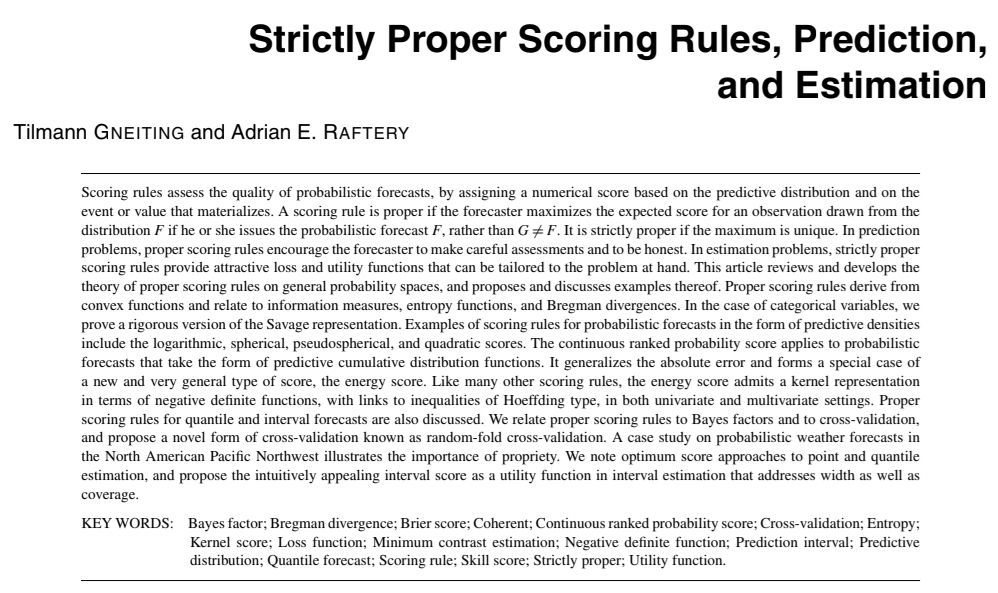

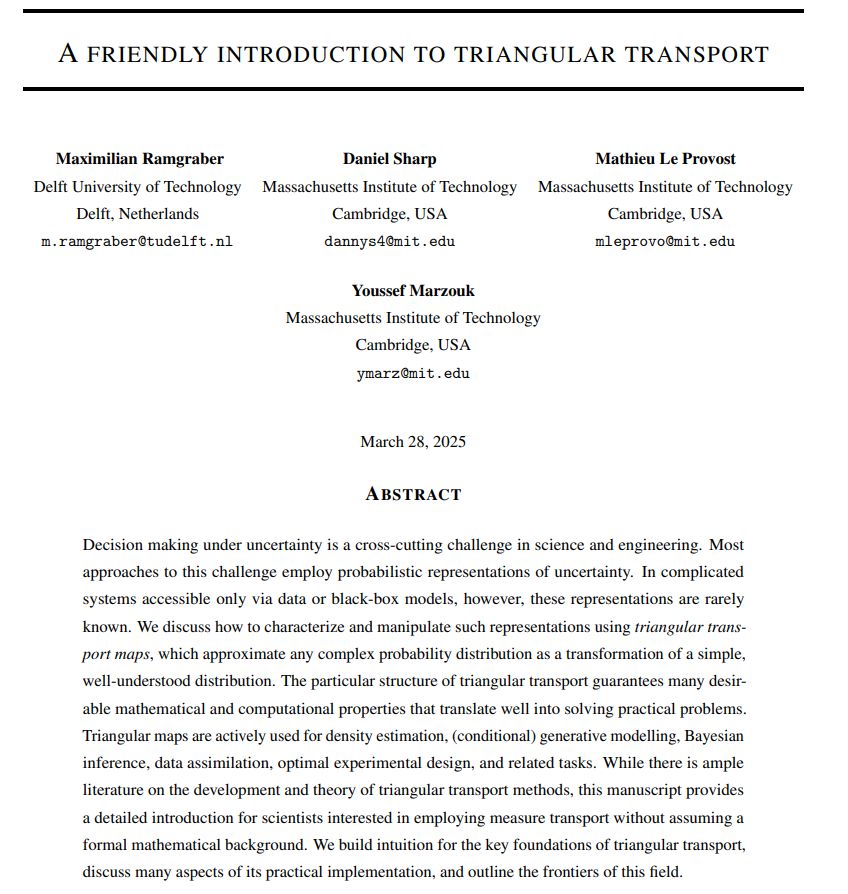

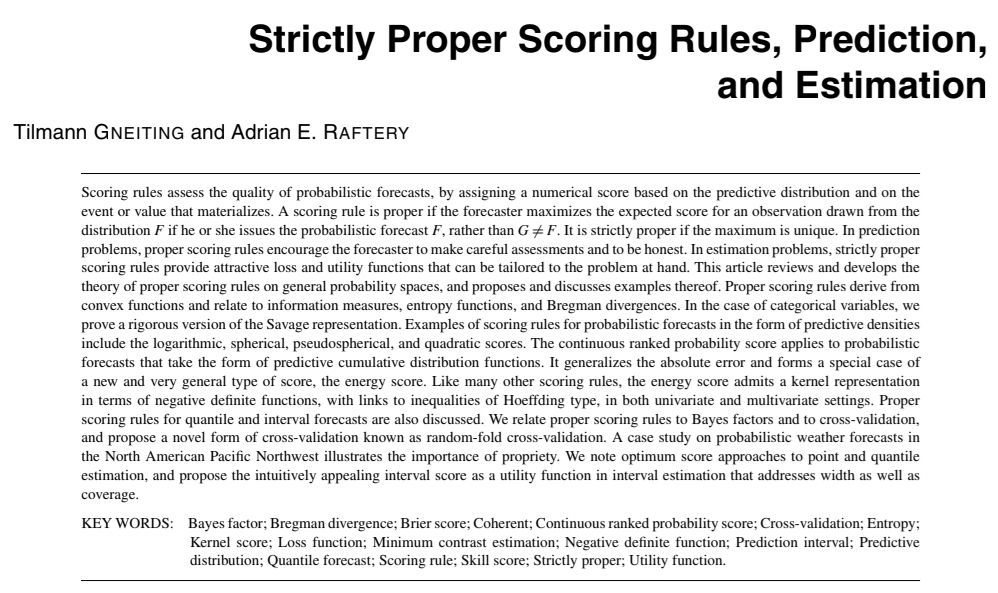

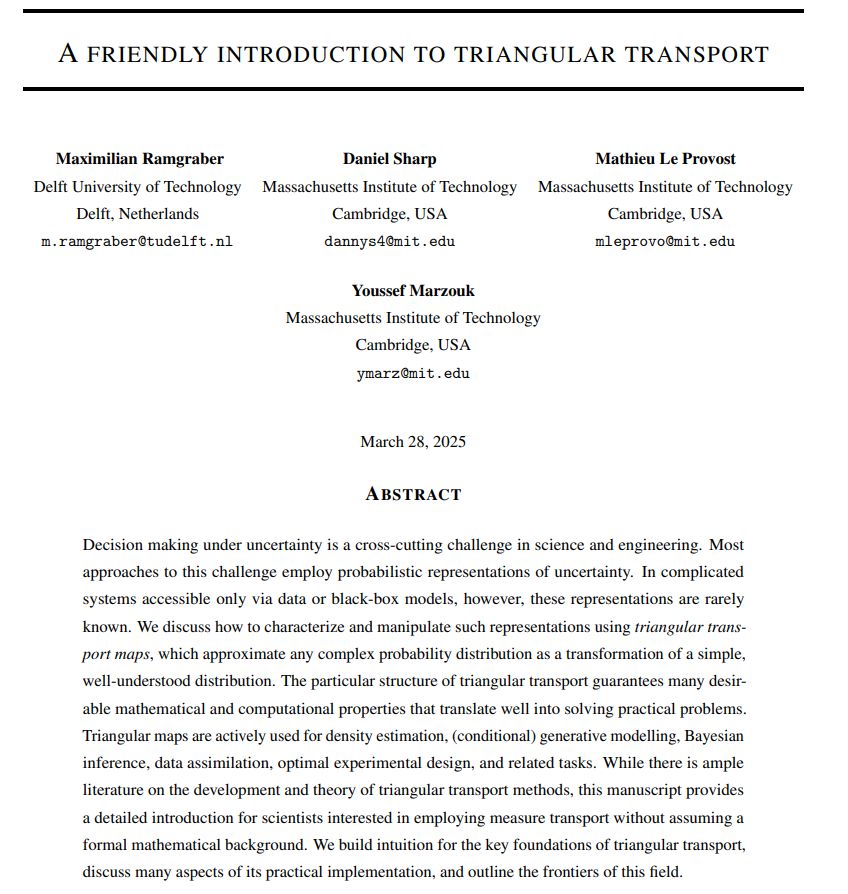

Nice tutorial arxiv.org/abs/2503.21673

29.03.2025 18:26 — 👍 12 🔁 4 💬 1 📌 0

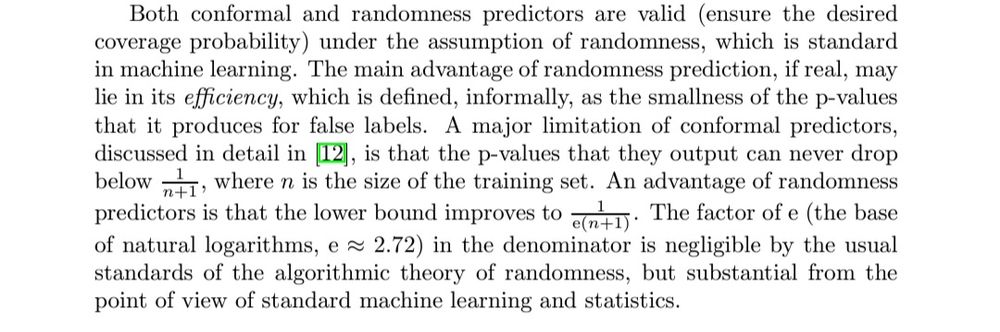

Yet another short paper on randomness predictors (of which conformal predictors are a subclass) by Vovk, focusing on an inductive variant. arxiv.org/abs/2503.02803

06.03.2025 06:01 — 👍 1 🔁 1 💬 1 📌 1

I already advertised this document when I posted it on arXiv, and again when it was published.

This week, with the agreement of the publisher, I uploaded the published version to arXiv.

Fewer typos, more references and additional sections, including PAC-Bayes Bernstein.

arxiv.org/abs/2110.11216

05.03.2025 01:16 — 👍 109 🔁 22 💬 1 📌 3

1/n🚀If you’re working on generative image modeling, check out our latest work! We introduce EQ-VAE, a simple yet powerful regularization approach that makes latent representations equivariant to spatial transformations, leading to smoother latents and better generative models.👇

18.02.2025 14:26 — 👍 18 🔁 8 💬 1 📌 1

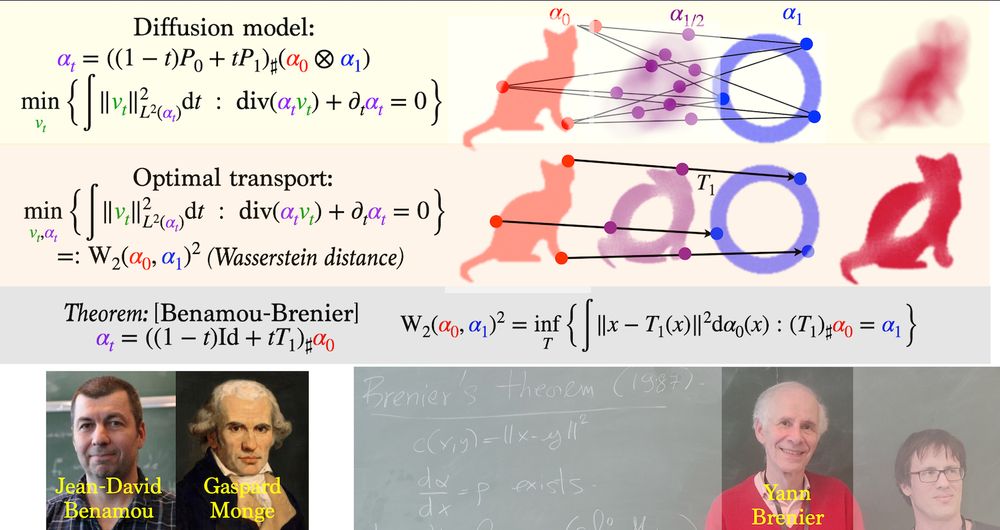

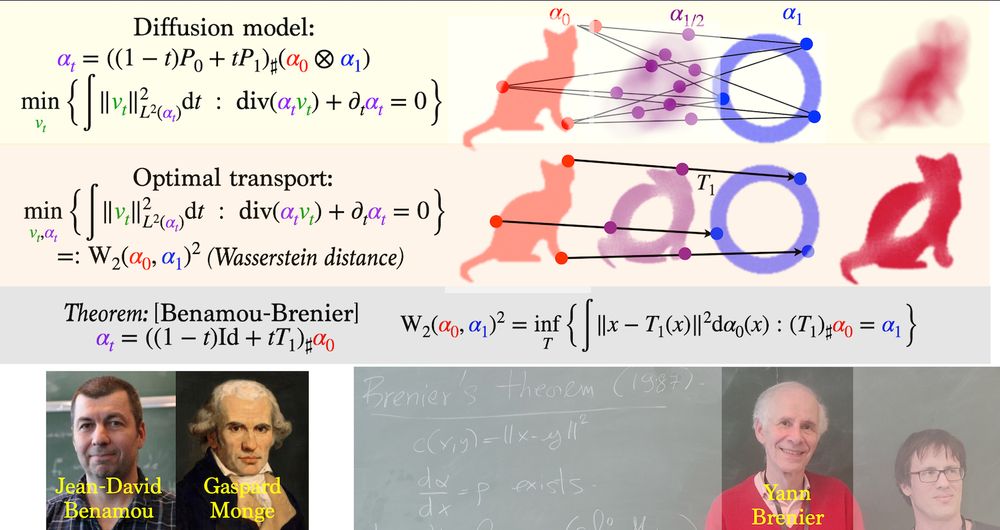

Slides for a general introduction to the use of Optimal Transport methods in learning, with an emphasis on diffusion models, flow matching, training 2 layers neural networks and deep transformers. speakerdeck.com/gpeyre/optim...

15.01.2025 19:08 — 👍 126 🔁 26 💬 4 📌 1

I don’t get the DeepSeek freak-out. Chinese orgs have been making models of all sorts that were on par with those of US orgs for a while now.

28.01.2025 00:48 — 👍 46 🔁 5 💬 2 📌 0

Convergence of Statistical Estimators via Mutual Information Bounds

Recent advances in statistical learning theory have revealed profound connections between mutual information (MI) bounds, PAC-Bayesian theory, and Bayesian nonparametrics. This work introduces a novel...

Yesterday we uploaded a paper to arXiv on a variant of the "Mutual Information bound" tailored to analyze statistical estimators (MLE, Bayes and variational Bayes, etc.).

I suppose I should advertise it after the holidays, but in case you are still online today:

arxiv.org/abs/2412.18539

25.12.2024 11:08 — 👍 34 🔁 4 💬 1 📌 1

100%

which reminded me of @ardemp.bsky.social's rule #1 on how to science: don’t be too busy

Being too busy (with noise) = less time to read papers, less time to think and to connect the dots, less time for creative work!

15.12.2024 14:28 — 👍 30 🔁 12 💬 0 📌 4

Reinforcement Learning: An Overview

This manuscript gives a big-picture, up-to-date overview of the field of (deep) reinforcement learning and sequential decision making, covering value-based RL, policy-gradient methods, model-based methods, and various other topics.

arxiv.org/abs/2412.05265

09.12.2024 08:37 — 👍 54 🔁 8 💬 0 📌 1

I've been around the block a few times. When deep learning first became hot, many older colleagues bemoaned it as just tinkering + chain rule, and not intellectually satisfying. Then came SSL, equivariance, VAEs, GANs, neural ODEs, transformers, diffusion, etc. The richness was staggering.

🧵👇

06.12.2024 17:06 — 👍 62 🔁 7 💬 2 📌 0

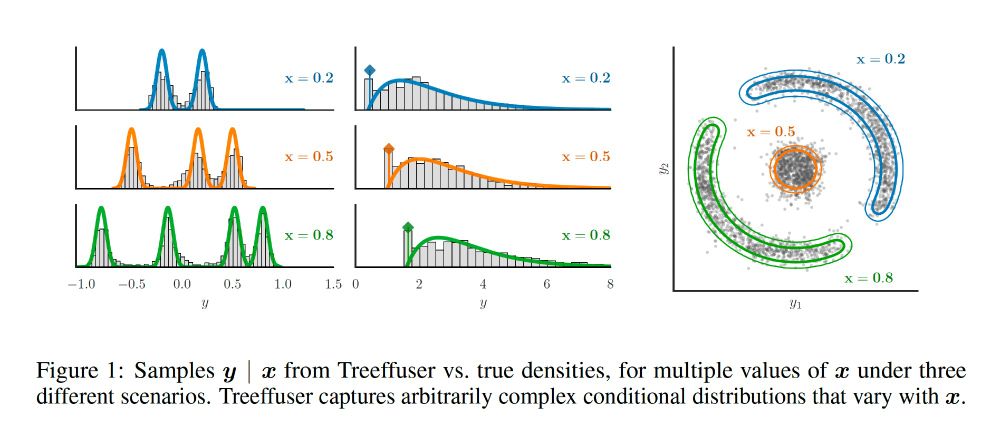

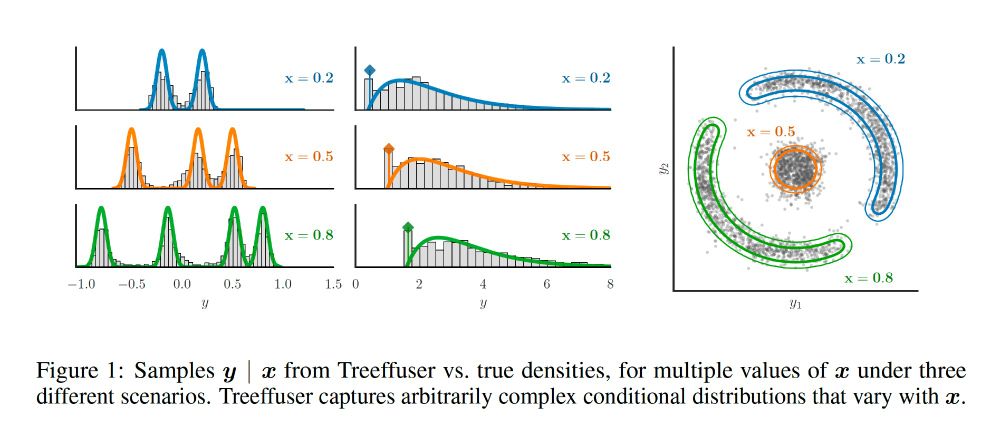

Samples y | x from Treeffuser vs. true densities, for multiple values of x under three different scenarios. Treeffuser captures arbitrarily complex conditional distributions that vary with x.

I am very excited to share our new NeurIPS 2024 paper + package, Treeffuser! 🌳 We combine gradient-boosted trees with diffusion models for fast, flexible probabilistic predictions and well-calibrated uncertainty.

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

02.12.2024 21:48 — 👍 153 🔁 23 💬 4 📌 4

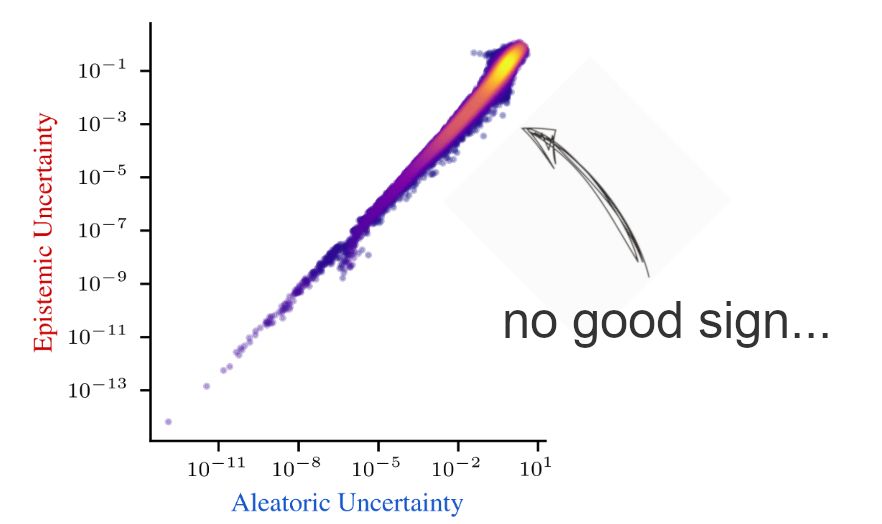

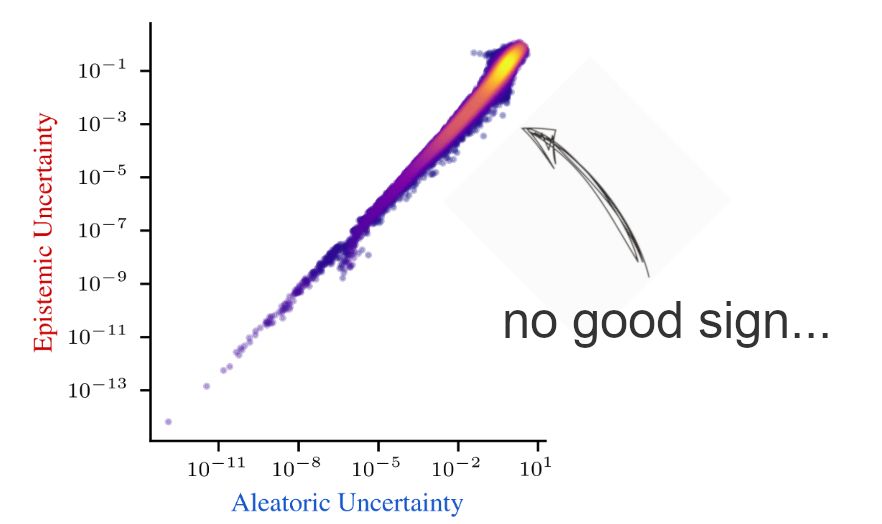

Proud to announce our NeurIPS spotlight, which was in the works for over a year now :) We dig into why decomposing aleatoric and epistemic uncertainty is hard, and what this means for the future of uncertainty quantification.

📖 arxiv.org/abs/2402.19460 🧵1/10

03.12.2024 09:45 — 👍 74 🔁 12 💬 3 📌 2

Optimal transport computes an interpolation between two distributions using an optimal coupling. Flow matching, on the other hand, uses a simpler “independent” coupling, which is the product of the marginals.

02.12.2024 12:46 — 👍 196 🔁 31 💬 9 📌 6
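To make the contrast concrete, here is a minimal 1-D sketch under stated assumptions; it is an illustration, not the method of any particular paper. In one dimension with squared-distance cost, the optimal coupling between two equal-size empirical samples pairs sorted values, while the independent coupling pairs them at random. The function names, the Gaussian source and the toy bimodal target are hypothetical choices made only for this example.

```python
import random

def independent_coupling(x0, x1, rng):
    """Pair samples at random, i.e. draw pairs from the product of the marginals."""
    shuffled = list(x1)          # copy so the caller's target list is untouched
    rng.shuffle(shuffled)
    return list(zip(x0, shuffled))

def ot_coupling_1d(x0, x1):
    """Optimal coupling for squared cost between two equal-size 1-D samples
    (assumption: 1-D only): match the k-th smallest source to the k-th smallest target."""
    return list(zip(sorted(x0), sorted(x1)))

def mean_sq_cost(pairs):
    """Average squared transport distance |x1 - x0|^2 under a given coupling."""
    return sum((b - a) ** 2 for a, b in pairs) / len(pairs)

def interpolate(pairs, t):
    """Straight-line interpolant x_t = (1 - t) * x0 + t * x1 for each coupled pair."""
    return [(1 - t) * a + t * b for a, b in pairs]

rng = random.Random(0)
n = 256
source = [rng.gauss(0.0, 1.0) for _ in range(n)]  # "noise" samples
target = [rng.choice([-3.0, 3.0]) + 0.3 * rng.gauss(0.0, 1.0) for _ in range(n)]  # toy bimodal "data"

indep = independent_coupling(source, target, rng)
ot = ot_coupling_1d(source, target)

print(f"independent coupling cost: {mean_sq_cost(indep):.3f}")
print(f"OT coupling cost:          {mean_sq_cost(ot):.3f}")
```

By the rearrangement inequality, the sorted pairing never costs more than any other pairing, and its straight-line paths do not cross, whereas random pairing produces many crossings. In higher dimensions sorting no longer gives the optimal coupling; one would instead solve an assignment problem, for example with `scipy.optimize.linear_sum_assignment` on a pairwise cost matrix.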

Our big_vision codebase is really good! And it's *the* reference for ViT, SigLIP, PaliGemma, JetFormer, ... including fine-tuning them.

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html

03.12.2024 00:18 — 👍 116 🔁 18 💬 3 📌 2

Anne Gagneux, Ségolène Martin, @quentinbertrand.bsky.social Remi Emonet and I wrote a tutorial blog post on flow matching: dl.heeere.com/conditional-... with lots of illustrations and intuition!

We got this idea after their cool work on improving Plug and Play with FM: arxiv.org/abs/2410.02423

27.11.2024 09:00 — 👍 354 🔁 102 💬 12 📌 11

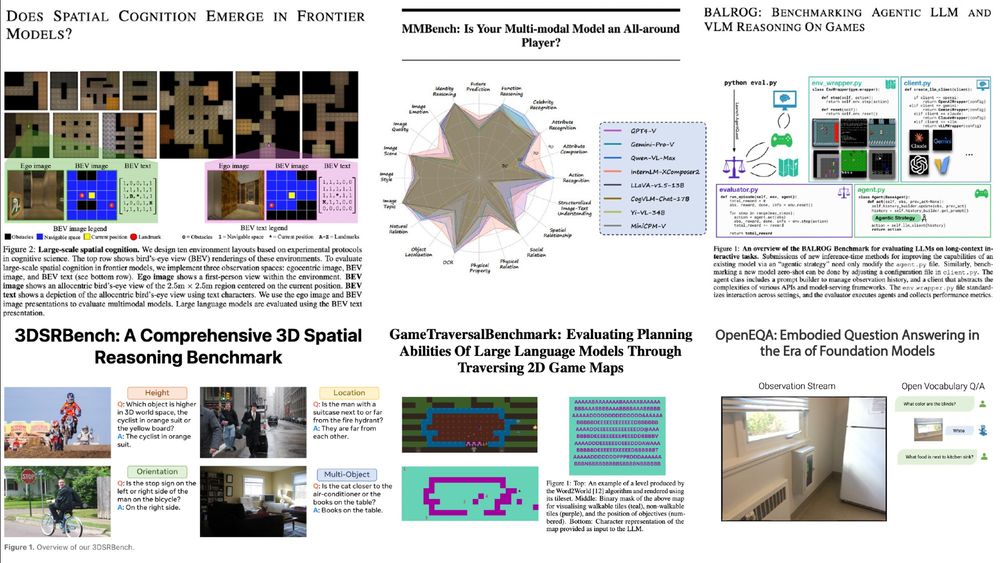

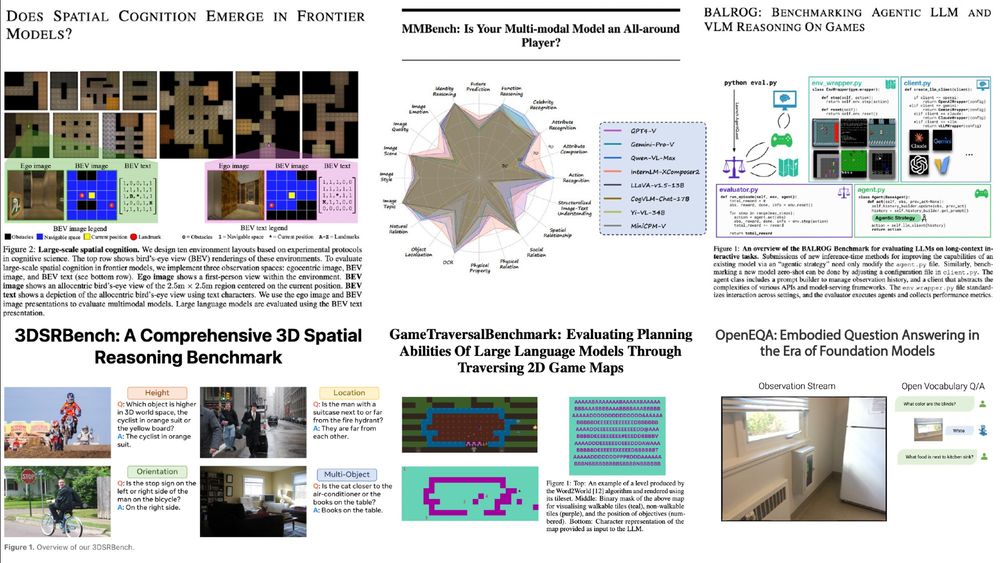

Updated: 6 benchmarks testing spatial and agent reasoning of LLMs/VLMs

arxiv.org/abs/2410.06468 does spatial cognition

arxiv.org/abs/2307.06281 MMBench

arxiv.org/abs/2411.13543 BALROG

arxiv.org/abs/2410.07765 GameTraversalBenchmark

3dsrbench.github.io 3DSRBenchmark

open-eqa.github.io Open-EQA

26.11.2024 08:25 — 👍 62 🔁 9 💬 2 📌 1

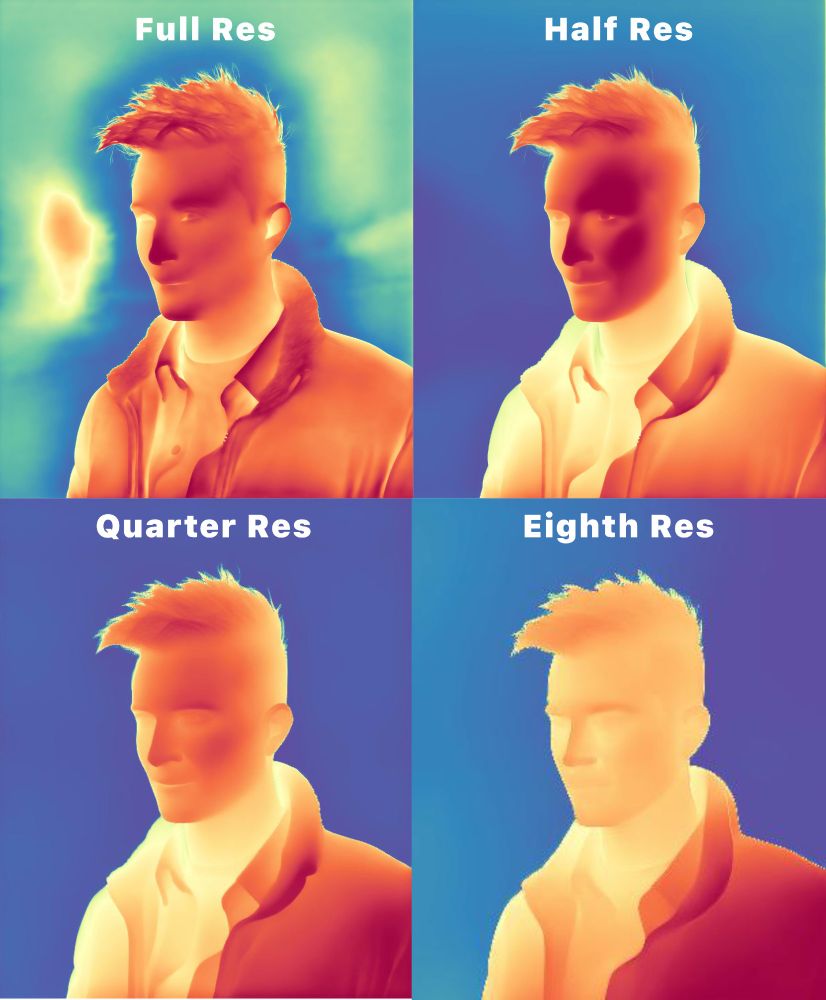

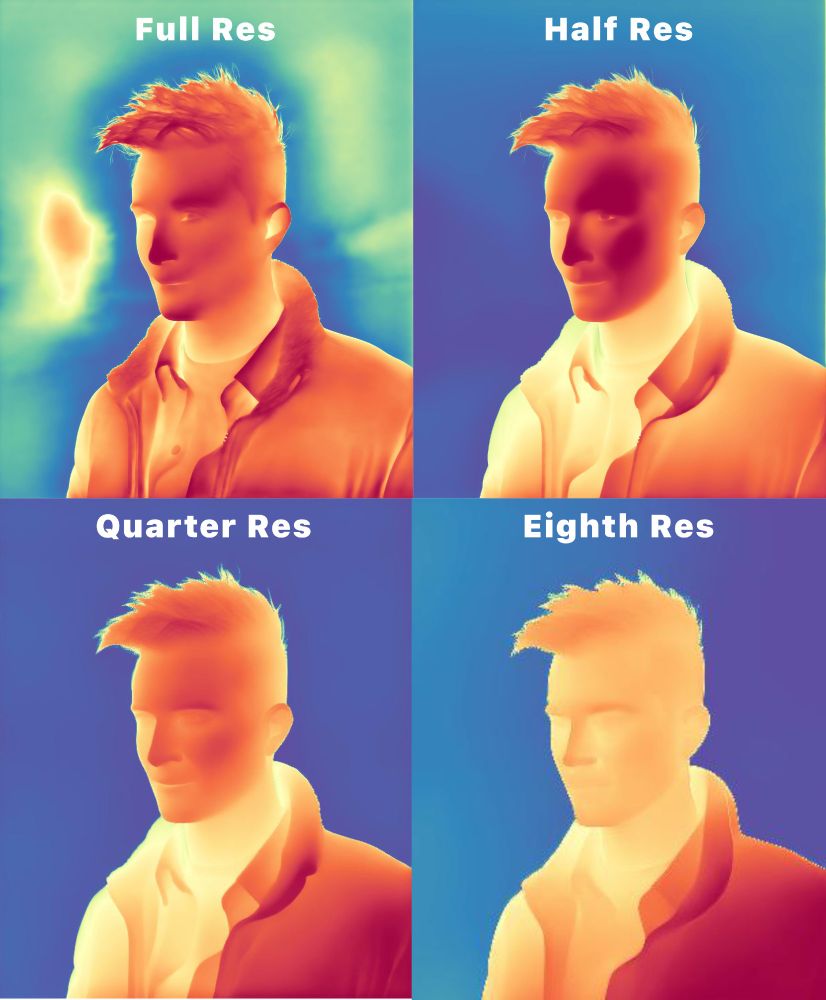

Yeah, looks like different image resolutions will give you entirely different depth maps.

26.11.2024 07:24 — 👍 70 🔁 4 💬 7 📌 1

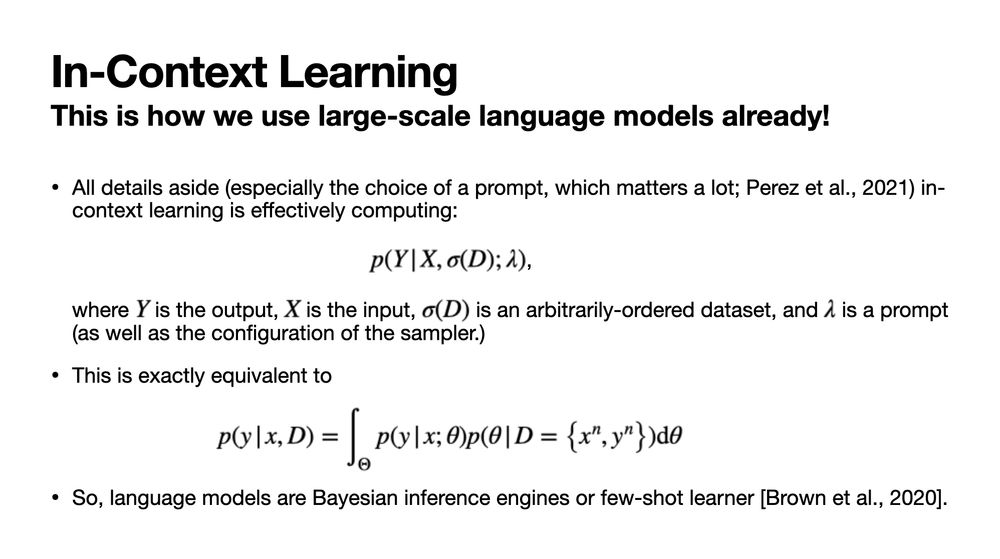

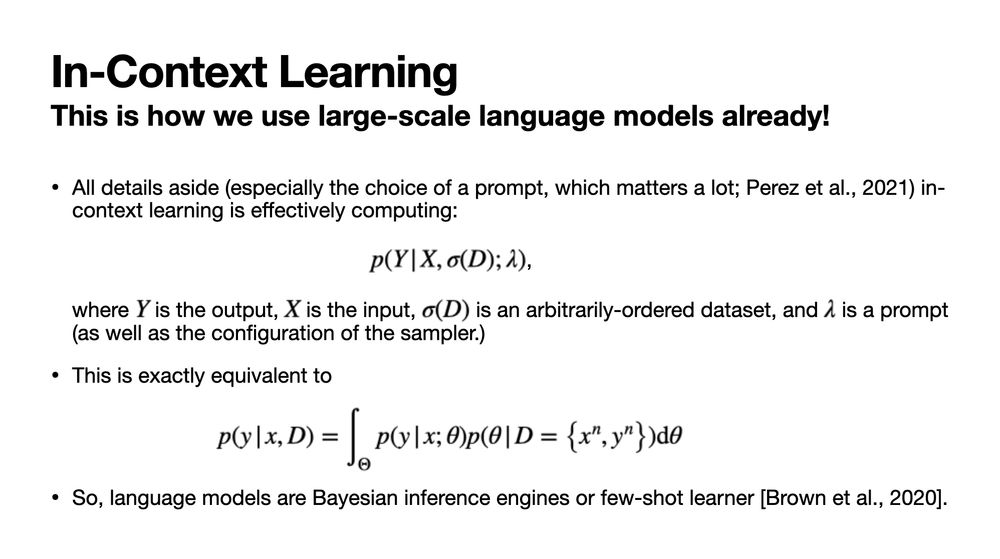

now, if we think of p(output | prompt, a few examples) as a predictive distribution p(y|x, D) ... it looks very much like learning to me :)

see e.g. my slide deck on drive.google.com/file/d/1B-Ka...

21.11.2024 00:43 — 👍 25 🔁 4 💬 3 📌 1
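For readers who want the Bayesian reading spelled out: the textbook posterior predictive distribution depends on the data D only through a posterior over parameters θ. The analogy in the post treats the examples in a prompt as D. The Beta–Bernoulli case below is chosen here purely as an illustration; it is not taken from the linked slides.

$$
p(y \mid x, \mathcal{D}) = \int p(y \mid x, \theta)\, p(\theta \mid \mathcal{D})\, d\theta,
\qquad
p(\theta \mid \mathcal{D}) \propto p(\theta) \prod_{i=1}^{n} p(y_i \mid x_i, \theta).
$$

$$
y_i \in \{0, 1\},\quad \theta \sim \mathrm{Beta}(\alpha, \beta),\quad k = \sum_{i=1}^{n} y_i
\;\;\Longrightarrow\;\;
p(y = 1 \mid \mathcal{D}) = \frac{\alpha + k}{\alpha + \beta + n}.
$$

With α = β = 1 and D containing 3 ones out of 4 examples, the prediction moves from 1/2 to 4/6 = 2/3: conditioning on a few examples changes the predictive distribution, which is the sense of "learning" the post appeals to.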

Assistant Professor at the Department of Computer Science, University of Liverpool.

https://lutzoe.github.io/

research @ Google DeepMind

Researcher @Microsoft; PhD @Harvard; Incoming Assistant Professor @MIT (Fall 2026); Human-AI Interaction, Worker-Centric AI

zbucinca.github.io

Probabilistic machine learning and its applications in AI, health, user interaction.

@ellisinstitute.fi, @ellis.eu, fcai.fi, @aifunmcr.bsky.social

Assistant Professor (Presidential Young Professor, PYP) at the National University of Singapore (NUS).

https://liuanji.github.io/

Postdoc at IBME in Oxford. Machine learning for healthcare.

https://www.fregu856.com/

Torr Vision Group (TVG) In Oxford @ox.ac.uk

We work on Computer Vision, Machine Learning, AI Safety and much more

Learn more about us at: https://torrvision.com

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

Professor in Machine Learning at Oxford

Involved in many startups, including FiveAI, Onfido, Oxsight, AIStetic, Eigent, etc.

I occasionally look here but am mostly on linkedin, find me there, www.linkedin.com/in/philip-torr-1085702

Assistant Prof of CS at the University of Waterloo, Faculty and Canada CIFAR AI Chair at the Vector Institute. Joining NYU Courant in September 2026. Co-EiC of TMLR. My group is The Salon. Privacy, robustness, machine learning.

http://www.gautamkamath.com

Lecturer in Maths & Stats at Bristol. Interested in probabilistic + numerical computation, statistical modelling + inference. (he / him).

Homepage: https://sites.google.com/view/sp-monte-carlo

Seminar: https://sites.google.com/view/monte-carlo-semina

Associate Professor in Machine Learning, Aalto University. ELLIS Scholar.

http://arno.solin.fi

The world's leading venue for collaborative research in theoretical computer science. Follow us at http://YouTube.com/SimonsInstitute.

Making robots part of our everyday lives. #AI research for #robotics. #computervision #machinelearning #deeplearning #NLProc #HRI Based in Grenoble, France. NAVER LABS R&D

europe.naverlabs.com

PhD student in Machine Learning @Warsaw University of Technology and @IDEAS NCBR

Math Assoc. Prof. (On leave, Aix-Marseille, France)

Teaching Project (non-profit): https://highcolle.com/

CS PhD student at the University of Birmingham. Research interests: automated machine learning/AutoAI (Bayesian optimization, GPs & meta-learning) and reinforcement learning. https://sites.google.com/view/zhaoyangwang/home