This result is particularly interesting for capabilities that are harder for models (like numerical reasoning or text rendering), as prompt-aware guidance boosts performance on these failure modes without retraining.

30.09.2025 16:00

Most diffusion-based models use a fixed (model-tuned) guidance schedule. We show that picking the guidance value during inference, conditioned on the prompt/capability, significantly improves performance.

arxiv.org/abs/2509.16131

30.09.2025 16:00
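Assuming the guidance in question is classifier-free guidance, the noise prediction at each denoising step is ε̂ = ε_u + w·(ε_c − ε_u), where ε_u is the unconditional prediction, ε_c the prompt-conditioned one, and w the guidance scale. A minimal sketch of making w depend on the prompt follows. The capability detector is a toy keyword heuristic and the per-capability scales are made-up placeholders; both are hypothetical and are not the method or values from arxiv.org/abs/2509.16131. For simplicity the sketch also holds w constant across denoising steps rather than varying it over a schedule.

```python
import numpy as np

# Hypothetical guidance scales per capability; illustrative placeholders, not tuned values.
GUIDANCE_BY_CAPABILITY = {
    "numerical": 9.0,
    "text_rendering": 10.0,
    "default": 7.5,
}

NUMBER_WORDS = {"one", "two", "three", "four", "five",
                "six", "seven", "eight", "nine", "ten"}


def detect_capability(prompt: str) -> str:
    """Toy keyword heuristic standing in for a real prompt/capability classifier."""
    words = [w.strip('.,!?;:"\'') for w in prompt.lower().split()]
    if any(w.isdigit() or w in NUMBER_WORDS for w in words):
        return "numerical"
    if '"' in prompt or "says" in words:
        return "text_rendering"
    return "default"


def cfg_combine(eps_uncond: np.ndarray, eps_cond: np.ndarray, w: float) -> np.ndarray:
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction."""
    return eps_uncond + w * (eps_cond - eps_uncond)


def prompt_aware_cfg(prompt: str, eps_uncond: np.ndarray, eps_cond: np.ndarray) -> np.ndarray:
    """Pick w from the prompt, then apply CFG (w held constant across steps in this sketch)."""
    w = GUIDANCE_BY_CAPABILITY[detect_capability(prompt)]
    return cfg_combine(eps_uncond, eps_cond, w)


# Usage with dummy noise predictions in place of a real denoiser's outputs.
eps_u = np.zeros(4)
eps_c = np.ones(4)
print(detect_capability("Three red apples on a table"))  # numerical
print(prompt_aware_cfg("A cat on a sofa", eps_u, eps_c))  # [7.5 7.5 7.5 7.5]
```

With w = 1 the combination reduces to the conditional prediction; larger w pushes samples harder toward the prompt, which is why a per-prompt choice can matter for capabilities like counting or text rendering.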

EVAL-FoMo 2 - Schedule

Date: June 11 (1:00pm - 6:00pm)

Our #CVPR2025 workshop on Emergent Visual Abilities and Limits of Foundation Models (EVAL-FoMo) is taking place this afternoon (1-6pm) in room 210.

Workshop schedule: sites.google.com/view/eval-fo...

11.06.2025 17:55

Also at #ICLR2025: see this work in action! We're demoing "Gecko", showing how we use capability-based evaluators to help users customize evals & select models. 🦎

Find us at the Google booth, Fri 4/26, 12:00-12:30 PM.

23.04.2025 21:23

At #ICLR2025, we're diving into what makes prompt adherence evaluators work for image/video generation.

Check out our poster Friday at 3 PM: iclr.cc/virtual/2025/p… 🦎

23.04.2025 21:23

Generative models are powerful evaluators/verifiers, impacting evaluation and post-training. Yet, making them effective, particularly for highly similar models/checkpoints, is challenging. The devil is in the details.

23.04.2025 21:23

I know multiple people who need to hear this piped into their offices during working hours

10.03.2025 05:02

Our 2nd Workshop on Emergent Visual Abilities and Limits of Foundation Models (EVAL-FoMo) is accepting submissions. We are looking forward to talks by our amazing speakers that include @saining.bsky.social, @aidanematzadeh.bsky.social, @lisadunlap.bsky.social, and @yukimasano.bsky.social. #CVPR2025

13.02.2025 16:02

ICLR 2025 Financial Assistance

if you would like to attend #ICLR2025 but have financial barriers, apply for financial assistance!

our priority categories are student authors and contributors from underrepresented demographic groups & geographic regions.

deadline is march 2nd.

iclr.cc/Conferences/...

21.01.2025 14:34

Our representational alignment workshop returns to #ICLR2025! Submit your work on how ML/cogsci/neuro systems represent the world & what shapes these representations 💭🧠🤖

w/ @thisismyhat.bsky.social, @dotadotadota.bsky.social, @sucholutsky.bsky.social, @lukasmut.bsky.social, @siddsuresh97.bsky.social

16.01.2025 23:35

The RE application is now open: boards.greenhouse.io/deepmind/job...

And here is the link to the RS position:

boards.greenhouse.io/deepmind/job...

08.01.2025 12:23

What was the most impactful/visible/useful release on evaluation in AI in 2024?

06.01.2025 12:10

Felix — Jane X. Wang

From the moment I heard him give a talk, I knew I wanted to work with Felix. His ideas about generalization and situatedness made explicit thoughts that had been swirling around in my head, incohe...

A brilliant colleague and wonderful soul Felix Hill recently passed away. This was a shock and in an effort to sort some things out, I wrote them down. Maybe this will help someone else, but at the very least it helped me. Rest in peace, Felix, you will be missed. www.janexwang.com/blog/2025/1/...

03.01.2025 04:02

Felix Hill and some other DMers and I after cold water swimming at Parliament Hill Lido a few years ago

Felix Hill was such an incredible mentor — and occasional cold water swimming partner — to me. He's a huge part of why I joined DeepMind and how I've come to approach research. Even a month later, it's still hard to believe he's gone.

02.01.2025 19:01

Beautiful crow against a black background

It seems to me that the time is ripe for a Bluesky thread about how—and maybe even why—to befriend crows.

(1/n)

20.08.2023 01:55

NeurIPS 2024 Workshop on Adaptive Foundation Models

I've been getting a lot of questions about autoregression vs diffusion at #NeurIPS2024 this week! I'm speaking at the adaptive foundation models workshop at 9AM tomorrow (West Hall A), about what happens when we combine modalities and modelling paradigms.

adaptive-foundation-models.org

14.12.2024 04:02

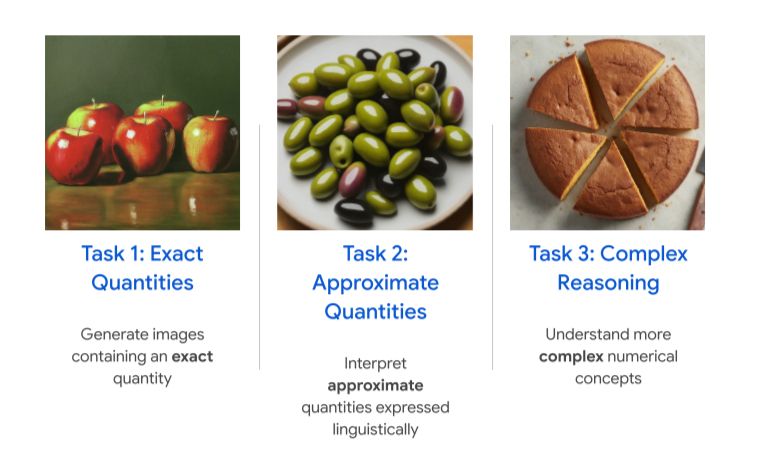

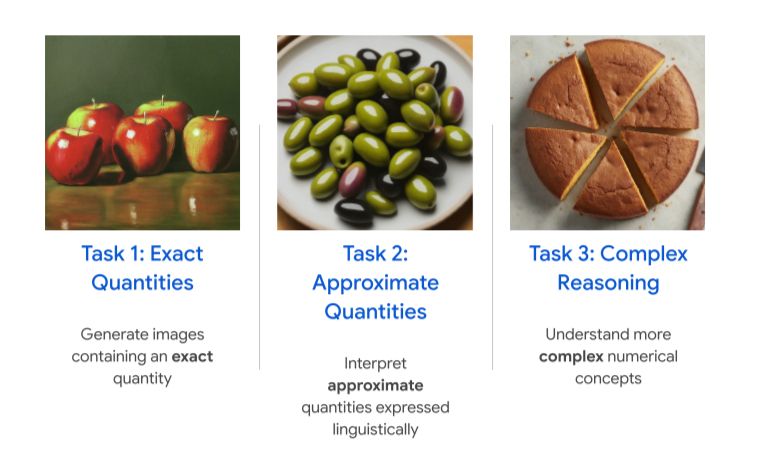

Our main task categories

We design 3 main tasks with varying degrees of difficulty and evaluate 13 models across different families. Models show rudimentary numerical reasoning skills, limited to small numbers and simple prompt formats; many models are affected by non-numerical prompt manipulations.

09.12.2024 19:08
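A finding like "limited to small numbers" comes from breaking accuracy down by the requested count. A minimal sketch of that breakdown, assuming each generated image has already been reduced to a (target_count, observed_count) pair. GeckoNum's actual tasks and annotations are richer than this, so the record format and the function name here are hypothetical, and the toy records are made up purely to show the call.

```python
from collections import defaultdict


def exact_count_accuracy(records):
    """Fraction of images whose annotated object count equals the requested count,
    grouped by the requested count.

    `records` is an iterable of (target_count, observed_count) pairs; this format is a
    hypothetical simplification, not the GeckoNum annotation schema.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for target, observed in records:
        totals[target] += 1
        hits[target] += int(observed == target)
    # Sort by target count so trends across small vs. large numbers are easy to read.
    return {t: hits[t] / totals[t] for t in sorted(totals)}


# Made-up records purely to show the call; these are not benchmark results.
toy = [(2, 2), (2, 2), (2, 3), (5, 4), (5, 5)]
print(exact_count_accuracy(toy))
```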

GitHub - google-deepmind/geckonum_benchmark_t2i: GeckoNum Benchmark for T2I Model Eval.


What do text-to-image models know about numbers? Find out in our new paper 🦎 "Evaluating Numerical Reasoning in Text-to-Image Models", to be presented at #NeurIPS2024 (Wed 4:30-7:30 PM, #5304).

Dataset: github.com/google-deepm... (1386 prompts, 52,721 images, 479,570 annotations)

09.12.2024 19:08

NeurIPS Tutorial: Experimental Design and Analysis for AI Researchers (NeurIPS 2024)

Stop by our #NeurIPS tutorial on Experimental Design & Analysis for AI Researchers! 📊

neurips.cc/virtual/2024/tutorial/99528

Are you an AI researcher interested in comparing models/methods? Then your conclusions rely on well-designed experiments. We'll cover best practices + case studies. 👇

07.12.2024 18:15
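One standard tool when comparing two models scored on the same prompts is a paired bootstrap confidence interval on the difference in their scores. A minimal sketch in Python with NumPy; the function name and defaults are illustrative assumptions, and this is not necessarily the procedure the tutorial recommends.

```python
import numpy as np


def paired_bootstrap_diff(scores_a, scores_b, n_resamples=10_000, alpha=0.05, seed=0):
    """Mean per-item difference (A - B) and a percentile bootstrap CI for it.

    Both score lists must cover the same items in the same order, so resampling
    item indices keeps each A/B pair together.
    """
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    if a.shape != b.shape or a.ndim != 1 or a.size == 0:
        raise ValueError("need two non-empty 1-D score arrays over the same items")
    diffs = a - b
    rng = np.random.default_rng(seed)
    # Each row is one bootstrap resample of item indices, drawn with replacement.
    idx = rng.integers(0, diffs.size, size=(n_resamples, diffs.size))
    boot_means = diffs[idx].mean(axis=1)
    lo, hi = np.quantile(boot_means, [alpha / 2, 1 - alpha / 2])
    return float(diffs.mean()), (float(lo), float(hi))
```

Resampling item indices, rather than each model's scores independently, is what makes the comparison paired: per-prompt difficulty is shared by both models, so it cancels in the differences instead of inflating the interval. If the interval excludes 0, the gap is unlikely to be resampling noise at the chosen level.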

If you will be at #NeurIPS2024 @neuripsconf.bsky.social and would like to come see our models in action, come say hi 👋 and check out our demo at the GDM booth!

Wednesday, Dec. 11th @ 9:30-10:00.

Lots of other great things to see as well! Check it out: 👇

deepmind.google/discover/blo...

06.12.2024 12:42

Research Scientist, Language

London, UK

I am hiring for RS/RE positions! If you are interested in language-flavored multimodal learning, evaluation, or post-training, apply here 🦎 boards.greenhouse.io/deepmind/job...

I will also be at #NeurIPS2024, so come say hi! (Please email me to find time to chat.)

06.12.2024 23:07

Our big_vision codebase is really good! And it's *the* reference for ViT, SigLIP, PaliGemma, JetFormer, ... including fine-tuning them.

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html

03.12.2024 00:18

Co-founder and CEO, Mistral AI

U.S. Senator, former teacher. Wife, mom (Amelia, Alex, Bailey, CFPB), grandmother, and Okie. She/her. Official campaign account.

AI @ OpenAI, Tesla, Stanford

a mediocre combination of a mediocre AI scientist, a mediocre physicist, a mediocre chemist, a mediocre manager and a mediocre professor.

see more at https://kyunghyuncho.me/

AI for storytelling, games, explainability, safety, ethics. Professor at Georgia Tech. Associate Director of ML Center at GT. Time travel expert. Geek. Dad. he/him

Professor, UW Biology / Santa Fe Institute

I study how information flows in biology, science, and society.

Book: *Calling Bullshit*, http://tinyurl.com/fdcuvd7b

LLM course: https://thebullshitmachines.com

Corvids: https://tinyurl.com/mr2n5ymk

he/him

CS Faculty at Mila and McGill, interested in Graphs and Complex Data, AI/ML, Misinformation, Computational Social Science and Online Safety

Research Scientist at Google DeepMind

https://e-bug.github.io

pedestrian and straphanger

Director, MIT Computational Psycholinguistics Lab. President, Cognitive Science Society. Chair of the MIT Faculty. Open access & open science advocate. He.

Lab webpage: http://cpl.mit.edu/

Personal webpage: https://www.mit.edu/~rplevy

Assistant Professor / Faculty Fellow @nyudatascience.bsky.social studying cognition in mind & brain with neural nets, Bayes, and other tools (eringrant.github.io).

elsewhere: sigmoid.social/@eringrant, twitter.com/ermgrant @ermgrant

asst prof @Stanford linguistics | director of social interaction lab 🌱 | bluskies about computational cognitive science & language

ex-GenMusic co-lead @ DeepMind 👩🏻💻

🎸🎹, prog/metal/alt/classic rock, jazz, poetry, 🚣🏻♀️🚴🏻♀️🧘🏻♀️, traveling, festivals. Sometimes ❤️s AI/ML/data 📊 Attends too many concerts 🎫 King's/Darwin/Murray Edwards College, University of Cambridge 🎓

Blog: https://sander.ai/

🐦: https://x.com/sedielem

Research Scientist at Google DeepMind (WaveNet, Imagen 3, Veo, ...). I tweet about deep learning (research + software), music, generative models (personal account).

Associate Professor in EECS at MIT. Neural nets, generative models, representation learning, computer vision, robotics, cog sci, AI.

https://web.mit.edu/phillipi/

AI policy researcher, wife guy in training, fan of cute animals and sci-fi. Started a Substack recently: https://milesbrundage.substack.com/

Professor, Programmer in NYC.

Cornell, Hugging Face 🤗