Appending "do not use defensive programming" to the end of my queries makes AI-generated code much more terse and useful. I can handle weird edge cases afterward, but I want to start by getting the core functionality in place.

29.10.2025 17:32 — 👍 0 🔁 0 💬 0 📌 0

I've been surprised by how much I like OpenAI Atlas. It's so fast and it's the best AI-based search engine I've used. UX is amazing.

I reserve the right to dislike it in the future when it presumably tries to bake ads into LLM outputs in a uBlock-proof way :'(.

22.10.2025 04:56 — 👍 0 🔁 0 💬 0 📌 0

LLMs I've used have been almost completely useless at formalizing anything to do with denotational or operational semantics.

I find it so shocking how good models are at advanced math and coding, and then how bad they are at the intersection of the two.

04.10.2025 16:38 — 👍 0 🔁 0 💬 0 📌 0

I can't get any LLM to make a type system :(. PL folks have to write more papers to train the LLMs.

03.10.2025 16:30 — 👍 0 🔁 0 💬 0 📌 0

I remember when Windows added ads to the start menu. I think Apple turned on a default to show ads (at least it can be toggled off for now). I left Linux because of the driver bugs, but Linux is looking pretty good now.

01.10.2025 17:08 — 👍 1 🔁 0 💬 0 📌 0

I believe these techniques can lead to:

new simulators that enable optimization through contact

new probabilistic algorithms with discontinuities

new differentiable renderers that better model occlusion

new ways to solve ML problems, e.g., selecting MoEs and doing image generation in continuous space

25.09.2025 17:41 — 👍 0 🔁 0 💬 0 📌 0

TLDR: Modeling discontinuities (occlusion, cracks, and contact) is hard, but distribution theory gives a systematic way to model these phenomena. I introduce distribution programming, which enables implementation/autodiff by using programming primitives that denote distributions.

25.09.2025 17:41 — 👍 0 🔁 0 💬 1 📌 0

I'd love a good biking route from Cambridge to Logan Airport and a safe place to park the bike at the airport 🚲 ✈️ .

I know this isn't exclusively in Cambridge, but is this possible @realBurhanAzeem @Marc_C_McGovern? Thanks for all you've done for bikers!

17.09.2025 20:36 — 👍 0 🔁 0 💬 0 📌 0

I got an email that was clearly automated but had a personalized tag line about my research in it. Something of the form: "I was interested in your research on ..."

I'm just bracing for a new wave of spam like this. I need an AI to screen my emails from this slop.

12.09.2025 20:03 — 👍 0 🔁 0 💬 0 📌 0

My defense talk is completely hopeless. There is nothing anyone can do for it.

09.09.2025 15:46 — 👍 0 🔁 0 💬 0 📌 0

Manual, lightweight intuition building; automation to get a robust setup that is easy to edit; then manual editing.

05.09.2025 20:36 — 👍 0 🔁 0 💬 0 📌 0

Plotting workflow: prototype in Desmos, use chat to write matplotlib code, and do by-hand checking and tuning.

I want more of this workflow.

05.09.2025 20:36 — 👍 0 🔁 0 💬 1 📌 0

Does Meta stand for metadata because they store all your data and sell it to advertisers?

02.09.2025 15:11 — 👍 0 🔁 0 💬 0 📌 0

I just randomly joined a member of the MIT admin for lunch. He's part of the office that helps students reserve space at MIT. It was a great reminder of all the wonderful staff at MIT who helped make my days at MIT better. Thanks Aaron!

27.08.2025 18:33 — 👍 0 🔁 0 💬 0 📌 0

April 25, 2025. Russ Tedrake, MIT. Title: A Careful Examination of Multitask Transfer in TRI’s Large Behavior Models for Dexterous Manipulation. Abstract: Many of ...

Stanford Seminar - Multitask Transfer in TRI’s Large Behavior Models for Dexterous Manipulation

Russ Tedrake's recent talk about the robotics/AI work at TRI is soooo good www.youtube.com/watch?v=TN1M...

06.07.2025 01:35 — 👍 0 🔁 0 💬 0 📌 0

It would be so nice if there was more competition for WiFi.

Unfortunately, there's a monopoly in Cambridge. Xfinity is the only fast WiFi. They have these random price hikes (a high baseline price with "promotions" that randomly expire). Their customer support is terrible.

25.06.2025 19:05 — 👍 0 🔁 0 💬 0 📌 0

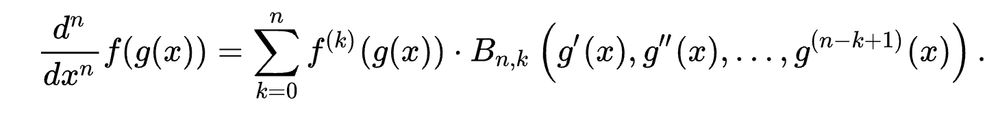

Today marks the second time that Faà di Bruno's formula was super useful to me.

en.wikipedia.org/wiki/Fa%C3%A...

It expresses a higher-order derivative of a composition of functions and uses the Bell polynomials. It came up in a distribution theory proof for my dissertation.
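
For reference, one common way to state the formula uses the partial Bell polynomials $B_{n,k}$. The notation below is a standard textbook form and is an assumption here, not necessarily the form used in the dissertation:

$$
\frac{d^n}{dx^n} f\big(g(x)\big) = \sum_{k=1}^{n} f^{(k)}\big(g(x)\big)\, B_{n,k}\big(g'(x), g''(x), \dots, g^{(n-k+1)}(x)\big)
$$

For $n = 2$, with $B_{2,1}(x_1, x_2) = x_2$ and $B_{2,2}(x_1) = x_1^2$, this reduces to the familiar second-derivative chain rule:

$$
\frac{d^2}{dx^2} f\big(g(x)\big) = f'\big(g(x)\big)\, g''(x) + f''\big(g(x)\big)\, g'(x)^2
$$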

24.06.2025 14:01 — 👍 0 🔁 0 💬 0 📌 0

Cursor tab needs to stop repeatedly trying to modify the same line. If I rejected the last three recommendations, the fourth isn't going to change my mind...

03.06.2025 15:23 — 👍 0 🔁 0 💬 0 📌 0

Screw theory, differential kinematics, and singularities.

These are all topics that I'm interested in.

08.05.2025 20:26 — 👍 0 🔁 0 💬 0 📌 0

Cursor repeatedly tries to delete everything in my dissertation. It really understands me.

25.04.2025 22:47 — 👍 1 🔁 0 💬 0 📌 0

LLMs are excellent at choosing notation for math. They tend to be very concise and elegant.

Unfortunately, they also commonly make many mistakes in distribution theory :(.

24.04.2025 21:33 — 👍 0 🔁 0 💬 0 📌 0

Not to brag, but Elon has lost orders of magnitude more money than I have.

23.04.2025 21:20 — 👍 1 🔁 0 💬 0 📌 0

The audio and video aren't great early on, but improve later in the video. It has a pretty pedagogical introduction to distribution theory and why it's relevant to differentiable rendering.

18.04.2025 02:05 — 👍 0 🔁 0 💬 0 📌 0

Full Program: https://2024.splashcon.org/program/program-splash-2024/

[SPLASH'24] San Gabriel - OOPSLA (Oct 24th)

I found a recording of my talk on differentiating parametric discontinuities from OOPSLA:

www.youtube.com/live/ltA6hQA...

I explain some of the math techniques (e.g., distribution theory) and PL tools (e.g., formal semantics) behind developing a language for differentiable rendering.

18.04.2025 02:05 — 👍 0 🔁 0 💬 1 📌 0

gpt-4o makes for a solid technical editor. It finds missing words, repeated words, and issues with parallelism. I don't know how many mistakes it missed, and there were definitely some false positives.

05.04.2025 04:27 — 👍 0 🔁 0 💬 0 📌 0

Why is accepting/rejecting AI-generated edits to a file so buggy in VSCode? There are so many cases where it duplicates lines or text disappears.

05.04.2025 03:14 — 👍 1 🔁 0 💬 0 📌 0

The biggest upgrade to MIT ever: baby bananas in the banana lounge.

04.04.2025 18:35 — 👍 0 🔁 0 💬 0 📌 0

I put calls to an LLM in a loop and if it gives an answer (rather than a tool call) in too few iterations, I tell it to analyze what it did and then improve the results.

Does that mean I turned a regular LLM into an ✨ LLM with reasoning ✨?
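
A minimal sketch of the loop described above, under stated assumptions: `call_llm` and `run_tool` are hypothetical callables supplied by the caller (no real LLM API is used), messages are plain dicts with a made-up `kind` field, and the `min_iterations` threshold and reflection prompt are illustrative choices rather than the exact setup from the post.

```python
from typing import Callable, Dict, List

# Hypothetical message shape: {"kind": "user" | "answer" | "tool_call" | "tool_result", "content": str}
Message = Dict[str, str]

REFLECT_PROMPT = "Analyze what you did so far, then improve the result."


def answer_with_reflection(
    call_llm: Callable[[List[Message]], Message],
    run_tool: Callable[[str], str],
    prompt: str,
    min_iterations: int = 3,
    max_iterations: int = 10,
) -> str:
    """Loop over LLM calls; push back on answers that arrive too early."""
    messages: List[Message] = [{"kind": "user", "content": prompt}]
    reply: Message = {"kind": "answer", "content": ""}
    for iteration in range(1, max_iterations + 1):
        reply = call_llm(messages)
        messages.append(reply)
        if reply["kind"] == "tool_call":
            # Tool calls are executed and their results fed back to the model.
            messages.append({"kind": "tool_result", "content": run_tool(reply["content"])})
        elif iteration < min_iterations:
            # An answer in too few iterations: ask the model to reflect and retry.
            messages.append({"kind": "user", "content": REFLECT_PROMPT})
        else:
            return reply["content"]
    # Iteration budget exhausted: return whatever the last reply contained.
    return reply["content"]


def fake_llm(messages: List[Message]) -> Message:
    """Stand-in for a real model: numbers each draft by how many answers exist so far."""
    drafts = sum(1 for m in messages if m["kind"] == "answer")
    return {"kind": "answer", "content": f"draft {drafts + 1}"}


result = answer_with_reflection(fake_llm, run_tool=lambda call: "", prompt="Summarize X")
print(result)
```

With the stand-in model, the first two answers are rejected as too early and the third is returned, so the loop only forces extra passes; whether those passes improve quality depends on the real model.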

04.04.2025 14:03 — 👍 0 🔁 0 💬 0 📌 0

