LLMs power research, decision‑making, and exploration, but most benchmarks don’t test how well they stitch together evidence across dozens (or hundreds) of sources. Meet MoNaCo, our new eval for cross‑source reasoning in question answering. 👇

18.08.2025 16:05 — 👍 16 🔁 6 💬 1 📌 0

People at #ACL2025, come drop by our poster today & chat with me about how context matters for reliable language model evaluations!

Jul 30, 11:00-12:30 at Hall 4X, board 424.

30.07.2025 06:05 — 👍 4 🔁 1 💬 0 📌 0

issues w preference LM benchmarks:

🐡data contains cases where the "bad" response is just as good as chosen one

🐟model rankings can feel off (claude ranks lower than expected)

led by @cmalaviya.bsky.social, we study underspecified queries & detrimental effect on model evals; accepted to TACL 2025

22.07.2025 17:02 — 👍 14 🔁 4 💬 2 📌 0

Context is an overlooked aspect of language model evaluations. Check out our TACL paper to see how to incorporate context into evaluations, how it changes evaluation conclusions, and how it makes evaluation more reliable!

22.07.2025 17:12 — 👍 0 🔁 1 💬 0 📌 0

Our findings suggest that targeted debiasing using counterfactuals can help build more reliable preference models, a key step for both LLM alignment and evaluation.

Work led by Anirudh and done jointly with Nitish and @yatskar.bsky.social.

06.06.2025 16:31 — 👍 0 🔁 0 💬 1 📌 0

For instance, miscalibration for vagueness dropped from 51.3% to 28.5% and for jargon from 50.3% to 33.2% after CDA.

Even joint debiasing across multiple biases (length, vagueness, jargon) proved effective with minimal impact on general capabilities.

06.06.2025 16:31 — 👍 0 🔁 0 💬 1 📌 0

And the results? CDA works!

It significantly reduced average miscalibration (e.g., from 39.4% to 32.5%) and brought model skew much closer to human preferences. All this while maintaining overall performance on RewardBench!

06.06.2025 16:31 — 👍 2 🔁 0 💬 1 📌 0

So how do we debias models? We propose a simple yet effective post-training method based on counterfactual data augmentation (CDA).

We synthesize contrastive responses that explicitly magnify biases in dispreferred responses, & further finetune reward models on these responses.
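
A minimal sketch of how the CDA data construction described above could look. This is an illustration under assumptions, not the paper's pipeline: the dict keys, `augment`, and `amplify_verbosity` are hypothetical names, and the string-padding amplifier is a toy stand-in for however the contrastive responses are actually synthesized.

```python
from typing import Callable, Dict, List

Pair = Dict[str, str]  # assumed keys: "prompt", "chosen", "rejected"


def amplify_verbosity(response: str) -> str:
    """Toy stand-in for a bias amplifier: pads the response with filler text.

    Hypothetical; the post does not say how contrastive responses are written.
    """
    filler = " To elaborate further, it is worth noting that this point bears repeating."
    return response + filler * 2


def augment(pairs: List[Pair], amplify: Callable[[str], str]) -> List[Pair]:
    """Return the original pairs plus one counterfactual pair per original.

    In each counterfactual pair the chosen response is unchanged and the
    rejected response is a version with the bias magnified, so a reward model
    finetuned on it sees the biased feature on the dispreferred side.
    """
    augmented = list(pairs)
    for pair in pairs:
        augmented.append(
            {
                "prompt": pair["prompt"],
                "chosen": pair["chosen"],
                "rejected": amplify(pair["rejected"]),
            }
        )
    return augmented


if __name__ == "__main__":
    data = [{"prompt": "Is coffee good for you?", "chosen": "In moderation, yes.", "rejected": "It depends."}]
    for row in augment(data, amplify_verbosity):
        print(row["rejected"][:60])
```

The augmented list would then be passed to whatever reward-model finetuning setup is already in use; that step is omitted here because the post does not describe it.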

06.06.2025 16:31 — 👍 1 🔁 0 💬 1 📌 0

Indeed, preference models can easily latch on to these subtle data artifacts!

Features that only weakly correlate with human preferences (r_human=−0.12) are strongly predictive for models (r_model=0.36). Points above y=x suggest that models overrely on these spurious cues😮

06.06.2025 16:31 — 👍 0 🔁 0 💬 1 📌 0

Where do these biases come from?🤔Our analysis suggests they originate from training data artifacts.

E.g., humans preferred structured responses >65% of the time when the alternative wasn't structured. This gives an opportunity for models to learn these patterns as heuristics!

06.06.2025 16:31 — 👍 2 🔁 0 💬 1 📌 0

How severe is the problem? Using controlled counterfactual pairs, we found that preference models (incl. LLM evaluators) prefer biased responses in >60% of cases (defined as skew) and show high miscalibration (~40%) wrt humans.

Vagueness & sycophancy are especially problematic!
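
A rough sketch of the two metrics on toy data. Skew follows the definition in the post (share of counterfactual pairs where the model prefers the biased response). The post does not define miscalibration precisely, so the version here, the share of pairs where the model's choice differs from the human's, is an assumption, and `PairJudgment` is a hypothetical container.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PairJudgment:
    """One counterfactual pair: a base response vs. the same response with a bias feature added."""

    model_prefers_biased: bool
    human_prefers_biased: bool


def skew(judgments: List[PairJudgment]) -> float:
    """Fraction of pairs in which the model prefers the biased response."""
    return sum(j.model_prefers_biased for j in judgments) / len(judgments)


def miscalibration(judgments: List[PairJudgment]) -> float:
    """Fraction of pairs in which model and human choices differ (assumed definition)."""
    return sum(j.model_prefers_biased != j.human_prefers_biased for j in judgments) / len(judgments)


toy = [
    PairJudgment(model_prefers_biased=True, human_prefers_biased=False),
    PairJudgment(model_prefers_biased=True, human_prefers_biased=True),
    PairJudgment(model_prefers_biased=False, human_prefers_biased=False),
    PairJudgment(model_prefers_biased=True, human_prefers_biased=False),
]

if __name__ == "__main__":
    print(f"skew={skew(toy):.2f} miscalibration={miscalibration(toy):.2f}")
```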

06.06.2025 16:31 — 👍 1 🔁 0 💬 1 📌 0

Preference models act as proxies for human judgements in alignment (as reward models) & evaluation, but they can be miscalibrated.

We found that they overrely on many idiosyncratic features of AI-generated text, which can lead to reward hacking & unreliable evals. Features like:

06.06.2025 16:31 — 👍 4 🔁 0 💬 1 📌 0

Ever wondered what makes language models generate overly verbose, vague, or sycophantic responses?

Our new paper investigates these and other idiosyncratic biases in preference models, and presents a simple post-training recipe to mitigate them! Thread below 🧵↓

06.06.2025 16:31 — 👍 10 🔁 3 💬 1 📌 0

Evaluating language model responses on open-ended tasks is hard! 🤔

We introduce EvalAgent, a framework that identifies nuanced and diverse criteria 📋✍️.

EvalAgent identifies 👩🏫🎓 expert advice on the web that implicitly addresses the user’s prompt 🧵👇

22.04.2025 15:04 — 👍 22 🔁 5 💬 1 📌 2

🤔 How can we use context to learn more about model behavior?

We can study "default" responses from models. Under what type of context does their response get the highest score?

We uncover a bias towards WEIRD contexts (Western, Educated, Industrialized, Rich & Democratic)!

13.11.2024 14:16 — 👍 0 🔁 0 💬 1 📌 0

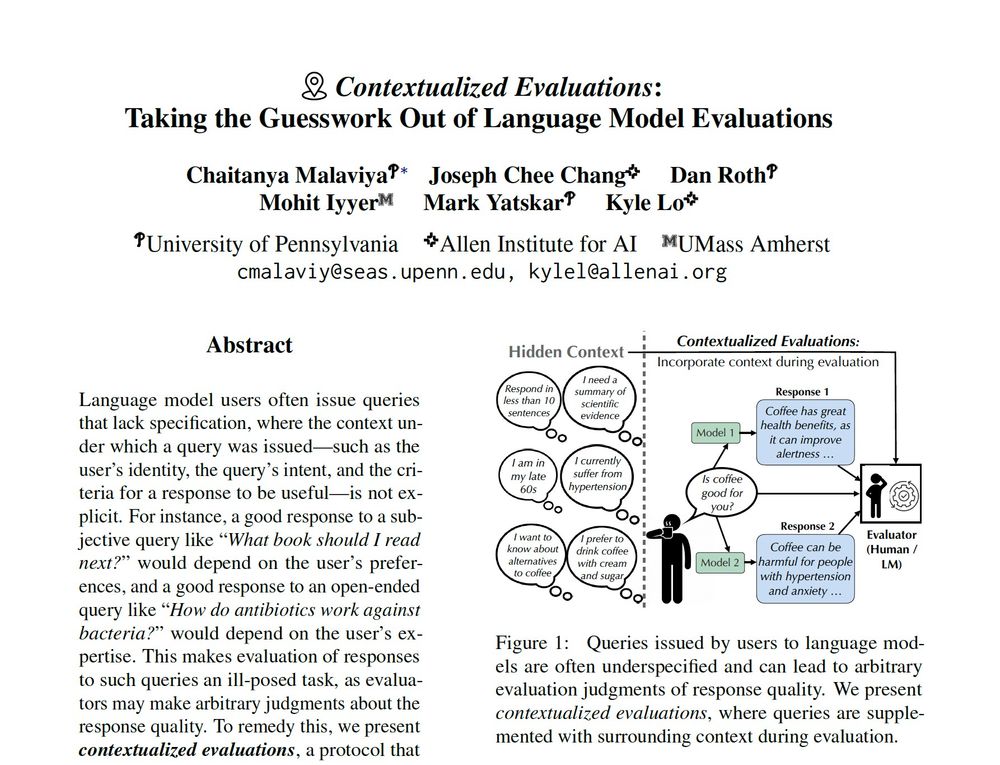

🤔 Does providing context to evaluators have a substantial effect on evaluation conclusions?

We find that the presence of context can (1) improve agreement between evaluators and (2) even change model rankings! 🤯

13.11.2024 14:16 — 👍 0 🔁 0 💬 1 📌 0

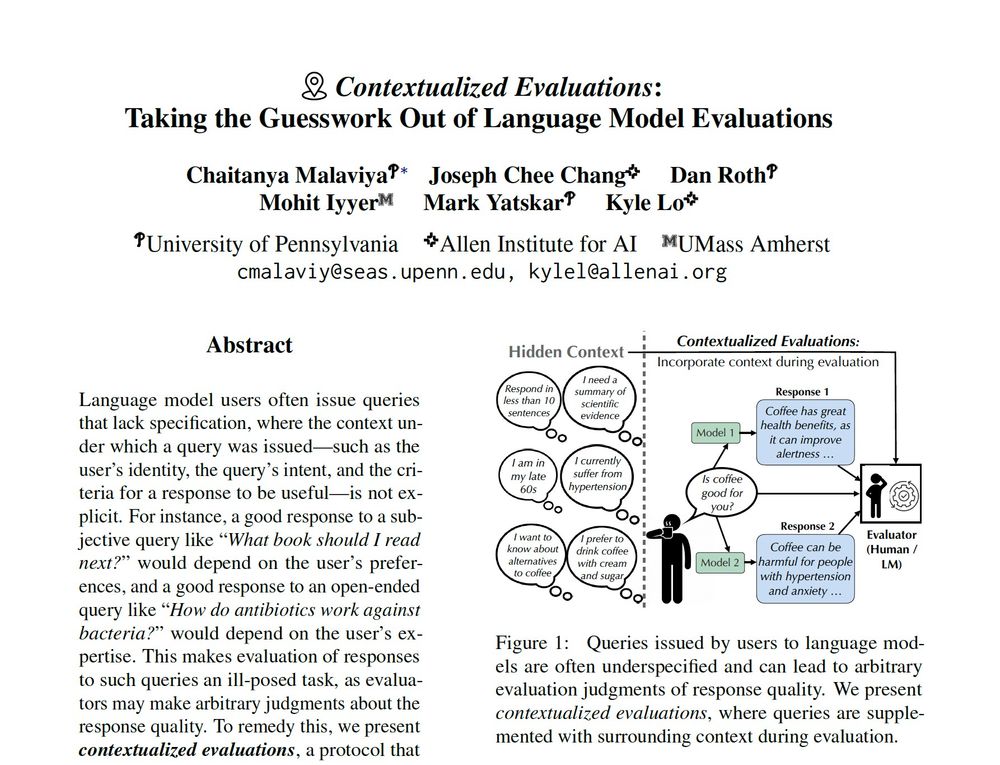

...we then conduct experiments providing context (1) during response generation, (2) during evaluation or (3) both.
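
One way to picture the three settings, as a sketch only: the prompt layout, `SETTINGS`, `with_context`, and `build_inputs` are hypothetical, and the follow-up question and answer are made up for illustration. Context is modeled as (follow-up question, answer) pairs attached to the underspecified query.

```python
from typing import Dict, List, Tuple

QAPair = Tuple[str, str]  # (clarifying follow-up question, answer)

# For each setting: (give context to the generator, give context to the evaluator).
SETTINGS: Dict[str, Tuple[bool, bool]] = {
    "generation_only": (True, False),
    "evaluation_only": (False, True),
    "both": (True, True),
}


def with_context(query: str, qa_pairs: List[QAPair]) -> str:
    """Append the follow-up QA pairs to the query as plain text (assumed layout)."""
    lines = [query, "Context:"]
    lines += [f"Q: {q} A: {a}" for q, a in qa_pairs]
    return "\n".join(lines)


def build_inputs(query: str, qa_pairs: List[QAPair], setting: str) -> Tuple[str, str]:
    """Return (generator input, evaluator input) for one of the three settings."""
    gen_ctx, eval_ctx = SETTINGS[setting]
    gen_input = with_context(query, qa_pairs) if gen_ctx else query
    eval_input = with_context(query, qa_pairs) if eval_ctx else query
    return gen_input, eval_input


if __name__ == "__main__":
    qa = [("Do you have any health conditions?", "No")]
    for name in SETTINGS:
        gen_input, eval_input = build_inputs("Is coffee good for you?", qa, name)
        print(name, "| generator sees context:", "Context:" in gen_input,
              "| evaluator sees context:", "Context:" in eval_input)
```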

13.11.2024 14:16 — 👍 0 🔁 0 💬 1 📌 0

With ✨Contextualized Evaluations✨, we synthetically generate context as clarifying, follow-up questions to an underspecified query...

13.11.2024 14:16 — 👍 0 🔁 0 💬 1 📌 0

Underspecified queries can lead to arbitrary evaluation judgments of response quality!

e.g., Given a query “Is coffee good for you?”, how can evaluators accurately judge model responses when they aren't informed about the user’s preferences, background or important criteria?

13.11.2024 14:16 — 👍 0 🔁 0 💬 1 📌 0

Underspecified queries are prevalent in many datasets used to benchmark language models (e.g., Chatbot Arena, AlpacaEval).

These can be ambiguous (e.g., what is a transformer? ... 🤔 for NLP or EE?), subjective (e.g., who is the best? ... 🤔 what criteria?), and more!

13.11.2024 14:16 — 👍 0 🔁 0 💬 1 📌 0

Excited to share ✨ Contextualized Evaluations ✨!

Benchmarks like Chatbot Arena contain underspecified queries, which can lead to arbitrary eval judgments. What happens if we provide evaluators with context (e.g., who's the user, what's their intent) when judging LM outputs? 🧵↓

13.11.2024 14:16 — 👍 9 🔁 2 💬 1 📌 2

phd student-worker at penn, nsf grfp fellow, spelman alum. autonomy and identity in algorithmic systems.

they/she. 🧋 🍑 🧑🏾💻

https://psampson.net

The Association for Computational Linguistics (ACL) is a scientific and professional organization for people working on Natural Language Processing/Computational Linguistics.

Hash tags: #NLProc #ACL2026NLP

Postdoc @vectorinstitute.ai | organizer @queerinai.com | previously MIT, CMU LTI | 🐀 rodent enthusiast | she/they

🌐 https://ryskina.github.io/

Research in NLP (mostly LM interpretability & explainability).

Assistant prof at UMD CS + CLIP.

Previously @ai2.bsky.social @uwnlp.bsky.social

Views my own.

sarahwie.github.io

NLP/AI Research

Assistant Professor @Yale

Leading agents R&D at AI2. AI & HCI research scientist. https://jonathanbragg.com

associate prof at UMD CS researching NLP & LLMs

Assistant Professor at Bar-Ilan University

https://yanaiela.github.io/

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

https://shramay-palta.github.io

CS PhD student. #NLProc at CLIP UMD | Commonsense + xNLP, AI, CompLing | ex Research Intern @msftresearch.bsky.social

ELLIS PhD Fellow @belongielab.org | @aicentre.dk | University of Copenhagen | @amsterdamnlp.bsky.social | @ellis.eu

Multi-modal ML | Alignment | Culture | Evaluations & Safety | AI & Society

Web: https://www.srishti.dev/

https://jessyli.com Associate Professor, UT Austin Linguistics.

Part of UT Computational Linguistics https://sites.utexas.edu/compling/ and UT NLP https://www.nlp.utexas.edu/

Open spaces and open-sourced AI

training olmos at Ai2, prev at Apple, Penn …. 🎤 dabbler of things🎸 🐈⬛enjoyer of cats 🐈 and mountains🏔️he/him

PhD Student at University of Pennsylvania CIS in ML and explainable AI. | Former MS @UMassCS, Intern @OIST @IBM @USC_ISI | She/her

https://fallcat.github.io/

Assist. Prof at CMU, CS PhD at UW. HCI+AI, map general-purpose models to specific use cases!

NLP PhD student at UPenn | Prev USC

cylumn.com

Senior Research Scientist @nvidia | Adjunct Prof @BU | PhD from @CMU

PhD Student @nyudatascience.bsky.social, working with He He on NLP and Human-AI Collaboration.

Also hanging out @ai2.bsky.social

Website - https://vishakhpk.github.io/