New Paper Alert! Read the thread below for key takeaways from “Does Q&A Boost Engagement? Health Messaging Experiments in the United States and Ghana” by @erikakirgios.bsky.social @susanathey.bsky.social @angeladuckworth.bsky.social et al.

07.10.2025 16:48

A Century of Impact

In 1925, Herbert Hoover, a Stanford alum and future U.S. president, had an idea. “A graduate School of Business Administration is urgently needed upon the Pacific Coast,” he wrote.

During her presentation at the @gsb.stanford.edu 100 Faculty Celebration of Scholarship, @susanathey.bsky.social highlighted the GSB’s proud tradition of synergy between business and practice. Listen to her thoughts and more in the podcast: stanford.io/3K8mcrz

03.10.2025 17:32

Earlier this month @susanathey.bsky.social joined Stanford University President Levin, @siepr.bsky.social Director @nealemahoney.bsky.social and other distinguished faculty to connect over cutting-edge research developments at Stanford Open Minds New York.

24.09.2025 18:15

Listen to Raj Chetty explain, in this @nber.org video, how surrogate indices make faster decisions possible by using multiple short-term outcomes to predict long-term effects.

www.nber.org/research/vid...

27.08.2025 18:33
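The surrogate-index idea can be sketched in a few lines. This is a minimal linear version under stated assumptions, not the method as presented in the video: a historical dataset in which both the short-term surrogates and the long-term outcome are observed, a linear model linking them, and the key surrogacy assumption that treatment affects the long-term outcome only through the surrogates. The function names and all data are hypothetical and simulated.

```python
import numpy as np

def fit_surrogate_index(S_obs: np.ndarray, y_obs: np.ndarray) -> np.ndarray:
    """Regress the long-term outcome on short-term surrogates in historical
    data (OLS with an intercept); returns the fitted coefficients."""
    X = np.column_stack([np.ones(len(S_obs)), S_obs])
    coef, *_ = np.linalg.lstsq(X, y_obs, rcond=None)
    return coef

def surrogate_index_effect(coef: np.ndarray, S_exp: np.ndarray, treated: np.ndarray) -> float:
    """Predict each experimental unit's long-term outcome from its surrogates,
    then take the treated-minus-control difference in mean predictions."""
    X = np.column_stack([np.ones(len(S_exp)), S_exp])
    index = X @ coef
    treated = treated.astype(bool)
    return float(index[treated].mean() - index[~treated].mean())

# Hypothetical historical data: two surrogates, true model y = 2*s1 + s2 + noise.
rng = np.random.default_rng(0)
S_obs = rng.normal(size=(20_000, 2))
y_obs = 2.0 * S_obs[:, 0] + 1.0 * S_obs[:, 1] + rng.normal(size=20_000)
coef = fit_surrogate_index(S_obs, y_obs)

# Hypothetical experiment: treatment shifts s1 by 0.5 and s2 by 0.2, so the
# true long-term effect is 2*0.5 + 1*0.2 = 1.2. The long-term outcome itself
# is never observed in the experiment; only the surrogates are.
treated = rng.integers(0, 2, size=40_000)
S_exp = rng.normal(size=(40_000, 2)) + np.outer(treated, [0.5, 0.2])
effect = surrogate_index_effect(coef, S_exp, treated)
print(round(effect, 2))  # expected to be near the true value of 1.2
```

With real data the first-stage model would usually be more flexible than OLS; the linear fit just keeps the mechanics visible.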

Service Quality on Online Platforms: Empirical Evidence about Driving Quality at Uber


Learn more about how ratings, measurement, nudges, and dashboards are used to support service quality on online platforms in the paper by @susanathey.bsky.social, Juan Camilo Castillo, & Bharat Chandar

www.nber.org/papers/w33087

22.08.2025 19:09

How Uber Steers Its Drivers Toward Better Performance

Check out @susanathey.bsky.social’s interview on how AI-powered after-the-fact quality checks boost driver performance at Uber—and what it means when AI can track compliance. Insights via @StanfordGSB.

www.gsb.stanford.edu/insights/how...

22.08.2025 19:08

YouTube video by SAReserveBank

#G20SouthAfrica Side event: The implications of AI on the organisation of industry and work

Watch @Susan_Athey’s talk on the implications of AI on the organisation of industry and work at #G20SouthAfrica

www.youtube.com/watch?v=okDG...

18.07.2025 13:06

“Governments will play a key role…in whether we actually develop the technology that will help lower-skilled workers become more productive by using AI to augment them with expertise that previously was difficult to acquire.”

18.07.2025 13:06

The talk will cover:

✔️How AI is altering industry dynamics & structures

✔️How these shifts will impact public services such as health and education

✔️How AI market concentration could tax the global economy

✔️Why govt policy will be crucial in shaping AI competition and innovation

15.07.2025 13:42

AI & digitisation are rapidly reshaping the way we work.

Policymakers need to understand how, and what to do about it.

Watch @Susan_Athey speak to G20 leaders about these issues tomorrow 16 July @ 13:30 CET. #G20SouthAfrica

bit.ly/3GyMFgm or bit.ly/44PTXFP

15.07.2025 13:42

Beyond predictions, @keyonv.bsky.social also worked with @gsbsilab.bsky.social to show how these models can produce better estimates for important problems, such as the gender wage gap between men and women with the same career histories. Learn more here: bsky.app/profile/gsbs...

30.06.2025 15:39

Keyon Vafa: Predicting Workers’ Career Trajectories to Better Understand Labor Markets


If we know someone’s career history, how well can we predict which jobs they’ll have next? Read our profile of @keyonv.bsky.social to learn how ML models can be used to predict workers’ career trajectories & better understand labor markets.

medium.com/@gsb_silab/k...

30.06.2025 15:39

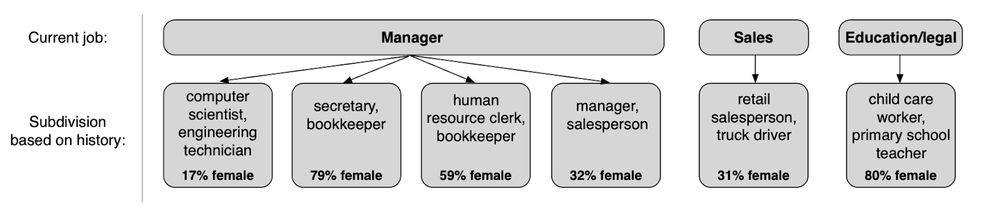

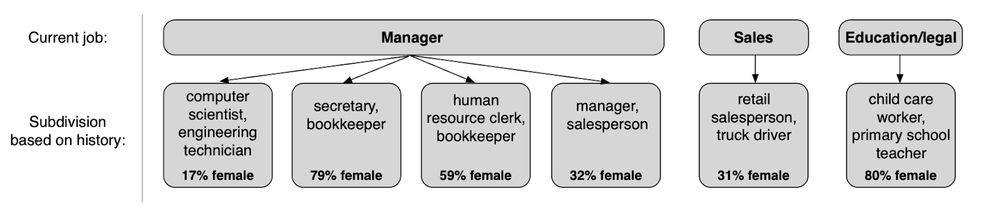

Analyzing representations tells us where history explains the gap.

Ex: there are two kinds of managers: those who used to be engineers and those who didn’t. The first group is paid more and has a higher share of men than the second.

Models that don’t use history omit this distinction.

30.06.2025 12:15

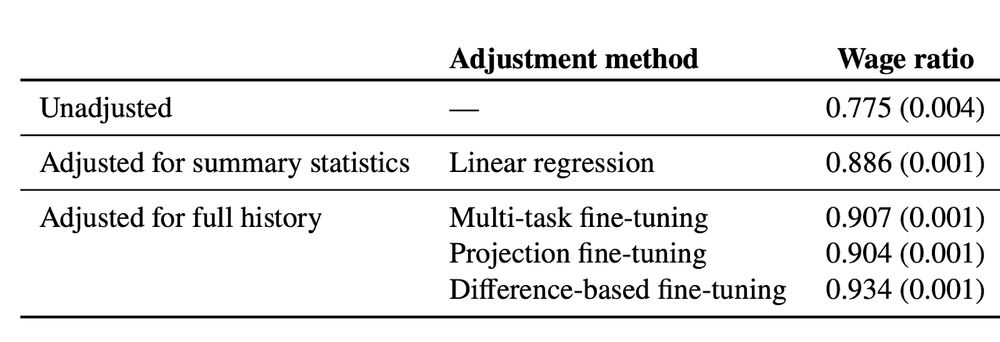

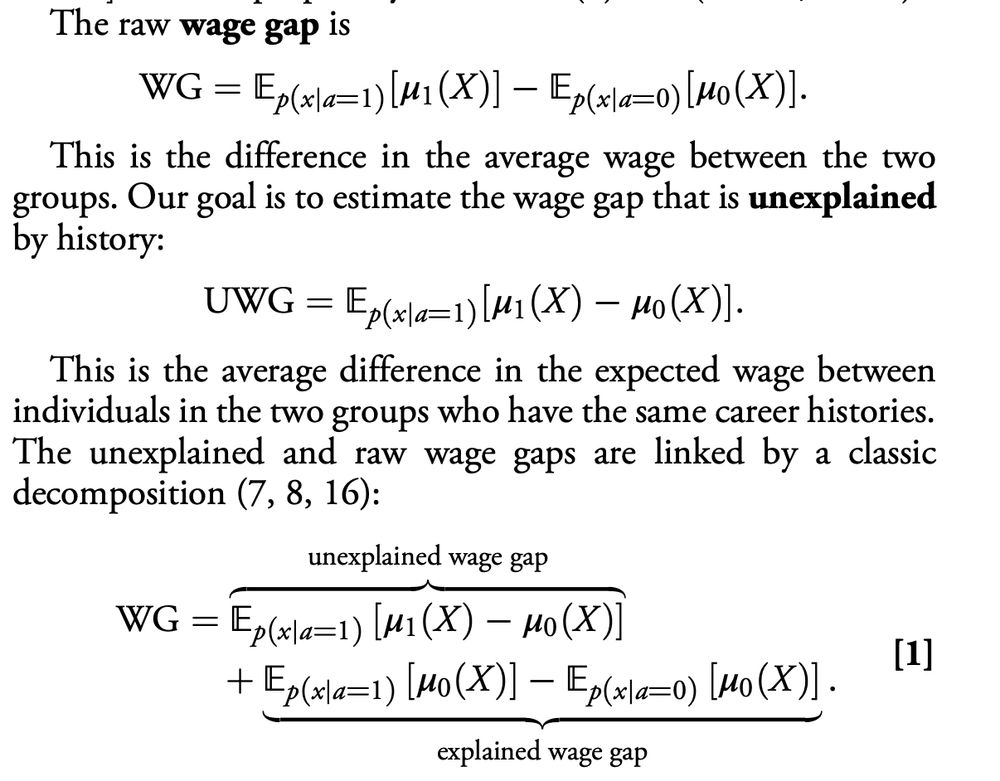

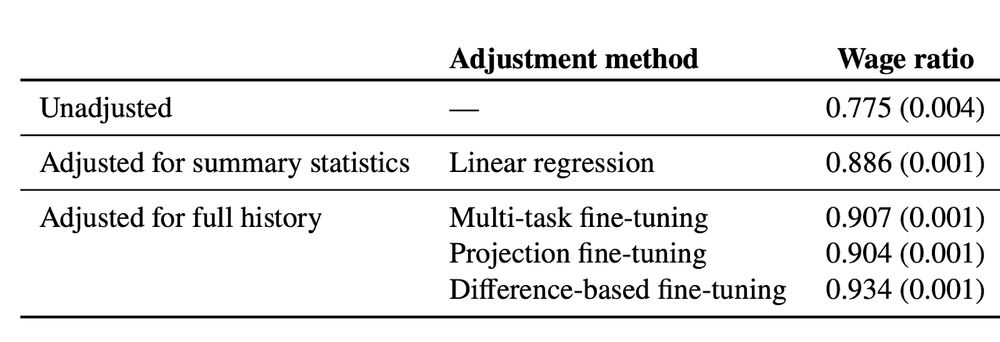

We use these methods to estimate wage gaps adjusted for full job history, following the literature on gender wage gaps.

Compared with simpler methods, adjusting for full history explains a substantial fraction of the remaining wage gap. But there’s still a lot that history can’t account for.

30.06.2025 12:15

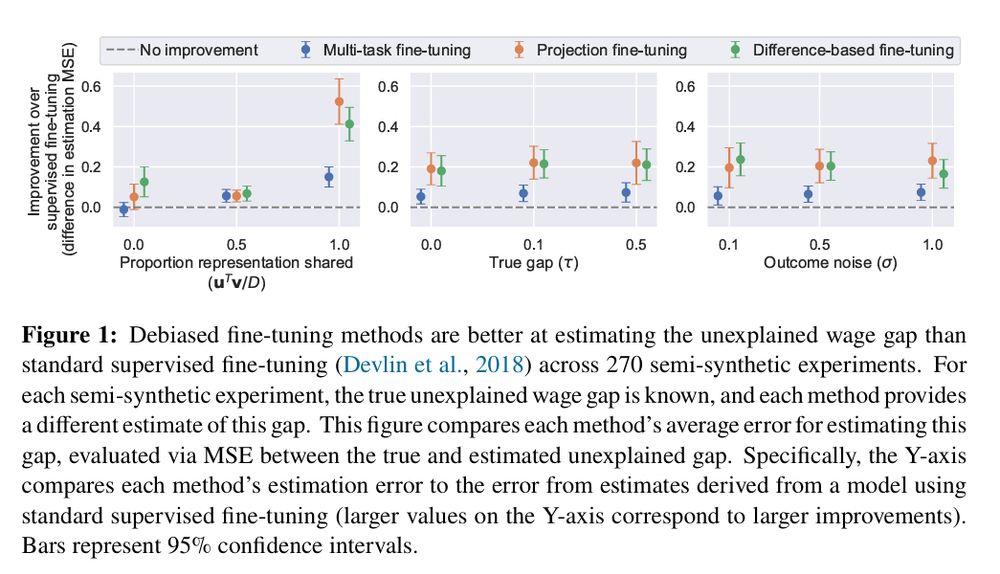

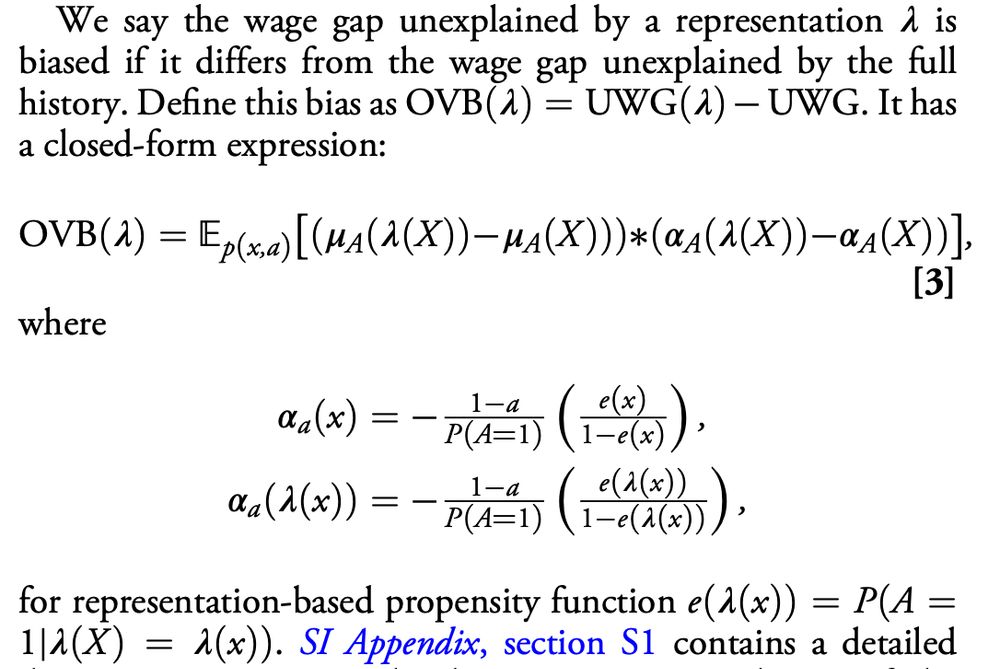

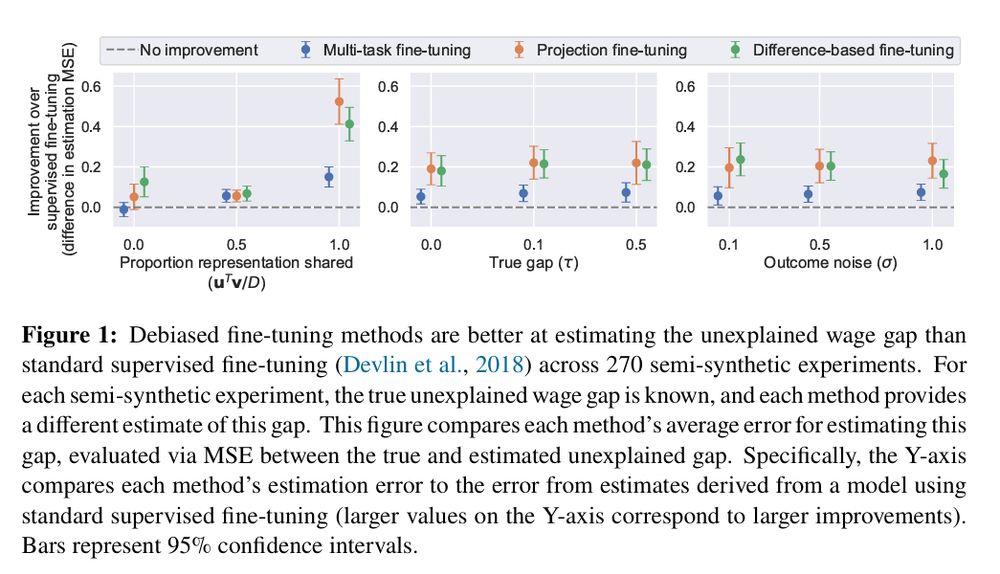

This result motivates new fine-tuning strategies.

We consider 3 strategies similar to methods from the causal estimation literature, e.g., optimizing representations to predict the *difference* between male and female wages instead of individual wages.

All perform well on synthetic data.

30.06.2025 12:15

Two extremes:

A representation that's just the identity function meets condition (1) trivially but not (2).

A representation that uses a very simple summary of history (e.g. # of years worked) should meet (2) but fails (1)

30.06.2025 12:15

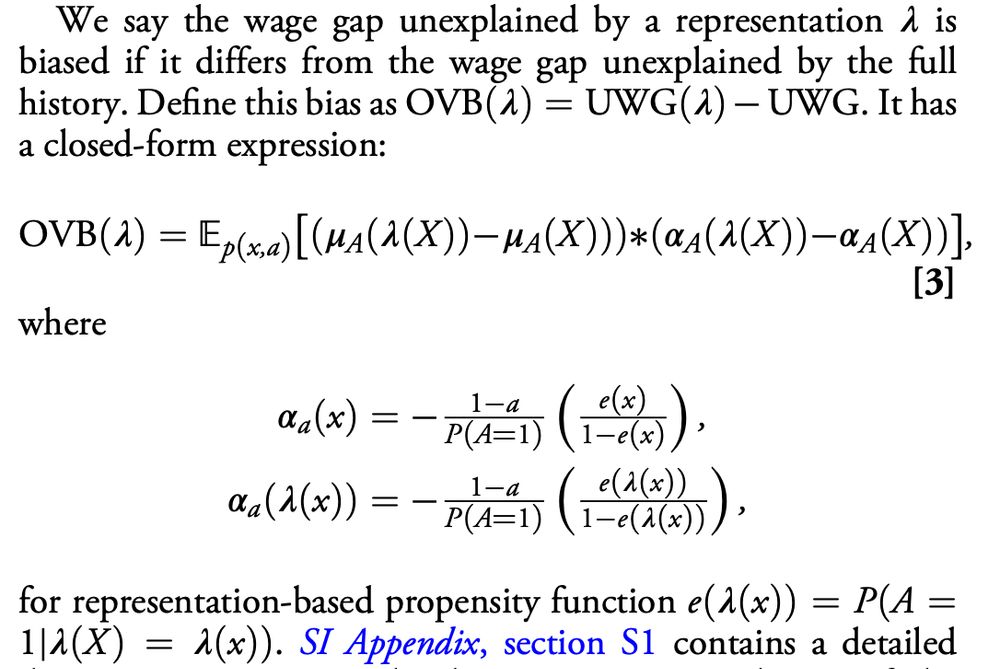

New result: Fast + consistent estimates are possible even if a representation drops info

Two main fine-tuning conditions:

1. Representation only drops info that isn't correlated w/ both wage & gender

2. Representation is simple enough that it’s easy to model wage & gender from it

30.06.2025 12:15

Intuition: If working in job X at some point has a small effect on wages, but men are much likelier to have worked in job X than women, it may be omitted by a model optimized to predict wage.

30.06.2025 12:15
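A small simulation can make this intuition concrete. Everything here is hypothetical: `z` stands for a history feature that strongly predicts wages but is unrelated to gender, `x` for "ever worked in job X" (small wage effect, much more common among men), and the "representation" is just the single feature that best predicts wage, a crude stand-in for a prediction-tuned foundation model rather than CAREER itself.

```python
import numpy as np

def gap_coefficient(y, g, *controls):
    """OLS coefficient on the gender indicator g, adjusting for the given controls."""
    X = np.column_stack([np.ones_like(y), g, *controls])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(coef[1])

def r_squared(y, x):
    """R^2 from regressing y on a single feature x (with an intercept)."""
    X = np.column_stack([np.ones_like(y), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return 1.0 - resid.var() / y.var()

rng = np.random.default_rng(1)
n = 50_000
g = rng.integers(0, 2, size=n).astype(float)                     # 1 = man (hypothetical coding)
x = (rng.random(n) < np.where(g == 1, 0.7, 0.1)).astype(float)   # ever worked in job X
z = rng.normal(size=n)                                            # strong wage predictor, unrelated to gender
y = 1.0 * z + 0.2 * x + 0.05 * g + rng.normal(scale=0.5, size=n)

# A one-feature "representation" chosen purely to predict wage keeps z and drops x.
features = {"z": z, "x": x}
best = max(features, key=lambda k: r_squared(y, features[k]))

full_gap = gap_coefficient(y, g, z, x)            # adjusts for full history
rep_gap = gap_coefficient(y, g, features[best])   # adjusts for the representation only
print(best, round(full_gap, 3), round(rep_gap, 3))
```

In this setup `full_gap` should sit near the true 0.05, while `rep_gap` should sit near 0.05 + 0.2 × 0.6 = 0.17: dropping `x` costs almost nothing in wage prediction but inflates the adjusted gap.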

Foundation models are usually fine-tuned to make predictions (like wages).

But representations fine-tuned this way can induce omitted variable bias: the gap adjusted for full history can be different from the gap adjusted for the representation of job history.

30.06.2025 12:15
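The textbook linear omitted-variable-bias formula illustrates the same point. This is a sketch under assumed notation, not the paper's own derivation: let $Y$ be wage, $G$ a gender indicator, $R$ the part of job history the representation keeps, and $X$ the part it drops. The first line adjusts for full history, the second is the linear projection of the dropped information on $G$ and $R$ (so $u$ is uncorrelated with both by construction), and the third is the population coefficient on $G$ when adjusting for $R$ alone, assuming $\varepsilon$ is uncorrelated with $G$, $R$, and $X$.

$$
\begin{aligned}
Y &= \alpha + \theta G + \gamma^\top R + \beta X + \varepsilon, \\
X &= \pi_0 + \delta G + \lambda^\top R + u, \\
\tilde{\theta} &= \theta + \beta\,\delta .
\end{aligned}
$$

The bias $\beta\delta$ vanishes when $\beta = 0$ (the dropped information does not move wages given $G$ and $R$) or when $\delta = 0$ (it is unrelated to gender given $R$), which mirrors condition (1) in the thread.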

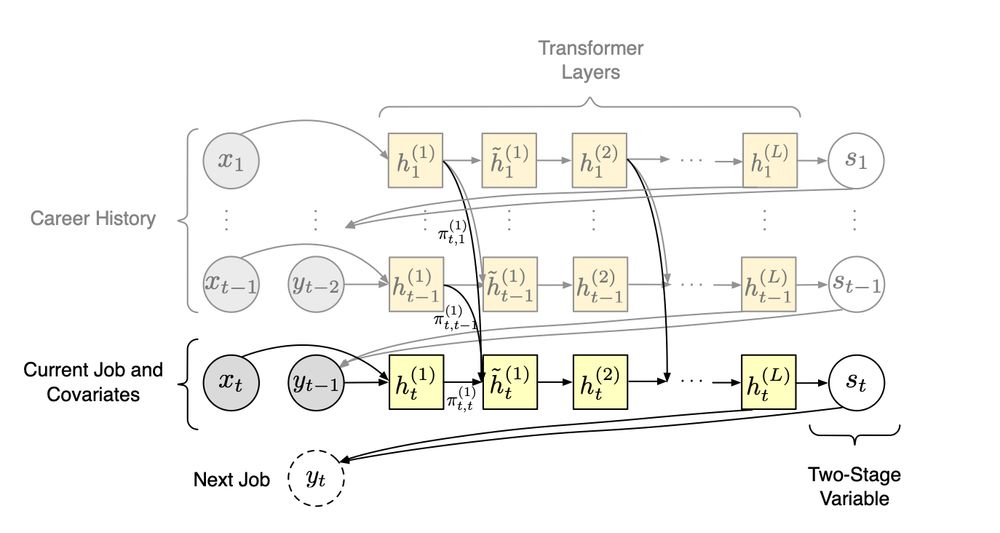

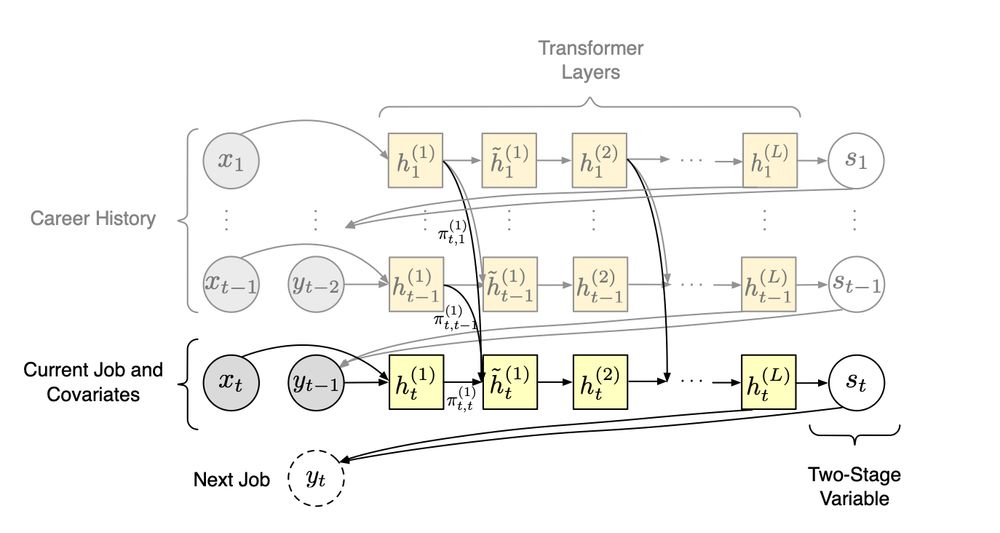

We use CAREER, a foundation model of job histories. It’s pretrained on resumes, but its representations can be fine-tuned on the smaller datasets used to estimate wage gaps.

30.06.2025 12:15

But this discards information that’s relevant to the wage gap.

In contrast, foundation models learn *representations*: lower-dimensional variables that summarize information.

30.06.2025 12:15

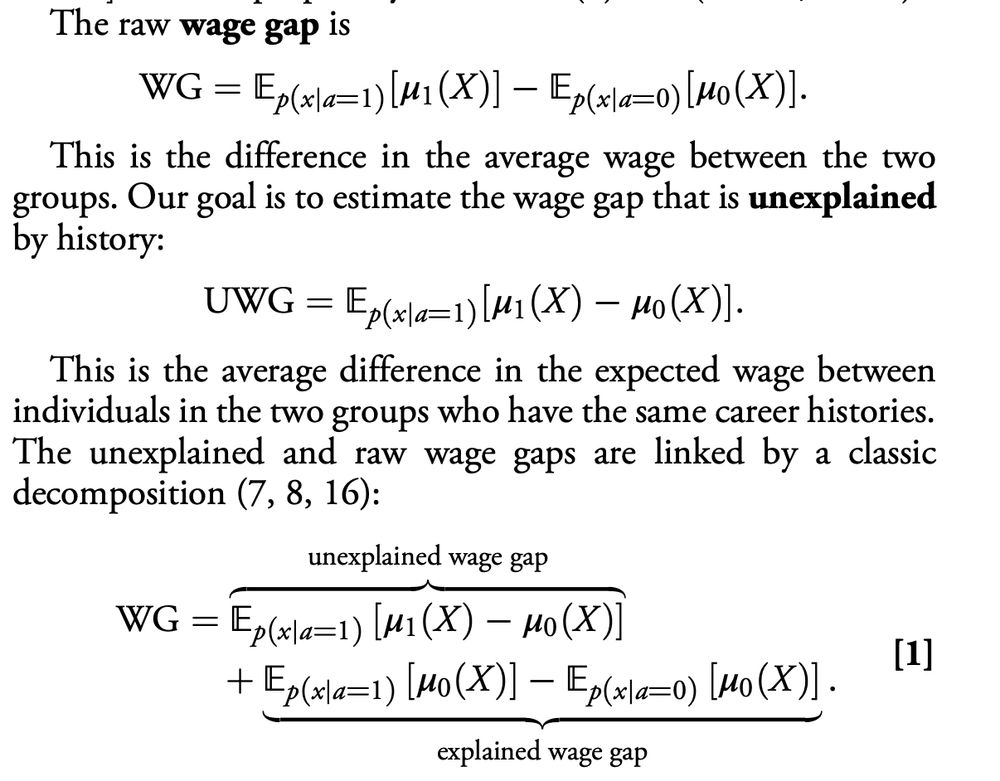

Consider estimating the wage gap explained by differences in job history.

Job history is high-dimensional since there are many possible sequences of jobs. So most economic models describe histories using hand-selected summary stats (e.g., # of years worked).

30.06.2025 12:15
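A back-of-the-envelope count shows why job history is high-dimensional and what a hand-selected summary throws away. The sizes (300 job categories including "not working", 10 annual observations) and the helper names are hypothetical, chosen only for illustration.

```python
def num_histories(num_jobs: int, num_years: int) -> int:
    """Number of distinct year-by-year sequences when each year takes one of num_jobs states."""
    return num_jobs ** num_years


def years_worked(history: list[str]) -> int:
    """Illustrative hand-selected summary statistic: years not spent out of work."""
    return sum(1 for job in history if job != "not working")


# Hypothetical sizes: 300 job categories (including "not working") over 10 years.
print(f"{num_histories(300, 10):.2e}")  # 5.90e+24

# Two hypothetical managers: one a former engineer, one not.
ex_engineer = ["engineer", "engineer", "manager", "manager"]
never_engineer = ["sales", "sales", "manager", "manager"]

# The summary maps both histories to the same value (4), so it cannot
# distinguish them, even though the full sequences differ.
print(years_worked(ex_engineer), years_worked(never_engineer))
```

This is exactly the kind of distinction behind the ex-engineer versus never-engineer managers example in the thread.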

Decompositions can inform policy: a large explained gender wage gap can suggest differences in choices or opportunities earlier in a worker’s career, while an unexplained gap may arise due to differences in factors such as skill, care responsibilities, or bargaining.

30.06.2025 12:15

Ex.: estimating the gender wage gap between men & women with the same job histories.

A large literature decomposes wage gaps into two parts: the part “explained” by gender gaps in observed characteristics (e.g. education, experience), and the part that’s “unexplained.”

30.06.2025 12:15
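The explained/unexplained split is classically computed with a linear Oaxaca-Blinder decomposition. Below is a minimal sketch of the two-fold version, assuming men's coefficients as the reference (other reference choices give different splits). It is a textbook baseline, not the foundation-model method in the thread; the function names and the simulated data are hypothetical.

```python
import numpy as np

def ols(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """OLS coefficients with an intercept prepended."""
    Xc = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(Xc, y, rcond=None)
    return coef

def oaxaca_blinder(X_m, y_m, X_f, y_f):
    """Split the mean wage gap (men minus women) into an explained part
    (differences in characteristics, priced at men's coefficients) and an
    unexplained part (differences in coefficients, at women's characteristics)."""
    b_m, b_f = ols(X_m, y_m), ols(X_f, y_f)
    xbar_m = np.concatenate([[1.0], X_m.mean(axis=0)])
    xbar_f = np.concatenate([[1.0], X_f.mean(axis=0)])
    explained = float((xbar_m - xbar_f) @ b_m)
    unexplained = float(xbar_f @ (b_m - b_f))
    return explained, unexplained

# Hypothetical data: one characteristic (years of experience), log-wage outcome.
rng = np.random.default_rng(2)
X_m = rng.normal(12, 3, size=(5_000, 1))
X_f = rng.normal(10, 3, size=(5_000, 1))
y_m = 1.1 + 0.05 * X_m[:, 0] + rng.normal(0, 0.3, 5_000)
y_f = 1.0 + 0.05 * X_f[:, 0] + rng.normal(0, 0.3, 5_000)

explained, unexplained = oaxaca_blinder(X_m, y_m, X_f, y_f)
raw_gap = y_m.mean() - y_f.mean()
print(round(raw_gap, 3), round(explained, 3), round(unexplained, 3))
```

Because OLS with an intercept fits each group's mean exactly, the two parts sum to the raw gap by construction. In this simulation each part should be near 0.1 (about 2 extra years × 0.05 explained, plus the 0.1 intercept difference unexplained).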

Foundation models make great predictions. How should we use them for estimation problems in social science?

New PNAS paper @susanathey.bsky.social & @keyonv.bsky.social & @Blei Lab:

Bad news: Good predictions ≠ good estimates.

Good news: Good estimates possible by fine-tuning models differently 🧵

30.06.2025 12:15

Takeaway 2: For the least employable learners, our experiment isolates the effect of credibly and systematically informing employers about their online credentials. The positive finding helps build the case that such credentials may be good investments for workers seeking to transition to new jobs.

22.05.2025 15:03

Takeaway 1: Online learning platforms and professional networking sites (e.g., LinkedIn) can boost job outcomes with simple features. Even light nudges to encourage skill signaling (like sharing a certificate) can improve employment prospects.

22.05.2025 15:03
