Some SAC context for NeurIPS 2025 review scores - out of my batch of 100 papers:

- 1 paper ≥5.0

- 6 papers ≥4.5

- 11 papers ≥4.0

- 25 papers ≥3.75

- 42 papers ≥3.5

Good luck to all with rebuttals!

24.07.2025 17:02 — 👍 2 🔁 0 💬 0 📌 0

I will be at #NeurIPS between Dec 10-15. Looking forward to catching up with friends and colleagues!

09.12.2024 20:21 — 👍 5 🔁 0 💬 0 📌 0

I was actually discussing SimPO a few weeks ago in my LLM class. Solid work!

03.12.2024 17:37 — 👍 2 🔁 0 💬 0 📌 0

I like "slay" here. Makes theory research more RPG-like

28.11.2024 14:59 — 👍 3 🔁 0 💬 0 📌 0

NeurIPS Test of Time Awards:

Generative Adversarial Nets

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio

Sequence to Sequence Learning with Neural Networks

Ilya Sutskever, Oriol Vinyals, Quoc V. Le

27.11.2024 17:32 — 👍 312 🔁 28 💬 6 📌 4

All credits go to my amazing students :)

21.11.2024 22:19 — 👍 1 🔁 0 💬 0 📌 0

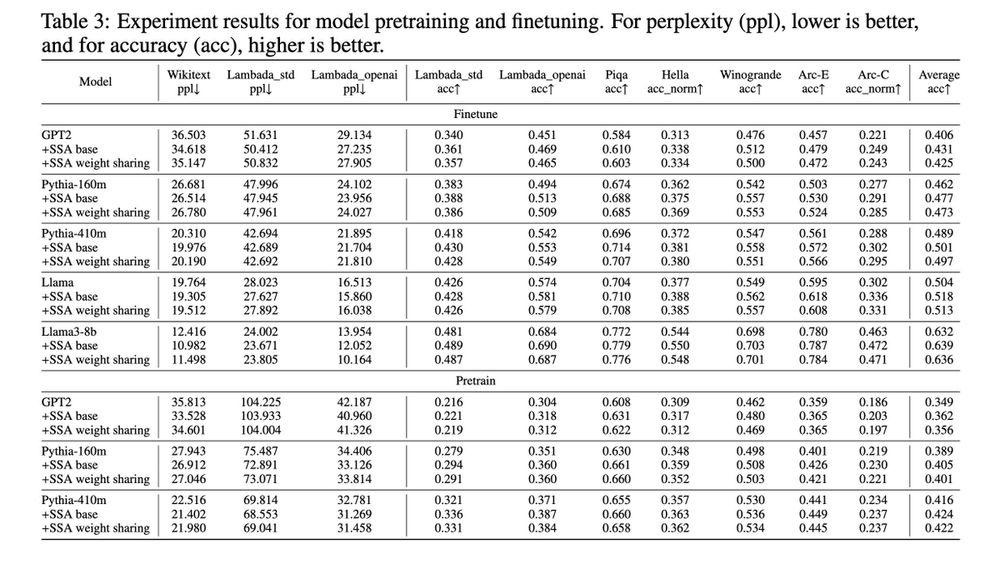

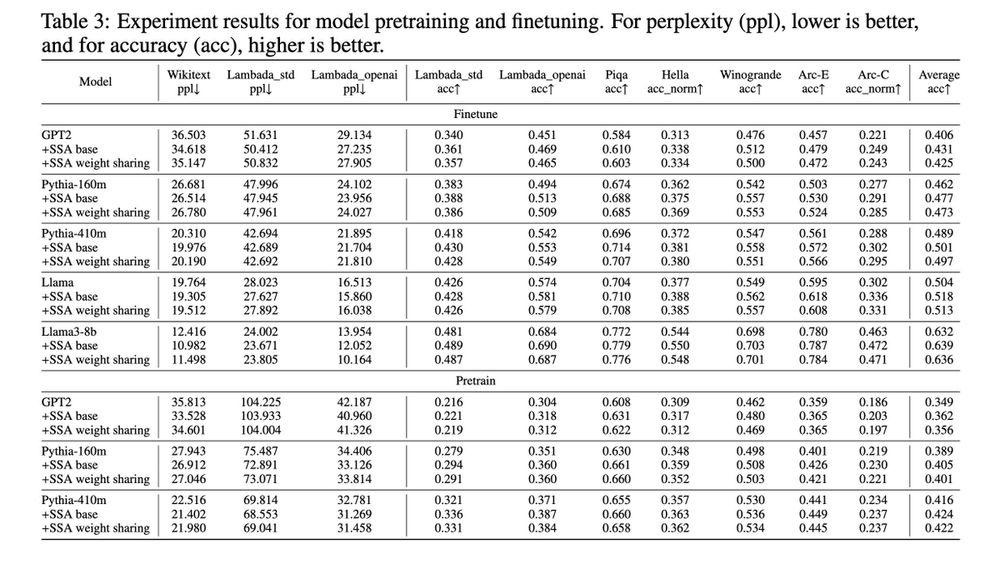

Our method uniformly improves language modeling evals with negligible compute overhead. During evals, we just plug in SSA and don't touch hyperparams/architecture so there is likely further headroom.

21.11.2024 22:19 — 👍 0 🔁 0 💬 2 📌 0

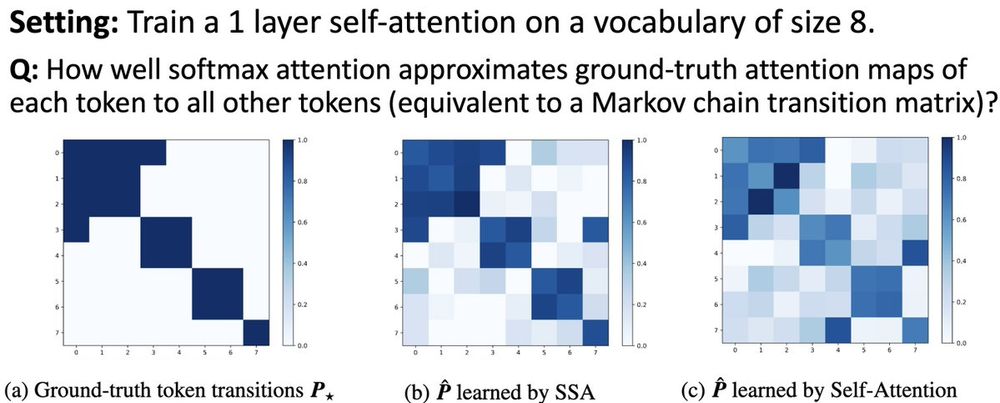

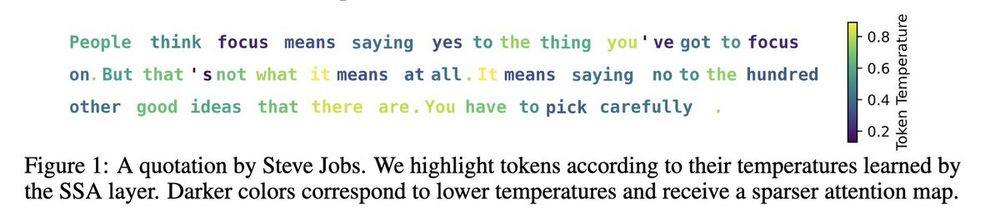

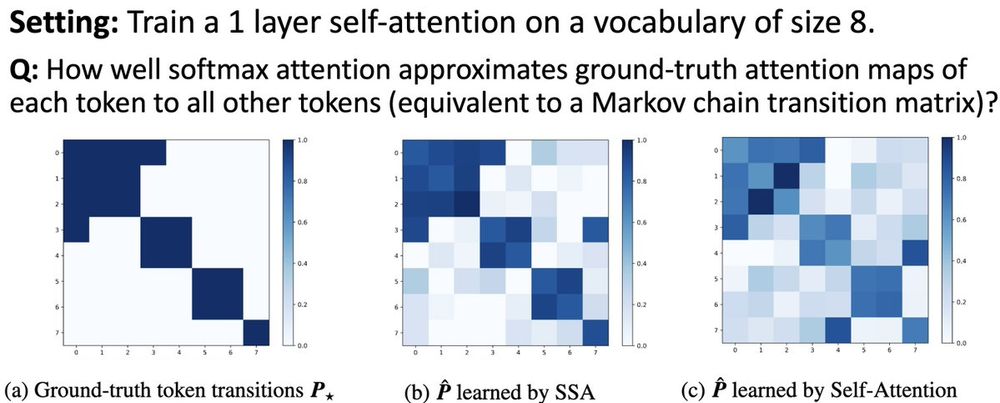

We can also see the approximation benefit directly from the quality/sharpness of the attention maps.

21.11.2024 22:19 — 👍 0 🔁 0 💬 1 📌 0

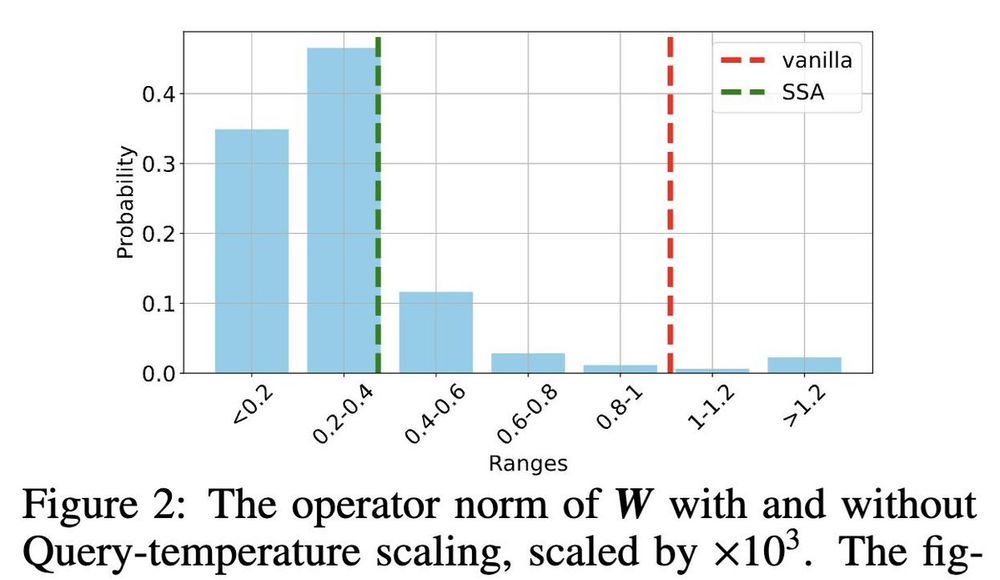

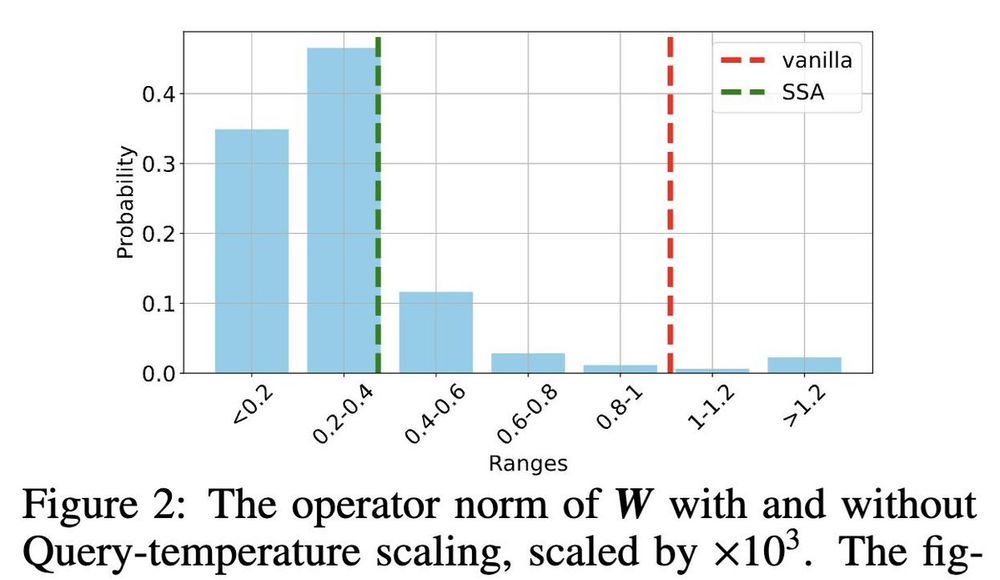

Why is this useful? Consider the tokens "Hinton" and "Scientist". These have high cosine similarity but we wish to assign them different spikiness levels. We show that this is provably difficult to achieve for vanilla attention, namely its weights have to grow much larger compared to our method.

21.11.2024 22:19 — 👍 0 🔁 0 💬 1 📌 0

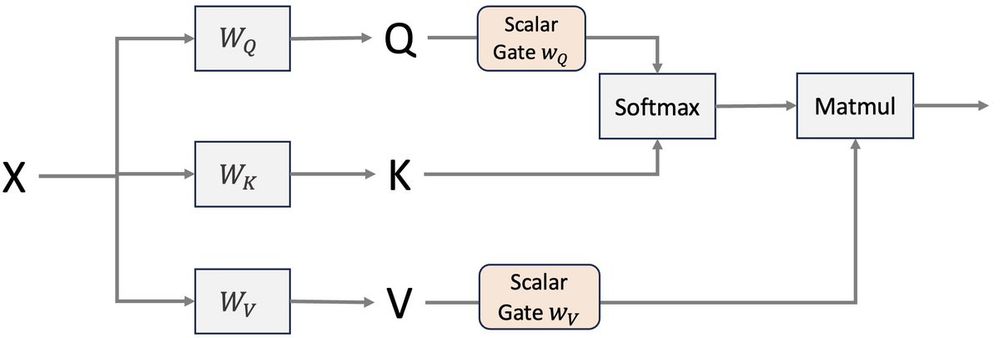

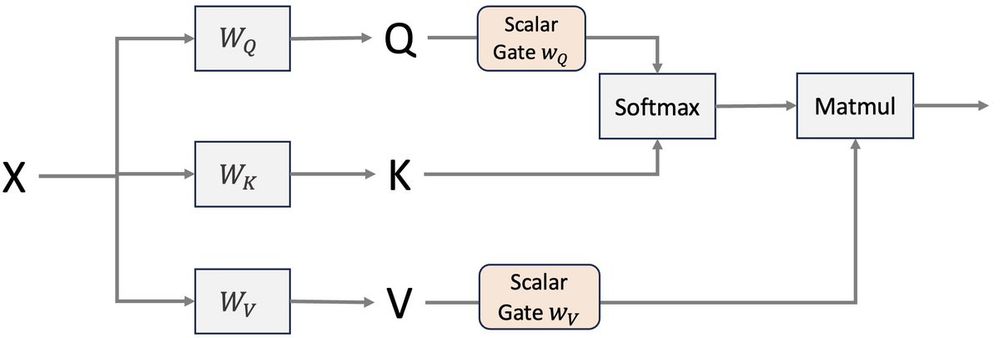

The method adds a temperature-scaling (scalar gating) after K/Q/V embeddings and before softmax. Temperature is a function of the token embedding and its position. Notably, this can be done by - fine-tuning rather than pretraining - using very few additional parameters

21.11.2024 22:19 — 👍 0 🔁 0 💬 1 📌 0

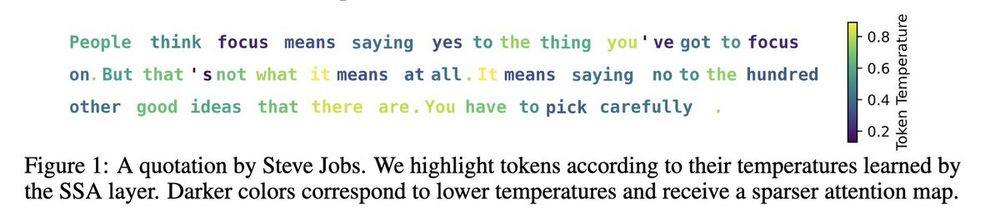

The intuition is that specific tokens like "Hinton" should receive a spikier attention map compared to generalist tokens like "Scientist". Learning token-dependent temperatures with this results in the colormap above where (arguably) more specific words receive low temperatures.

21.11.2024 22:19 — 👍 1 🔁 0 💬 1 📌 0

Hello world! Unfortunately, my first post happens to be a paper (thre)ad 😊: Our “Selective Attention” is a simple but effective method that dynamically adjusts the sparsity of the attention maps through temperature scaling: arxiv.org/pdf/2411.12892 (#neurips2024)

21.11.2024 22:19 — 👍 7 🔁 0 💬 1 📌 0

Research scientist at Google

Building generative models for high-dimensional science and engineering.

Assistant prof. @CarnegieMellon & affiliated faculty @mldcmu, previously instructor @NYU_Courant, PhD jointly @Harvard and @MIT

https://nmboffi.github.io

Assistant Professor of CS at UCLA

Machine learning, Optimization, Data-efficient learning

Professor at IST Austria | Machine learning, information theory | Prev: Stanford, EPFL | opinions my own

Associate professor @ Cornell Tech

Assistant Prof @ UC Riverside. Research on Efficient ML, RL, and LLMs. CS PhD @ UW Madison.

yinglunz.com

https://willett.psd.uchicago.edu/

Worah Family Professor, University of Chicago

National Institute for Theory and Mathematics in Biology (https://www.nitmb.org/)

Institute for AI in the Sky (SkAI, https://skai-institute.org/)

Assoc. Prof. ECE @UCSBEngineering | building p-bits for probabilistic computing | e^2-hardware for AI | chess fan, ~2300 Bullet @LiChess 😎 | opinions my own

Professor of Applied Mathematics at UCLA. Interested in deep learning and optimization.

asst. professor @ Technion. former postdoc @ Stanford. machine learning. statistics.

https://sites.google.com/view/yaniv-romano/

Research Scientist @ Google DeepMind. Physics of learning, ML / AI, condensed matter. Prev Ph.D. Physics @ UC Berkeley.

Chief scientist at Robust Intelligence and professor at Yale (on leave)

Love Physics, Maths, Machine learning, Computer Science but above all playing 🎸🎵 Happy dad 👧 👧. Also professor @ EPFL. Views are my own.

Sr Research Scientist at Google DeepMind, Toronto. Member, Mila. Adjunct, McGill CS. PhD Machine Learning & MASt Applied Math (Cambridge), BSc Math (Warwick). gkdz.org

Professor and Head of Algorithms, Data Structures and Foundations of Machine Learning at Computer Science, Aarhus University

PhD student at USC | prev. intern at Amazon

AI for science, ML theory

theory of neural networks for natural and artificial intelligence

https://pehlevan.seas.harvard.edu/