Three panel thing. In the left panel we use error bars. In the second, we take statistical significance as the biggest number but still have error bars. In LLM science, we just have the biggest number

What if we did a single run and declared victory

23.10.2025 02:28 — 👍 317 🔁 65 💬 13 📌 9

📰 I really enjoyed writing this article with @thetransmitter.bsky.social! In it, I summarize parts of our recent perspective article on neural manifolds (www.nature.com/articles/s41...), with a focus on highlighting just a few cool insights into the brain we've already seen at the population level.

04.08.2025 18:45 — 👍 54 🔁 15 💬 1 📌 1

YouTube video by Gerstner Lab

From Spikes To Rates

Is it possible to go from spikes to rates without averaging?

We show how to exactly map recurrent spiking networks into recurrent rate networks, with the same number of neurons. No temporal or spatial averaging needed!

Presented at Gatsby Neural Dynamics Workshop, London.

08.08.2025 15:25 — 👍 60 🔁 17 💬 2 📌 1

I wonder, where would be a good place to do modeling and chat with many people that study different species or do comparative studies? (asking for a friend)

16.07.2025 22:13 — 👍 15 🔁 3 💬 2 📌 0

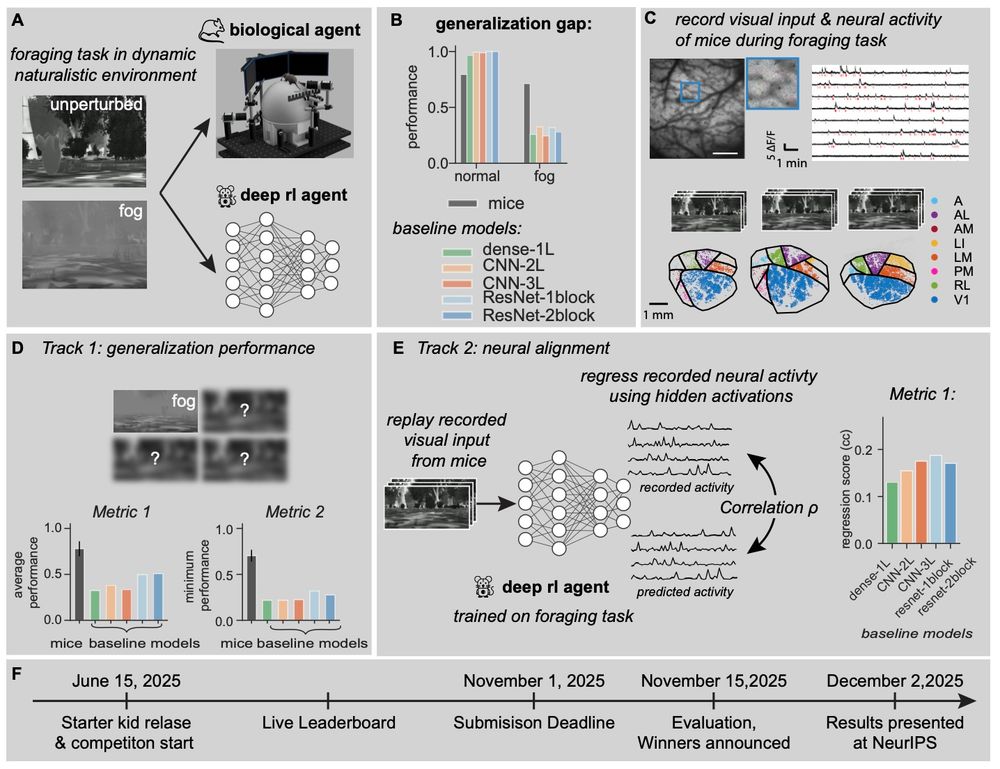

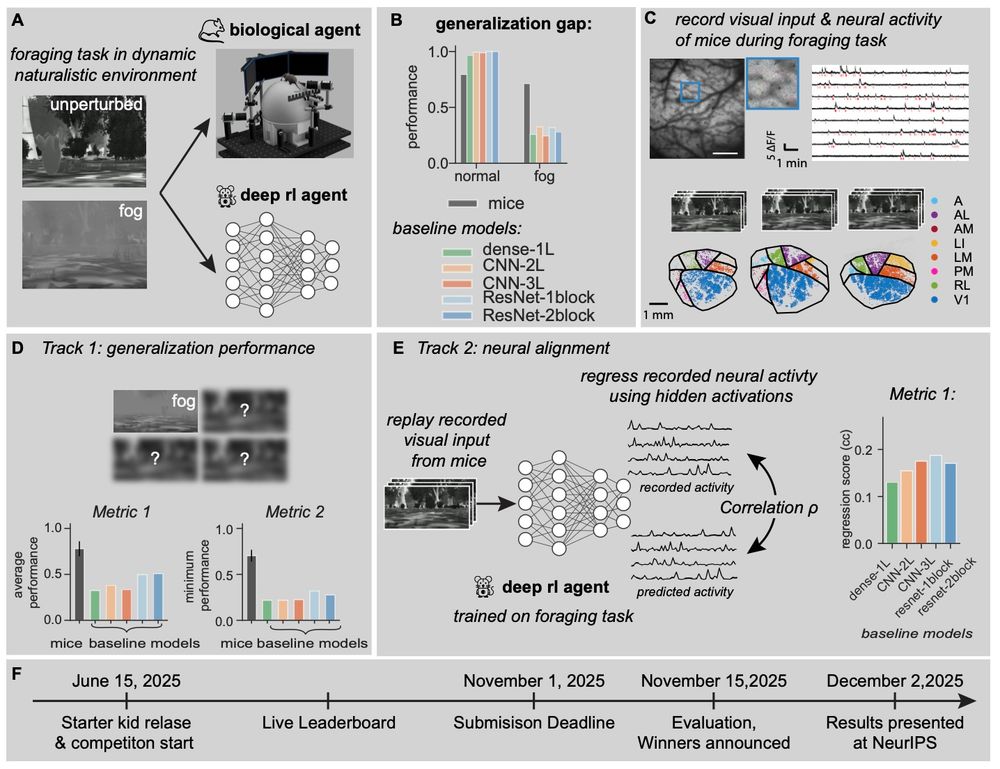

A summary figure for a NeurIPS competition where AI agents compete with mice in a visual foraging task.

Mice learn these tasks and are robust to perturbations like fog. Now, we invite you all to make AI agents to beat mice.

We present our #NeurIPS competition. You can learn about it here: robustforaging.github.io (7/n)

10.07.2025 12:22 — 👍 39 🔁 7 💬 1 📌 1

Simple low-dimensional computations explain variability in neuronal activity

Our understanding of neural computation is founded on the assumption that neurons fire in response to a linear summation of inputs. Yet experiments demonstrate that some neurons are capable of complex...

This paper carefully examines how well simple units capture neural data.

To quote someone from my lab (they can take credit if they want):

Def not news to those of us who use [ANN] models, but a good counter argument to the "but neurons are more complicated" crowd.

arxiv.org/abs/2504.08637

🧠📈 🧪

25.06.2025 15:49 — 👍 64 🔁 13 💬 3 📌 2

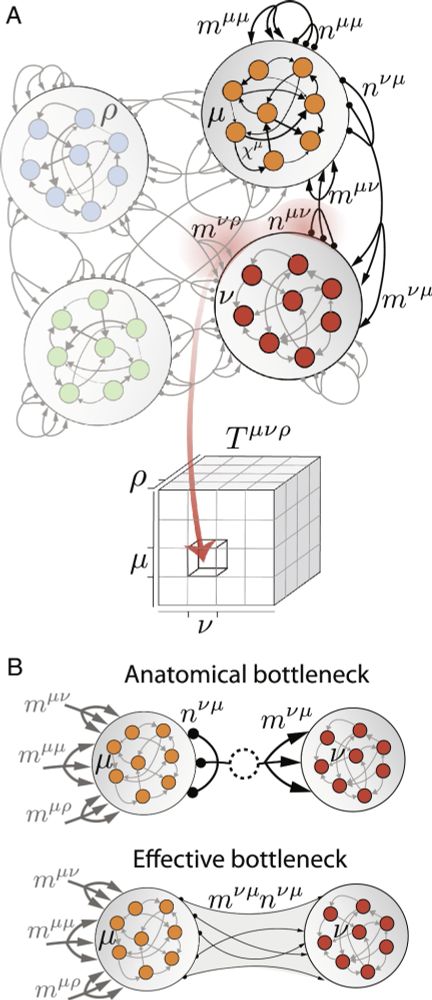

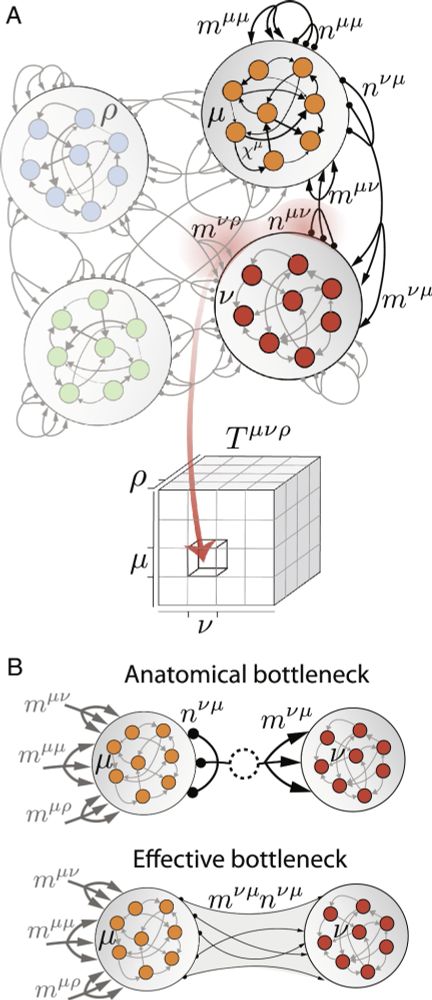

Structure of activity in multiregion recurrent neural networks | PNAS

Neural circuits comprise multiple interconnected regions, each with complex dynamics.

The interplay between local and global activity is thought to...

(1/23) In addition to the new Lady Gaga album "Mayhem," my paper with Manuel Beiran, "Structure of activity in multiregion recurrent neural networks," has been published today.

PNAS link: www.pnas.org/doi/10.1073/...

(see dclark.io for PDF)

An explainer thread...

07.03.2025 19:39 — 👍 87 🔁 18 💬 2 📌 0

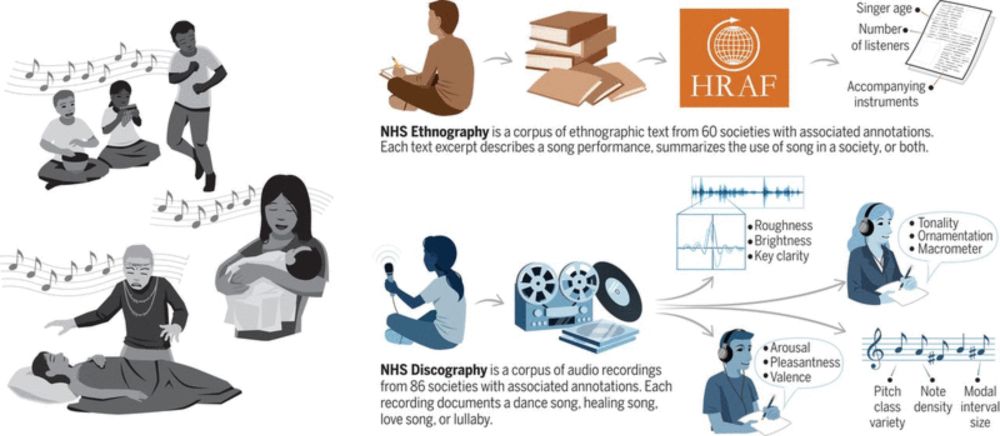

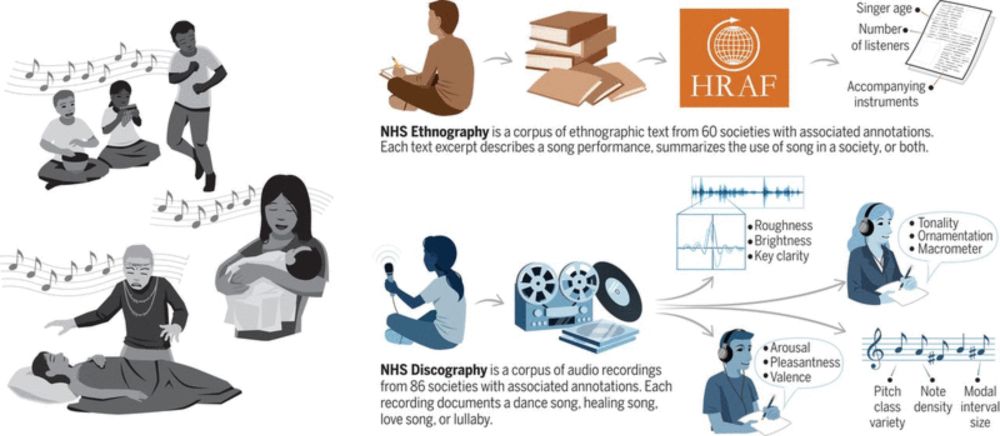

Universality and diversity in human song

Songs exhibit universal patterns across cultures.

Music is universal. It varies more within than between societies and can be described by a few key dimensions. That’s because brains operate by using the raw materials of music: oscillations (brainwaves).

www.science.org/doi/10.1126/...

#neuroscience

23.06.2025 11:38 — 👍 39 🔁 20 💬 4 📌 1

1/N

How do neural dynamics in motor cortex interact with those in subcortical networks to flexibly control movement? I’m beyond thrilled to share our work on this problem, led by Eric Kirk @eric-kirk.bsky.social with help from Kangjia Cai!

www.biorxiv.org/content/10.1...

23.06.2025 12:28 — 👍 70 🔁 22 💬 3 📌 1

Thrilled to announce I'll be starting my own neuro-theory lab, as an Assistant Professor at @yaleneuro.bsky.social @wutsaiyale.bsky.social this Fall!

My group will study offline learning in the sleeping brain: how neural activity self-organizes during sleep and the computations it performs. 🧵

23.06.2025 15:55 — 👍 408 🔁 48 💬 61 📌 7

screenshot of biorxiv paper titled "Neuromorphic hierarchical modular reservoirs", authors Filip Milisav, Andrea I Luppi, Laura E Suárez, Guillaume Lajoie, Bratislav Misic

aside from this being a v cool paper I also want to congratulate the authors on the incredible SNR achieved in the title via a complete absence of filler words

Neuromorphic hierarchical modular reservoirs

www.biorxiv.org/content/10.1...

22.06.2025 17:13 — 👍 25 🔁 3 💬 3 📌 0

New preprint! 🧠🤖

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

06.06.2025 17:40 — 👍 52 🔁 23 💬 2 📌 8

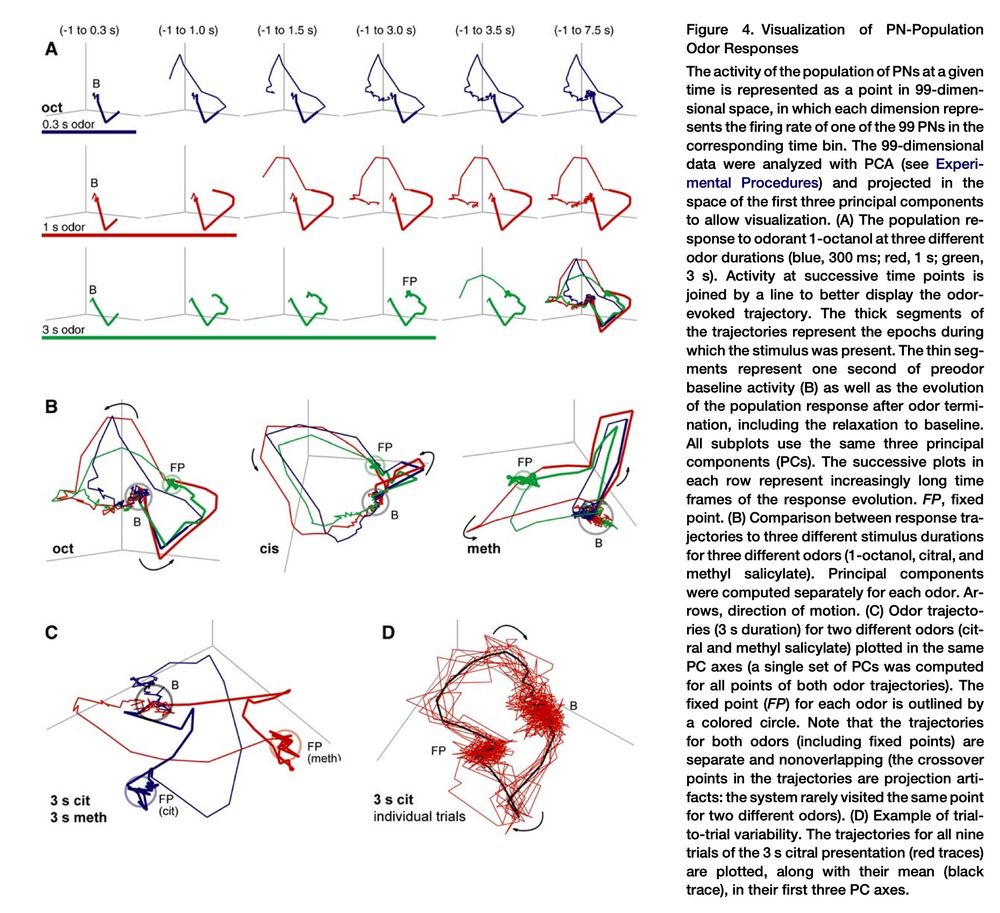

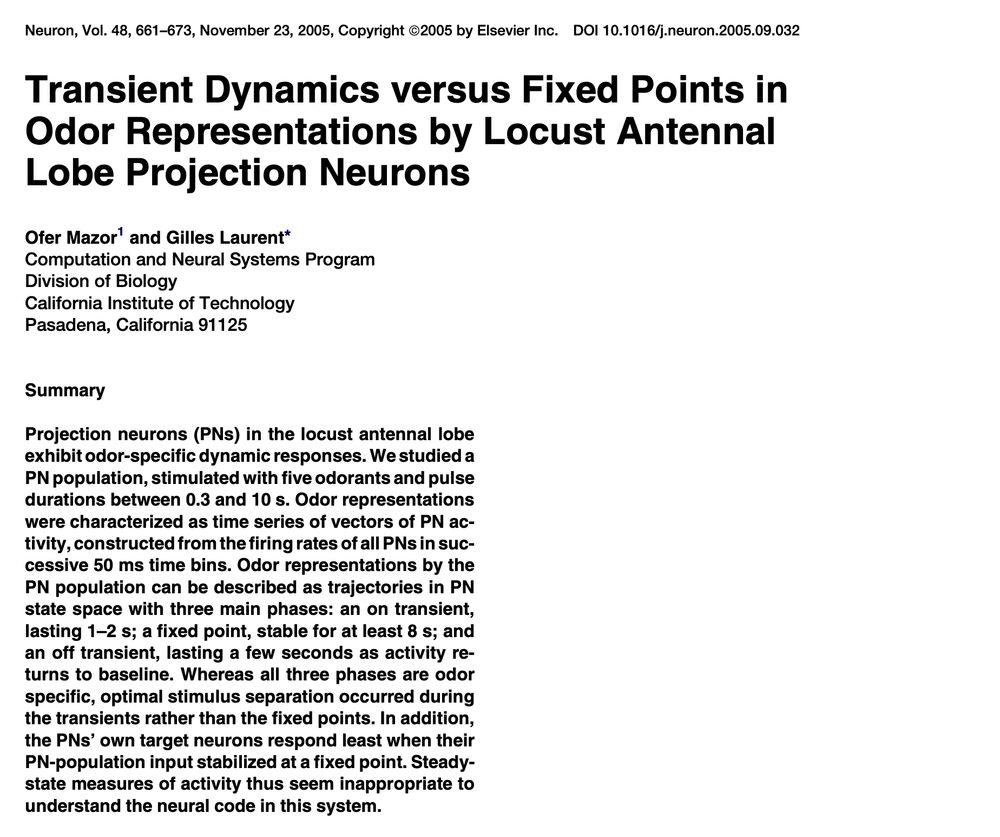

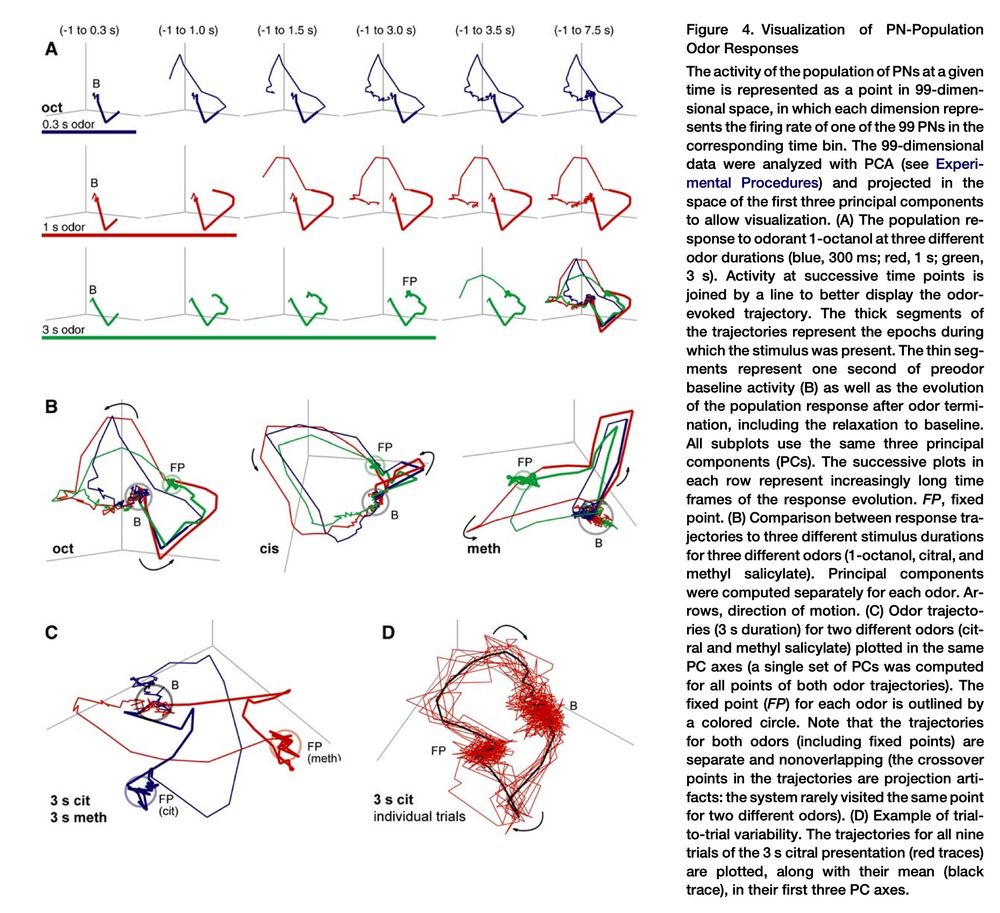

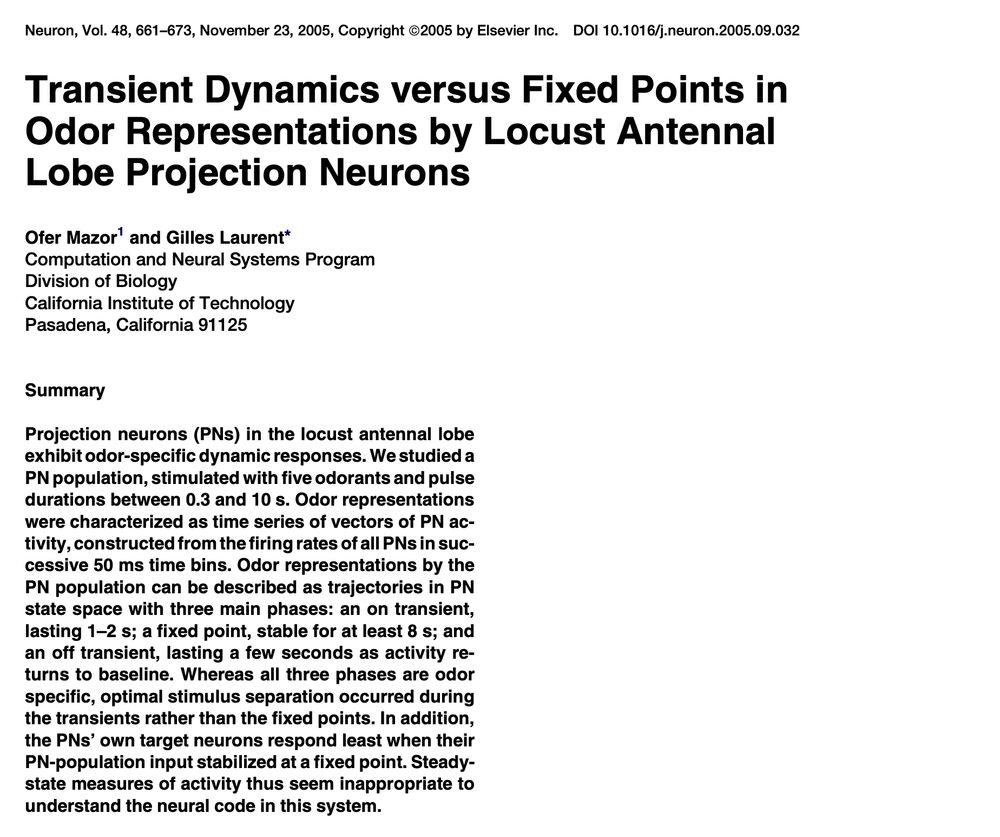

Curious about the history of the manifold/trajectory view of neural activity.

My own first exposure was Gilles Laurent's chapter in "21 Problems in Systems Neuroscience", where he cites odor trajectories in locust AL (2005). This was v inspiring as a biophysics student studying dynamical systems...

21.02.2025 19:11 — 👍 116 🔁 18 💬 10 📌 1

I think the biological evidence points to this not being the case. We can see instances where synapses literally undergo a form of reverse plasticity, e.g. see here: www.cell.com/trends/cogni...

I think it cannot be assumed that we never wipe memories from our brains completely!

24.01.2025 23:10 — 👍 10 🔁 1 💬 1 📌 0

How a neuroscientist solved the mystery of his own long COVID

How a neuroscientist solved the mystery of his own #LongCovid and led to a new scientific discovery. Inspiring story.

Thank you for sharing your journey @jeffmyau.bsky.social

www.youcanknowthings.com/how-one-neur...

08.01.2025 16:53 — 👍 150 🔁 44 💬 6 📌 3

RL promises "systems that can adapt to their environment". However, no RL system that I know of actually fulfills anything close to this goal, and, furthermore, I'd argue that all the current RL methodologies are actively hostile to this goal. Prove me wrong.

30.12.2024 22:41 — 👍 53 🔁 4 💬 10 📌 1

sinthlab EoY social! I'm grateful every day that I get to work with such a kind and intelligent group of individuals.

@mattperich.bsky.social @oliviercodol.bsky.social @anirudhgj.bsky.social

12.12.2024 19:24 — 👍 4 🔁 1 💬 0 📌 1

Neural Attention Memory Models are evolved to optimize the performance of Transformers by actively pruning the KV cache memory. Surprisingly, we find that NAMMs are able to zero-shot transfer their performance gains across architectures, input modalities and even task domains! arxiv.org/abs/2410.13166

10.12.2024 01:41 — 👍 57 🔁 9 💬 1 📌 0

Thrilled to share a new preprint exploring the spatial organization of multisensory convergence in the mouse isocortex! 🧠🎉 Even more special as it builds on work I started during my undergrad, a lifetime ago 👨🦳

Check it out here: www.biorxiv.org/content/10.1...

10.12.2024 22:41 — 👍 13 🔁 4 💬 1 📌 0

I’m speechless. Truly an art and despite being only four minutes long, I learned a lot!

17.11.2024 21:54 — 👍 5 🔁 2 💬 0 📌 0

Advancing the frontiers of basic science through grantmaking, research and public engagement. Sign up for our newsletter: simonsfoundation.org/newsletter

UCSD professor of neurobiology and neurosciences

Neuroscientist. Professor at Harvard University.

Studies the neural mechanisms underlying decision-making and learning. Dopamine.

Assistant Prof. Princeton Neuroscience Institute. Neural dynamics, hormones, behavioral quantification. Understanding the world, one fighting mouse at a time.

www.falknerlab.com

comp neuro prof @ Princeton

brains, machine learning, & postmodern angst

pillowlab.princeton.edu

Assistant Professor of Physics & Neuroscience at Princeton University. Studying how C. elegans nervous system processes information to generate actions.

Neuroscientist @Janelia, HHMI

www.ahrenslab.org

Neuroscientist at University College London (www.ucl.ac.uk/cortexlab). Opinions my own.

Computational neuroscientist @princetonneuro.bsky.social deciphering natural and advancing artificial intelligence.

Neuroscientist studying neural circuits underlying perception and behaviour. Professor at the Sainsbury Wellcome Centre, London

Neuroscientist at CSHL. Interests: neuroAI, molecular connectomics, & cortical circuits. Co-founder of Cosyne and NAISys meetings.

Neuroscientist. Long-form opinions at https://markusmeister.com.

Computational neuroscientist interested in cognition, computation, memory, decision making. Studying the human brain.

Computational neuroscientist, Assistant Professor at @sinaibrain.bsky.social, Mount Sinai. Views are my own. New York, NY. He/him. schafferlab.com

Neuroscientist @ CNRS & Aix-Marseille University, France | SCGB TTI fellow | ERC StG 2024 | fannycazettes.com

Neuroscientist at the Allen Institute for Neural Dynamics | UW NBio | prev: Champalimaud & UCSD

Neuroscientist and painter | group leader at SIDB, University of Edinburgh | SCGB BTI Fellow | Prediction and Plasticity lab

Neuroscientist. Assistant Professor at Scripps Research.

https://www.ran-lab.org