Huge news out of the UK 🇬🇧

www.reuters.com/world/uk/sau...

@jsrailton.bsky.social

Chasing digital badness. Senior Researcher at Citizen Lab, but words here are mine.

Spyware found on Belarusian journalist's phone shortly after interrogation by security services. Reporters w/o Borders: Spyware likely installed while the journalist was detained. The same infection method recently used in Kenya + Serbia. H/t @jsrailton.bsky.social

therecord.media/spyware-bela...

And I forgot to mention that Russia, too, has a history of implanting spyware onto devices when people are in custody.

Absolutely a growing trend.

Shoutout to the teams at @rsf.org and Resident.ngo for their work on this project.

The full report is a great read.

Belarusian KGB put spyware on phones of detained journalist.

Growing list of cases where authoritarian regimes use detention to implant spyware on phones:

🦠Belarus

🦠Kenya

🦠Serbia

And likely plenty more.

Important investigation & reminder that dictators don't always need zero-days.

1/ Yesterday’s Q2-Q3 Adversarial Threat Report by Meta was interesting in many ways. For us @citizenlab.ca, it was a blast from the past.

For the first time, Meta’s investigators attributed what in 2019 we had named Endless Mayfly - a relentless, sophisticated influence op targeting Iran’s enemies.

In 2019, we @citizenlab.ca published an investigation into a disinfo / influence operation we called "Endless Mayfly", and which we attributed at the time to an "Iran-aligned entity"

citizenlab.ca/2019/05/burn...

Now, Meta's latest adversarial threat report shows we were spot on 👇

Fascinating theory.

25.11.2025 09:44

3/ Everybody wants privacy in the bathroom.

There's even a whole #BringBackDoors campaign.

Yet I keep accidentally booking into hotel rooms that seem determined to reject this basic human comfort.

Great video by @kendragaylord.bsky.social

www.youtube.com/watch?v=QFPG...

A twin bed hotel room with no bathroom door?

What is going on?

Hotel toilet privacy is disappearing.

Glass doors.

Or no door.

Or a big window into the room.

Or frosted glass so that the light spills out.

Who is asking for this?

Part of Amazon AWS went down back in October and a lot of things broke.

Now something is up with Cloudflare...

Now is a good time to remember that a lot of eggs are in a handful of baskets.

Time again to have those big conversations about what resiliency looks like.

Massive global issue with Cloudflare.

App not working? Can't login? Probably why.

SO much of the internet depends on Cloudflare to stay online.

But what happens when Cloudflare itself goes down?

Well, you're watching it.

9. You can find the orders on the wonderful Court Listener

Permanent injunction: storage.courtlistener.com/recap/gov.us...

Order resolving objections: storage.courtlistener.com/recap/gov.us...

Final judgement: storage.courtlistener.com/recap/gov.us...

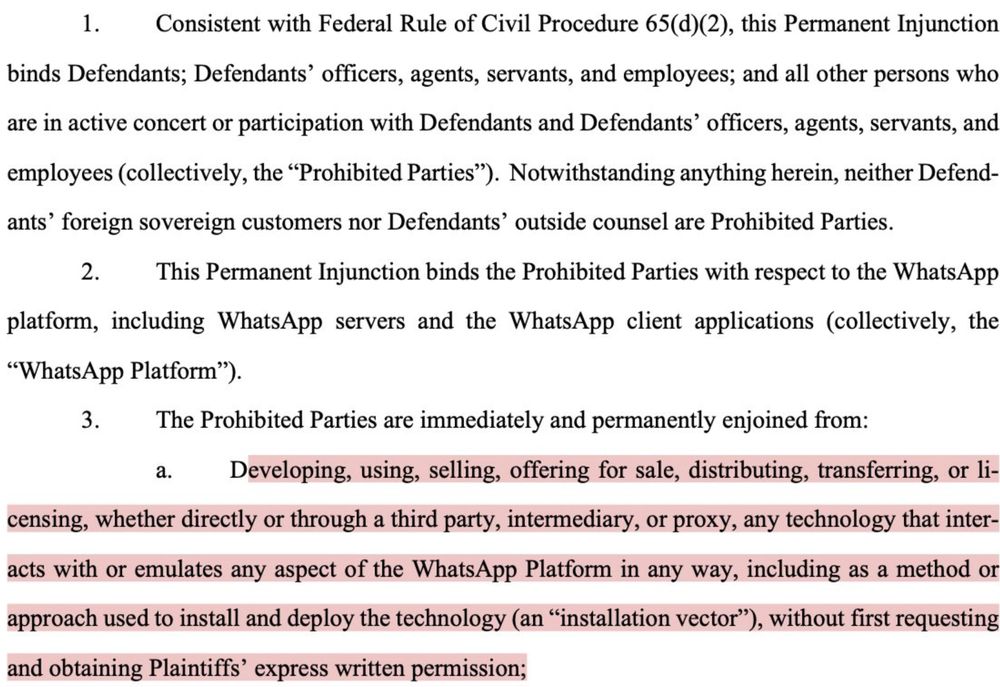

8. Big picture: NSO has made some risky bets around the US & landed some comeback coverage.

But the court order banning hacking WhatsApp is immediately operationally crippling.

NSO Group's investors, new owner & CEO are all probably having a very nasty Wednesday evening.

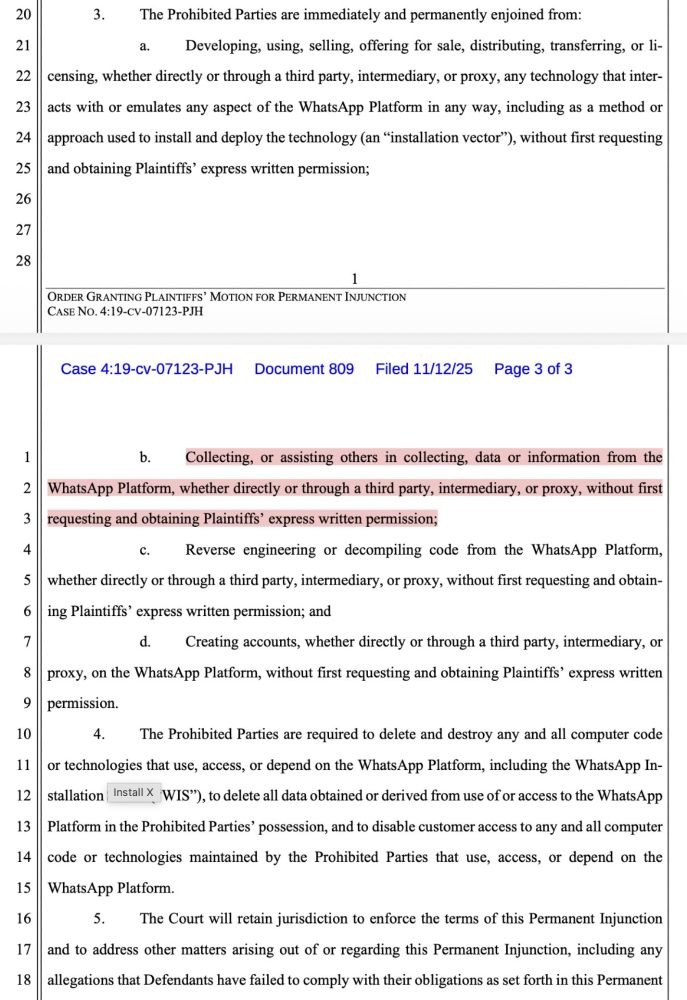

7. NSO: hey court, please clarify that our foreign gov customers don't have to delete WhatsApp data.

Court: ok we will tweak this a little bit, but beyond that, nope.

Impact: foreign govs can keep previously-exfiltrated WhatsApp data.

But they lose NSO's support & NSO must delete its own copies

6. NSO also said: hey don't prohibit us from collecting data from Pegasus spyware infected phones... if we didn't do it by accessing WhatsApp servers.

Court: overruled.

Ouch.

5. NSO made a series of objections to the proposed injunction.

They floated Pegasus being used in the future in the US "in a time of domestic crisis"

And asked court for a 'sweeping' carve out.

Court: overruled.

4. I believe NSO's core business requires constantly illegally hacking American companies' products.

The music might have kept playing as long as they kept far outside of the US.

But then they made a big bet on trying to get into the US.

And now they are in a pickle.

3. But it's hard to make a competitive spyware product if you are legally forbidden from hacking the world's most popular encrypted messaging app.

And since NSO just made big moves into the US, they'll have a hard time arguing that US law doesn't apply.

2. NSO Group has been on a pre-comeback tour of sorts. New ownership. New CEO.

All in the service, I believe, of getting Pegasus into the US market.

And getting out of a truly dire financial pickle.

www.wsj.com/tech/israeli...

CRUSHING BLOW TO NSO: 🇺🇸Court permanently bans Pegasus spyware-maker from targeting WhatsApp

🚫Must destroy tools exploiting WhatsApp

🚫Stop future development of WA targeting

Foreign gov customers exempt from data deletion but...

🚫NSO is barred from helping them hack WA. 1/

3/ I just realized I forgot to include a link to the source filing!

It comes from the WhatsApp v. NSO case and reflects NSO Group's efforts to get out from under a permanent injunction to stop hacking WhatsApp users.

storage.courtlistener.com/recap/gov.us...

What used to be bugs and informants is now #spyware. Bringing together victims of #Stasi surveillance and #spyware to discuss: How can we protect #freedom in the digital age?

🗓️ November 12, 18:30

#BerlinFreedomWeek

Register here 👇

www.berlin-freedom-week.com/en/event/sta...

A firm that sells extraordinarily powerful phone hacking technology that has been demonstrably connected to widespread harms worldwide, including gruesome murder, and was properly sanctioned and held liable because of it

....is now in the hands of these people.

That's Bad News, everyone.

Bringing NSO Group out of the cold would signal to the rest of the spyware industry that even the most notorious mercenary spyware company..

...with a history of harming the US.

...and a mountain of abuses..

Can get a free pass.

It would defang US efforts to curb proliferation & bad behavior.

8/ I believe NSO does not change.

They've churned through countless lobbyists to persuade you that they are turning over a new leaf

But in the end it's always the same story.

Activists, elections, politicians, dissidents getting their lives turned upside down.

Story

www.wsj.com/tech/israeli...

7/ Even in Trump 1, the admin was concerned about Pegasus proliferation.

And in 2021 came a clear-eyed assessment that NSO was harming US national security and foreign policy objectives.

What followed? Entity listing, visa bans, and an executive order on spyware. Plus congressional action..

6/ Today NSO desperately wants to be relieved of the consequences of their own choices.

Their 'secret' tech keeps getting discovered.

They've lost in American court.

Their valuation cratered.

They're scandal-ridden.

Don't believe the spin. Now, I think they want a bailout.

5/ NSO doesn't just help foreign governments hack American companies.

They scoff at American law.

Don't take my word for it.

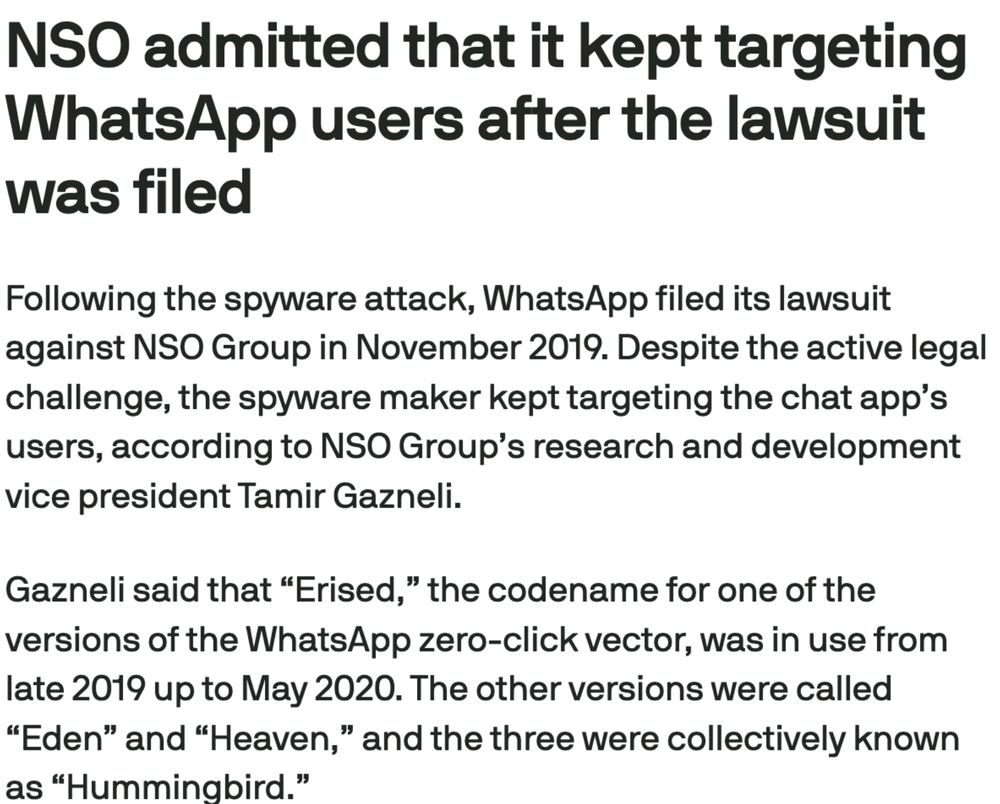

After getting sued for breaking the law and hacking WhatsApp users.. they kept doing it.

By @lorenzofb.bsky.social

techcrunch.com/2025/05/30/e...

4/ NSO's business model: hacking 🇺🇸American companies.

NSO sources vulnerabilities in US products.

Then weaponizes them to fuel Pegasus hacking by foreign governments.

Diminishing the value of American tech & making us all less safe.