🚀 Excited to share REPPO, a new on-policy RL agent!

TL;DR: Replace PPO with REPPO for fewer hyperparameter headaches and more robust training.

REPPO, led by @cvoelcker.bsky.social, will be presented at ICLR 2026. How does it work? 🧵👇

13.02.2026 19:28 — 👍 24 🔁 10 💬 1 📌 0

Great resource!

This is especially important for people starting their research careers.

Getting to know different research communities is really important! Technical insight is crucial, but science is ultimately a social endeavor -- understanding and communicating with your peers is key.

09.01.2026 21:24 — 👍 3 🔁 1 💬 1 📌 0

Technology and Social Isolation: From Cars to “AI”


As we in the US gather with our families this weekend, it's a good time to consider the ways that technology brings us together—but also keeps us apart. In this blog post, I tell a story about how technology may have tended to increase social isolation.

aaronhertzmann.com/2025/10/26/i...

26.11.2025 21:48 — 👍 7 🔁 5 💬 0 📌 0

Breaking: we are releasing SYNTH, a fully synthetic generalist dataset for pretraining, and two new SOTA reasoning models trained exclusively on it. Despite having seen only 200 billion tokens, Baguettotron is currently best-in-class in its size range. pleias.fr/blog/blogsyn...

10.11.2025 17:30 — 👍 182 🔁 33 💬 3 📌 18

Session this afternoon (in 30 minutes)!!

Poster 153 — see you there!

22.10.2025 23:53 — 👍 2 🔁 0 💬 0 📌 0

I wrote a notebook for a lecture/exercise on image generation with flow matching (FM). The idea is to use FM to render images composed of simple shapes from their attributes (type, size, color, etc.). Not super useful, but fun and easy to train!

colab.research.google.com/drive/16GJyb...

Comments welcome!
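The notebook itself isn't reproduced here, but as a rough illustration of the training objective, below is a minimal pure-Python sketch of conditional flow matching with the linear interpolation path x_t = (1 - t) * x0 + t * x1 (x0 is Gaussian noise, x1 is a data sample), whose velocity target is x1 - x0. The velocity-model interface `model(x_t, t, cond)` and all function names are hypothetical; in the notebook's setting `cond` would presumably encode the shape attributes (type, size, color), but that is an assumption. To keep it checkable without a neural network, the demo plugs in an oracle velocity field for a single target.

```python
import random

def interpolate(x0, x1, t):
    """Linear probability path between noise x0 and data x1: x_t = (1 - t) * x0 + t * x1."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]

def target_velocity(x0, x1):
    """Time derivative of the linear path, i.e. the regression target x1 - x0."""
    return [b - a for a, b in zip(x0, x1)]

def fm_loss(model, x1, cond, rng):
    """Single-sample Monte Carlo estimate of ||v(x_t, t, cond) - (x1 - x0)||^2, averaged over dims."""
    x0 = [rng.gauss(0.0, 1.0) for _ in x1]  # Gaussian noise sample
    t = rng.random()                        # t ~ U[0, 1)
    xt = interpolate(x0, x1, t)
    pred = model(xt, t, cond)               # hypothetical velocity model
    tgt = target_velocity(x0, x1)
    return sum((p - g) ** 2 for p, g in zip(pred, tgt)) / len(x1)

def sample(model, cond, dim, steps, rng):
    """Generate by integrating dx/dt = v(x, t, cond) from t = 0 (noise) to t = 1 with forward Euler."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        v = model(x, t, cond)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

if __name__ == "__main__":
    rng = random.Random(0)
    target = [0.2, -1.0, 3.5]  # stand-in for a flattened "image"
    # Oracle field for a single data point (cond is the target itself): points straight at it.
    oracle = lambda x, t, c: [(ci - xi) / (1.0 - t) for xi, ci in zip(x, c)]
    print(sample(oracle, target, dim=3, steps=50, rng=rng))
    print(fm_loss(oracle, target, target, rng))
```

With the oracle field v(x, t) = (x1 - x) / (1 - t), the last Euler step lands on x1 and the loss is zero up to rounding, which makes a quick sanity check before swapping in a trained network.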

27.06.2025 16:52 — 👍 41 🔁 8 💬 2 📌 0

Oh nvm I read “our ICCV paper…” haha

20.06.2025 20:26 — 👍 1 🔁 0 💬 0 📌 0

Are the results out? I see nothing in OpenReview :-(

20.06.2025 20:21 — 👍 1 🔁 0 💬 2 📌 0

CVPR 2025 Workshop List

For folks attending CVPR: is there a website where I can see the list of workshops, their location AND time? Day and time are empty when I access cvpr.thecvf.com/Conferences/...

11.06.2025 04:04 — 👍 1 🔁 0 💬 0 📌 0

I will be in Nashville until Saturday for CVPR'25 \o/

DM if you want to meet!

09.06.2025 19:53 — 👍 7 🔁 0 💬 0 📌 0

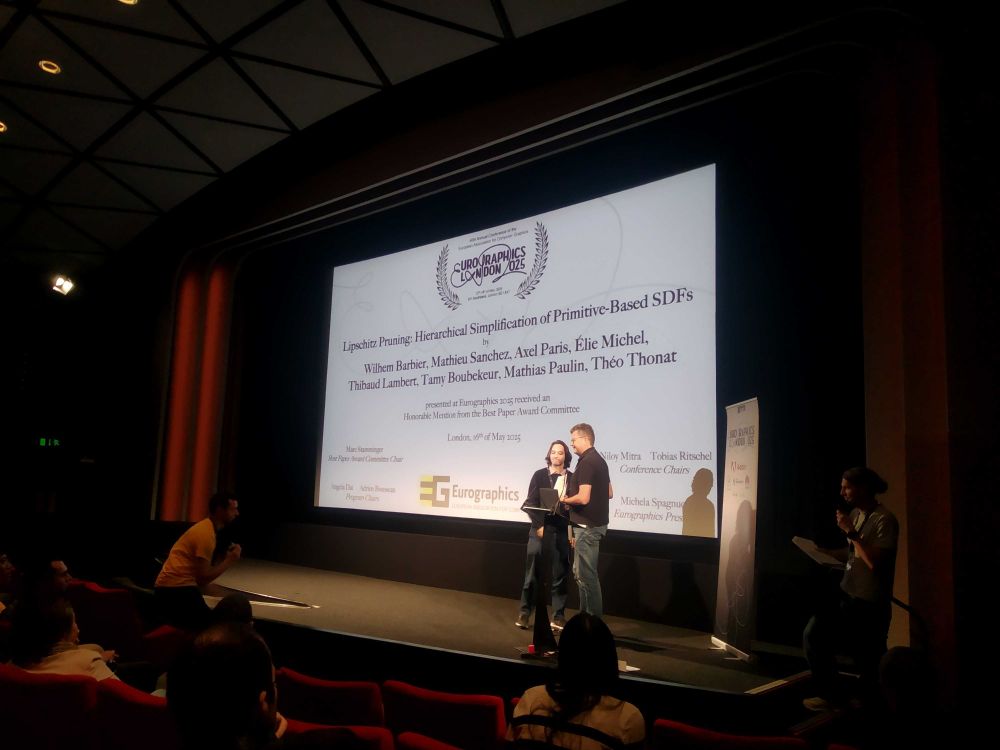

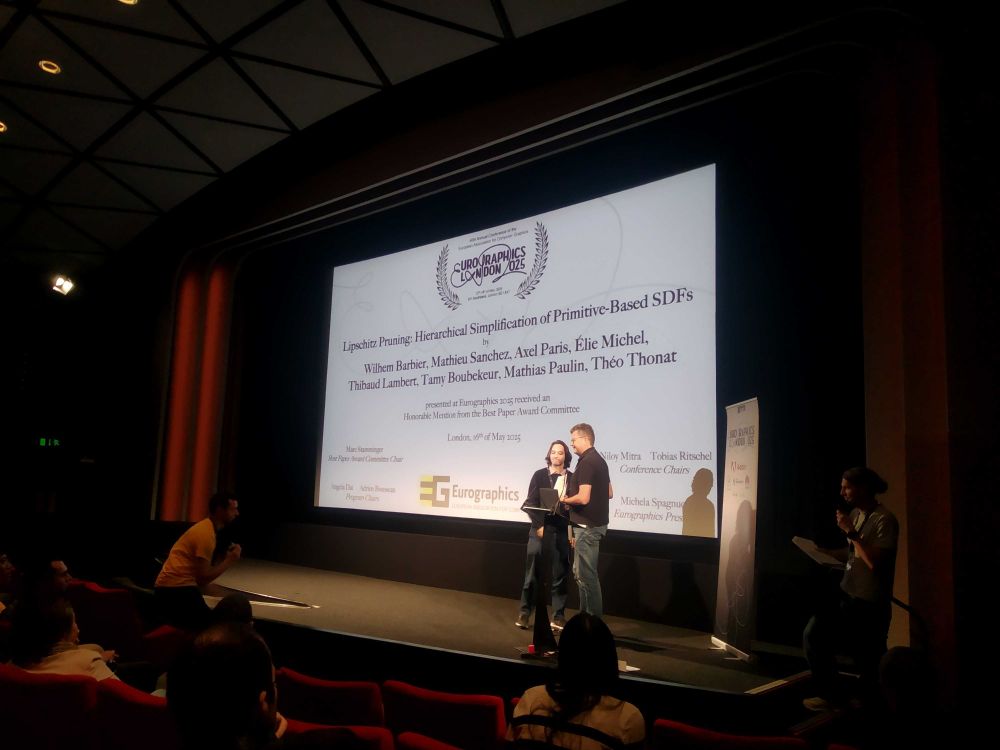

Wilhem receiving the award on stage

🏅Honored to have been awarded at #Eurographics25 for our paper on #LipschitzPruning to speed up SDF rendering!

👉 The paper's page: wbrbr.org/publications...

Congrats to @wbrbr.bsky.social, M. Sanchez, @axelparis.bsky.social, T. Lambert, @tamyboubekeur.bsky.social, M. Paulin and T. Thonat!

19.05.2025 09:54 — 👍 25 🔁 4 💬 0 📌 0

GitHub - JelteF/PyLaTeX: A Python library for creating LaTeX files


I usually write a Python script that prints results from a .npy file as a .tex table. It is also an easy way to share results throughout the project, so I consider it part of the codebase. I've heard that people who are more serious about this practice use something like github.com/JelteF/PyLaTeX
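A minimal sketch of such a script, assuming the results form a 2D array (rows are methods, columns are metrics). With NumPy installed, `np.load(path).tolist()` turns a saved `.npy` array into the nested list used here. It uses plain string formatting rather than PyLaTeX, and the `\toprule`/`\midrule`/`\bottomrule` rules assume `\usepackage{booktabs}` in the preamble. The function name, the `Method` header, and the example metric names and numbers are hypothetical.

```python
def latex_table(values, row_names, col_names, fmt="{:.2f}"):
    """Format a 2D list of numbers as a booktabs-style LaTeX tabular."""
    if len(values) != len(row_names):
        raise ValueError("need exactly one row name per row")
    if any(len(row) != len(col_names) for row in values):
        raise ValueError("every row needs one value per column")
    lines = [
        "\\begin{tabular}{l" + "c" * len(col_names) + "}",  # one left column + centered metrics
        "\\toprule",
        " & ".join(["Method", *col_names]) + " \\\\",
        "\\midrule",
    ]
    for name, row in zip(row_names, values):
        cells = [fmt.format(v) for v in row]
        lines.append(" & ".join([name, *cells]) + " \\\\")
    lines += ["\\bottomrule", "\\end{tabular}"]
    return "\n".join(lines)

if __name__ == "__main__":
    # Hypothetical results; in practice e.g. np.load("results.npy").tolist()
    results = [[31.42, 0.912], [29.87, 0.884]]
    print(latex_table(results, ["Ours", "Baseline"], ["PSNR", "SSIM"]))
```

Redirecting the output to a file (e.g. `python make_table.py > table.tex`) lets the paper pull it in with `\input{table}`, so regenerating numbers never means retyping them.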

14.05.2025 17:46 — 👍 2 🔁 0 💬 0 📌 0

I started doing it because of that and because of vim, but even on Overleaf it's an advantage.

14.05.2025 00:47 — 👍 0 🔁 0 💬 0 📌 0

I don't use it for things I do alone (notes, presentations, etc.). But for collaborative work it's kind of mandatory. Students will revolt if you use git hahaha

14.05.2025 00:45 — 👍 0 🔁 0 💬 1 📌 0

NeurIPS and SIGGRAPH Asia deadlines are coming up.

Make your life easier: read this thread.

14.05.2025 00:09 — 👍 7 🔁 2 💬 1 📌 0

Let's gooo!!! \o/

Probably my first time visiting Brazil for professional reasons :-)

28.04.2025 19:48 — 👍 4 🔁 0 💬 0 📌 0

What features did you find particularly useful?

I liked asking questions about the code base and the tab completion seems nice, but I've been getting unhelpful suggestions for all the "agentic" stuff.

14.04.2025 23:20 — 👍 1 🔁 0 💬 1 📌 0

By popular demand, we are extending #CVPR2025 coverage to Bluesky. Stay tuned!

27.02.2025 21:07 — 👍 124 🔁 17 💬 5 📌 2

Exciting news! MegaSAM code is out🔥 & the updated Shape of Motion results with MegaSAM are really impressive! A year ago I didn't think we could make any progress on these videos: shape-of-motion.github.io/results.html

Huge congrats to everyone involved and the community 🎉

24.02.2025 18:52 — 👍 74 🔁 17 💬 3 📌 0

*it

23.02.2025 19:50 — 👍 0 🔁 0 💬 0 📌 0

I understand the sentiment, but it is important for people to know that it currently does not reflect the reviewer guidelines at CVPR: cvpr.thecvf.com/Conferences/...

“(…) you should include specific feedback on ways the authors can improve their papers.”

23.02.2025 18:54 — 👍 5 🔁 0 💬 2 📌 0

But is Meta actually cancelling professional fact-checking worldwide? Their response to the Brazilian Supreme Court inquiry said that ending professional fact-checking applied only to the US.

21.02.2025 17:52 — 👍 0 🔁 0 💬 0 📌 0

Late to post, but excited to introduce CUT3R!

An online 3D reasoning framework for many 3D tasks directly from just RGB. For static or dynamic scenes. Video or image collections, all in one!

Project Page: cut3r.github.io

Code and Model: github.com/CUT3R/CUT3R

18.02.2025 17:03 — 👍 34 🔁 6 💬 2 📌 1

Those plots are so cool! \o/

18.02.2025 22:10 — 👍 2 🔁 0 💬 0 📌 0

It’s a plugin for Neovim with some features similar to Cursor; it is open source, and you can configure it to use the model of your choice.

13.02.2025 02:10 — 👍 1 🔁 0 💬 0 📌 0

I am using Avante + vim and I am pretty happy about it

13.02.2025 02:03 — 👍 2 🔁 0 💬 1 📌 0

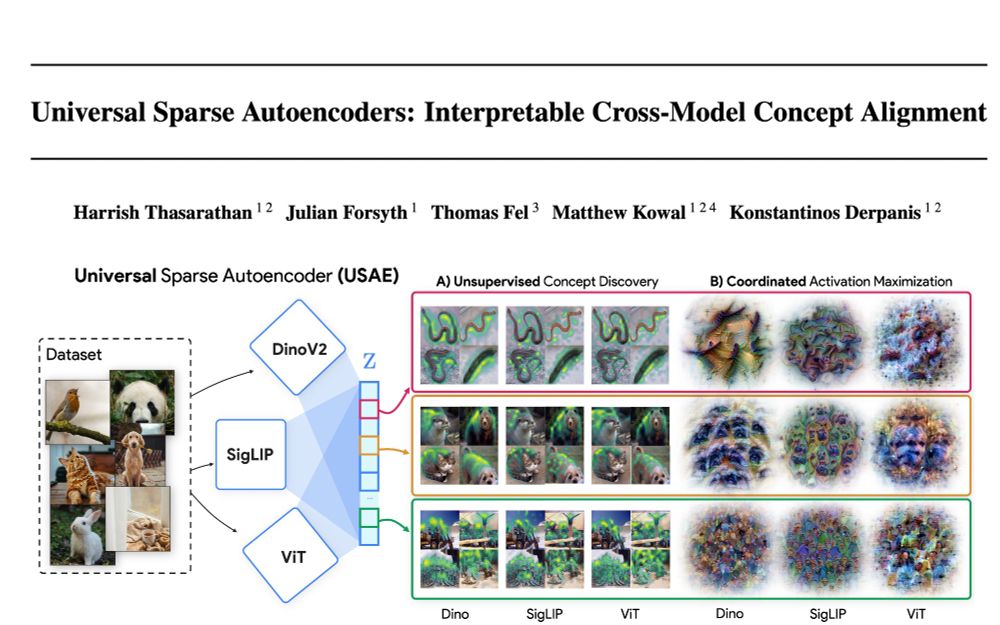

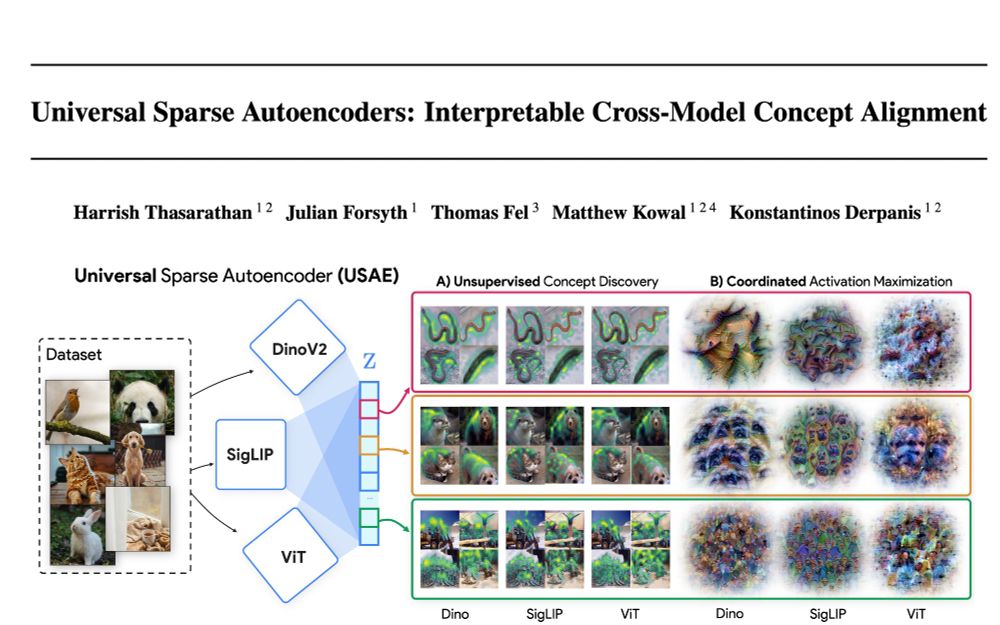

🌌🛰️🔭Wanna know which features are universal vs unique in your models and how to find them? Excited to share our preprint: "Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment"!

arxiv.org/abs/2502.03714

(1/9)

07.02.2025 15:15 — 👍 56 🔁 17 💬 1 📌 5


New paper about pictures: I identify trends in geometric perspective in my own drawings and photos, and compare them to how the original scenes looked. I discuss what these trends might say about art history and vision science. Published in _Art & Perception_. #visionscience

psyarxiv.com/pq8nb

06.02.2025 22:58 — 👍 19 🔁 6 💬 3 📌 0

"𝐑𝐚𝐝𝐢𝐚𝐧𝐭 𝐅𝐨𝐚𝐦: Real-Time Differentiable Ray Tracing"

A mesh-based 3D representation for training radiance fields from collections of images.

radfoam.github.io

arxiv.org/abs/2502.01157

Project co-led by my PhD students Shrisudhan Govindarajan and Daniel Rebain, with co-advisor Kwang Moo Yi

05.02.2025 18:59 — 👍 54 🔁 12 💬 2 📌 1

It would be nice to mention someone and have them receive an e-mail with a subject like "You (Reviewer HjKl) were mentioned in a discussion for Paper 447809 in OpenReview"

03.02.2025 18:31 — 👍 0 🔁 0 💬 0 📌 0

Fortune reporter covering AI

Sharon.goldman@fortune.com

The need for independent journalism has never been greater. Become a Guardian supporter https://support.theguardian.com

🇺🇸 Guardian US https://bsky.app/profile/us.theguardian.com

🇦🇺 Guardian Australia https://bsky.app/profile/australia.theguardian.com

Waiting on a robot body. All opinions are universal and held by both employers and family. ML/NLP professor.

nsaphra.net

AI and Games Researcher at NYU. Head of AI at Nof1.

Assistant Professor of Computer Science at the University of British Columbia. I also post my daily finds on arxiv.

Lead Rendering Engineer at Playground Games working on Fable. Always open for graphics questions or mentoring people who want to get in the industry. I tweet about graphics mostly. Views my own. Blog: https://interplayoflight.wordpress.com/

So far I have not found the science, but the numbers keep on circling me.

Views my own, unfortunately.

Brown Computer Science / Brown University || BootstrapWorld || Pyret || Racket

I'm unreasonably fascinated by, delighted by, and excited about #compsci #education #cycling #cricket and the general human experience.

Assistant Prof @UNIMORE 🇮🇹 Human Visual Attention, Remote Physio, Affective Computing. Former @UNIMI, co-founder @opendot, Milan

https://www.vcuculo.com

Assistant Prof of CS at the University of Waterloo, Faculty and Canada CIFAR AI Chair at the Vector Institute. Joining NYU Courant in September 2026. Co-EiC of TMLR. My group is The Salon. Privacy, robustness, machine learning.

http://www.gautamkamath.com

PhD student @ LIX | BX 21 | MVA 23

Associate Professor @brownvc.bsky.social

Affiliate Faculty @uwcse.bsky.social

Chair @wigraph.bsky.social

ai/ml researcher 🔍

dreamer, clown, only human - civciv baronu (chick baron) 🐣

- overthinking engineer.

< 𝙰𝙳𝙷𝙳 >

🐦: x.com/itsneuron

🌐: dar.vin/itsneuron

https://iszihan.github.io/

CS PhD student at University of Toronto with Prof. David Levin | graphics

PhD student @Columbia | Opinions are my own

https://www.cs.columbia.edu/~honglinchen/

PhD candidate in Computer Graphics and Computational Imaging at Graphics & Imaging Lab, University of Zaragoza.

Graphics programmer wannabe | Student @ Istanbul Technical University

CG&AI PhD Student @KIT

Now at Reality Lab, Meta

ex-EagleDynamics, ex-WellDone Games

mishok43.com