Looking good on Intel too, improving measured latency by ~6.8 ns, or 19-20 cycles

28.10.2025 01:15 — 👍 0 🔁 0 💬 0 📌 0@chlamchowder.bsky.social

Gamer who analyzes tech too much Working on the next generation Z-series chip at IBM, opinions here are my own

Looking good on Intel too, improving measured latency by ~6.8 ns, or 19-20 cycles

28.10.2025 01:15 — 👍 0 🔁 0 💬 0 📌 0

So far I used a simple a=a[a] pattern to test GPU memory latency, but that indexed addressing penalty always bothered me. I finally got around to making the compiler spit out a chain of dependent loads and nothing else.

Good start on AMD. I save ~4 or ~12 ns for scalar and vector accesses

Yeah. I was more surprised ARL didn't dynamically adjust the SNCU/D2D clock, even with XMP disabled. They clearly had that capability in MTL and the uncores are very similar.

Still ARL idle power is just fine for a desktop platform, so maybe they didn't bother.

Intel's desktop Arrow Lake always keeps the SNCU (die to die interface and some other parts of the uncore) at 2.6 GHz. On Meteor Lake, it goes up to 2.4 GHz but varies a lot probably to save power.

24.09.2025 04:57 — 👍 1 🔁 0 💬 1 📌 0

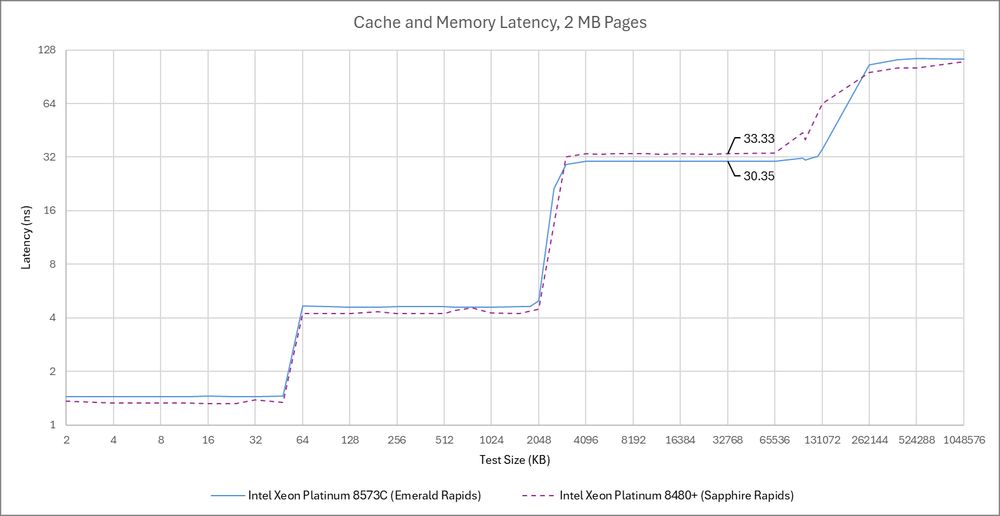

Intel's newer Emerald Rapids improves L3 latency compared to Sapphire Rapids, at least when one core is able to allocate a similar amount of L3 capacity. It's still high at ~105 core cycles, but better than ~125 cycles from the last generation.

11.07.2025 22:36 — 👍 2 🔁 2 💬 1 📌 0Yep, that was a fun one. Loved the quirky Northbridge with its separate paths for CPU and GPU memory accesses. I should boot that system back up sometime and check the NB power states too.

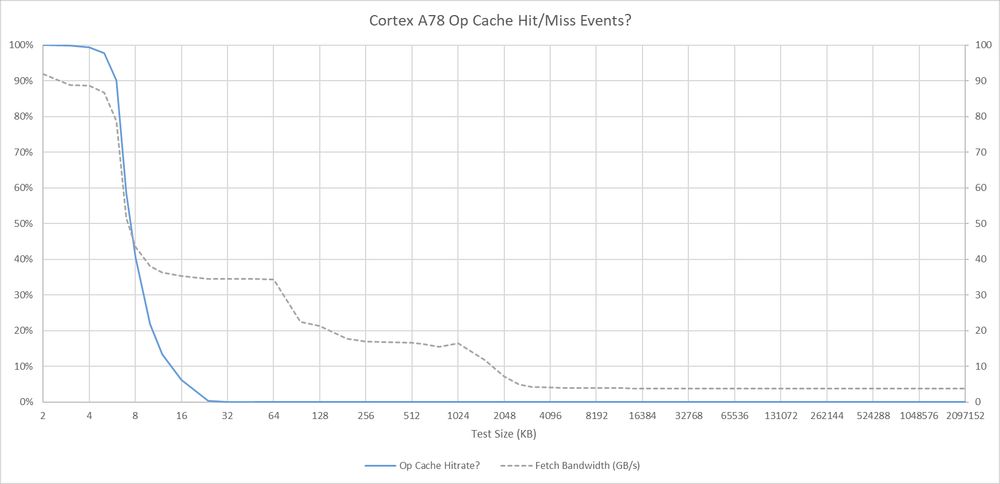

20.06.2025 17:17 — 👍 1 🔁 0 💬 0 📌 0That's for Cortex A78. I have not tried on anything else. With officially documented events, 0x26 (iTLB access) can infer op cache misses at the 32B fetch window granularity. A78's op cache is virtually addressed, and doesn't require TLB lookup on a hit.

28.05.2025 18:03 — 👍 1 🔁 0 💬 0 📌 0

Arm never documented PMU events for their op cache. From brute force searching, there's a pair of possibly related events. Event 0x177 may be op cache hits, and 0x178 may be op cache misses. Both events appear to count instructions (not micro-ops or cachelines)

28.05.2025 18:00 — 👍 1 🔁 2 💬 1 📌 0

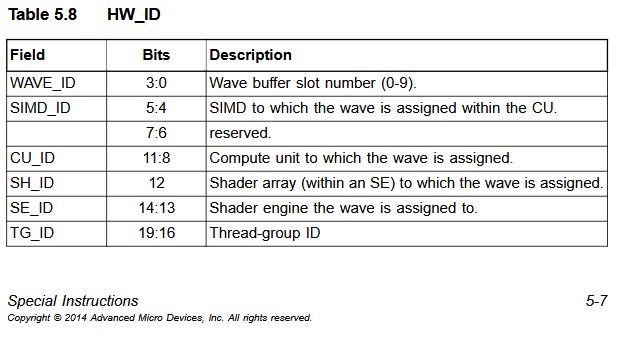

AMD had a separate Shader Array subdivision within Shader Engines even in the original GCN architecture. Interesting that it never mattered until RDNA added a L1 cache to the Shader Arrays and had multiple SAs per SE

13.04.2025 01:33 — 👍 2 🔁 1 💬 0 📌 0

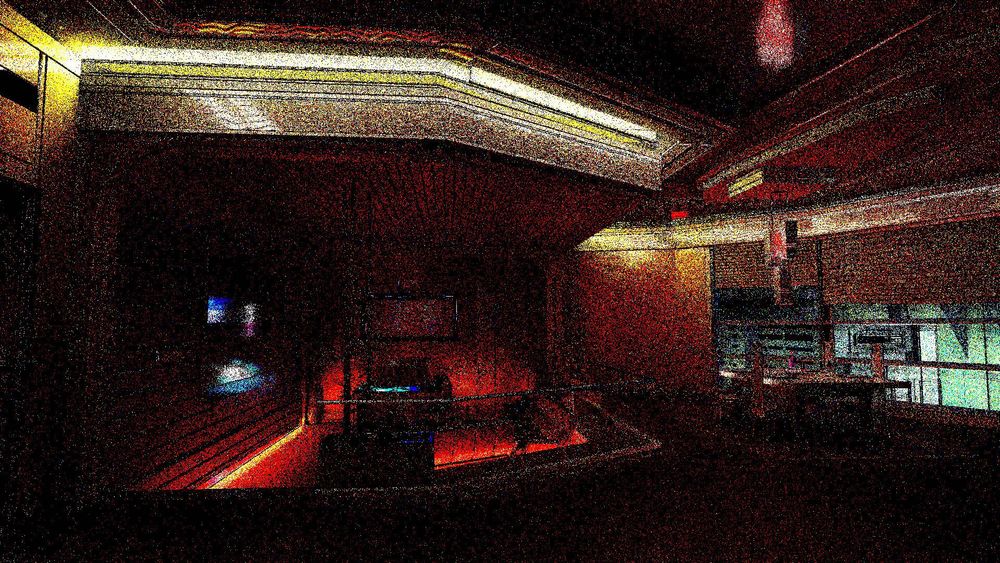

Output from DispatchRays calls in CP2077's path tracing mode, with exposure adjusted manually and no denoising done

There's just not enough computing power available to get a good sample count while maintaining real-time performance. It's like setting ISO 102400 on a DSLR

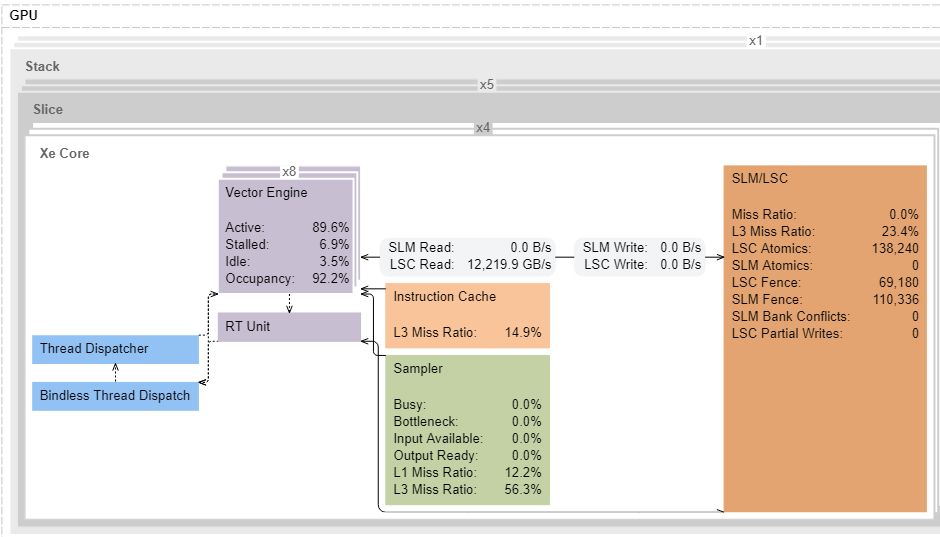

Messing around with microbenchmarking Arc B580

12.2 TB/s of L1 bandwidth, or ~214 bytes per Xe Core cycle

Theoretical is probably 256B/cycle. But close enough for now

Cinebench 2024 on the Ryzen 7 4800H (Zen 2)

Stock: 600 pts

Op cache disabled: 525 pts

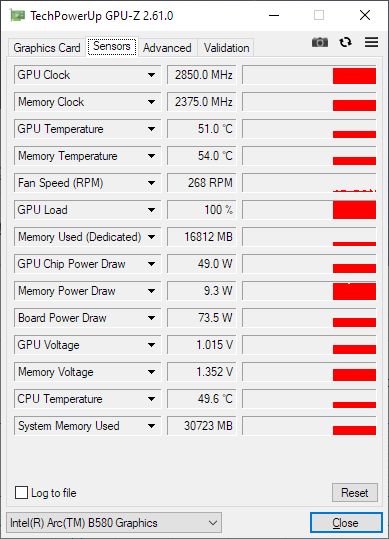

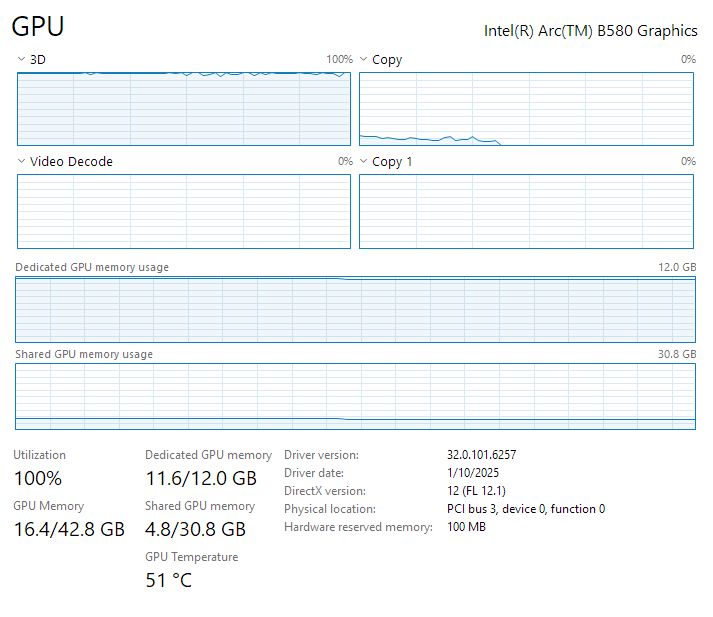

In games with higher VRAM usage (DCS), GPU-Z incorrectly shows 16.8 GB of dedicated memory used (out of 12 GB total lol). Task manager correctly shows shared memory allocated

19.01.2025 20:26 — 👍 1 🔁 0 💬 0 📌 0

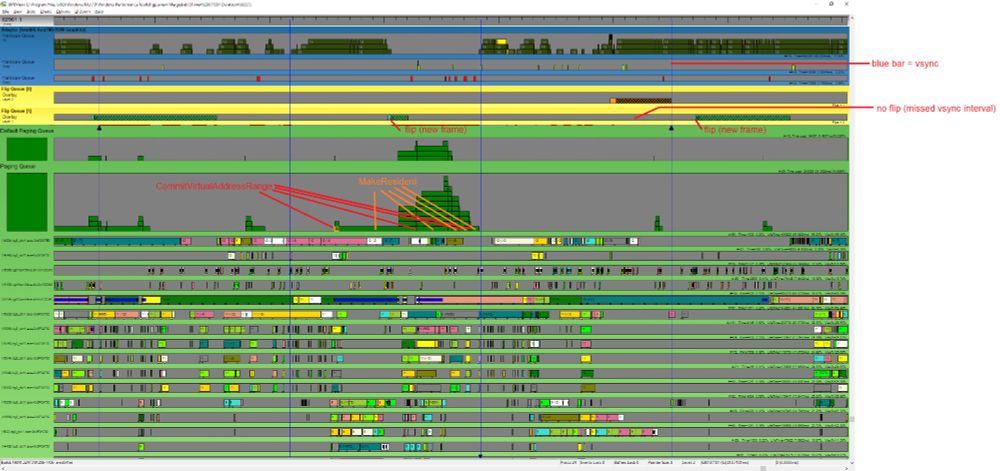

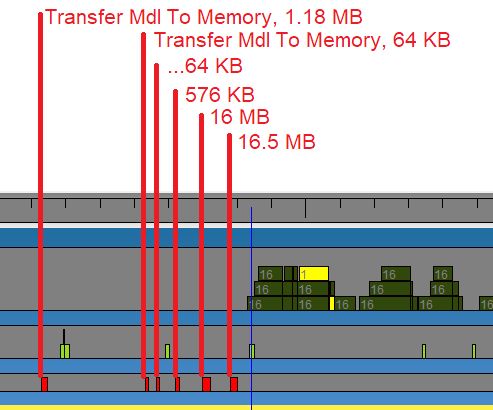

Frame drop in Baldur's Gate 3, as captured by GPUView. The game has to move ~35 MB to the GPU, which means reserving space to hold the data, getting the data contiguous in physical memory, and of course doing the transfer. Really fast, takes just 12.6 ms, but is enough to miss a 60Hz vsync interval

09.01.2025 09:52 — 👍 1 🔁 0 💬 0 📌 0

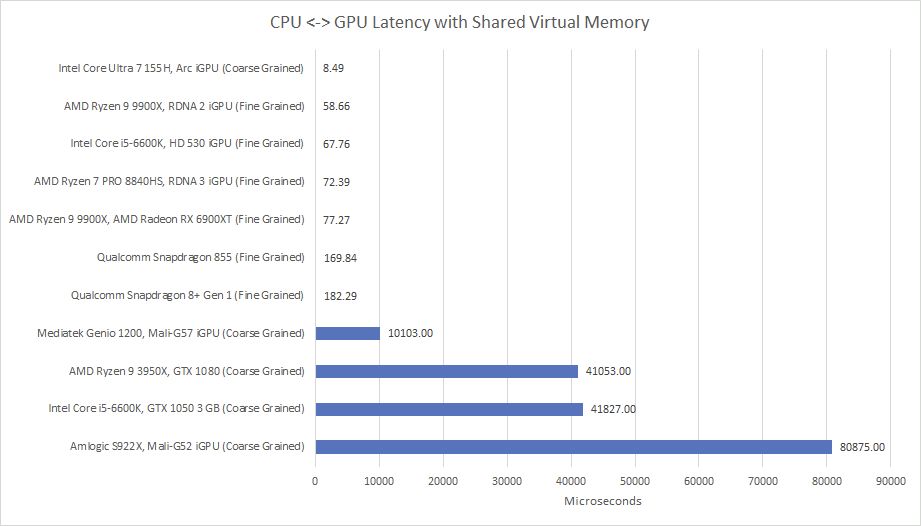

Zero-copy should be more natural on an iGPU versus a discrete one, but not all iGPUs can do zero-copy.

Here I'm testing OpenCL Shared Virtual Memory with a 256 MB buffer and only modifying one 32-bit value in it. Anything in the millisecond range implies the driver had to copy the entire buffer.

www.youtube.com/watch?v=SwlK...

Discussing turning off Zen 4's op cache and its performance consequences, in video format :)

Ooh, thanks for the link! I looked on Intel's PDFs and sites, and didn't find anything on LNL/ARL. I will check this out

09.12.2024 00:48 — 👍 1 🔁 0 💬 0 📌 0Skymont perfmon events (specifically unit masks for evt 0xD1, retired mem loads by source) appear to act differently on Arrow Lake and Lunar Lake. Expected, given their different cache setups.

But I wish Intel would hurry up and get LNL/ARL documentation written up :/

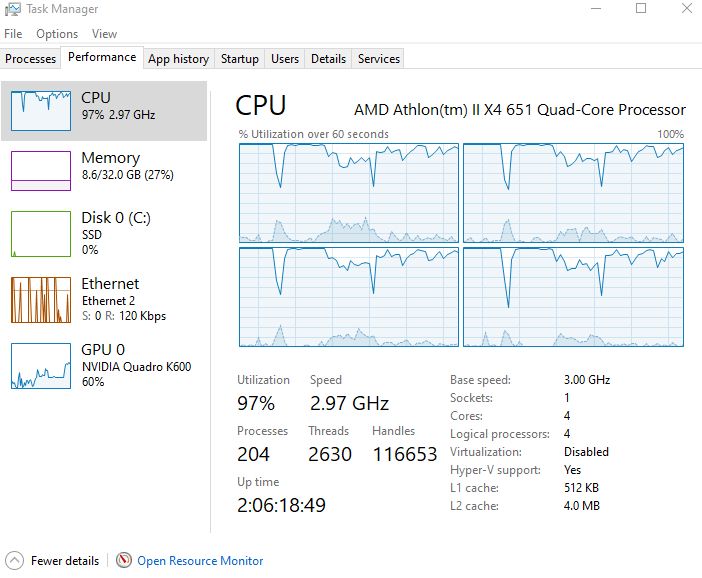

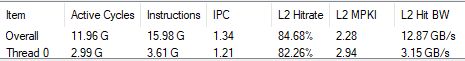

Youtube AV1 decoding can be heavy on old CPUs, even at 1080P. IPC though is surprisingly good for AMD's very outdated 12h architecture.

08.12.2024 02:32 — 👍 4 🔁 0 💬 0 📌 0Remember that after Bulldozer, it took AMD five years to change direction. And even with Zen, that's only a foundation. They built on that for several more years before really threatening Intel

07.12.2024 02:24 — 👍 2 🔁 0 💬 0 📌 0Exactly. It's like getting one move in a turn based game and having a board call whether you won or lost based on the results of that single turn.

I also think an engineer should lead the company because they can appreciate the technical challenges/sniff out BS, and Pat Gelsinger is an engineer.

x.com/lamchester/s... I figured some of it out for Zen 2. Events 7 and 0x47 correspond to traffic on the two DDR4 channels. Never got around to doing that for newer Zen generations though

02.12.2024 05:56 — 👍 1 🔁 0 💬 0 📌 0:D That was a fun article to write, though I spent way too much free time poking around and gathering data

02.12.2024 02:58 — 👍 2 🔁 0 💬 1 📌 0

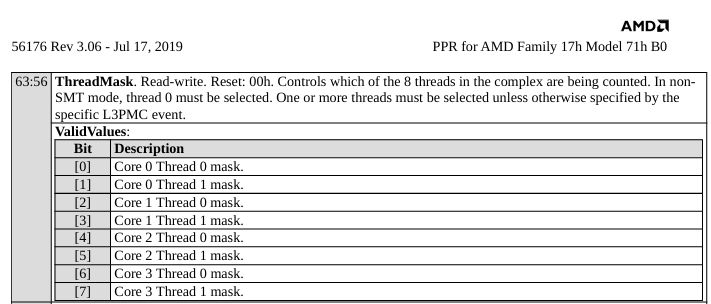

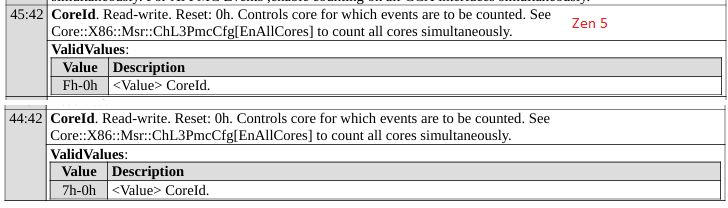

(bottom is from the Zen 4 PPR)

Zen 2 used eight bits, letting you select any combination of logical SMT threads within a CCX for L3 performance monitoring. More flexible, but would take too many bits with Zen 3's larger CCXes.

In Zen 5's Processor Programming Reference, the L3 performance event select registers now take core IDs from 0-15. That would let the register handle 16 core CCX-es.

Of course this doesn't mean a 16 core CCX will show up, but it's interesting that AMD's laying the groundwork for it.

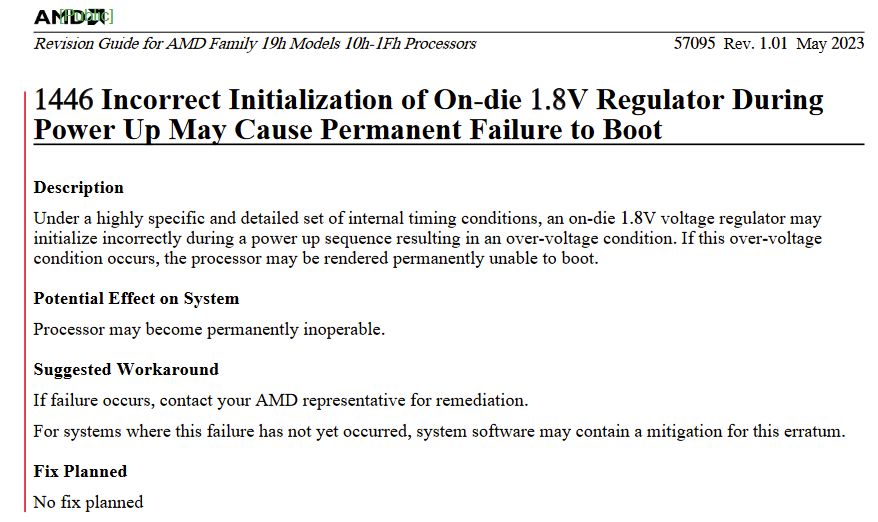

Zen 4 has a funny errata where an on-die 1.8V voltage regulator might be configured incorrectly, which then kills the CPU by feeding something too much voltage.

26.11.2024 01:36 — 👍 2 🔁 0 💬 0 📌 0Zen 4 had a 144 entry loop buffer. However, it's disabled in the latest BIOS for my ASRock B650 PG Lightning. Maybe AMD found a bug that no one else ran into (or realized they were hitting).

Likely doesn't affect performance, as the op cache has more than enough bandwidth to feed downstream stages.

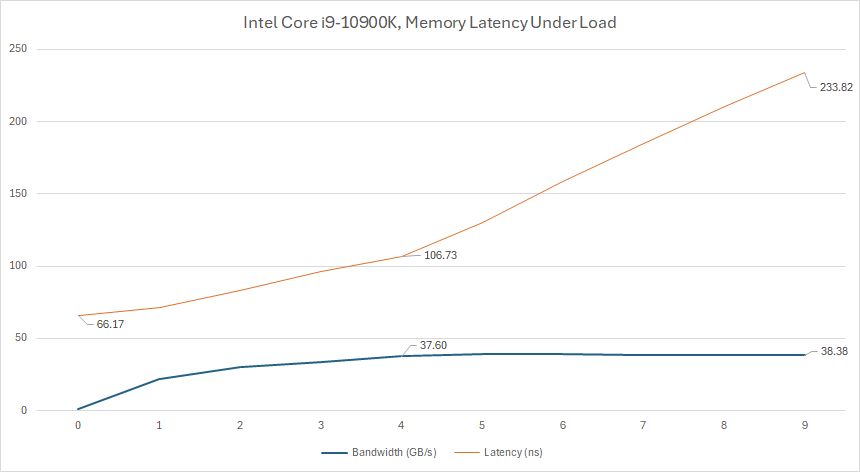

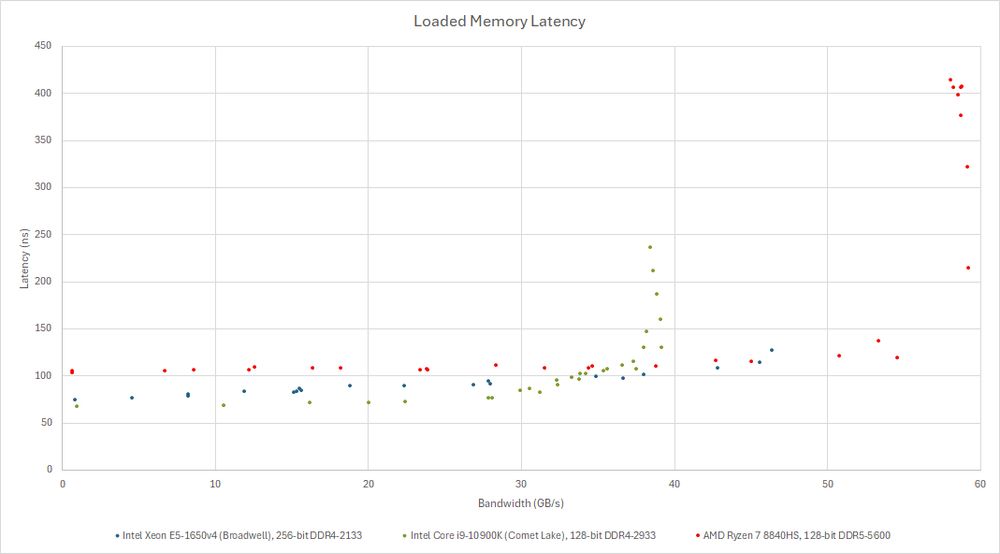

A closer look at the 10900K - you can get pretty close to bandwidth limits with 4 threads reading from big arrays, while latency stays under control.

But if more cores demand maximum bandwidth, latency goes way up

Memory latency under varying bandwidth loads on three different CPUs. The old Broadwell HEDT chip does well at providing consistent performance and minimizing noisy neighbor effects

22.11.2024 00:54 — 👍 1 🔁 0 💬 1 📌 0