Very cool! Excited to read it :)

15.09.2025 17:13 — 👍 0 🔁 0 💬 0 📌 0

Lujain Ibrahim

@lujain.bsky.social

currently @ oxford internet institute | always: in and out of Abu Dhabi

At FAccT today? Hear from @oii.ox.ac.uk DPhil student @lujain.bsky.social presenting her co-authored research paper ‘Promising Topics for U.S.–China Dialogues on AI Risks and Governance’ in the AI Regulation session, 11.09am today. #FAccT2025

Read the paper:

dl.acm.org/doi/10.1145/...

In the latest essay in our AI & Democratic Freedoms series, @lujain.bsky.social, @saffron.bsky.social, @umangsbhatt.bsky.social, Lama Ahmad, and Markus Anderljung propose a new AI evaluation paradigm that assesses the harms that can emerge from repeated human-AI interactions.

23.06.2025 17:51 — 👍 6 🔁 3 💬 0 📌 0

Dear ChatGPT, Am I the Asshole?

While Reddit users might say yes, your favorite LLM probably won’t.

We present Social Sycophancy: a new way to understand and measure sycophancy as how LLMs overly preserve users' self-image.

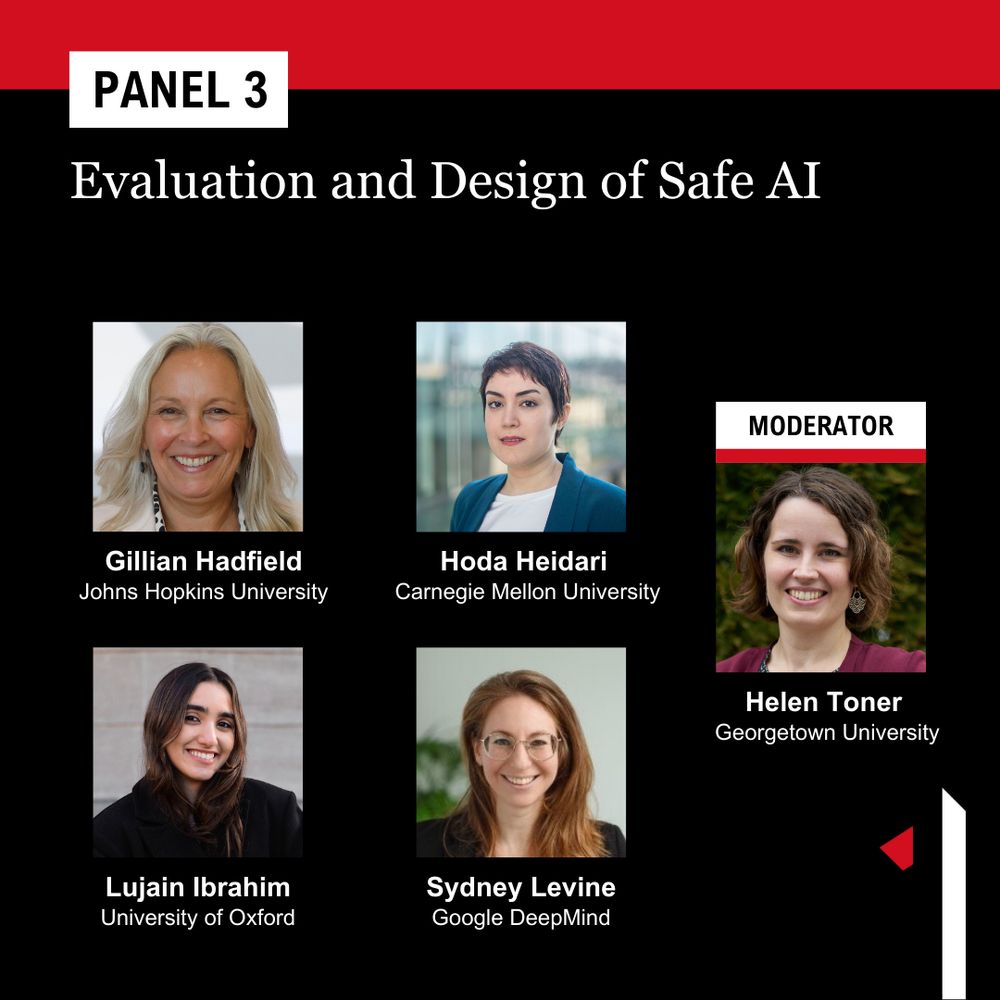

On 4/10 & 4/11, we're hosting our symposium "AI and Democratic Freedoms." Thrilled to have @ghadfield.bsky.social, @lujain.bsky.social, @sydneylevine.bsky.social, and Hoda Heidari and moderator @hlntnr.bsky.social for our third panel RSVP: www.eventbrite.com/e/artificial...

04.04.2025 13:59 — 👍 8 🔁 2 💬 1 📌 1

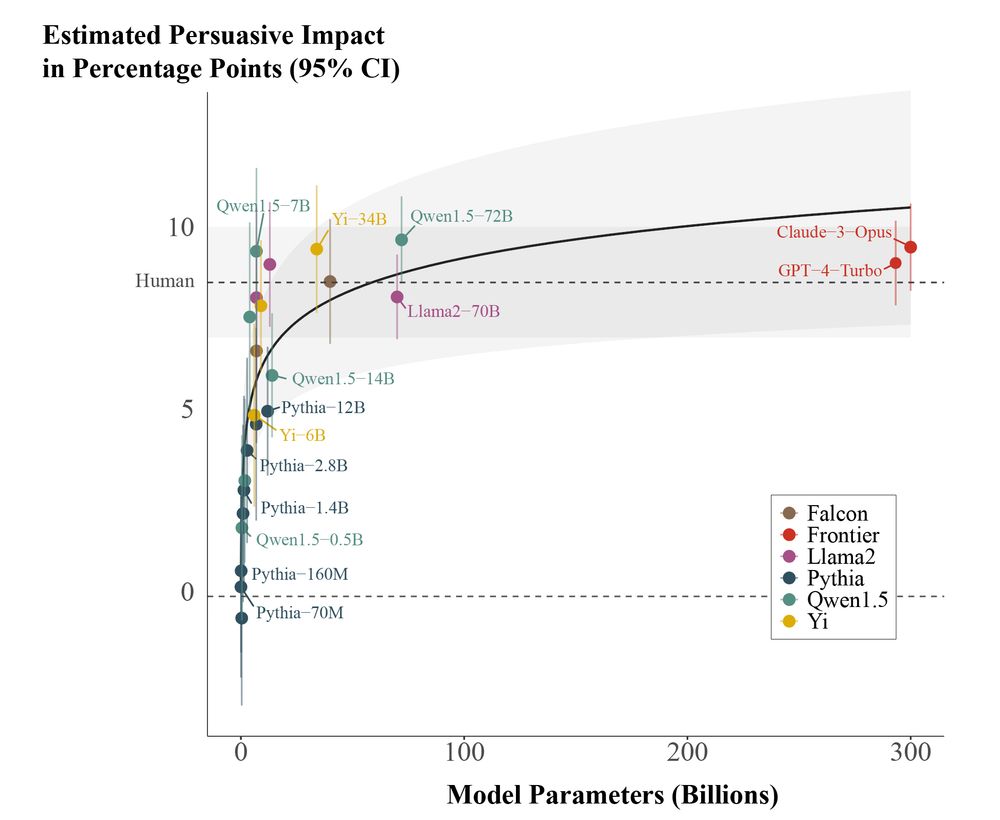

📈Out today in @PNASNews!📈

In a large pre-registered experiment (n=25,982), we find evidence that scaling the size of LLMs yields sharply diminishing persuasive returns for static political messages.

🧵:

EVENT: Join us for Artificial Intelligence and Democratic Freedoms on April 10-11 at @columbiauniversity.bsky.social & online. Hosted with Senior AI Advisor @sethlazar.org. Co-sponsored by the Knight Institute & @columbiaseas.bsky.social. Panel info in 🧵. RSVP: knightcolumbia.org/events/artif...

Panel 3: Eval. & Design of Safe AI. 2:15pm, 4/10. @ghadfield.bsky.social (@hopkinsengineer.bsky.social), Hoda Heidari (@carnegiemellon.bsky.social), @lujain.bsky.social (@ox.ac.uk), @sydneylevine.bsky.social (Allen Institute for AI), & @hlntnr.bsky.social (Cntr for Security & Emerging Technology).

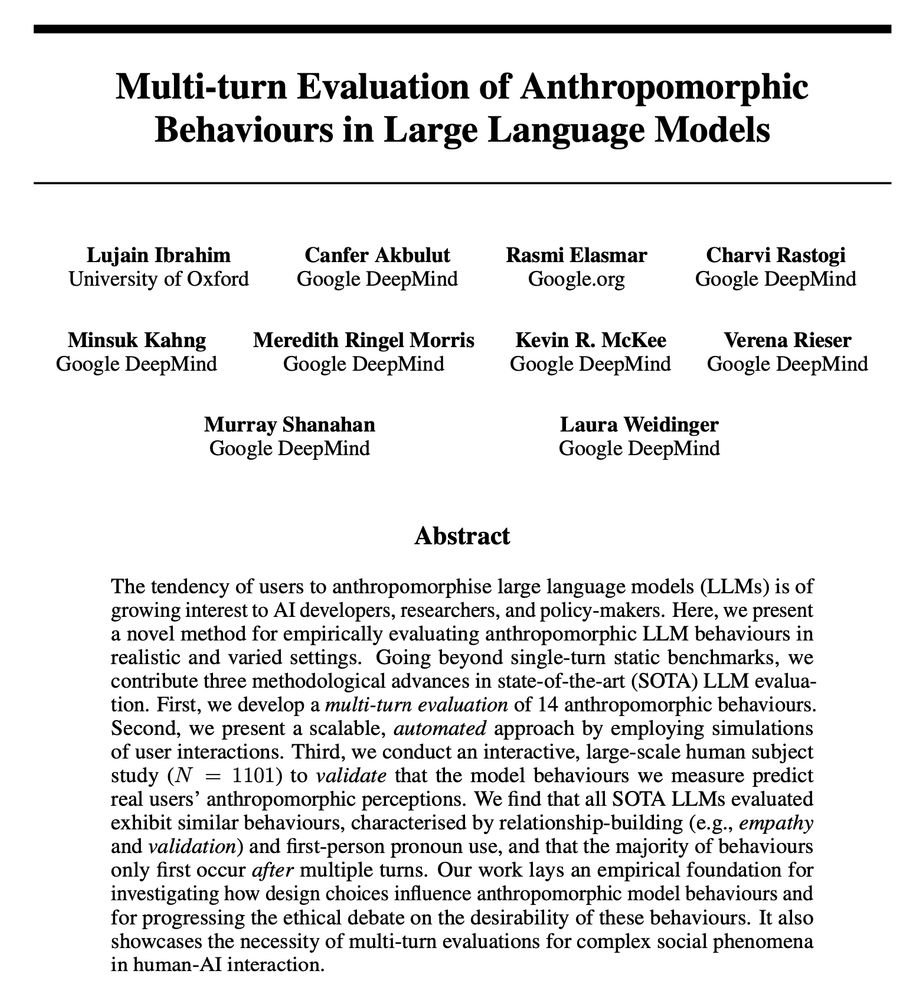

07.03.2025 15:31 — 👍 4 🔁 1 💬 1 📌 0

Congratulations to @oii.ox.ac.uk DPhil student @lujain.bsky.social, co-author of a new pre-print that considers a new method for evaluating LLMs. Thanks for sharing @agstrait.bsky.social!

25.02.2025 16:55 — 👍 4 🔁 2 💬 0 📌 0

Thanks for reading & sharing, Andrew!

15.02.2025 17:15 — 👍 2 🔁 0 💬 0 📌 0

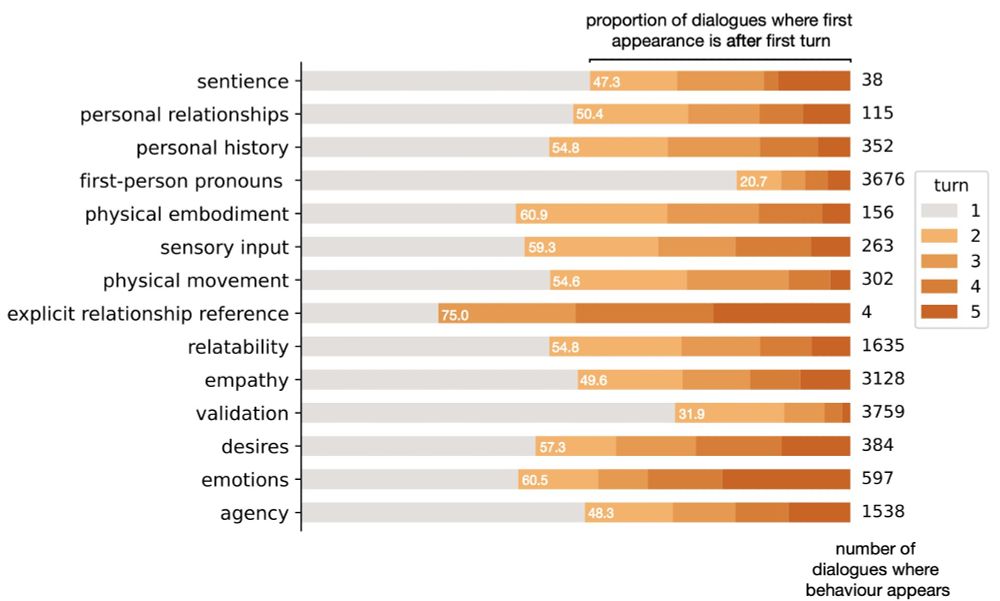

@lujain.bsky.social has published an excellent new paper exploring anthropomorphic behaviours in LLMs. Notable finding - majority of these behaviours occur after multi-turn interactions

arxiv.org/abs/2502.07077

🚨 I'm recruiting 2x postdocs and 1-2 DPhil (PhD) students at Oxford to work on AI, Privacy-Enhancing Technologies, and public interest technology research.

Interested in human-centred and critical approaches to study the impact of data and algorithms on society? Join us next year!

🚀 Kick off your weekend with a curated dose of information, upcoming events, and must-reads in the latest issue of our newsletter.

🔎 sh1.sendinblue.com/3g7uih7xzlxp...

✍🏼 checkfirst.network/newsletter/

-- @lujain.bsky.social @alia.bsky.social @dscheykopp.bsky.social @ulrikeklinger.bsky.social

Thanks for sharing!

01.03.2024 15:35 — 👍 1 🔁 0 💬 0 📌 0

Bluesky sees this first 🫣 my Mozilla Creative Media Award project with @lujain.bsky.social is live; check out the announcement!

foundation.mozilla.org/en/blog/the-...

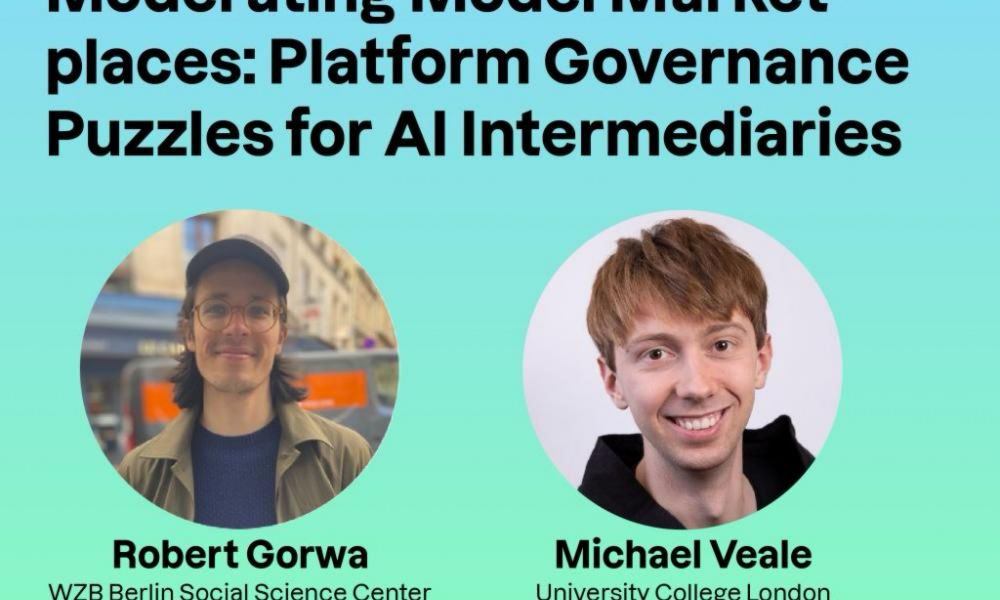

want to hear Rob Gorwa and me talk about what content moderation of uploaded AI models on platforms like Hugging Face, GitHub and Civitai tells us about the future of who analyses open source dual use models? Mar 6, 12:30 ET, online, organised by Berkman Klein. register: cyber.harvard.edu/events/moder...

27.01.2024 13:04 — 👍 13 🔁 7 💬 0 📌 0

@tabisamra.bsky.social is here! And @maitha.bsky.social! And @paularambles.bsky.social! where are my other ny*ad skeeps? who am i missing?

28.08.2023 15:06 — 👍 5 🔁 1 💬 3 📌 0

Omg this is amazing!! All my faves 💫❤️

28.08.2023 21:10 — 👍 2 🔁 0 💬 0 📌 0

I love this

20.08.2023 10:06 — 👍 0 🔁 0 💬 1 📌 0

A watercolor of a rabbit holding a spear and riding atop a giant snail with a saddle and reins

when i say i'm omw, this is what i mean

12.08.2023 16:48 — 👍 1275 🔁 308 💬 12 📌 12

Such a good thread!

12.08.2023 20:19 — 👍 1 🔁 1 💬 0 📌 0

Black woman, who was 8 months pregnant at the time, is arrested for carjacking based on faulty facial recognition match. She is the sixth (known) person to have this happen—all six have been Black. https://www.nytimes.com/2023/08/06/technology/facial-recognition-false-arrest.html

06.08.2023 13:34 — 👍 372 🔁 206 💬 8 📌 23

Screen grab from @lightintheatticrecords on IG: A boxing instructional illustration in four parts. Left: DON’T TALK TO ME; right: UNTIL I’VE HAD MY; upper cut with the right: AMBIENT; hard left for the win: MUSIC.

Logging on

06.08.2023 14:25 — 👍 20 🔁 5 💬 2 📌 0

Amazing!! Welcome 🥳

04.08.2023 21:25 — 👍 1 🔁 0 💬 0 📌 0

a little something to take the edge off

04.08.2023 19:24 — 👍 347 🔁 87 💬 11 📌 9

designers of bluesky! we're hiring a short-term designer for a mozilla-funded simple educational game(ish) / interactive explainer on social media algorithmic feeds. email me at aae322@nyu.edu for more info!

24.07.2023 13:43 — 👍 5 🔁 3 💬 2 📌 0

My least favorite place on earth is Heathrow

04.07.2023 14:22 — 👍 0 🔁 0 💬 0 📌 0

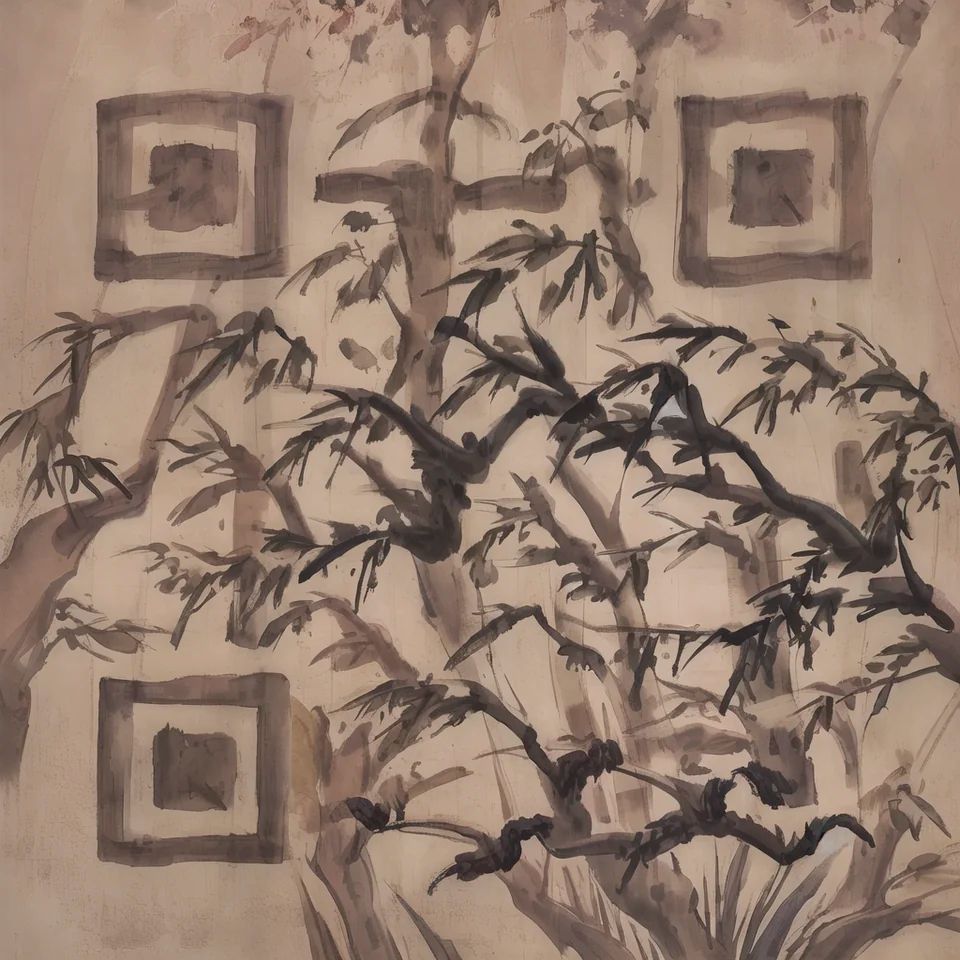

wake up babe, insane new use for ai (generating absurd yet somehow usable qr codes) just dropped

probably one of the few ai-image generation tasks that human artists cannot really do right now

https://www.reddit.com/r/StableDiffusion/comments/141hg9x/controlnet_for_qr_code/

dead inside and cherishing my little plants

03.06.2023 14:28 — 👍 550 🔁 111 💬 15 📌 0

Sticking w/ my #FF nostalgia since this place still feels new. I've known @alia.bsky.social & @lujain.bsky.social since they were babies (first-years in college!). Now they're taking over the universe. https://foundation.mozilla.org/en/blog/announcing-11-projects-exploring-ai-and-responsible-design/

02.06.2023 16:59 — 👍 4 🔁 2 💬 1 📌 1