Towards Interactive Evaluations for Interaction Harms in Human-AI Systems

In the latest essay in our AI & Democratic Freedoms series, @lujain.bsky.social, @saffron.bsky.social, @umangsbhatt.bsky.social, Lama Ahmad, and Markus Anderljung propose a new AI evaluation paradigm that assesses the harms that can emerge from repeated human-AI interactions.

23.06.2025 17:51 — 👍 6 🔁 3 💬 0 📌 0

Here’s How To Share AI’s Future Wealth | NOEMA

Advanced AI threatens to increase inequality and concentrate power, but we can proactively distribute AI’s benefits to foster a just and inclusive economy before it’s too late.

“As AI advances, many are worried about the tech's potential to concentrate unprecedented wealth among a few, while eroding the economic value of human work for everyone else.”

Here’s how @saffron.bsky.social & Sam Manning believe we can stop that from happening.

#ai #wealthinequality #economy

22.04.2025 17:55 — 👍 12 🔁 6 💬 0 📌 0

I particularly want to call out that we're releasing the first empirical, large-scale taxonomy of AI values, to encourage additional research into AI (and possibly also human) values, and the development of more grounded evals of models' values: huggingface.co/datasets/Ant...

21.04.2025 15:53 — 👍 2 🔁 0 💬 1 📌 0

We developed one way to figure this out, finding thousands of values that Claude expresses in practice, from some very common values (like helpfulness!) to a long tail of highly context-dependent values that respond and engage with a diverse range of users.

21.04.2025 15:53 — 👍 1 🔁 0 💬 1 📌 0

There is a lot of work on training models to follow particular behaviors, and trying to align them with “human values”, but how do we know if this is working in practice, and what values are actually being expressed?

21.04.2025 15:53 — 👍 1 🔁 0 💬 1 📌 0

Really proud and excited to release work on empirically measuring AI values “in the wild” — understanding, analyzing and taxonomizing what values guide model outputs in real interactions with real users.

www.anthropic.com/research/val...

21.04.2025 15:53 — 👍 11 🔁 4 💬 2 📌 0

This piece I wrote is now in the Stanford CS ethics curriculum! honestly, the exact kind of audience i wanted, so 🥹.

(Also I do actually still think this piece is ~my compass for what technology is and what it means to build it!)

www.kernelmag.io/1/what-is-te...

20.03.2025 01:58 — 👍 5 🔁 0 💬 0 📌 0

something divine shook me by the shoulders

when you see so clearly that everything on the outside is really on the inside

Reviving my Substack to try to describe something that is very difficult to describe (a near death experience 20 years after it happened) saffron.substack.com/p/something-...

26.01.2025 17:11 — 👍 7 🔁 0 💬 0 📌 0

oh my god i love this one:

Q: Which philosopher/logician identified an inconsistency in the US Constitution? Einstein tried (and failed) to persuade him not to point this out during his US citizenship test.

A: Gödel

17.01.2025 20:23 — 👍 2 🔁 0 💬 0 📌 0

one of my favourite chinese words is 时光 which Google-translates as ‘time’ but really it’s ‘time-light’. as in, ‘the wonderful time-light we spent together’

14.01.2025 16:19 — 👍 8 🔁 0 💬 0 📌 0

The future is here, and it should be co-created.

These global dialogues convene thousands of people from around the world to set a vision - and concrete goals - for what world they want.

Our first dialogue centers around the fears, dreams, hopes, and attitudes people have about AI.

20.12.2024 01:36 — 👍 34 🔁 14 💬 3 📌 0

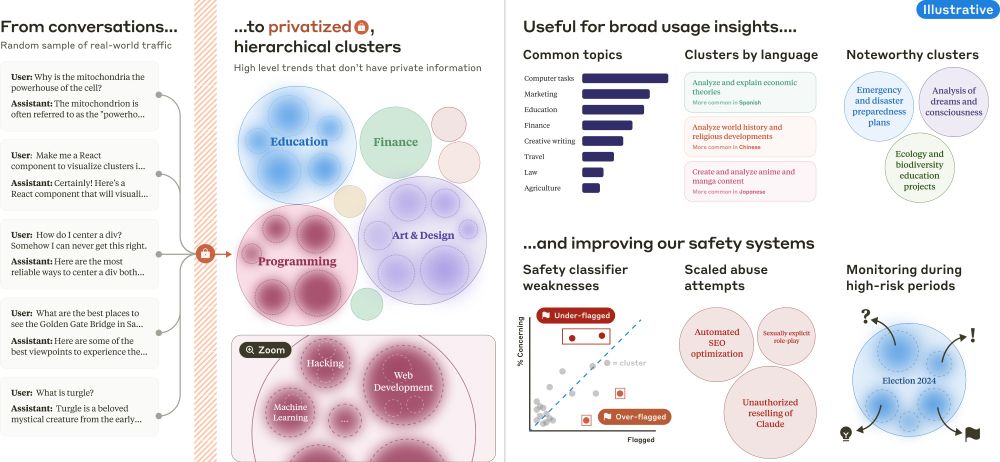

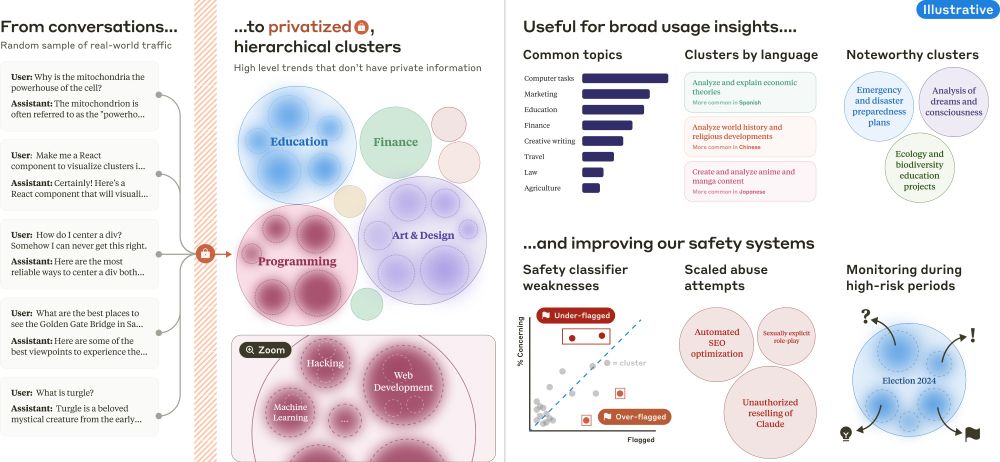

How are AI Assistants being used in the real world?

Our new research shows how to answer this question in a privacy-preserving way, automatically identifying trends in Claude usage across the world.

1/

12.12.2024 21:37 — 👍 24 🔁 7 💬 1 📌 0

read the paper — there are some fun anecdotes! www.anthropic.com/research/clio

12.12.2024 21:15 — 👍 13 🔁 2 💬 0 📌 0

Clio: Privacy-preserving insights into real-world AI use

A blog post describing Anthropic’s new system, Clio, for analyzing how people use AI while maintaining their privacy

Anthropic blog post on Clio here: www.anthropic.com/research/clio

Proud of the societal impacts team and particularly of @miles.land and @alextamkin.bsky.social who have been incredibly dedicated to getting Clio right.

12.12.2024 21:35 — 👍 16 🔁 2 💬 1 📌 0

SO excited to share Clio with the world (and on Bsky before Twitter)!

Clio generates insights on AI usage patterns, in a way that keeps user data private. It has unlocked, and will continue to unlock, an immense amount of understanding about the present and future of AI use.

(Blog linked below)

12.12.2024 21:35 — 👍 55 🔁 4 💬 2 📌 0

This prompting does make me wonder if the distinction between humans doing ‘socially misaligned’ things (which we’ve generally termed ‘misuse’) and AIs being misaligned makes much sense.

06.12.2024 15:40 — 👍 3 🔁 0 💬 1 📌 0

humans are still good for something guys (holding a camera steadily and panning around)

05.12.2024 05:06 — 👍 1 🔁 0 💬 0 📌 0

great news: using AI to generate a silly little short film for my friend's birthday. it's so hard to get anything you actually want to happen, happen, consistency is terrible, and what it gives you is so diff from regular filmmaking

this overall makes me happy although i am currently suffering

05.12.2024 05:05 — 👍 4 🔁 0 💬 1 📌 0

good to know. i just copied the description on display

01.12.2024 01:38 — 👍 1 🔁 0 💬 0 📌 0

computer people were also just furniture people

30.11.2024 22:40 — 👍 4 🔁 0 💬 1 📌 0

i really like this museum and highly recommend! it’s like my 4th time here

30.11.2024 22:36 — 👍 1 🔁 0 💬 1 📌 0

IBM merch was incredible

30.11.2024 22:36 — 👍 0 🔁 0 💬 1 📌 0

jacquard loom cards — one of the first uses of stored data in commercial production, for determining loom weaving patterns

30.11.2024 22:17 — 👍 4 🔁 0 💬 2 📌 0

computer history museum highlights: punched-card distribution maps of plant and flower species on the british isles

30.11.2024 22:15 — 👍 23 🔁 2 💬 2 📌 0

unbeatable aesthetics

30.11.2024 22:08 — 👍 1 🔁 0 💬 0 📌 0

a USB cable from back in the day

30.11.2024 22:08 — 👍 0 🔁 0 💬 1 📌 0

Incoming Assistant Professor @ University of Cambridge.

Responsible AI. Human-AI Collaboration. Interactive Evaluation.

umangsbhatt.github.io

currently @ oxford internet institute | always: in and out of Abu Dhabi

Researcher at @ox.ac.uk (@summerfieldlab.bsky.social) & @ucberkeleyofficial.bsky.social, working on AI alignment & computational cognitive science. Author of The Alignment Problem, Algorithms to Live By (w. @cocoscilab.bsky.social), & The Most Human Human.

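This is Shikoku Kisen, the ferry operator connecting [Takamatsu - Naoshima (Miyanoura) - Uno], [Naoshima (Honmura) - Uno], and [Naoshima (Miyanoura) - Teshima (Ieura) - Inujima]. We post service updates as they come up. For timetables, please visit our website.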

https://www.shikokukisen.com/instant/

Entrepreneur

Costplusdrugs.com

Essays, case studies, and fiction from the frontier of protocol science. Subscribe to get a weekly glimpse of a new worldview. | protocolized.summerofprotocols.com

Notebooks & computers, mountains. taking my time and getting plenty of sleep as an act of rebellion. always down to eat pizza. Hand jam size: #1

📍Mammoth+🚐

https://daiyi.co

In print only ⚫️ Issue Two is here ⚫️ Subscriptions are available at the link below 🌝 Everyone is a critic ⚫️

sfreview.org

Oregonian on the @anthropic.com Policy team.

Views are my own.

Institute Professor, MIT Economics. Co-Director of @mitshapingwork.bsky.social. Author of Why Nations Fail, The Narrow Corridor, and Power & Progress.

We're an AI safety and research company that builds reliable, interpretable, and steerable AI systems. Talk to our AI assistant Claude at Claude.ai.

Historian of the intelligentsia. Odi profanum vulgus et arceo. Deputy Editor @NoemaMag.com. Check out "Children of a Modest Star": https://www.sup.org/books/politics/children-modest-star. Posts my own

CEO & co-founder @far.ai non-profit | PhD from Berkeley | Alignment & robustness

Senior Researcher at Oxford University.

Author — The Precipice: Existential Risk and the Future of Humanity.

tobyord.com

Journalist, editor, author of Ace.

For Future Reference.

Sign up for our newsletters: https://wrd.cm/newsletters

Find our WIRED journalists here: https://bsky.app/starter-pack/couts.bsky.social/3l6vez3xaus27

AI, national security, China. Part of the founding team at @csetgeorgetown.bsky.social (opinions my own). Author of Rising Tide on substack: helentoner.substack.com

I make sure that OpenAI et al. aren't the only people who are able to study large scale AI systems.