Looks like Tesla’s models sometimes confuse train tracks with road lanes.

04.01.2025 21:23 — 👍 1 🔁 0 💬 0 📌 0

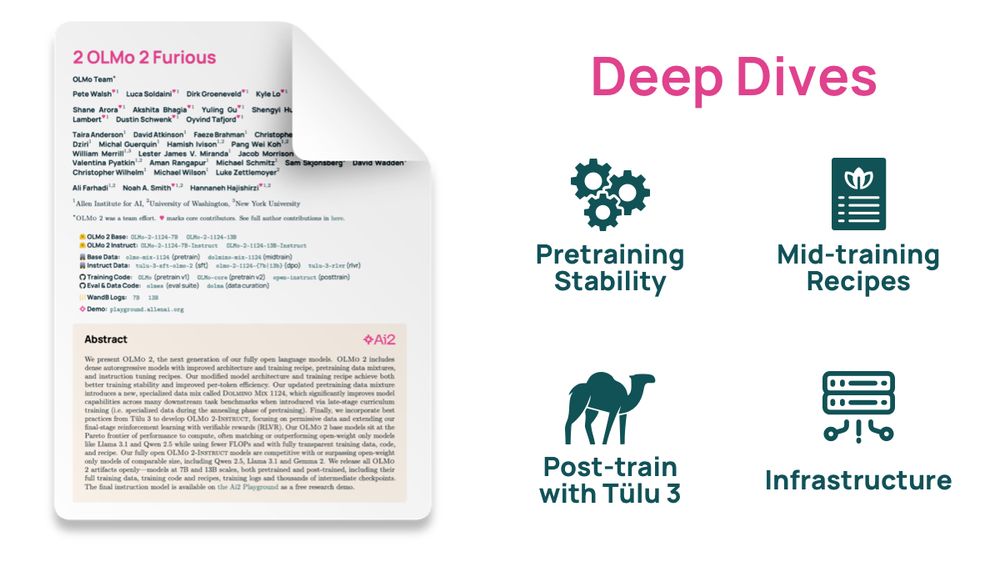

OLMo 2 tech report is out!

We get in the weeds with this one, with 50+ pages on 4 crucial components of the LLM development pipeline:

The LCMs are cool, though it is early days. They give us a knob (concept representations) to understand and change the model's outputs. There is no reason an LCM couldn't also have a CoT (or be able to reason via search/planning)... we just have to ask it :)

03.01.2025 23:32 — 👍 1 🔁 0 💬 0 📌 0

The reasoning models are cool though; they explicitly enforce dependence on the model's CoT, so there it should be a reliable explanation (? not sure tho). Played with the 'thinking' Gemini model: it sometimes generates pages of CoT, and now we have to figure out what (and which part) is relevant.

03.01.2025 23:32 — 👍 1 🔁 0 💬 1 📌 0

This reminds me of all the issues with heatmaps and probes. The model really has no incentive to rely on its CoT unless it is explicitly pushed to do so via fine-tuning or some kind of penalty.

03.01.2025 23:32 — 👍 0 🔁 0 💬 1 📌 0

You always ask the right questions :) I don't think the chain-of-thought of current models (except the reasoning ones) gives reliable insight into the models. The issue is that the CoT is an output (and an input) of the model, and you can change it in all sorts of ways without affecting the model's final output.

03.01.2025 23:32 — 👍 1 🔁 0 💬 1 📌 0

It is too early to tell :) I like the papers on your list, but I think only a few of them were instant ‘classics’.

Having said that, I like the Large Concept Models paper from Meta.

Is the final output actually “causally” dependent on the long CoT it generates? How important are these traces to the search/planning that is clearly happening here? So many questions, but so few answers.

21.12.2024 19:12 — 👍 1 🔁 0 💬 0 📌 0

Great to see the clarifying comments. o3 is impressive nonetheless.

Played around with o1 and the ‘thinking’ Gemini model. The CoT output (for Gemini) can be confusing and convoluted, but it got 3 of 5 problems right. Stopped on the remaining 2.

These models are an impressive interpretability test bed.

New paper. We show that the representations of LLMs with up to 3B params(!) can be engineered to encode biophysical factors that are meaningful to experts.

We don't have to hope Adam magically finds models that learn useful features; we can optimize for models that encode interpretable features!

Pinging into the void.

18.11.2024 03:31 — 👍 4 🔁 0 💬 1 📌 0