This work was mainly a collaboration for GTC, so it all came together quickly in 2 months and we didn't want to change much :)

We are working on improving the system and will release a tech report in a few months.

29.04.2025 03:09 — 👍 3 🔁 0 💬 1 📌 0

*us being lazy not "using" :)

29.04.2025 01:33 — 👍 2 🔁 0 💬 1 📌 0

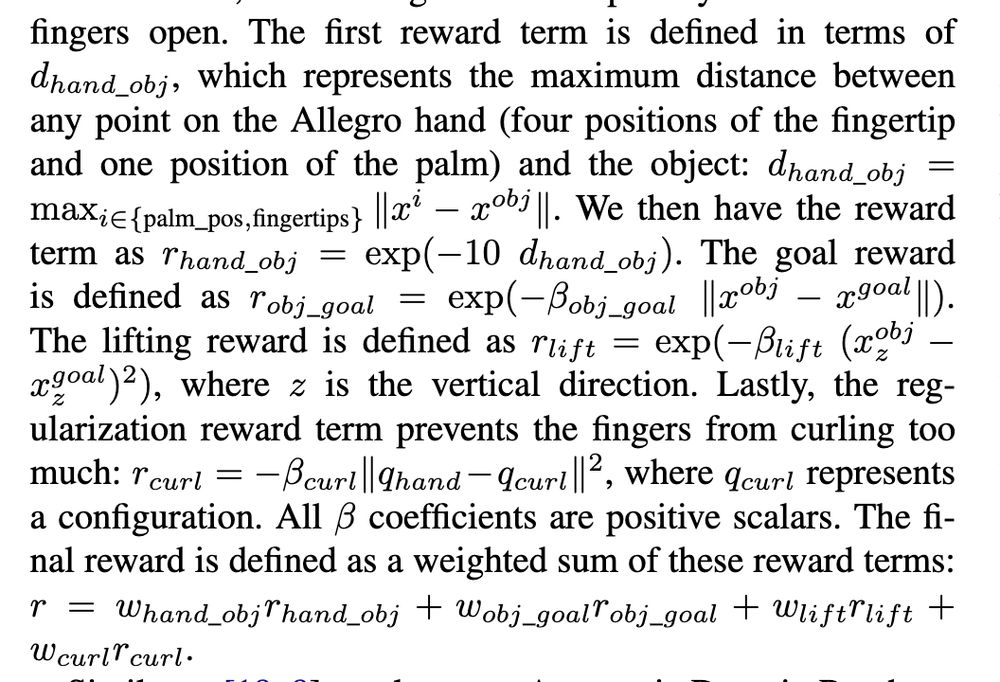

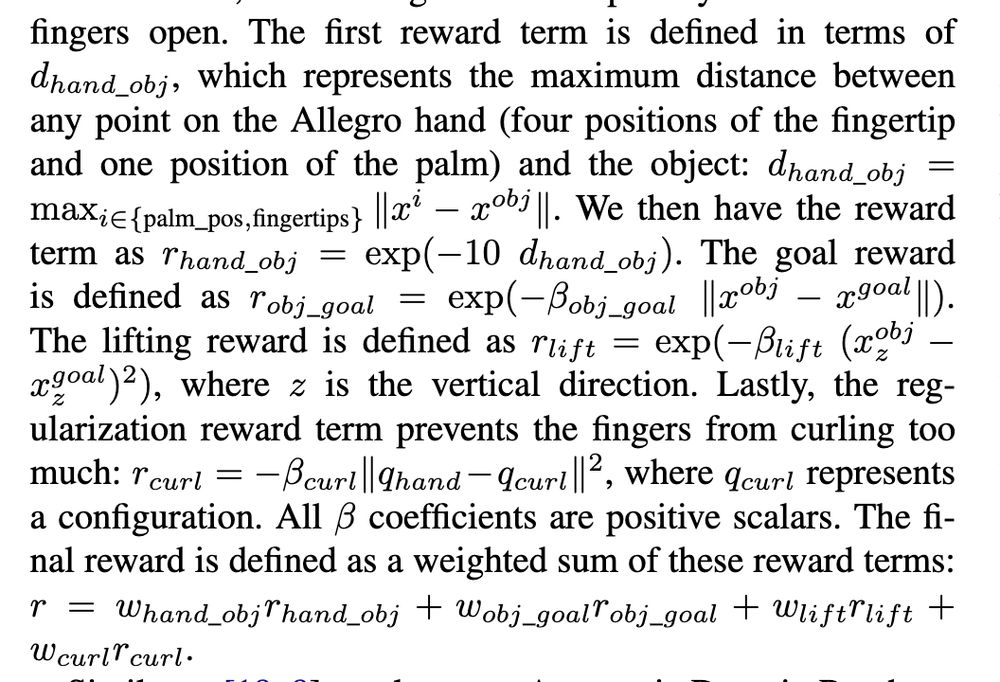

The robot is rewarded for lifting the object above a certain height to ensure that the grasp is stable. So it first lifts the object to that height and only then drops it. This was us being lazy and not changing the reward: vestigial stuff. The lift reward is shown here.

29.04.2025 01:33 — 👍 3 🔁 0 💬 1 📌 0
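A minimal sketch of how such a lift term might look, assuming a sparse bonus paid whenever the object is held above a fixed height over the table. The function names, the 0.1 m threshold and the bonus value are hypothetical; the post only says that a lift reward exists and was left in place.

```python
def lift_reward(object_height: float, table_height: float,
                lift_threshold: float = 0.1, bonus: float = 1.0) -> float:
    """Hypothetical lift term: pays `bonus` while the object is held above
    `lift_threshold` metres over the table, and nothing otherwise."""
    height_above_table = object_height - table_height
    return bonus if height_above_table > lift_threshold else 0.0


def total_reward(object_height: float, table_height: float,
                 task_reward: float) -> float:
    """Hypothetical total reward: the task term (e.g. for dropping the object)
    is added to the unchanged lift term, so lifting is still rewarded."""
    return task_reward + lift_reward(object_height, table_height)


# Object held 20 cm above the table: the lift bonus is added to the task term.
print(total_reward(object_height=0.9, table_height=0.7, task_reward=0.5))
```

Because the lift bonus is never switched off, a policy maximising this sum has an incentive to raise the object before doing anything else, which matches the vestigial behaviour described in the post.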

These are the stereo camera images that the networks use as input. They go directly into the network without any pre-processing, and out comes an action that is sent to the robot as a target.

29.04.2025 00:54 — 👍 3 🔁 0 💬 1 📌 0

YouTube video by Boston Dynamics

Arm you glad to see me, Atlas? | Boston Dynamics

Collaboration with Boston Dynamics on extending our DextrAH-RGB work on their robot.

The head movement is deliberate and learned through training.

Each arm has its own independent controller. Which controller is invoked at a given time is determined by that arm's proximity to the object.

www.youtube.com/watch?v=dFOb...

29.04.2025 00:52 — 👍 11 🔁 2 💬 1 📌 2
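A minimal sketch of proximity-based arm selection, assuming each arm is represented by a fixed reference point (here, hypothetically, its shoulder) and the arm nearest to the object in Euclidean distance gets control. The names, positions and controller stubs are illustrative, not the actual system.

```python
import math
from typing import Any, Callable, Dict, Tuple

Vec3 = Tuple[float, float, float]
Controller = Callable[[Any], Any]


def select_arm(object_pos: Vec3, arm_refs: Dict[str, Vec3]) -> str:
    """Pick the arm whose reference point is closest to the object."""
    return min(arm_refs, key=lambda name: math.dist(object_pos, arm_refs[name]))


def dispatch(object_pos: Vec3, arm_refs: Dict[str, Vec3],
             controllers: Dict[str, Controller], observation: Any) -> Tuple[str, Any]:
    """Run only the selected arm's independent controller on the observation."""
    arm = select_arm(object_pos, arm_refs)
    return arm, controllers[arm](observation)


# Hypothetical shoulder positions (metres) for a two-armed robot.
shoulders = {"left": (0.0, 0.2, 1.0), "right": (0.0, -0.2, 1.0)}
controllers = {"left": lambda obs: "left action", "right": lambda obs: "right action"}
print(dispatch((0.5, 0.3, 0.8), shoulders, controllers, observation=None))
```

When the object sits roughly midway between the arms, a pure nearest-arm rule can flip back and forth between frames; adding some hysteresis is one common remedy, though the post does not say how this case is handled.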

Nice Substack post with a funny legend about Brunelleschi and his challenge to make an egg stand on its end: whoever could make it stand would get to build the dome of Santa Maria del Fiore.

www.james-lucas.com/p/it-always-...

07.04.2025 02:32 — 👍 2 🔁 0 💬 0 📌 0

*cupola

07.04.2025 02:31 — 👍 0 🔁 0 💬 0 📌 0

I have been using Cursor and it's great. It's a VS Code fork, so I like it, as I have been using VS Code for years now.

13.02.2025 04:37 — 👍 1 🔁 0 💬 1 📌 0

Just remembered this session, "Discussion for Direct versus Features Session" (link.springer.com/chapter/10.1...), which I cited in my PhD thesis but can't download now as it is behind a paywall. I remember it being very interesting and fun to read.

12.02.2025 05:35 — 👍 0 🔁 0 💬 0 📌 0

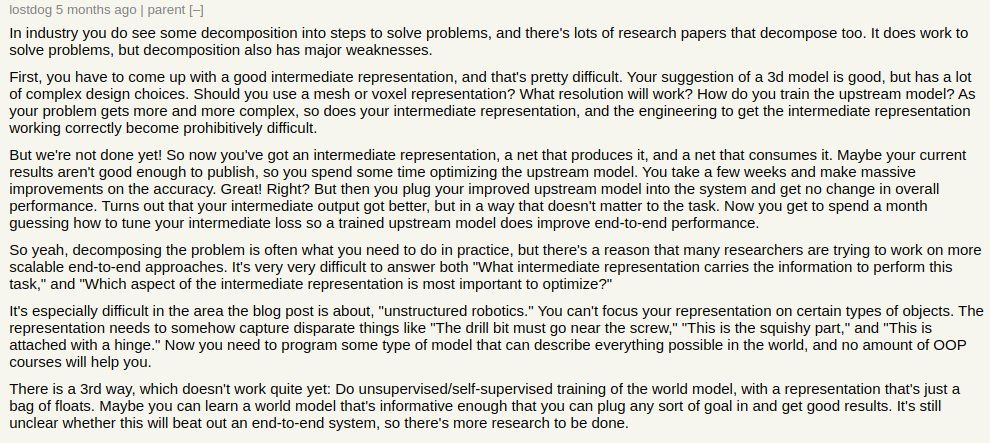

The message here is that you should try to stay as close to raw pixels as possible: it just works out much better in the long run.

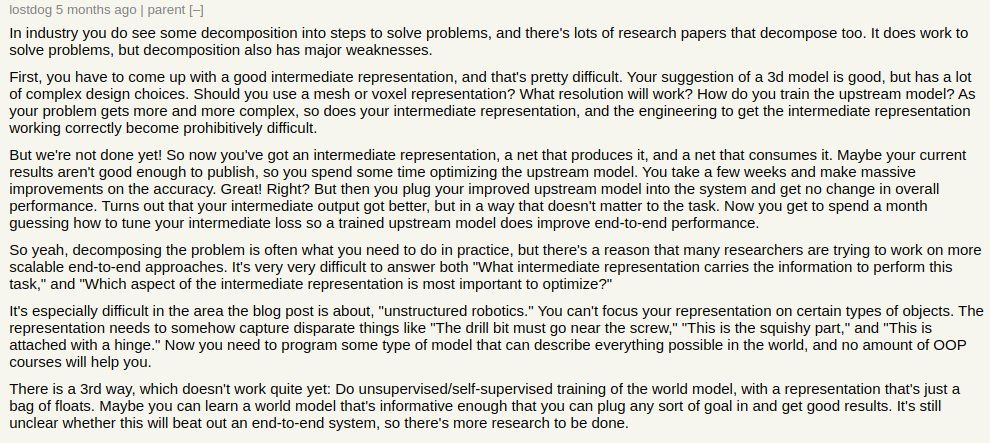

I love this Hacker News comment that I saw on Twitter a few years ago.

11.02.2025 03:57 — 👍 5 🔁 0 💬 1 📌 0

Michal Irani, Michael Black, Padmanabhan Anandan, Rick Szeliski and Harpreet Sawhney were all looking at recovering the camera pose transformation directly from image pixels. And the tracking in the ARIA glasses uses "direct" tracking too.

11.02.2025 03:55 — 👍 2 🔁 0 💬 1 📌 0

We have been using "direct" image-to-action mapping for a while now, and the word "direct" took me back to tracking in SLAM. Back in the 90s, many people were looking at recovering camera pose directly from the images rather than tracking features first and recovering a homography afterwards.

11.02.2025 03:52 — 👍 5 🔁 0 💬 1 📌 0
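To make the "direct" idea concrete, here is a toy sketch of direct alignment in the Lucas-Kanade style: instead of detecting and matching features, it estimates the motion by minimising the photometric error sum_x (I(x + t) - T(x))^2 between the shifted image and the template with Gauss-Newton. It is deliberately reduced to a 1-D signal and a pure translation t; real direct trackers estimate a homography or full 6-DoF pose over 2-D images, usually with image pyramids and robust costs. All names here are illustrative.

```python
import math
from typing import Sequence


def sample(signal: Sequence[float], x: float) -> float:
    """Linearly interpolate `signal` at real-valued position x (clamped to the ends)."""
    x = min(max(x, 0.0), len(signal) - 1.0)
    i = min(int(math.floor(x)), len(signal) - 2)
    a = x - i
    return (1.0 - a) * signal[i] + a * signal[i + 1]


def align_translation_1d(image: Sequence[float], template: Sequence[float],
                         t0: float = 0.0, iters: int = 50, tol: float = 1e-8) -> float:
    """Estimate the shift t minimising sum_x (I(x + t) - T(x))^2 by Gauss-Newton."""
    t = t0
    for _ in range(iters):
        jtr = 0.0  # J^T r, accumulated over all pixels
        jtj = 0.0  # J^T J (a scalar for one parameter)
        for x, tx in enumerate(template):
            r = sample(image, x + t) - tx                                # photometric residual
            g = sample(image, x + t + 0.5) - sample(image, x + t - 0.5)  # dI/dx, central difference
            jtr += g * r
            jtj += g * g
        if jtj == 0.0:
            break
        step = -jtr / jtj
        t += step
        if abs(step) < tol:
            break
    return t


# A smooth blob, and a "next frame" that is the same blob shifted by 3 pixels.
image = [math.exp(-((x - 30) / 8) ** 2) for x in range(80)]
template = [image[x + 3] for x in range(60)]
print(round(align_translation_1d(image, template), 3))
```

No keypoints are ever extracted: every pixel contributes to the update through its intensity and gradient, which is what distinguishes direct methods from the feature-then-homography pipeline.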

Great work by my colleagues Ritvik Singh, Karl Van Wyk, Arthur Allshire and Nathan Ratliff.


10.02.2025 05:04 — 👍 0 🔁 0 💬 0 📌 0

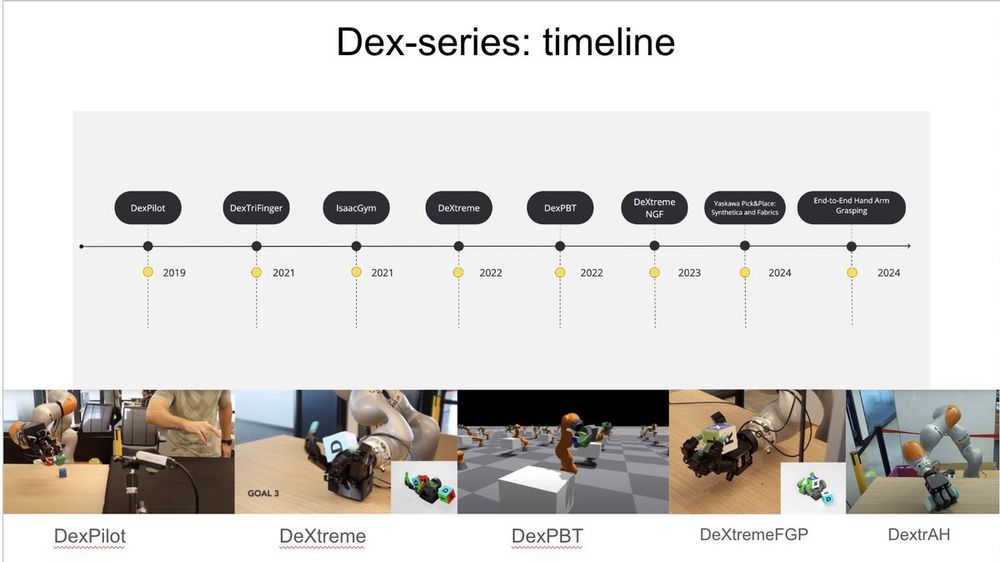

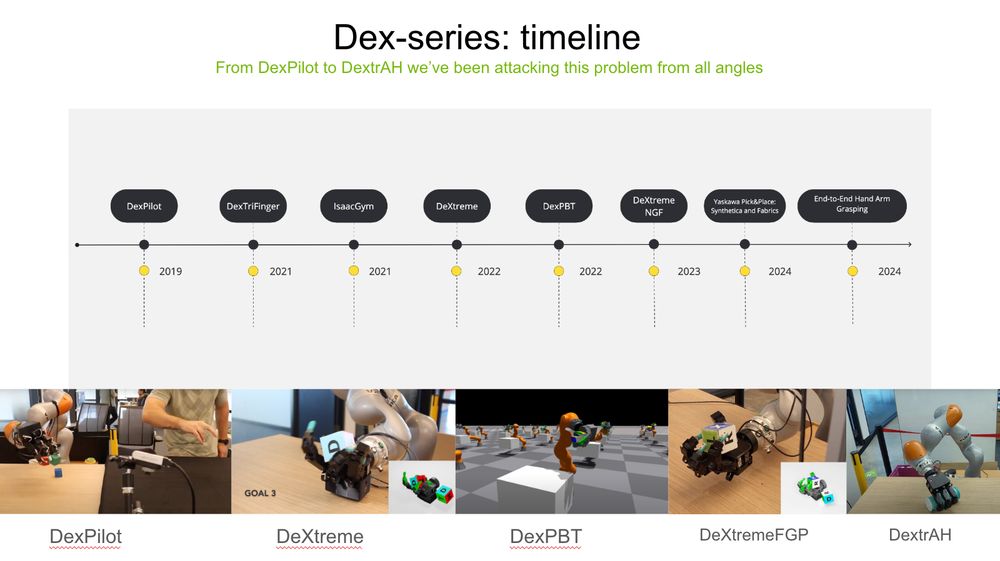

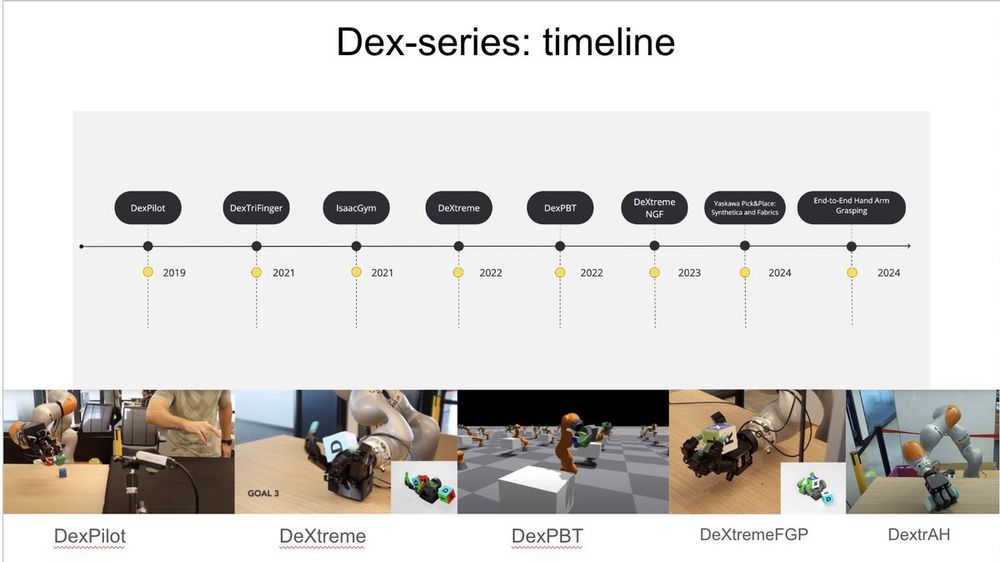

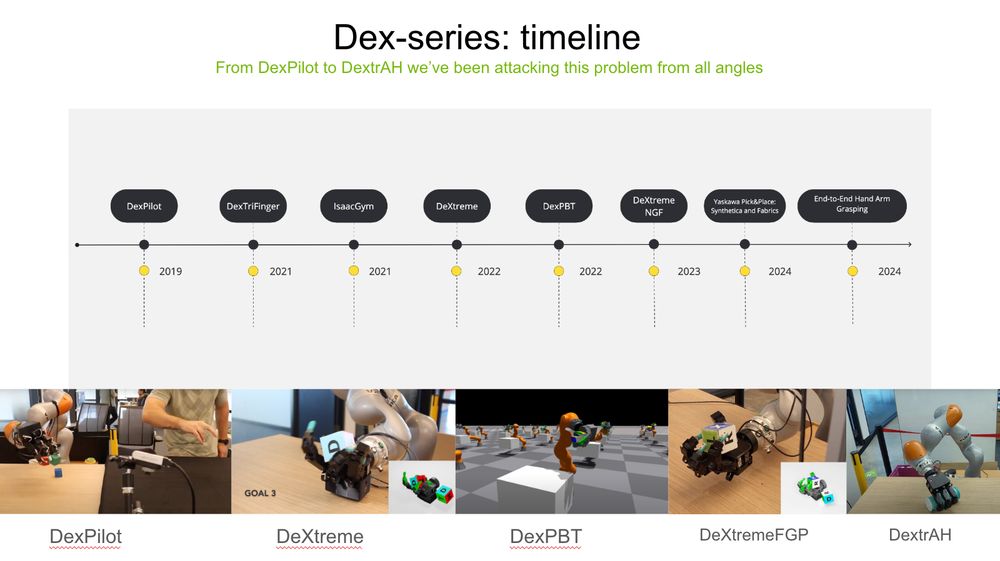

This is the next one in our dex-series of works, where we started off with pose estimation as the object representation and gradually moved towards a more general, end-to-end, image-based direct pixels-to-action mapping.

10.02.2025 05:03 — 👍 0 🔁 0 💬 1 📌 0

When doing distillation, we also regress the location of the object. This serves as a diagnostic tool for seeing what the network is predicting, and the prediction can also be used by any state machine on top.

10.02.2025 05:03 — 👍 0 🔁 0 💬 1 📌 0
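A minimal sketch of a distillation loss with an auxiliary object-position head, assuming mean-squared error for both terms and a hypothetical weight `aux_weight`; the post does not state which losses or weights are used.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target):
        raise ValueError("length mismatch")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def student_loss(student_action: Sequence[float], teacher_action: Sequence[float],
                 predicted_obj_pos: Sequence[float], true_obj_pos: Sequence[float],
                 aux_weight: float = 0.1) -> float:
    """Imitation term (match the teacher's action) plus an auxiliary term that
    regresses the object's position from the same image features."""
    action_term = mse(student_action, teacher_action)
    position_term = mse(predicted_obj_pos, true_obj_pos)
    return action_term + aux_weight * position_term


# Perfect position estimate, action off by 2 in one dimension.
print(student_loss([0.0], [2.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]))
```

At deployment the predicted position can be logged or handed to a higher-level state machine, while only the action is sent to the robot.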

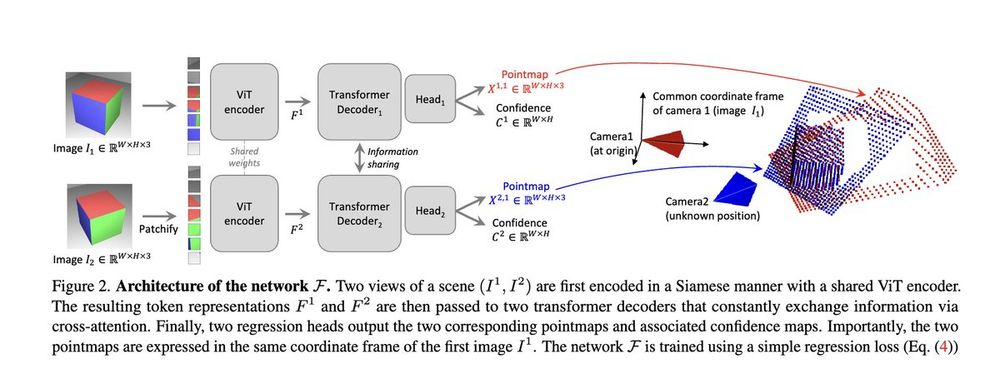

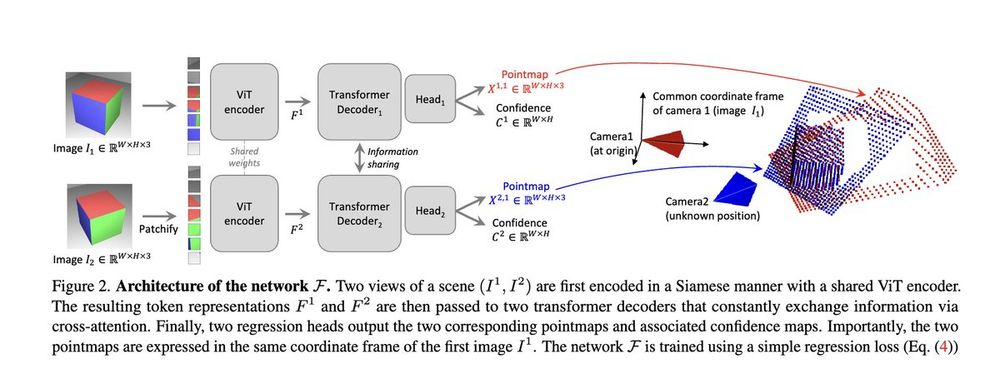

Our (stereo) vision network takes inspiration from the DUSt3R/MASt3R work: image embeddings are passed to a transformer with cross-attention, with no explicit epipolar geometry imposed.

10.02.2025 05:02 — 👍 0 🔁 0 💬 1 📌 0
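A minimal sketch of cross-view attention between stereo token sets, assuming single-head scaled dot-product attention with identity query/key/value projections. Real models, DUSt3R included, use learned projections, multiple heads and several stacked layers; the function names here are illustrative.

```python
import math
from typing import List, Tuple

Tokens = List[List[float]]  # one embedding vector per image patch


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Tokens, keys: Tokens, values: Tokens) -> Tokens:
    """Scaled dot-product attention: each query token takes a softmax-weighted
    average of the value tokens coming from the other view."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def stereo_fuse(left: Tokens, right: Tokens) -> Tuple[Tokens, Tokens]:
    """One symmetric exchange: each view attends to the other, and the
    attended context is added back to its own tokens (residual connection)."""
    left_ctx = cross_attention(left, right, right)
    right_ctx = cross_attention(right, left, left)
    left_out = [[a + b for a, b in zip(x, c)] for x, c in zip(left, left_ctx)]
    right_out = [[a + b for a, b in zip(x, c)] for x, c in zip(right, right_ctx)]
    return left_out, right_out


print(stereo_fuse([[1.0, 0.0]], [[0.0, 1.0]]))
```

Nothing here restricts a left-image patch to a particular epipolar line: correspondences emerge only from the attention scores, which is the sense in which no explicit epipolar geometry is imposed.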

This approach differs from the common two-stage pipeline, in which a grasp or pick location is first regressed and motion planning follows. Instead, it integrates both stages into a single process and trains the entire system end-to-end using RL.

10.02.2025 05:02 — 👍 0 🔁 0 💬 1 📌 0

The benefits of training with parallel tiled rendering in simulation are still underappreciated. With modern tools like Scene Synthesizer, and ControlNets that transform synthetic images into photorealistic ones, the value of simulation-based training will only continue to grow.

10.02.2025 05:01 — 👍 0 🔁 0 💬 1 📌 0

Depth sensors are noisy and haven’t seen major improvements in a while, whereas pure vision-based systems have caught up: most frontier models today use raw RGB pixels. We always wanted to move towards direct RGB-based control, and this work is our first attempt at doing so.

10.02.2025 05:01 — 👍 0 🔁 0 💬 1 📌 0

We train a teacher via RL on state vectors and a student via distillation on images to learn control for a 23-DoF multi-fingered hand-and-arm system. Training end-to-end on real data, as in BC-like systems, is already hard, but training end-to-end in simulation is even harder due to the sim-to-real gap.

10.02.2025 05:01 — 👍 0 🔁 0 💬 1 📌 0
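A toy, self-contained sketch of teacher-student distillation, assuming a DAgger-style loop in which the student drives the rollouts and every state it visits is labelled with the teacher's action. Everything here is a stand-in: a proportional controller on a 1-D object offset plays the RL-trained teacher, a fixed linear "renderer" plays the camera, and the student is a linear model fitted by least squares rather than a network trained on images. The post does not say whether on-policy relabelling like this is used.

```python
import random
from typing import List, Tuple


def teacher_policy(state: float, gain: float = 0.5) -> float:
    """Privileged teacher (stand-in for the RL policy): sees the true state."""
    return -gain * state


def render(state: float) -> float:
    """Stand-in camera: the student only ever sees this observation."""
    return 2.0 * state + 1.0


def fit_linear(data: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of action = w * obs + b."""
    n = len(data)
    mean_o = sum(o for o, _ in data) / n
    mean_a = sum(a for _, a in data) / n
    s_oo = sum((o - mean_o) ** 2 for o, _ in data)
    s_oa = sum((o - mean_o) * (a - mean_a) for o, a in data)
    w = s_oa / s_oo
    return w, mean_a - w * mean_o


def distill(rounds: int = 5, episodes: int = 20, horizon: int = 10,
            seed: int = 0) -> Tuple[float, float]:
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # untrained student
    dataset: List[Tuple[float, float]] = []
    for _ in range(rounds):
        for _ in range(episodes):
            state = rng.uniform(-1.0, 1.0)
            for _ in range(horizon):
                obs = render(state)
                dataset.append((obs, teacher_policy(state)))  # teacher provides the label
                state += w * obs + b  # but the student's own action drives the rollout
        w, b = fit_linear(dataset)  # aggregate all data so far and refit the student
    return w, b


print(distill())
```

Since the teacher's action is -0.5 * state and the observation is 2 * state + 1, the student should recover roughly w = -0.25 and b = 0.25, i.e. the teacher's policy re-expressed in observation space.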

Our new work makes a big leap, moving from depth-based end-to-end control to end-to-end control from raw RGB pixels. We have two versions, mono and stereo, both trained entirely in simulation (IsaacLab).

10.02.2025 04:59 — 👍 21 🔁 2 💬 1 📌 1

Can you share the other ones that you liked?

20.11.2024 19:25 — 👍 0 🔁 0 💬 0 📌 0

Why were you not convinced before? Is it because it uses images and did a lot more scaling than the previous work?

20.11.2024 18:42 — 👍 0 🔁 0 💬 1 📌 0

Is this the PoliFormer work?

20.11.2024 18:31 — 👍 1 🔁 0 💬 1 📌 0

My CoRL workshop talk on the dexterity work we've been doing for the past 5 years is here: docs.google.com/presentation...

We have only started to scratch the surface of what we can do with simulations, and I hope we can leverage ideas like self-play and AlphaZero for robotics going forward.

19.11.2024 17:47 — 👍 19 🔁 1 💬 0 📌 0

Yeah, I've almost stopped using google search :)

18.11.2024 16:46 — 👍 3 🔁 0 💬 1 📌 0
