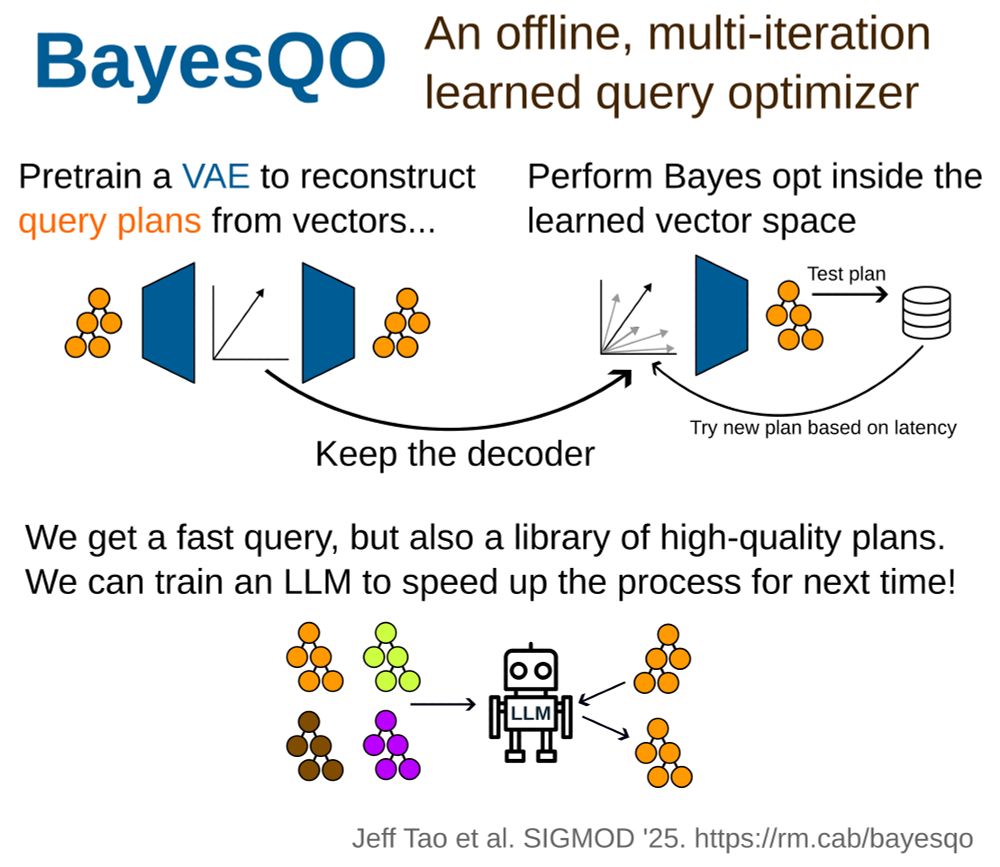

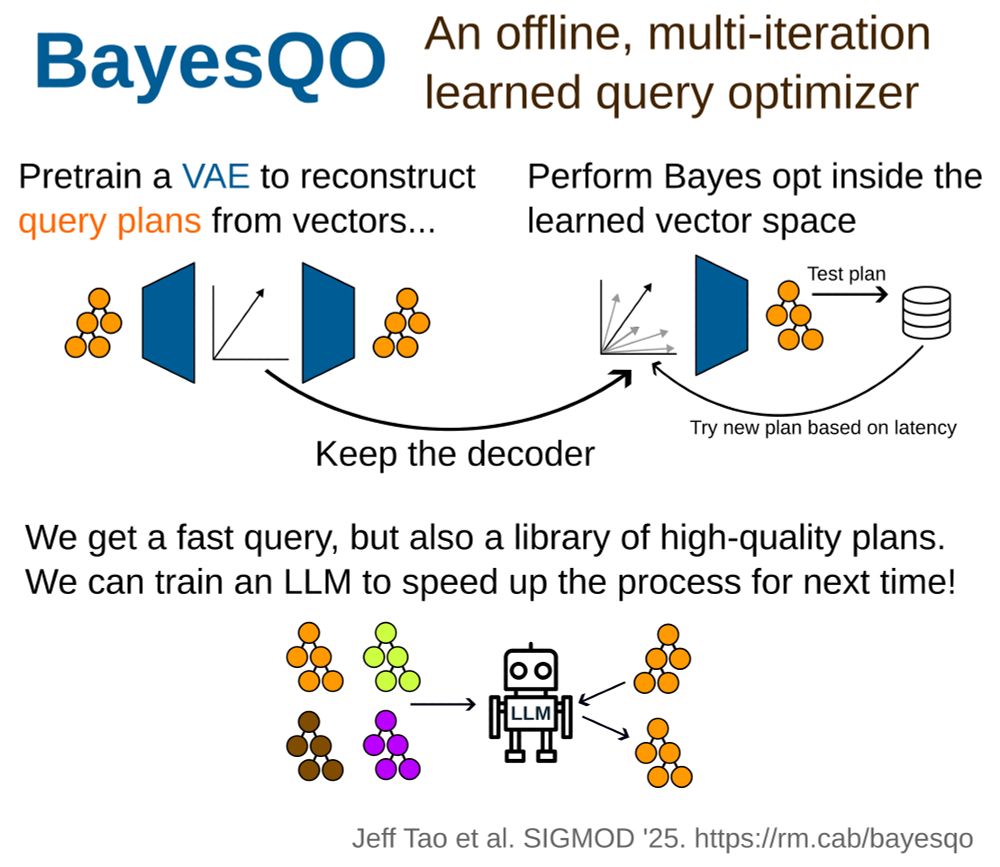

Infographic describing BayesQO, an offline, multi-iteration learned query optimizer. On the left, it shows a Variational Autoencoder (VAE) being pretrained to reconstruct query plans from vectors, using orange-colored plan diagrams. The decoder part of the VAE is retained. In the center and right, the image shows Bayesian optimization being performed in the learned vector space: new vectors are decoded into query plans, tested for latency, and refined iteratively. At the bottom, a library of optimized query plans is used to train a robot labeled “LLM,” which can then generate new plans directly. The caption reads: "We get a fast query, but also a library of high-quality plans. We can train an LLM to speed up the process for next time!" The image credits Jeff Tao et al., SIGMOD '25, and links to https://rm.cab/bayesqo

For that one query that must go 𝑟𝑒𝑎𝑙𝑙𝑦 𝑓𝑎𝑠𝑡, BayesQO (by Jeff Tao) finds superoptimized plans using Bayesian optimization in a learned plan space. The search is costly, but it also yields a library of high-quality plans that can train an LLM to generate good plans directly next time.

📄https://rm.cab/bayesqo

03.06.2025 19:34
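To make the "Bayesian optimization in a learned plan space" loop concrete, here is a minimal sketch, not BayesQO's actual implementation. It assumes a tiny NumPy Gaussian process as the surrogate and a lower-confidence-bound rule for picking the next vector; the thread does not say which surrogate or acquisition function the paper uses. `toy_latency` is a hypothetical stand-in for "decode the vector into a plan with the VAE decoder, run it, and time it".

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    # Squared-exponential kernel between two sets of latent vectors.
    sq_dists = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * sq_dists / length_scale**2)

def gp_posterior(x_seen, y_seen, x_cand, noise=1e-4):
    # Gaussian-process posterior mean and standard deviation at the candidates.
    k_ss = rbf_kernel(x_seen, x_seen) + noise * np.eye(len(x_seen))
    k_sc = rbf_kernel(x_seen, x_cand)
    solved = np.linalg.solve(k_ss, k_sc)  # K^-1 k_sc, shape (n_seen, n_cand)
    mean = solved.T @ (y_seen - y_seen.mean()) + y_seen.mean()
    var = 1.0 - (k_sc * solved).sum(axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))

def toy_latency(z):
    # Hypothetical: a real system would decode z into a plan and execute it.
    return float(((z - 0.3) ** 2).sum() + 0.1)

def optimize(n_iters=20, dim=2, seed=0):
    rng = np.random.default_rng(seed)
    # Start from a few random latent vectors and their measured latencies.
    x_seen = rng.uniform(-1, 1, size=(3, dim))
    y_seen = np.array([toy_latency(z) for z in x_seen])
    for _ in range(n_iters):
        cand = rng.uniform(-1, 1, size=(256, dim))
        mean, std = gp_posterior(x_seen, y_seen, cand)
        # Lower confidence bound: prefer low predicted latency or high uncertainty.
        pick = cand[np.argmin(mean - 2.0 * std)]
        x_seen = np.vstack([x_seen, pick])
        y_seen = np.append(y_seen, toy_latency(pick))
    best = int(np.argmin(y_seen))
    return x_seen[best], float(y_seen[best]), y_seen

best_z, best_latency, history = optimize()
```

Every entry in `history` corresponds to one executed plan, which is why the search is expensive and why the plans it collects along the way are worth keeping.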

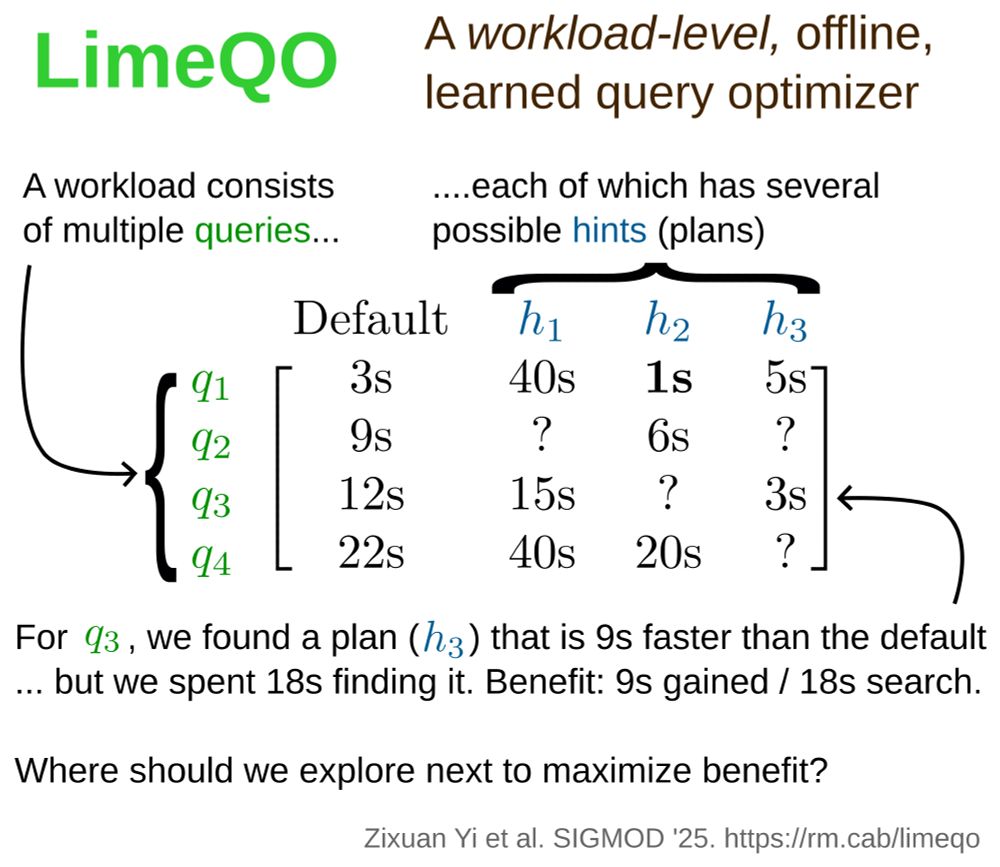

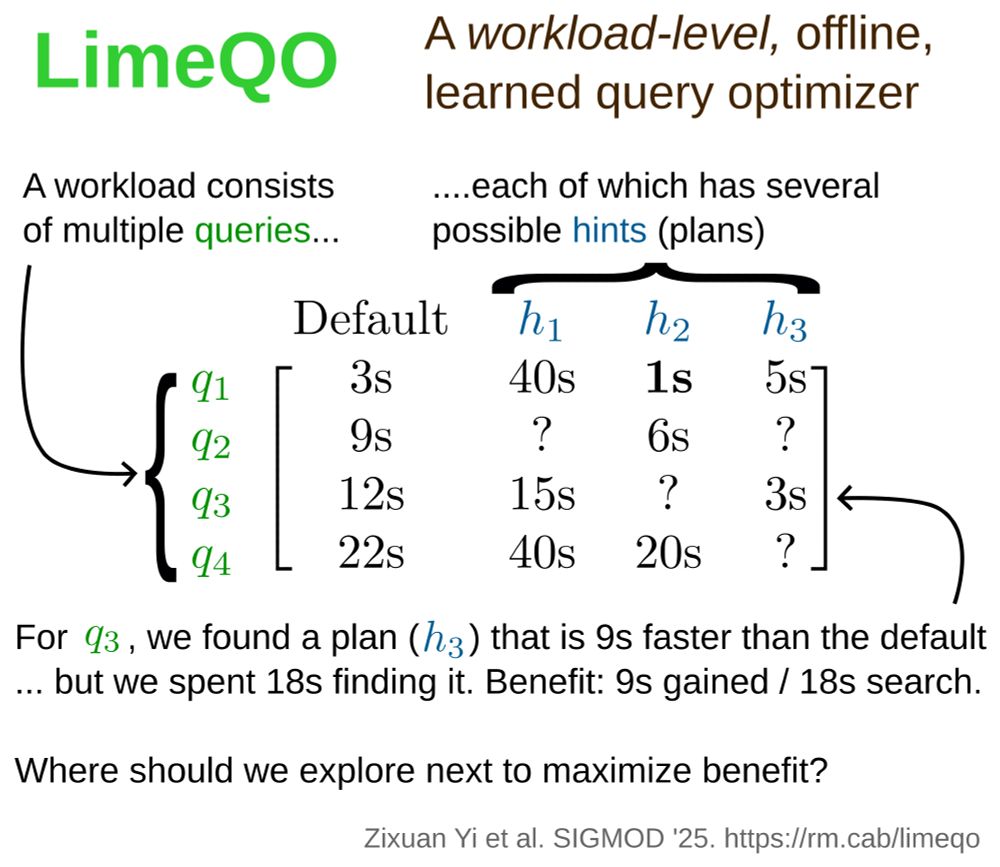

Infographic describing LimeQO, a workload-level, offline, learned query optimizer. On the left, it shows a workload consisting of multiple queries (q₁ to q₄), each with a default execution time (3s, 9s, 12s, 22s respectively). On the right, alternate plans (h₁, h₂, h₃) show varying execution times for each query, with some entries missing (represented by question marks). For example, q₁ takes 1s under h₂, much faster than the 3s default. A specific callout highlights that for q₃, plan h₃ reduced the time from 12s to 3s, but took 18s to find, resulting in a benefit of 9s gained / 18s search. The image poses the question: “Where should we explore next to maximize benefit?” The image credits Zixuan Yi et al., SIGMOD '25, and provides a link: https://rm.cab/limeqo

LimeQO (by Zixuan Yi), a 𝑤𝑜𝑟𝑘𝑙𝑜𝑎𝑑-𝑙𝑒𝑣𝑒𝑙 approach to query optimization, can use neural networks or simple linear methods to find good query hints significantly faster than a random or brute-force search.

📄https://rm.cab/limeqo

03.06.2025 19:34
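The arithmetic in the LimeQO figure's callout can be written out as a short sketch. Only the numbers named in the image description are used; the dictionary layout and the function names are assumptions for illustration, not LimeQO's API. Cells that the description does not give are left as NaN, and the rows for q₂ and q₄ are omitted because their alternate-plan timings are not listed.

```python
import math

# Default execution time per query, in seconds (from the figure description).
default = {"q1": 3.0, "q2": 9.0, "q3": 12.0, "q4": 22.0}

# Observed times under alternate plans; math.nan marks a cell not stated here.
observed = {
    "q1": {"h1": math.nan, "h2": 1.0, "h3": math.nan},
    "q3": {"h1": math.nan, "h2": math.nan, "h3": 3.0},
}

def benefit_per_search_second(default_s, new_s, search_s):
    # Seconds saved per execution, divided by seconds spent finding the plan.
    return (default_s - new_s) / search_s

def unexplored(latencies):
    # (query, plan) pairs that have no recorded time yet.
    return [(q, h) for q, row in latencies.items()
            for h, s in row.items() if math.isnan(s)]

# q3 under h3: 12s -> 3s, found after 18s of search.
ratio = benefit_per_search_second(default["q3"], observed["q3"]["h3"], 18.0)
print(ratio)  # 0.5
print(unexplored(observed))
```

The figure's question, "Where should we explore next to maximize benefit?", amounts to choosing which of the unexplored cells to run so that this ratio stays high across the whole workload.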

OLAP workloads are dominated by repetitive queries -- how can we optimize them?

A promising direction is to do 𝗼𝗳𝗳𝗹𝗶𝗻𝗲 query optimization, allowing for a much more thorough plan search.

Two new SIGMOD papers! 🧵

03.06.2025 19:34