NeurIPS 2025 Workshop DBM

Welcome to the OpenReview homepage for NeurIPS 2025 Workshop DBM

🚨 Deadline Extended 🚨

The submission deadline for the Data on the Brain & Mind Workshop (NeurIPS 2025) has been extended to Sep 8 (AoE)! 🧠✨

We invite you to submit your findings or tutorials via the OpenReview portal:

openreview.net/group?id=Neu...

27.08.2025 19:45 — 👍 4 🔁 2 💬 0 📌 0

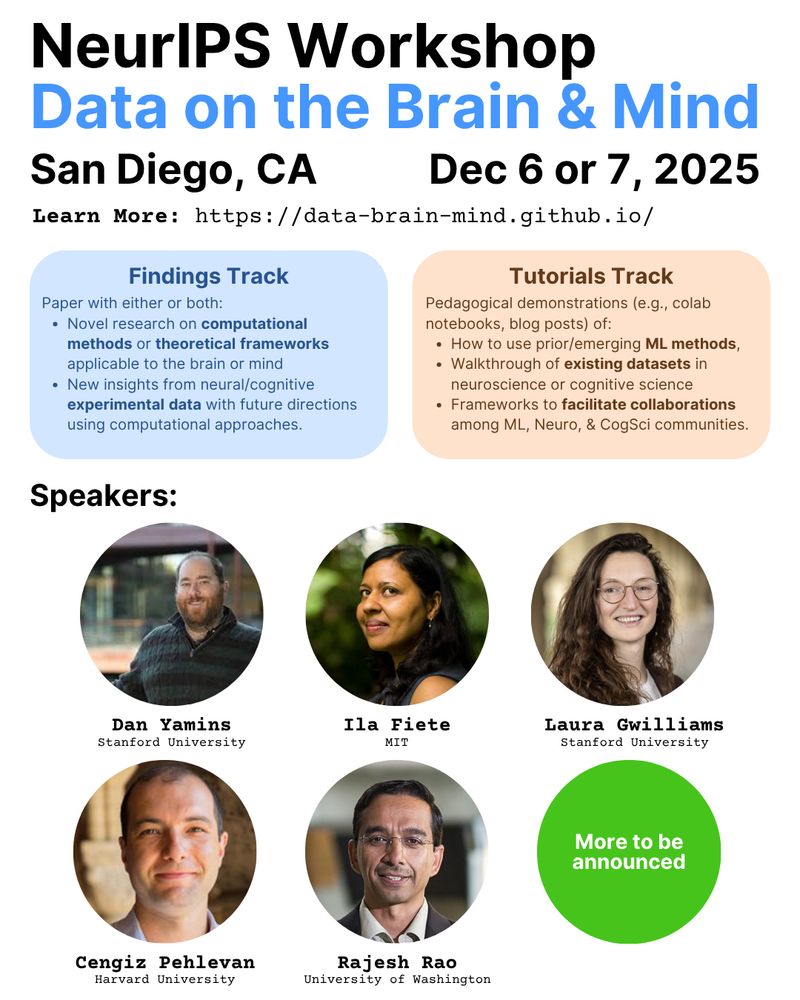

Data on the Brain & Mind

📢 10 days left to submit to the Data on the Brain & Mind Workshop at #NeurIPS2025!

📝 Call for:

• Findings (4 or 8 pages)

• Tutorials

If you’re submitting to ICLR or NeurIPS, consider submitting here too—and highlight how to use a cog neuro dataset in our tutorial track!

🔗 data-brain-mind.github.io

25.08.2025 15:43 — 👍 8 🔁 5 💬 0 📌 0

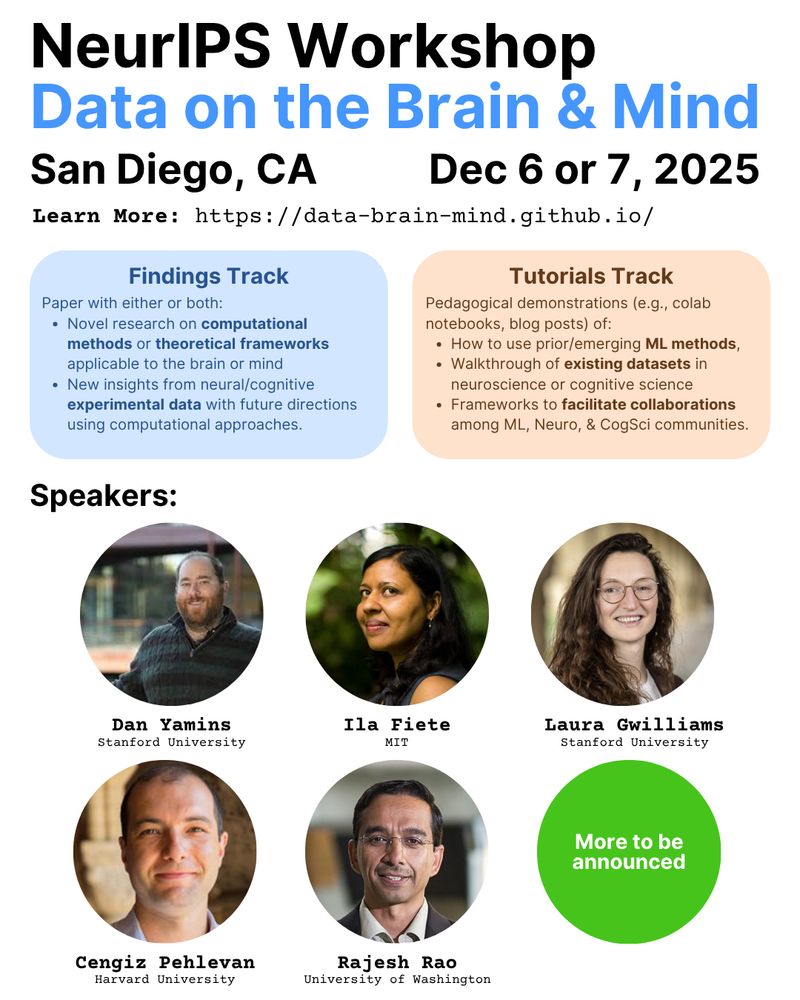

🚨 Excited to announce our #NeurIPS2025 Workshop: Data on the Brain & Mind

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

04.08.2025 15:28 — 👍 31 🔁 10 💬 0 📌 3

Normalizing Flows (NFs) check all boxes for RL: exact likelihoods (imitation learning), efficient sampling (real-time control), and variational inference (Q-learning)! Yet they are overlooked over more expensive and less flexible contemporaries like diffusion models.

Are NFs fundamentally limited?

05.06.2025 17:05 — 👍 5 🔁 1 💬 1 📌 1

How can agents trained to reach (temporally) nearby goals generalize to attain distant goals?

Come to our #ICLR2025 poster now to discuss 𝘩𝘰𝘳𝘪𝘻𝘰𝘯 𝘨𝘦𝘯𝘦𝘳𝘢𝘭𝘪𝘻𝘢𝘵𝘪𝘰𝘯!

w/ @crji.bsky.social and @ben-eysenbach.bsky.social

📍Hall 3 + Hall 2B #637

26.04.2025 02:12 — 👍 1 🔁 0 💬 0 📌 0

🚨Our new #ICLR2025 paper presents a unified framework for intrinsic motivation and reward shaping: they signal the value of the RL agent’s state🤖=external state🌎+past experience🧠. Rewards based on potentials over the learning agent’s state provably avoid reward hacking!🧵

26.03.2025 00:05 — 👍 10 🔁 3 💬 1 📌 1

...but to create truly autonomous self-improving agents, we must not only imitate, but also 𝘪𝘮𝘱𝘳𝘰𝘷𝘦 upon the training capabilities. Our findings suggest that this improvement might emerge from better task representations, rather than more complex learning algorithms. 7/

14.02.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

𝘞𝘩𝘺 𝘥𝘰𝘦𝘴 𝘵𝘩𝘪𝘴 𝘮𝘢𝘵𝘵𝘦𝘳? Recent breakthroughs in both end-to-end robot learning and language modeling have been enabled not through complex TD-based reinforcement learning objectives, but rather through scaling imitation with large architectures and datasets... 6/

14.02.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

We validated this in simulation. Across offline RL benchmarks, imitation using our TRA task representations outperformed standard behavioral cloning-especially for stitching tasks. In many cases, TRA beat "true" value-based offline RL, using only an imitation loss. 5/

14.02.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

Successor features have long been known to boost RL generalization (Dayan, 1993). Our findings suggest something stronger: successor task representations produce emergent capabilities beyond training even without RL or explicit subtask decomposition. 4/

14.02.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

This trick encourages a form of time invariance during learning: both nearby and distant goals are represented similarly. By additionally aligning language instructions 𝜉(ℓ) to the goal representations 𝜓(𝑔), the policy can also perform new compound language tasks. 3/

14.02.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

What does temporal alignment mean? When training, our policy imitates the human actions that lead to the end goal 𝑔 of a trajectory. Rather than training on the raw goals, we use a representation 𝜓(𝑔) that aligns with the preceding state “successor features” 𝜙(𝑠). 2/

14.02.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

Current robot learning methods are good at imitating tasks seen during training, but struggle to compose behaviors in new ways. When training imitation policies, we found something surprising—using temporally-aligned task representations enabled compositional generalization. 1/

14.02.2025 01:39 — 👍 2 🔁 0 💬 1 📌 0

Excited to share new work led by @vivekmyers.bsky.social and @crji.bsky.social that proves you can learn to reach distant goals by solely training on nearby goals. The key idea is a new form of invariance. This invariance implies generalization w.r.t. the horizon.

06.02.2025 01:13 — 👍 13 🔁 3 💬 0 📌 0

Want to see an agent carry out long horizons tasks when only trained on short horizon trajectories?

We formalize and demonstrate this notion of *horizon generalization* in RL.

Check out our website! horizon-generalization.github.io

04.02.2025 20:50 — 👍 11 🔁 4 💬 0 📌 0

What does this mean in practice? To generalize to long-horizon goal-reaching behavior, we should consider how our GCRL algorithms and architectures enable invariance to planning. When possible, prefer architectures like quasimetric networks (MRN, IQE) that enforce this invariance. 6/

04.02.2025 20:37 — 👍 1 🔁 0 💬 1 📌 0

Empirical results support this theory. The degree of planning invariance and horizon generalization is correlated across environments and GCRL methods. Critics parameterized as a quasimetric distance indeed tend to generalize the most over horizon. 5/

04.02.2025 20:37 — 👍 0 🔁 0 💬 1 📌 0

Similar to how CNN architectures exploit the inductive bias of translation-invariance for image classification, RL policies can enforce planning invariance by using a *quasimetric* critic parameterization that is guaranteed to obey the triangle inequality. 4/

04.02.2025 20:37 — 👍 3 🔁 0 💬 1 📌 1

The key to achieving horizon generalization is *planning invariance*. A policy is planning invariant if decomposing tasks into simpler subtasks doesn't improve performance. We prove planning invariance can enable horizon generalization. 3/

04.02.2025 20:37 — 👍 4 🔁 2 💬 1 📌 0

Certain RL algorithms are more conducive to horizon generalization than others. Goal-conditioned (GCRL) methods with a bilinear critic ϕ(𝑠)ᵀψ(𝑔) as well as quasimetric methods better-enable horizon generalization. 2/

04.02.2025 20:37 — 👍 3 🔁 0 💬 1 📌 0

Reinforcement learning agents should be able to improve upon behaviors seen during training.

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

04.02.2025 20:37 — 👍 34 🔁 7 💬 1 📌 3

Learning to Assist Humans without Inferring Rewards

Website: empowering-humans.github.io

Paper: arxiv.org/abs/2411.02623

Many thanks to wonderful collaborators Evan Ellis, Sergey Levine, Benjamin Eysenbach, and Anca Dragan!

22.01.2025 02:17 — 👍 0 🔁 0 💬 0 📌 0

Effective empowerment could also be combined with other objectives (e.g., RLHF), to improve assistance and promote safety (prevent human disempowerment). 6/

22.01.2025 02:17 — 👍 0 🔁 0 💬 1 📌 0

In principle, this approach provides a general way to align RL agents from human interactions without needing human feedback or other rewards. 5/

22.01.2025 02:17 — 👍 0 🔁 0 💬 1 📌 0

We show that optimizing this human effective empowerment helps in assistive settings. Theoretically, we show that maximizing the effective empowerment optimizes an (average-case) lower bound the human's utility/reward/objective under a uninformative prior. 4/

22.01.2025 02:17 — 👍 0 🔁 0 💬 1 📌 0

Our recent paper, "Learning to Assist Humans Without Inferring Rewards," proposes a scalable contrastive estimator for human empowerment. The estimator learns successor features to model the effects of a human's actions on the environment, approximating the *effective empowerment*. 3/

22.01.2025 02:17 — 👍 0 🔁 0 💬 1 📌 0

This distinction is subtle but important. An agent that maximizes a misspecified model of the human's reward or seeks power for itself can lead to arbitrarily bad outcomes where the human becomes disempowered. Maximizing human empowerment avoids this. 2/

22.01.2025 02:17 — 👍 0 🔁 0 💬 1 📌 0

Postdoc at Helmholtz Munich (Schulz lab) and MPI for Biological Cybernetics (Dayan lab) || Ph.D. from EPFL (Gerstner lab) || Working on computational models of learning and decision-making in the brain; https://sites.google.com/view/modirsha

Senior Research Fellow @ ucl.ac.uk/gatsby & sainsburywellcome.org

{learning, representations, structure} in 🧠💭🤖

my work 🤓: eringrant.github.io

not active: sigmoid.social/@eringrant @eringrant@sigmoid.social, twitter.com/ermgrant @ermgrant

Workshop at #NeurIPS2025 aiming to connect machine learning researchers with neuroscientists and cognitive scientists by focusing on concrete, open problems grounded in emerging neural and behavioral datasets.

🔗 https://data-brain-mind.github.io

(he/him) Assistant Professor of Cognitive Science at Central European University in Vienna, PI of the CEU Causal Cognition Lab (https://ccl.ceu.edu) #CogSci #PsychSciSky #SciSky

Personal site: https://www.jfkominsky.com

PhD student in Reinforcement Learning at KTH Stockholm 🇸🇪

https://www.kth.se/profile/stesto

https://www.linkedin.com/in/stojanovic-stefan/

AI PhD student at Berkeley

alyd.github.io

Alignment and coding agents. AI @ BAIR | Scale | Imbue

http://evanellis.com/

PhD @ Warsaw University of Technology

JacGCRL

Reinforcement Learning / Continual Learning / Neural Networks Plasticity

princeton physics phd

mit '23 physics + math

RL, interpretable AI4Science, stat phys

Assistant professor at Princeton CS working on reinforcement learning and AI/ML.

Site: https://ben-eysenbach.github.io/

Lab: https://princeton-rl.github.io/

Persian psychologist

Therapist for depression and anxiety disorders.

Research Scientist at DeepMind. Opinions my own. Inventor of GANs. Lead author of http://www.deeplearningbook.org . Founding chairman of www.publichealthactionnetwork.org

VP of Research, GenAI @ Meta (Multimodal LLMs, AI Agents), UPMC Professor of Computer Science at CMU, ex-Director of AI research at @Apple, co-founder Perceptual Machines (acquired by Apple)

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

PhD student @uwnlp.bsky.social @uwcse.bsky.social | visiting researcher @MetaAI | previously @jhuclsp.bsky.social

https://stellalisy.com

AI interp @UC Berkeley | prev. MIT

jiahai-feng.github.io

Research Director, Founding Faculty, Canada CIFAR AI Chair @VectorInst.

Full Prof @UofT - Statistics and Computer Sci. (x-appt) danroy.org

I study assumption-free prediction and decision making under uncertainty, with inference emerging from optimality.

Assistant Professor at UW and Staff Research Scientist at Google DeepMind. Social Reinforcement Learning in multi-agent and human-AI interactions. PhD from MIT. Check out https://socialrl.cs.washington.edu/ and https://natashajaques.ai/.