The human capacity for abstraction applies not just to language but to every subject we reason about.

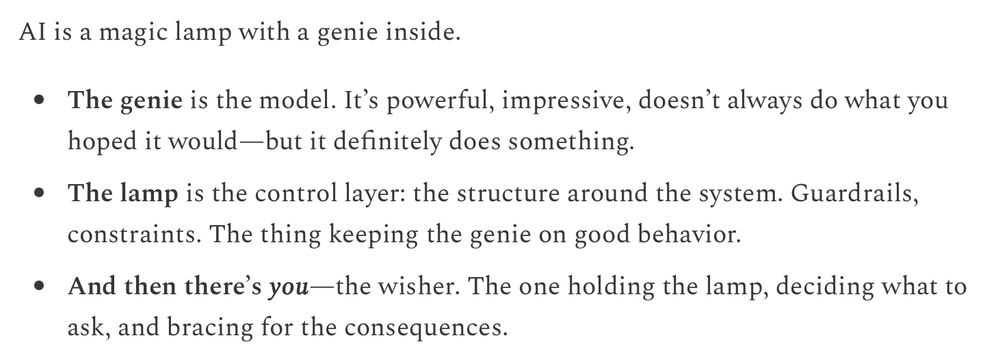

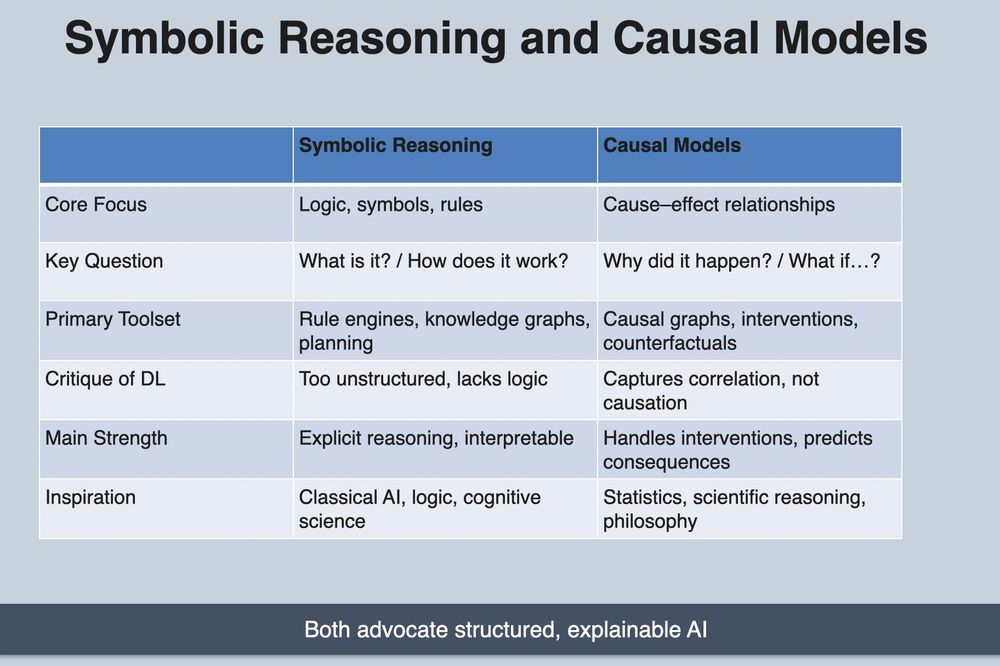

AI won’t reach its potential till we learn to blend symbolic and causal capabilities with the statistical pattern matching that powers today’s LLMs.

#AI #NeurosymbolicAI #CausalAI

22.06.2025 22:41 — 👍 0 🔁 0 💬 0 📌 0

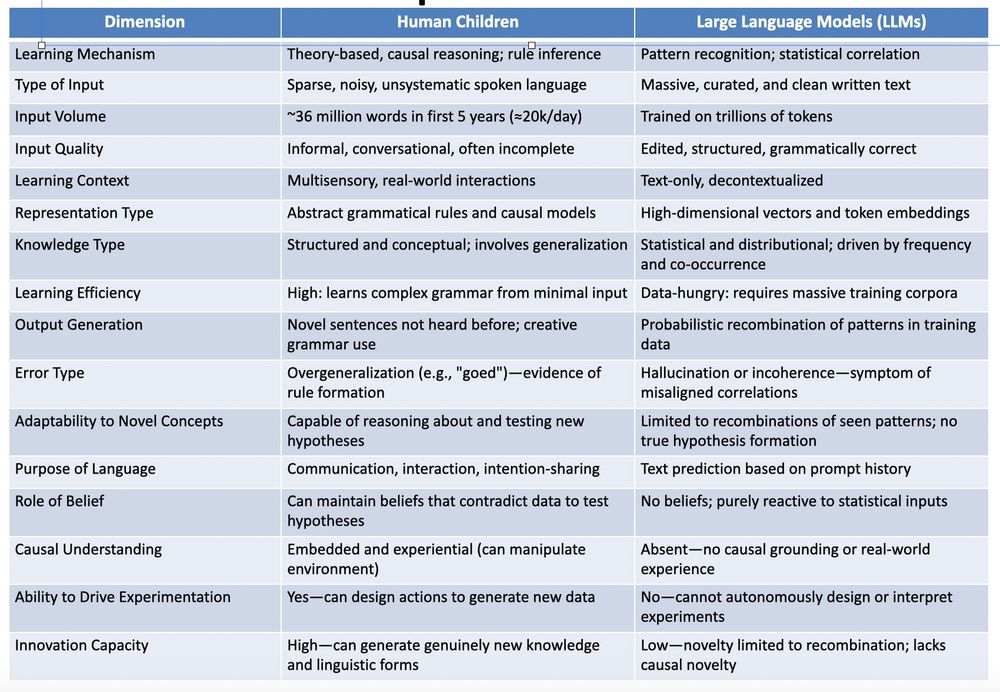

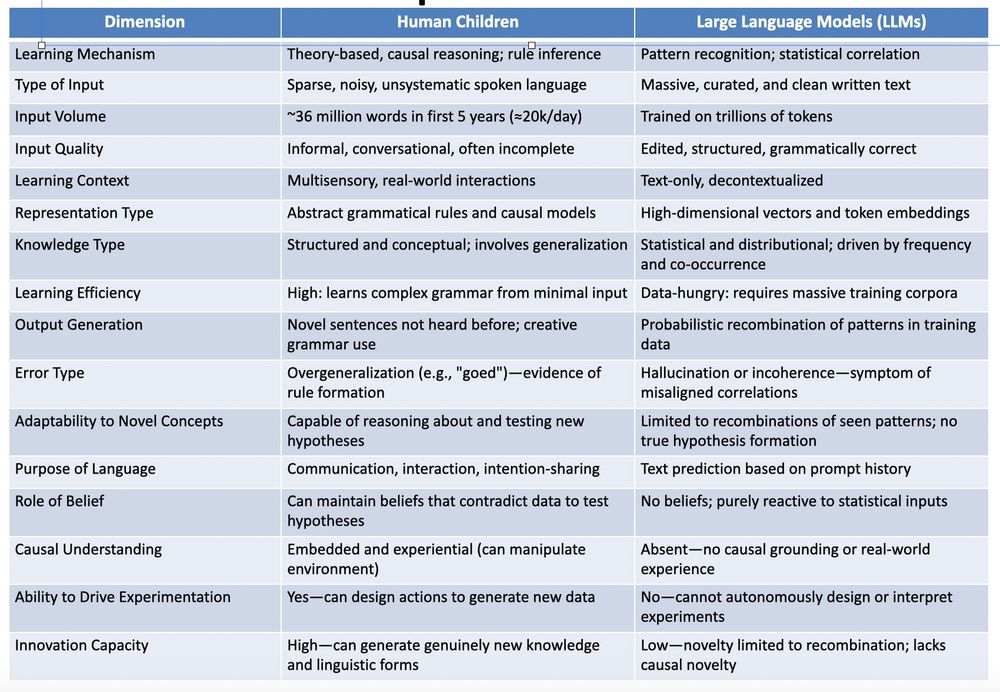

Instead of relying only on patterns in input, humans:

+ Form internal, rule-based models of language structure (e.g. grammar, syntax).

+ Infer underlying rules even when they’re not explicitly taught.

+ Use these abstractions to generalize beyond what they’ve directly heard.

2/n

22.06.2025 22:40 — 👍 0 🔁 0 💬 1 📌 0

Table comparing human and LLM language learning patterns

LLMs lack the “theoretical abstraction” capability we see in children.

Multiple folks have pointed this out, for example @teppofelin.bsky.social and Holweg in “Theory Is All You Need: AI, Human Cognition, and Causal Reasoning”. papers.ssrn.com/sol3/papers....

#AI #CausalAI #SymbolicAI

1/n

22.06.2025 22:39 — 👍 2 🔁 0 💬 1 📌 0

Enjoying the graphic.

On your list of ways to address these concerns, where would you put implementing neurosymbolic AI?

Seems to me that combining deep learning (LLMs) with symbolic/causal models could go a long way toward creating more reliable, auditable, and aligned AI.

#AI

19.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0

@jwmason.bsky.social The other thing worth knowing is that bigger LLMs are not the only path forward for AI. Combining LLMs with symbolic/causal models promises hybrid AI systems that reflect the world as it is far more reliably.

#AI #SymbolicAI #CausalAI

19.06.2025 15:06 — 👍 3 🔁 0 💬 0 📌 0

LLMs form a semi-accurate representation of the world as it is reflected in the writing they train on. A next step would be to create hybrid AIs that combine LLMs with symbolic and causal models that have explicit (and more accurate/auditable) representations of the world. #AI #SymbolicAI #CausalAI

19.06.2025 14:57 — 👍 1 🔁 0 💬 0 📌 0

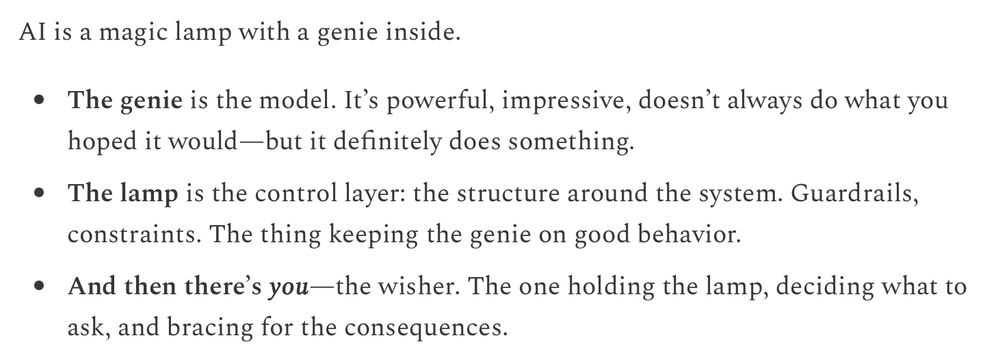

The opportunity here is for us to perfect hybrid systems that integrate deep learning with symbolic reasoning and causal understanding. This would reduce our dependence on filtering out bad consequences, because the models themselves would be inherently more reliable.

#AI #CausalAI #SymbolicAI

18.06.2025 19:49 — 👍 1 🔁 0 💬 1 📌 0

Progress on the "control layer" feels far behind our breakthroughs with the "genie".

A control layer that acts as a smart filter on the input and output is helpful, but in the end it seems fundamentally wrongheaded.

2/n

18.06.2025 19:48 — 👍 0 🔁 0 💬 1 📌 0

“Wishes have consequences. Especially when they run in production.” - ain't that a fact!

Cassie Kozyrkov's genie metaphor rings true:

1/n

18.06.2025 19:48 — 👍 0 🔁 0 💬 1 📌 0

First, through a think-aloud study (N=16) in which participants use ChatGPT to answer objective questions, we identify 3 features of LLM responses that shape users' reliance: #explanations (supporting details for answers), #inconsistencies in explanations, and #sources.

2/7

28.02.2025 15:21 — 👍 3 🔁 1 💬 1 📌 0

@rohanpaul.bsky.social this post is feeling lonely ;-)

Why not cross-post on both X and Bluesky?

18.06.2025 00:14 — 👍 2 🔁 0 💬 1 📌 0

Table contrasting symbolic reasoning and causal models as next steps in AI evolution.

#SymbolicAI and #CausalAI: companions in the search for the next #AI breakthrough

17.06.2025 23:51 — 👍 1 🔁 0 💬 0 📌 0

When are AI/ML models unlikely to help with decision-making? | Statistical Modeling, Causal Inference, and Social Science

@jessicahullman.bsky.social persuasively argues that current AI is a poor tool for decisions that fit the FIRE profile (forward-looking, individual/idiosyncratic, requiring reasoning or experimentation/intervention).

Hmm … does this call for #CausalAI?

statmodeling.stat.columbia.edu/2025/06/05/w...

17.06.2025 22:35 — 👍 1 🔁 0 💬 0 📌 0

For example, Apple’s approach of letting the model call back into app code as it reasons, together with its support for multi-layered guardrails, illustrates what Apple has learned about needing components with use-case-specific checks and balances.

#PervasiveAI #AgenticAI #AppleAI #AISafety

2/n

17.06.2025 22:22 — 👍 0 🔁 0 💬 0 📌 0

Takeaways from Apple opening up its on-device LLM:

1) In the medium term, “Pervasive AI” will have more reach than “Agentic AI”

2) AI is best implemented as a system of components, not a single black-box neural net

3) Use-case-specific adjustments are needed to balance latency, cost, reliability, and safety

1/n

17.06.2025 22:21 — 👍 1 🔁 0 💬 1 📌 0

Urban Planner, Partner in Speck Dempsey, author of Walkable City, Walkable City Rules, and other stuff I'd like you to read.

Waiting on a robot body. All opinions are universal and held by both employers and family. ML/NLP professor.

nsaphra.net

Research Scientist at Google Research, working on Bio-inspired AI • PhD at Mila & McGill University, Vanier scholar • Ex-RealityLabs, Meta AI • Comedy+Cricket enthusiast

Writer. Blogger. Truth-teller from Donbas🇺🇦

PayPal: angelica.shalagina@gmail.com

💛Friends, please beware of fakes - only these links mine👇

https://linktr.ee/angelica.shala

Director of Research, @dairinstitute.bsky.social

Roller derby athlete

https://alex-hanna.com

Book: thecon.ai

Pod+newsletter: https://dair-institute.org/maiht3k

🇪🇬Ⲛⲓⲣⲉⲙⲛ̀ⲭⲏⲙⲓ

🏳️⚧️ she/هي

📸 @willtoft.bsky.social

Rep📘 @ianbonaparte.bsky.social

Book: https://thecon.ai

Web: https://faculty.washington.edu/ebender

ion foundation endowed prof | university of utah | cognition, AI, generative rationality, theory-based view, causal reasoning, economics, strategy

Computer Scientist, SAP Expert, IT Manager, Consultant, Independent AI Researcher, AI Engineer 👉 AI = Deep Learning + Causal Inference + Symbol Manipulation

Research Scientist in AI at Wimmics (Université Côte d'Azur, Inria, CNRS, I3S). Interested in Neurosymbolic AI, Knowledge graphs, Semantic Web, Graph Embedding. Spokesperson of @afiainfo.bsky.social

@piermonn@sigmoid.social

Nucleoid is open source #NeuroSymbolic #AI with Knowledge Graph | Reasoning Engine 🌿🌱🐋🌍 Star us ⭐ https://github.com/NucleoidAI/Nucleoid

I believe in self sovereign data #ssi , data economy and decentralized tech. I am democratizing personal and privacy-first #ai at affinidi.com

Assistant Professor at Imperial College London | EEE Department and I-X.

Neuro-symbolic AI, Safe AI, Generative Models

Previously: Post-doc at TU Wien, DPhil at the University of Oxford.

human being | assoc prof in #ML #AI #Edinburgh | PI of #APRIL | #reliable #probabilistic #models #tractable #generative #neuro #symbolic | heretical empiricist | he/him

👉 https://april-tools.github.io

Science, AI, Tech. #Neurosymbolic #AI #innovation PhD from Imperial College; MBA from MIT. Professor, researcher, manager, dad. WA, MA, UK, BR.

Associate professor of economics, John Jay College-CUNY, senior fellow at the Groundwork Collaborative. Blog and other writing: jwmason.org. Study economics with me: https://johnjayeconomics.org. Anti-war Keynesian, liberal socialist, Brooklyn dad.

Visual Investigations at The New York Times

CEO, AI Advisor, Keynote Speaker, fmr Chief Decision Scientist at Google

Newsletter: decision.substack.com

asst prof of computer science at cu boulder

nlp, cultural analytics, narratives, communities

books, bikes, games, art

https://maria-antoniak.github.io

Assistant Professor of Computer Science at Princeton | #HCI #AR #VR #SpatialComputing

parastooabtahi.com

hci.social/@parastoo