Common pitfalls (with examples) when building AI applications, both from public case studies and my personal experience.

huyenchip.com/2025/01/16/a...

Would love to hear about the pitfalls you've seen in your own experience!

@chiphuyen.bsky.social

AI x storytelling
AI Engineering: https://amazon.com/dp/1098166302
Designing ML Systems: http://amazon.com/dp/1098107969
@chipro

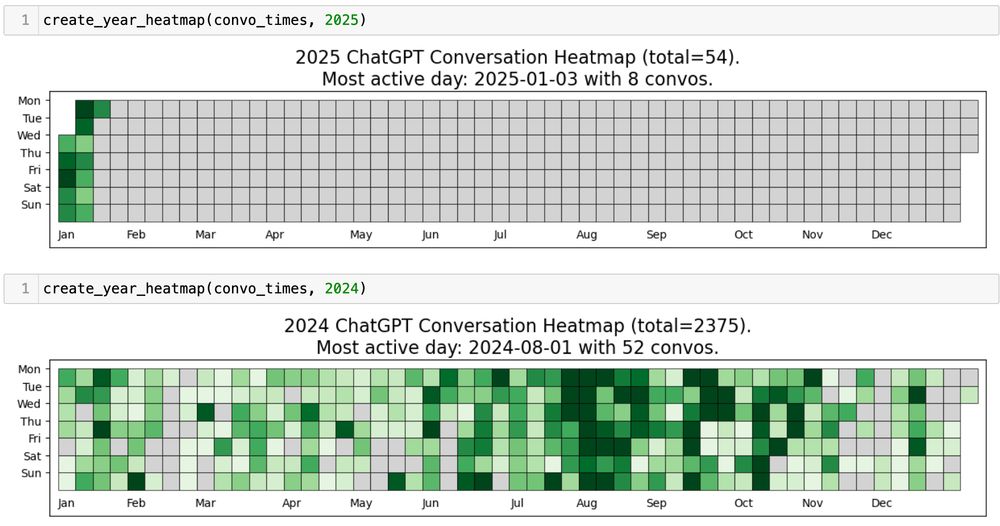

I'm using AI so much for work that I can tell how productive I am by how many conversations I've had with AI.

Script to generate this heatmap: github.com/chiphuyen/ai...
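As a rough illustration of the idea (not the linked script, whose contents aren't shown here), the data behind such a heatmap is a count of conversations per day. The sketch below assumes each conversation has an ISO 8601 start timestamp. The function name and the sample data are hypothetical.

```python
from collections import Counter
from datetime import date, datetime


def conversations_per_day(timestamps: list[str]) -> dict[date, int]:
    """Count conversations per calendar day from ISO 8601 start times."""
    days = (datetime.fromisoformat(ts).date() for ts in timestamps)
    return dict(Counter(days))


# Hypothetical sample data: one timestamp per conversation.
sample = [
    "2025-01-06T09:15:00",
    "2025-01-06T14:40:00",
    "2025-01-07T10:05:00",
]

counts = conversations_per_day(sample)

# Crude text rendering: one row per day, one mark per conversation.
for day, n in sorted(counts.items()):
    print(day.isoformat(), "#" * n)
```

A real heatmap would map these daily counts to color intensity on a calendar grid; the counting step stays the same.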

Finally got my copy! “AI Engineering” is officially out 🙏 🎉

It’s heavier than I expected (500 pages) and I’m so glad O’Reilly decided to publish it in color.

Thanks everyone for making this happen! Thank you for giving this book a chance!

My 8000-word note on agents: huyenchip.com/2025/01/07/...

1. An AI-powered agent's capability is determined by its tools and its planning ability

2. How to select the best tools for your agent

3. How to augment a model’s planning capability

4. Agent failure modes

Feedback is much appreciated!
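To make the first point in the list above concrete, here is a minimal sketch of an agent whose capability is bounded by its tool inventory and its plan. Everything in it is an assumption for illustration: the tool names, the `validate_plan` and `run_plan` helpers, and the hard-coded plan, which stands in for one a model would generate. It also shows one simple failure, a plan that names a tool the agent doesn't have.

```python
from typing import Callable

# Hypothetical tool inventory: each tool maps a string to a string.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "uppercase": lambda text: text.upper(),
}


def validate_plan(plan: list[str]) -> list[str]:
    """Return the steps that name tools missing from the inventory."""
    return [step for step in plan if step not in TOOLS]


def run_plan(plan: list[str], task_input: str) -> str:
    """Run each tool in order, feeding each output into the next step."""
    invalid = validate_plan(plan)
    if invalid:
        raise ValueError(f"plan uses unknown tools: {invalid}")
    result = task_input
    for step in plan:
        result = TOOLS[step](result)
    return result


# In a real agent the plan would come from a model; here it is hard-coded.
output = run_plan(["uppercase", "word_count"], "agents need tools and plans")
print(output)
```

Validating the plan before executing it is one design choice among several; it catches an invalid tool call before any step has side effects.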

O'Reilly said the first physical copies would appear around Dec 22, but my copies won't arrive until Jan 7 :(

13.12.2024 07:37

6. AI Incident Database

For those interested in seeing how AI can go wrong, this contains over 3000 reports of AI harms: incidentdatabase.ai

5. Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models (Lu et al., 2023)

A cool study on LLM planners, how they use tools, and their failure modes. An interesting finding is that different LLMs have different tool preferences: arxiv.org/abs/2304.09842

4. Efficiently Scaling Transformer Inference (Pope et al., 2022)

An amazing paper about inference optimization for transformers. It provides a guideline to optimize for different aspects, e.g. lowest possible latency, highest possible throughput, or longest context length: arxiv.org/abs/2211.05102

3. Llama 3 paper

The section on post-training data is a gold mine! It details different techniques they used to generate 2.7M examples for instruction finetuning. It also covers synthetic data verification! arxiv.org/abs/2407.21783

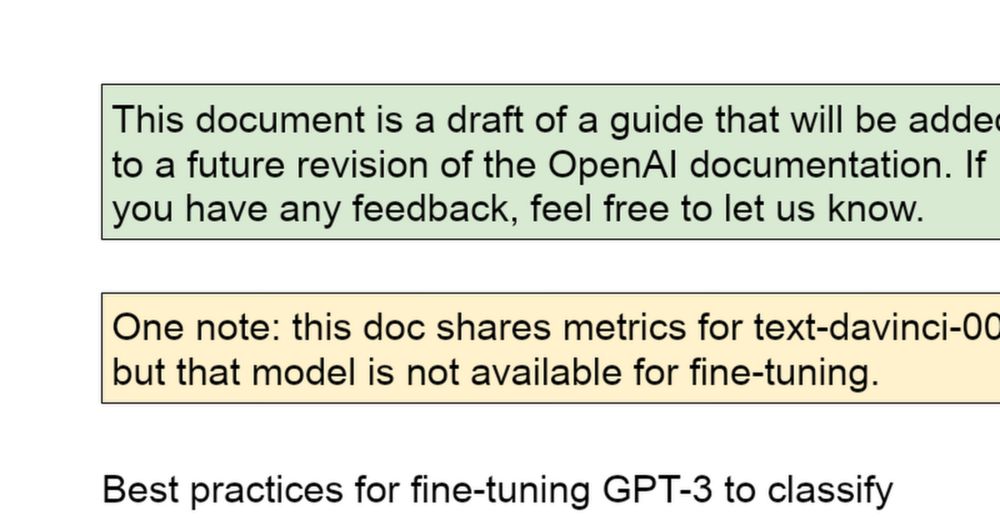

2. OpenAI’s best practices for finetuning

While this guide focuses on GPT-3, many techniques are applicable to finetuning in general. It explains how finetuning works, how to prepare training data, how to pick hyperparameters, and common finetuning mistakes: docs.google.com/document/d/1...

The highlights:

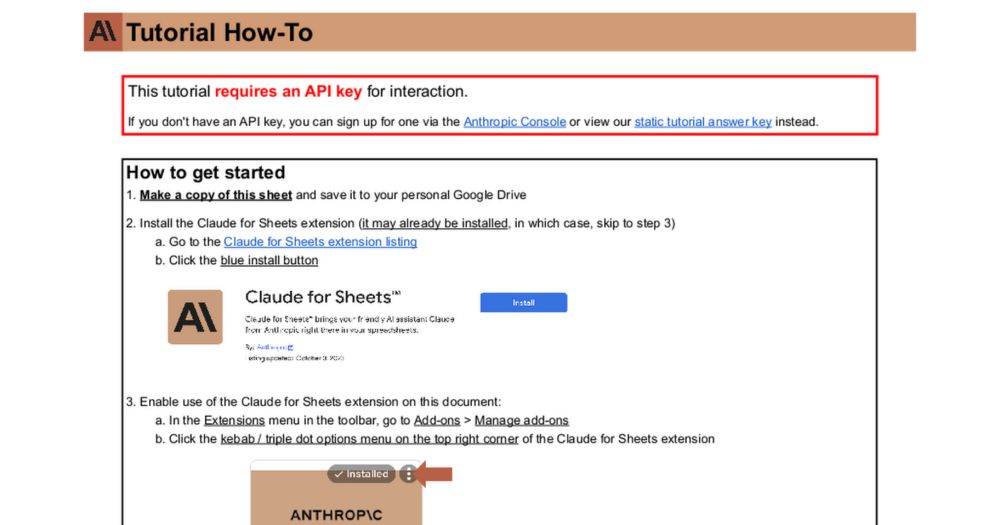

1. Anthropic’s Prompt Engineering Interactive Tutorial

The Google Sheets-based interactive exercises make it easy to experiment with different prompts. docs.google.com/spreadsheets...

When doing research for AI Engineering, I went through so many papers, case studies, blog posts, repos, tools, etc. This repo contains ~100 resources that really helped me understand various aspects of building with foundation models.

github.com/chiphuyen/ai...

Where are the AI people? Who should I follow?

06.12.2024 16:27

Hello, world. So I caved and got on Bsky :-)

I finally finished my book, AI Engineering, and I'm excited to get back to building. So many fun applications to build!

What are you excited about?