YouTube video by Andrew Perfors

Part 1: How do LLMs work?

I just created a series of seven deep-dive videos about AI, which I've posted to YouTube and now here. 😊

Targeted to laypeople, they explore how LLMs work, what they can do, and what impacts they have on learning, well-being, disinformation, the workplace, the economy, and the environment.

22.01.2026 00:45 — 👍 483 🔁 189 💬 19 📌 18

The cerebellum supports high-level language?? Now out in @cp-neuron.bsky.social, we systematically examined language-responsive areas of the cerebellum using precision fMRI and identified a *cerebellar satellite* of the neocortical language network!

authors.elsevier.com/a/1mUU83BtfH...

1/n 🧵👇

22.01.2026 17:21 — 👍 68 🔁 20 💬 2 📌 4

What does it mean to understand language?

Language understanding entails not just extracting the surface-level meaning of the linguistic input, but constructing rich mental models of the situation it describes. Here we propose that because pr...

What does it mean to understand language? We argue that the brain’s core language system is limited, and that *deeply* understanding language requires EXPORTING info to other brain regions.

w/ @neuranna.bsky.social @evfedorenko.bsky.social @nancykanwisher.bsky.social

arxiv.org/abs/2511.19757

1/n🧵👇

26.11.2025 16:26 — 👍 82 🔁 33 💬 2 📌 5

𝗔 𝗡𝗘𝗨𝗥𝗢𝗘𝗖𝗢𝗟𝗢𝗚𝗜𝗖𝗔𝗟 𝗣𝗘𝗥𝗦𝗣𝗘𝗖𝗧𝗜𝗩𝗘 𝗢𝗡 𝗧𝗛𝗘 𝗣𝗥𝗘𝗙𝗥𝗢𝗡𝗧𝗔𝗟 𝗖𝗢𝗥𝗧𝗘𝗫

By Mars and Passingham

"Understanding anthropoid foraging challenges may thus contribute to our understanding of human cognition"

Going to the top of the reading list!

doi.org/10.1016/j.ne...

#neuroskyence

11.10.2025 16:31 — 👍 62 🔁 16 💬 4 📌 2

PNAS

Proceedings of the National Academy of Sciences (PNAS), a peer reviewed journal of the National Academy of Sciences (NAS) - an authoritative source of high-impact, original research that broadly spans...

🚨Out in PNAS🚨

with @joshtenenbaum.bsky.social & @rebeccasaxe.bsky.social

Punishment, even when intended to teach norms and change minds for the good, may backfire.

Our computational cognitive model explains why!

Paper: tinyurl.com/yc7fs4x7

News: tinyurl.com/3h3446wu

🧵

08.08.2025 14:04 — 👍 66 🔁 28 💬 3 📌 1

YouTube video by McGovern Institute

Things and Stuff: How the brain distinguishes oozing fluids from solid objects

Super excited to share our new article: “Dissociable cortical regions represent things and stuff in the human brain” with @nancykanwisher.bsky.social, @rtpramod.bsky.social and @joshtenenbaum.bsky.social

Video abstract: www.youtube.com/watch?v=B0XR...

Paper: authors.elsevier.com/a/1lWxv3QW8S...

01.08.2025 13:50 — 👍 33 🔁 12 💬 1 📌 2

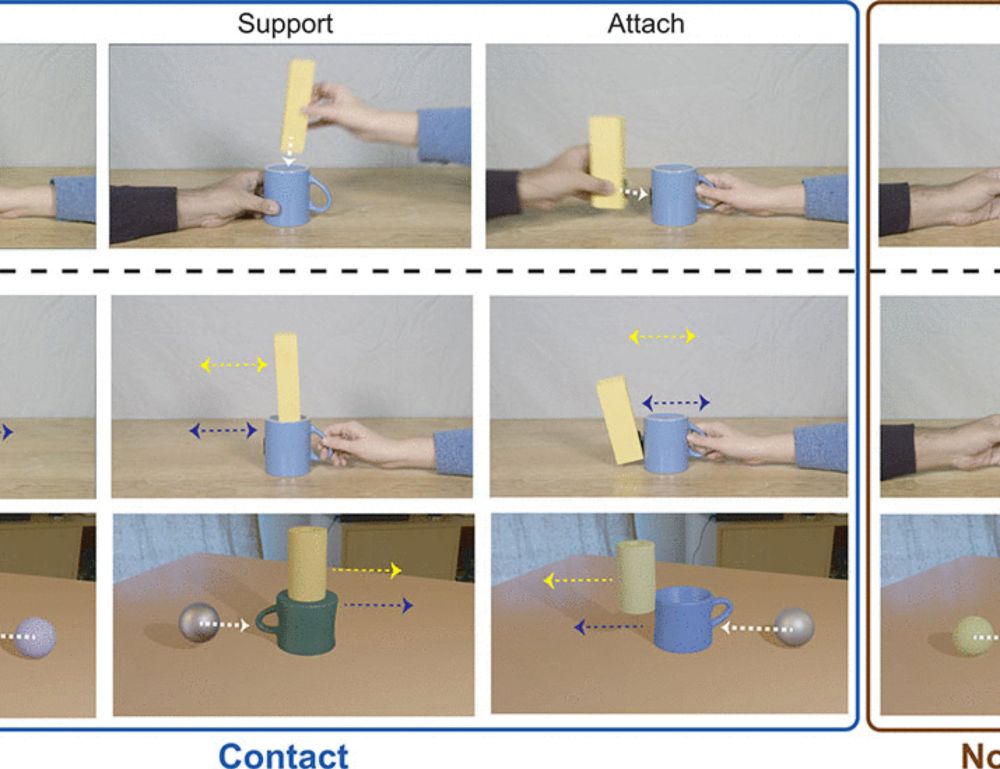

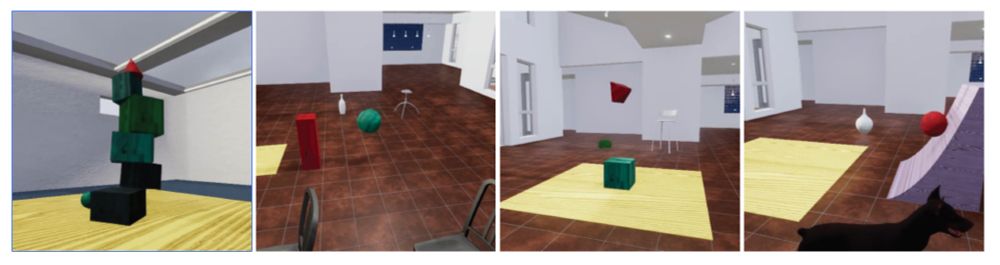

Can you tell if a tower will fall or if two objects will collide — just by looking? 🧠👀 Come check out my #CogSci2025 poster (P1-W-207) on July 31, 13:00–14:15 PT to learn how people do general-purpose physical reasoning from visual input!

29.07.2025 23:15 — 👍 13 🔁 4 💬 1 📌 0

Good question! We haven't tested the cases you mentioned, but Jason Fischer's 2016 paper found that PN doesn't respond strongly to social prediction (on Heider and Simmel-like displays).

19.06.2025 13:58 — 👍 1 🔁 0 💬 0 📌 0

We have started to look in the cerebellum. It is still early days so keep an eye out for updates in the future!

19.06.2025 13:54 — 👍 1 🔁 0 💬 0 📌 0

Decoding predicted future states from the brain’s “physics engine”

Using fMRI in humans, this study provides evidence for future state prediction in brain regions involved in physical reasoning.

Thrilled to announce our new publication titled 'Decoding predicted future states from the brain's physics engine' with @emiecz.bsky.social, Cyn X. Fang, @nancykanwisher.bsky.social, @joshtenenbaum.bsky.social

www.science.org/doi/full/10....

(1/n)

17.06.2025 18:23 — 👍 48 🔁 19 💬 1 📌 2

Thanks to my co-authors and all the people who gave constructive feedback over the course of this project! Special shout out to Kris Brewer for shooting the videos used in Experiment 1 and @georginawooxy.bsky.social for her deep neural network expertise.

(12/12)

17.06.2025 18:23 — 👍 1 🔁 1 💬 0 📌 0

Our findings show that PN carries abstract object-contact information and provide the strongest evidence yet that PN is engaged in predicting what will happen next. These results open many new avenues of investigation into how we understand, predict, and plan in the physical world.

(11/n)

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

Our main results are i) not present in the ventral temporal cortex, ii) not present in the primary visual cortex (i.e., our stimuli were unlikely to have low-level visual confounds), and iii) replicable with different analysis criteria and methods. See the paper for details.

(10/n)

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

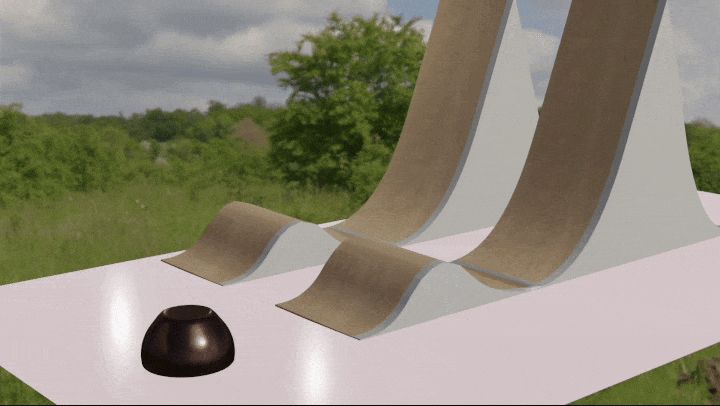

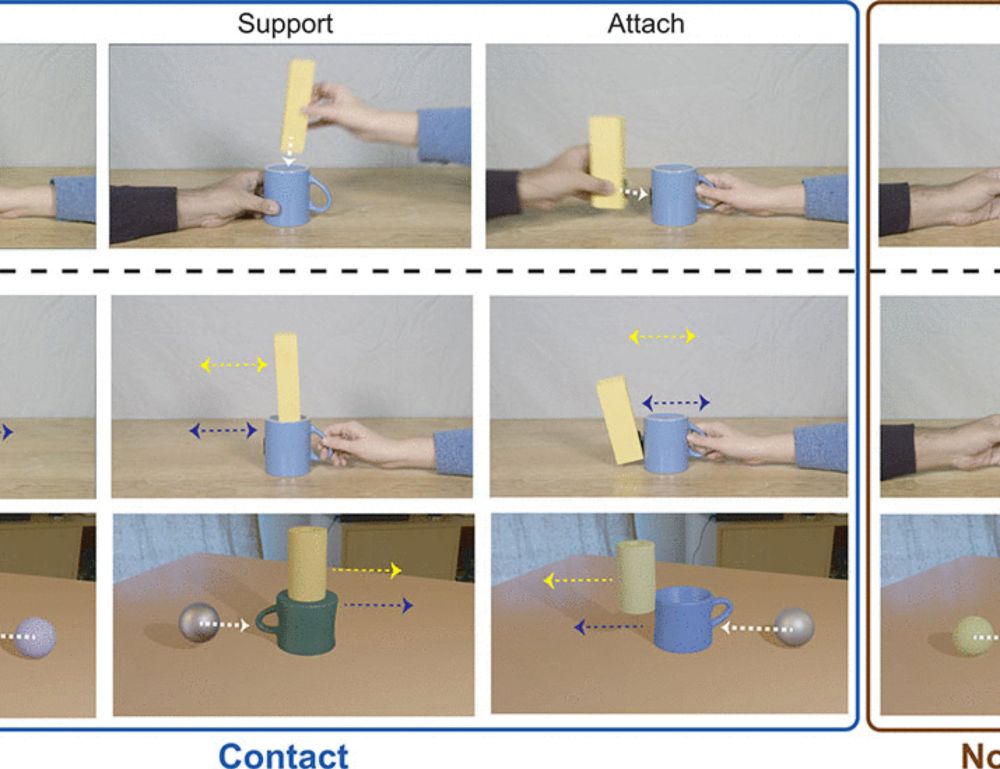

Short answer: Yes! Using MVPA, we found that the PN has information about predicted contact events (i.e., collisions). This was true not only within a scenario (the ‘roll’ scene above) but also across scenarios, indicating that the representation is abstract.

(9/n)

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

That is,

(8/n)

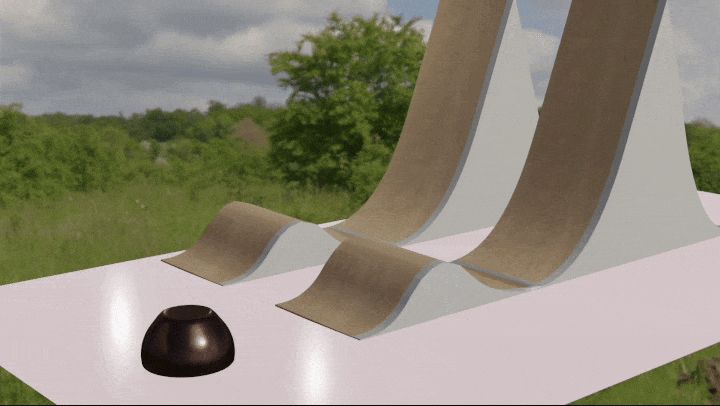

When we see this: Does the PN predict this?

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

In our second pre-registered fMRI experiment, we tested the central tenet of the ‘physics engine’ hypothesis – that the PN runs forward simulations to predict what will happen next. If true, PN should contain information about predicted future states before they occur.

(7/n)

17.06.2025 18:23 — 👍 2 🔁 0 💬 1 📌 0

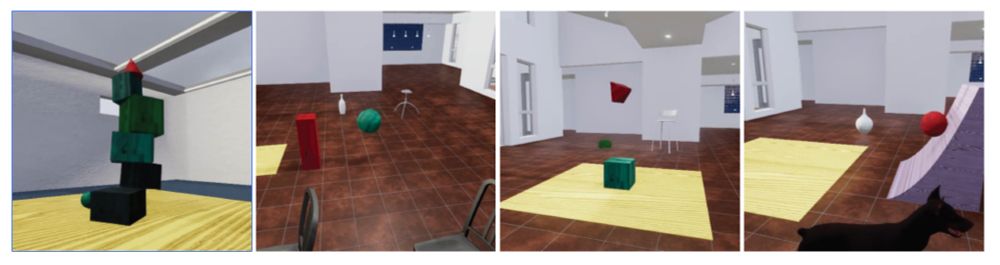

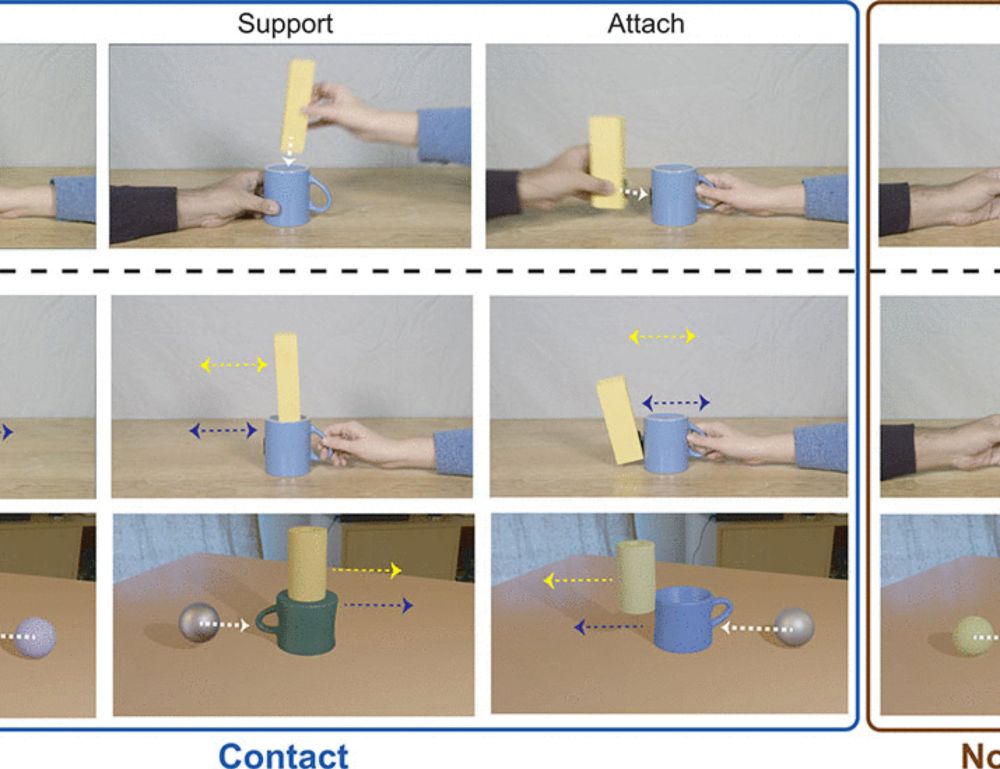

Given their importance for prediction, we hypothesized that the PN would encode object contact. In our first pre-registered fMRI experiment, we used multi-voxel pattern analysis (MVPA) and found that only PN carried scenario-invariant information about object contact.

(6/n)

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

If a container moves, then so does its containee, but the same is not true of an object that is merely occluded by the container without contacting it!

(5/n)

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

However, there was no evidence for such predicted future state information in the PN. We realized that object-object contact is an excellent way to test the Physics Engine hypothesis. When two objects are in contact, their fate is intertwined:

(4/n)

17.06.2025 18:23 — 👍 2 🔁 0 💬 1 📌 0

These results have led to the hypothesis that the Physics Network (PN) is our brain’s ‘Physics Engine’ – a generative model of the physical world (like those used in video games) capable of running simulations to predict what will happen next.

(3/n)

17.06.2025 18:23 — 👍 2 🔁 0 💬 1 📌 0

How do we understand, plan and predict in the physical world? Prior research has implicated fronto-parietal regions of the human brain (the ‘Physics Network’, PN) in physical judgement tasks, including in carrying representations of object mass & physical stability.

(2/n)

17.06.2025 18:23 — 👍 1 🔁 0 💬 1 📌 0

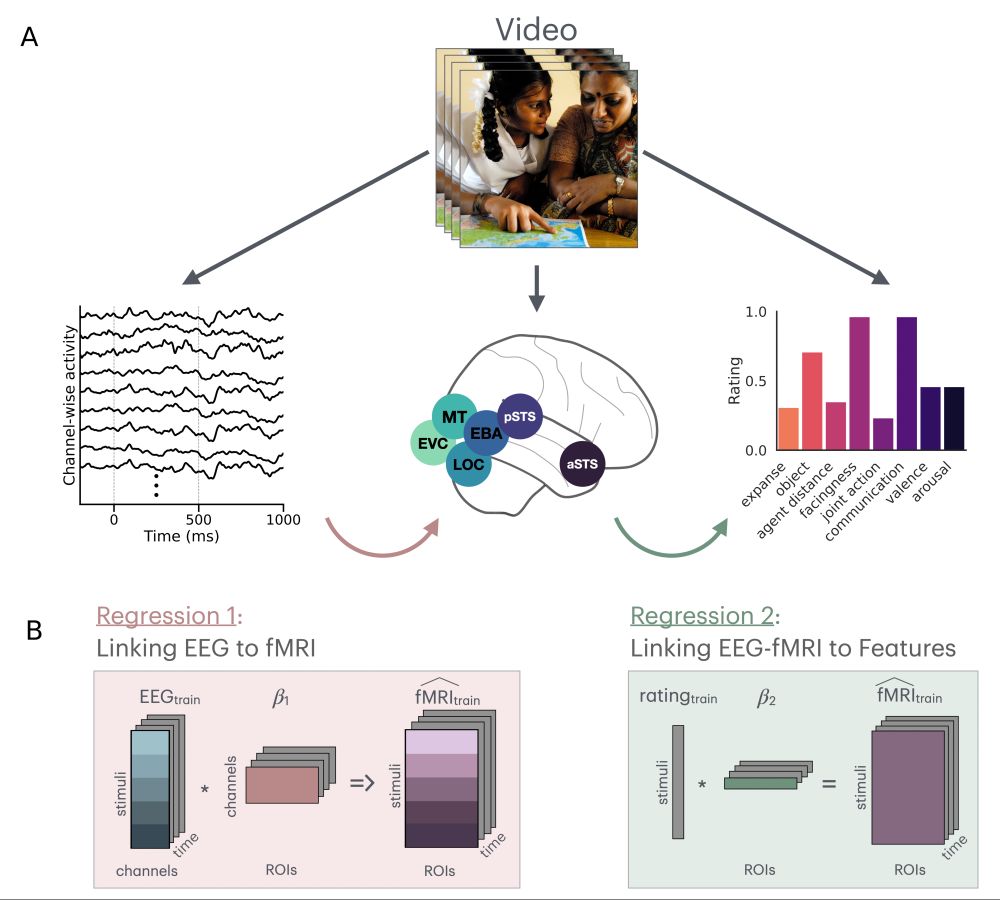

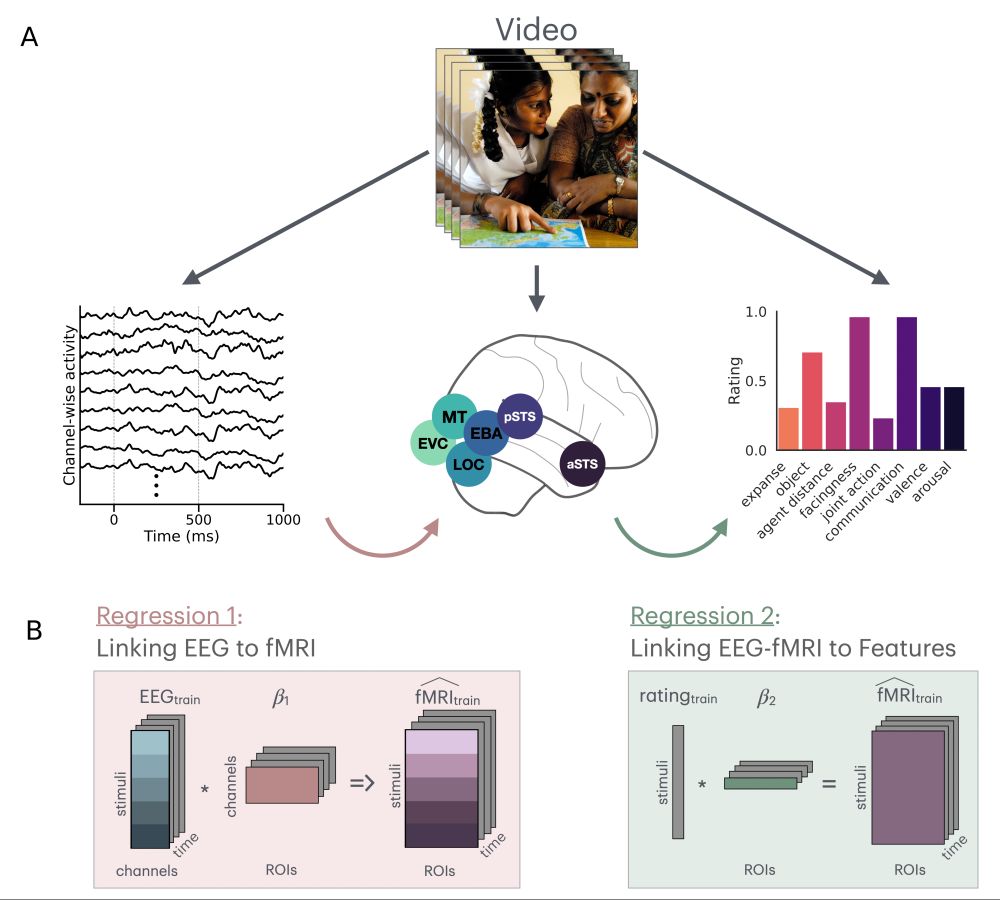

Shown is an example image that participants viewed in EEG, fMRI, and a behavioral annotation task. There is also a schematic of a regression procedure for jointly predicting fMRI responses from stimulus features and EEG activity.

I am excited to share our recent preprint and the last paper of my PhD! Here, @imelizabeth.bsky.social, @lisik.bsky.social, Mick Bonner, and I investigate the spatiotemporal hierarchy of social interactions in the lateral visual stream using EEG-fMRI.

osf.io/preprints/ps...

#CogSci #EEG

23.04.2025 15:34 — 👍 27 🔁 9 💬 1 📌 0

Video of a baby on its parent's chest looking at the parent's face and smiling.

When you see this image, does it make you wonder what that baby is thinking? Do you think the baby is merely perceiving a set of shapes, or do you think the baby is also inferring meaning from the face they are looking at? (1/5)

22.04.2025 13:54 — 👍 30 🔁 7 💬 1 📌 1

Academics - where are academic jobs posted for non-UK, non-North American countries? If you were looking for jobs in, say, the Nordic countries or Australia, where would you look? Asking for all the PhDs who are on the market this year. (Pls no April Fools' jokes, their nerves are frayed as it is)

01.04.2025 21:15 — 👍 90 🔁 22 💬 17 📌 2

Technical Associate I, Kanwisher Lab

MIT - Technical Associate I, Kanwisher Lab - Cambridge MA 02139

I’m hiring a full-time lab tech for two years starting May/June. Strong coding skills required, ML a plus. Our research on the human brain uses fMRI, ANNs, intracranial recording, and behavior. A great stepping stone to grad school. Apply here:

careers.peopleclick.com/careerscp/cl...


26.03.2025 15:09 — 👍 64 🔁 48 💬 5 📌 3

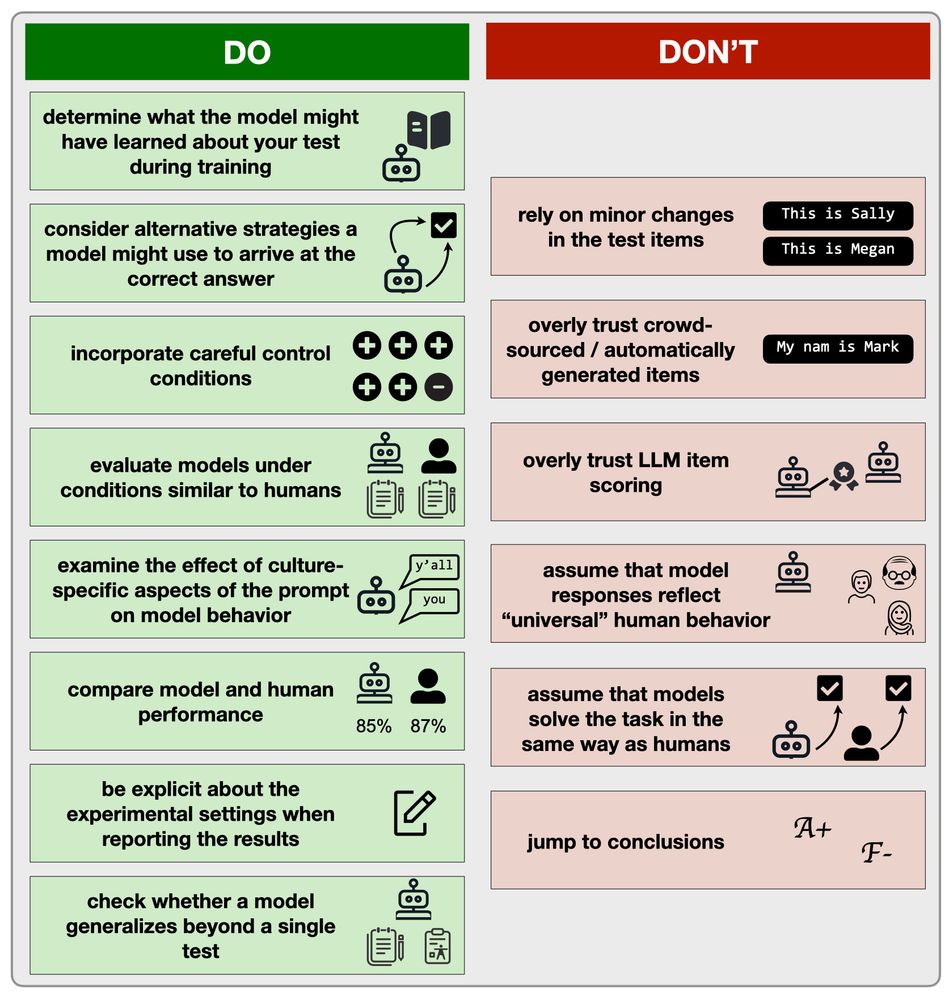

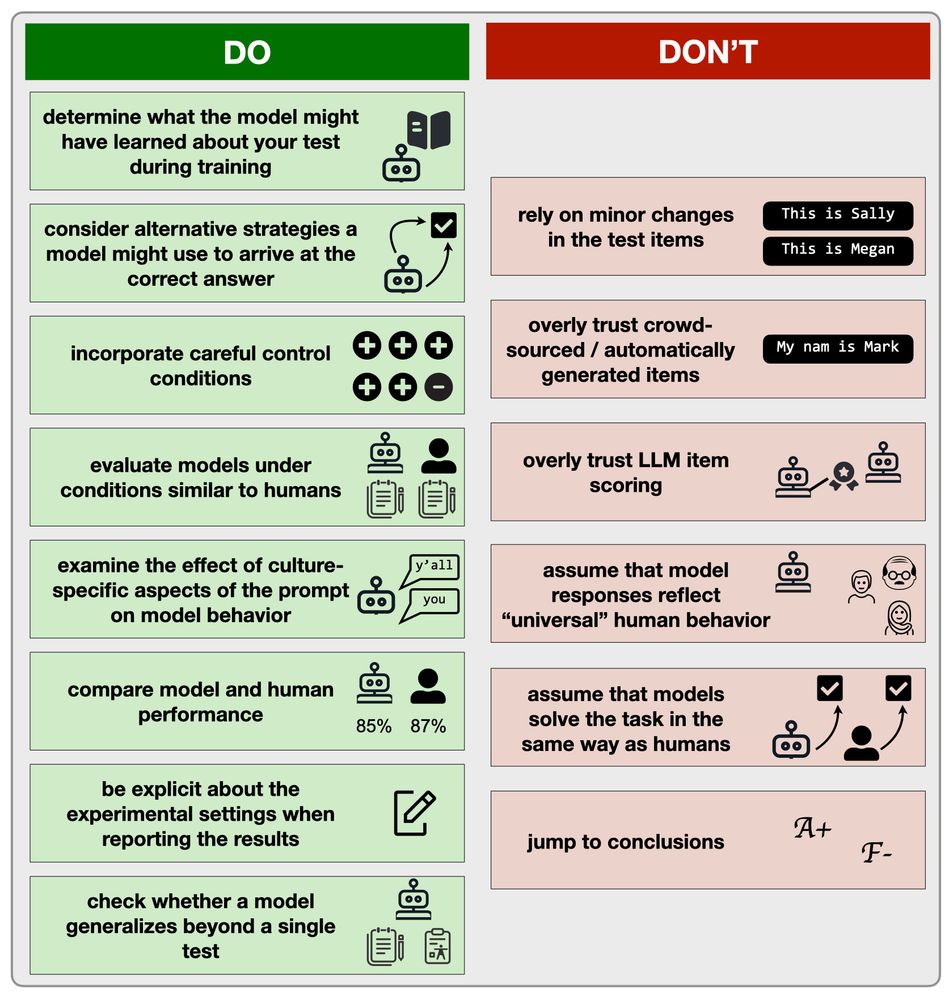

(same content as the table in the paper)

My commentary on the do's and don'ts of cognitive evaluations in LLMs is now out in Nature Human Behaviour:

doi.org/10.1038/s415...

posting here with a figure that didn't make it into the final draft and is now instead a boring table :P

#CogSci #LLMs #AI

16.01.2025 22:26 — 👍 64 🔁 14 💬 0 📌 0

Cognition | Navigation | Behaviour

I study how the brain maps space - how this map is influenced by the environment & an animal's behaviour. Currently starting my own research group: the Neuroethology and Spatial Cognition lab @ University of Glasgow

PhD student in the Robertson Lab @Dartmouth PBS.

She/her

Vision science enthusiast, currently PhD student @ Vaziri Lab, UDel.

Computational neuroscientist.

Research Fellow @imperialcollegeldn.bsky.social and @imperial-ix.bsky.social

Funded by @schmidtsciences.bsky.social

Vision scientist. Professor at the Centre for Cognitive Science, Technical University of Darmstadt, Germany. 🇦🇺🇩🇪. pronoun.is/he

https://www.psychologie.tu-darmstadt.de/perception

Cognitive scientist, associate professor at Aarhus University.

Predictive Processing, Emotion, Play, Recreational Fear, Cognitive Development.

Cognitive Science PhD student @ JHU

MD/PhD neuroscientist/psychiatrist, father of 3, Nak Muay, engineer at heart. mPFC-HPC interactions in addiction/schizophrenia, multi-region ephys and imaging in vivo, gene therapy, novel optical methods for spatial transcriptomics https://sjulsonlab.org

PhD @ Harvard-MIT in CompNeuro | Neural nets, CogSci, Attention | Meta Reality Labs

Founder of Cognitive Resonance, a new venture dedicated to helping people understand human cognition and generative AI. Advocate for humans.

Newsletter: https://buildcognitiveresonance.substack.com/

Computational neuroscientist bringing machine and neural learning closer together [Oxford NeuroAI] @ox.ac.uk @oxforddpag.bsky.social 🇵🇹🇪🇺🇬🇧🌳

neuralml.github.io

www.medsci.ox.ac.uk/neuroai

Neuro, AI, chips @ Imperial & Cambridge.

www.danakarca.com

Language processing - Neuroscience - Machine Learning - Assistant Professor at Stanford University - She/Her - 🏳️🌈

Mathematics Sorcerer (sensory alchemist) at the Arctangent Transpetroglyphics Algra Laboratory (ATAL), I transflarnx mathematics into living rainbows. http://owen.maresh.info https://github.com/graveolensa

Psoeppe-Tlaxtlal, (an undreamt splendour?)

Professor studying origins of concepts @CarnegieMellon; Brain development, cognition, evolution, math & logic; Primate Portal

@TIME 2017 Silence Breakers; she

Science Homecoming

@sciencehomecoming.bsky.social

CS PhD Student @University of Washington, CSxPhilosophy @Dartmouth College

Interested in MARL, Social Reasoning, and Collective Decision making in people, machines, and other organisms

kjha02.github.io

Clinical psychologist and researcher investigating anxiety disorders, acute PTSD, dissociations, OCD, affective neuroscience, neuropsychoanalysis, and the application of nonlinear dynamical systems to psychology

https://montgomerycountypsychologist.com

Computational/theoretical neuroscientist; asst. prof. at CU Anschutz (July 2025): https://www.necolelab.com/members/laureline-logiaco.html

Particular interest in how mammalian brain regions specialize and synergize to generate hierarchical behaviors.

Postdoc @ Harvard (Buckner Lab)

cognitive neuroscience, precision functional mapping

https://jingnandu93.github.io/