Correctly tagging @aydanhuang265.bsky.social !

03.10.2025 17:28 — 👍 2 🔁 0 💬 0 📌 0

The big takeaway: framing behavior prediction as a program synthesis problem is an accurate, scalable, and efficient path to human-compatible AI!

It allows multi-agent systems to rapidly and accurately anticipate others' actions for more effective collaboration.

03.10.2025 02:26 — 👍 1 🔁 0 💬 1 📌 0

ROTE doesn’t sacrifice accuracy for speed!

While initial program generation takes time, the inferred code can be executed rapidly, making it orders of magnitude more efficient than other LLM-based methods for long-horizon predictions.

03.10.2025 02:26 — 👍 0 🔁 0 💬 1 📌 0

What explains this performance gap? ROTE handles complexity better. It excels with intricate tasks like cleaning and interacting with objects (e.g., turning items on/off) in Partnr, while baselines only showed success with simpler navigation and object manipulation.

03.10.2025 02:26 — 👍 0 🔁 0 💬 1 📌 0

We scaled up to the embodied robotics simulator Partnr, a complex, partially observable environment with goal-directed LLM-agents.

ROTE still significantly outperformed all LLM-based and behavior cloning baselines for high-level action prediction in this domain!

03.10.2025 02:25 — 👍 1 🔁 0 💬 1 📌 0

A key strength of code: zero-shot generalization.

Programs inferred from one environment transfer to new settings more effectively than all other baselines. ROTE's learned programs transfer without needing to re-incur the cost of text generation.

03.10.2025 02:25 — 👍 0 🔁 0 💬 1 📌 0

Can scripts model nuanced, real human behavior?

We collected human gameplay data and found ROTE not only outperformed all baselines but also achieved human-level performance when predicting the trajectories of real people!

03.10.2025 02:25 — 👍 0 🔁 0 💬 1 📌 0

Introducing ROTE (Representing Others’ Trajectories as Executables)!

We use LLMs to generate Python programs 💻 that model observed behavior, then use Bayesian inference to select the most likely ones. The result: a dynamic, composable, and analyzable predictive representation!

03.10.2025 02:24 — 👍 2 🔁 0 💬 1 📌 0
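Here is a minimal sketch of the program-selection step described in the ROTE post above. It assumes an epsilon-noisy likelihood and a uniform prior over candidates. The three candidate programs are hypothetical stand-ins for LLM-generated code, and the generation step itself is omitted. `log_likelihood`, `posterior`, and `predict` are illustrative names, not ROTE's actual code.

```python
import math
from typing import Callable, Dict, List, Tuple

Observation = Dict[str, int]
Program = Callable[[Observation], str]
Trajectory = List[Tuple[Observation, str]]

ACTIONS = ["left", "right", "stay"]


# Hypothetical candidate programs, standing in for LLM-generated code.
def go_to_goal(obs: Observation) -> str:
    if obs["agent_x"] < obs["goal_x"]:
        return "right"
    if obs["agent_x"] > obs["goal_x"]:
        return "left"
    return "stay"


def always_right(obs: Observation) -> str:
    return "right"


def always_stay(obs: Observation) -> str:
    return "stay"


def log_likelihood(program: Program, traj: Trajectory, eps: float = 0.05) -> float:
    """Assumed noise model: the agent follows the program with prob. 1 - eps,
    otherwise picks one of the other actions uniformly at random."""
    total = 0.0
    for obs, action in traj:
        p = 1.0 - eps if program(obs) == action else eps / (len(ACTIONS) - 1)
        total += math.log(p)
    return total


def posterior(programs: Dict[str, Program], traj: Trajectory) -> Dict[str, float]:
    """Uniform prior, so the posterior is the normalized likelihood.
    Subtracting the max log-likelihood avoids underflow on long trajectories."""
    logs = {name: log_likelihood(prog, traj) for name, prog in programs.items()}
    top = max(logs.values())
    weights = {name: math.exp(lp - top) for name, lp in logs.items()}
    z = sum(weights.values())
    return {name: wt / z for name, wt in weights.items()}


def predict(programs: Dict[str, Program], post: Dict[str, float], obs: Observation) -> str:
    """Predict the next action with the most probable (MAP) program."""
    best = max(post, key=post.get)
    return programs[best](obs)


programs = {"go_to_goal": go_to_goal, "always_right": always_right, "always_stay": always_stay}
observed = [({"agent_x": 0, "goal_x": 2}, "right"),
            ({"agent_x": 1, "goal_x": 2}, "right"),
            ({"agent_x": 2, "goal_x": 2}, "stay")]
post = posterior(programs, observed)
next_action = predict(programs, post, {"agent_x": 3, "goal_x": 2})
```

After a program is selected, each further prediction is a plain function call. This matches the point that the inferred code runs far faster than querying an LLM at every step.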

Traditional AI is stuck! Predicting behavior is either brittle (Behavior Cloning) or too slow with endless belief space enumeration (Inverse Planning).

How can we avoid mental state dualism while building scalable, robust predictive models?

03.10.2025 02:24 — 👍 1 🔁 0 💬 2 📌 0

Forget modeling every belief and goal! What if we represented people as following simple scripts instead (e.g., "cross the crosswalk")?

Our new paper shows AI that models others’ minds as Python code 💻 can quickly and accurately predict human behavior!

shorturl.at/siUYI

03.10.2025 02:24 — 👍 36 🔁 14 💬 3 📌 3

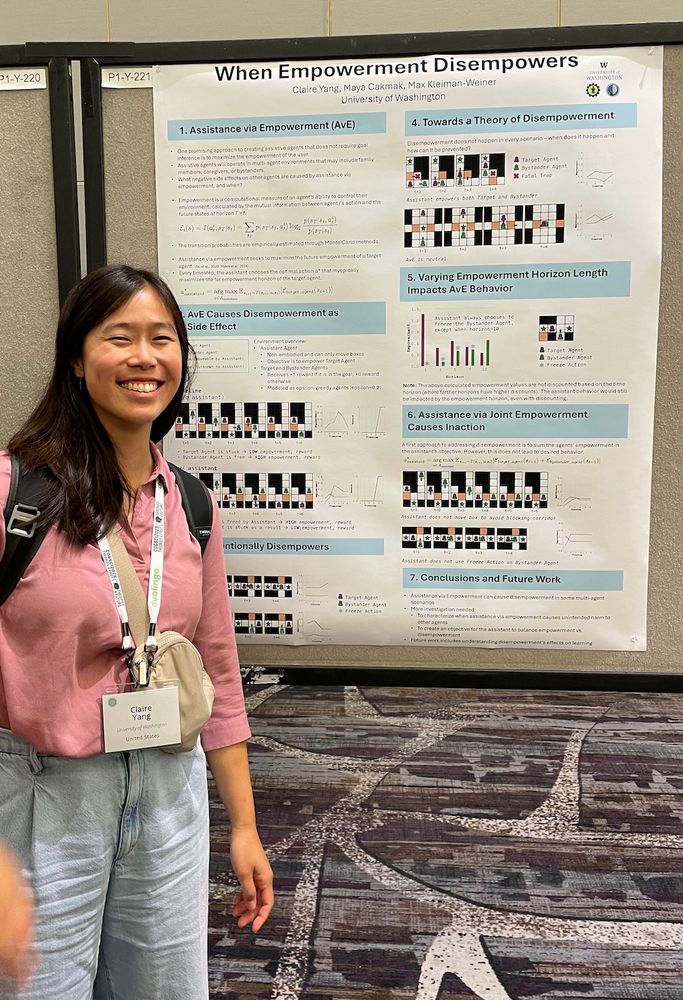

Person standing next to poster titled "When Empowerment Disempowers"

Still catching up on my notes after my first #cogsci2025, but I'm so grateful for all the conversations and new friends and connections! I presented my poster "When Empowerment Disempowers" -- if we didn't get the chance to chat or you would like to chat more, please reach out!

06.08.2025 22:31 — 👍 16 🔁 3 💬 0 📌 1

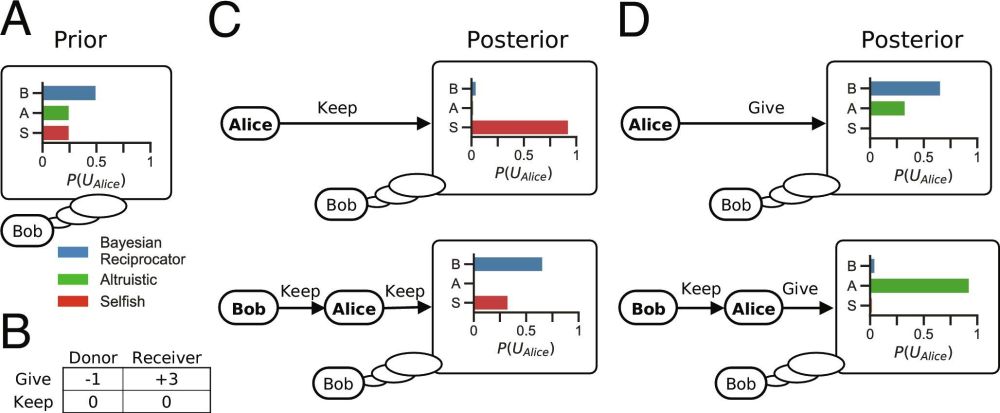

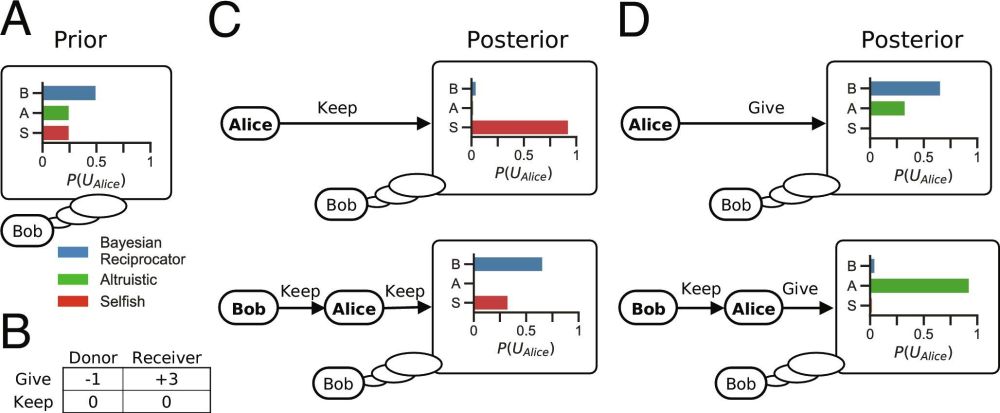

Evolving general cooperation with a Bayesian theory of mind | PNAS

Theories of the evolution of cooperation through reciprocity explain how unrelated self-interested individuals can accomplish more together than th...

Our new paper is out in PNAS: "Evolving general cooperation with a Bayesian theory of mind"!

Humans are the ultimate cooperators. We coordinate on a scale and scope no other species (nor AI) can match. What makes this possible? 🧵

www.pnas.org/doi/10.1073/...

22.07.2025 06:03 — 👍 92 🔁 36 💬 2 📌 2

Really pumped for my Oral presentation on this work today!!! Come check out the RL session from 3:30-4:30pm in West Ballroom B

You can also swing by our poster from 4:30-7pm in West Exhibition Hall B2-B3 # W-713

See you all there!

15.07.2025 14:46 — 👍 4 🔁 1 💬 0 📌 0

I'll be at ICML next week! If anyone wants to chat about single/multi-agent RL, continual learning, cognitive science, or something else, shoot me a message!!!

08.07.2025 13:09 — 👍 2 🔁 0 💬 0 📌 0

Oral @icmlconf.bsky.social !!! Can't wait to share our work and hear the community's thoughts on it, should be a fun talk!

Can't thank my collaborators enough: @cogscikid.bsky.social @liangyanchenggg @simon-du.bsky.social @maxkw.bsky.social @natashajaques.bsky.social

09.06.2025 16:32 — 👍 9 🔁 2 💬 0 📌 0

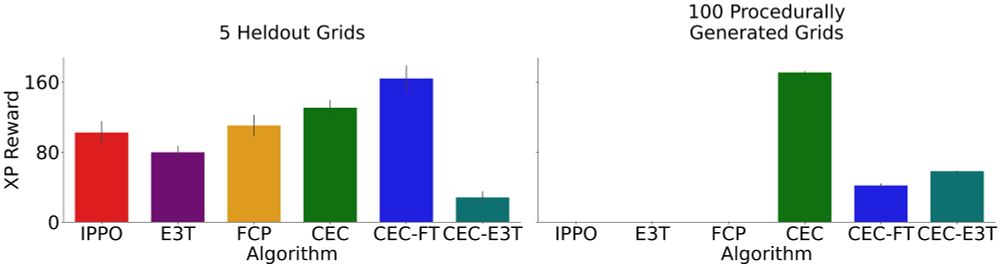

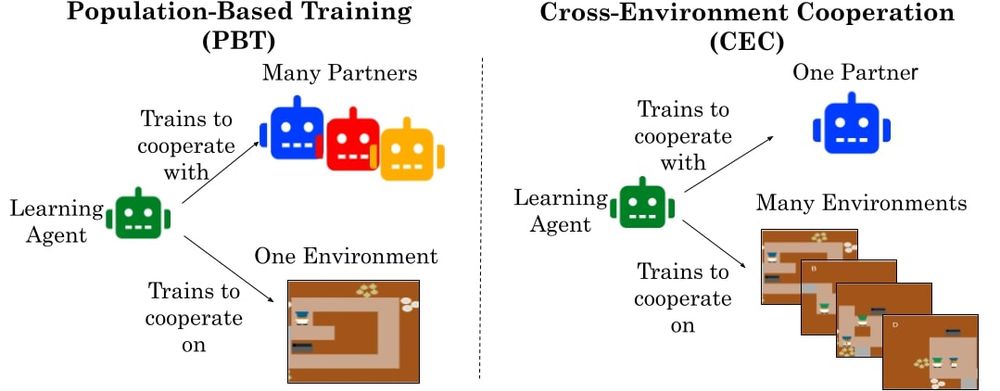

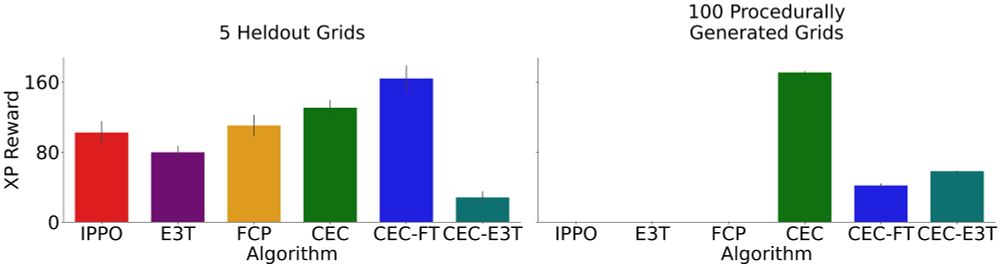

The big takeaway: Environment diversity > Partner diversity

Training across diverse tasks teaches agents how to cooperate, not just whom to cooperate with. This enables zero-shot coordination with novel partners in novel environments, a critical step toward human-compatible AI.

19.04.2025 00:09 — 👍 1 🔁 0 💬 1 📌 0

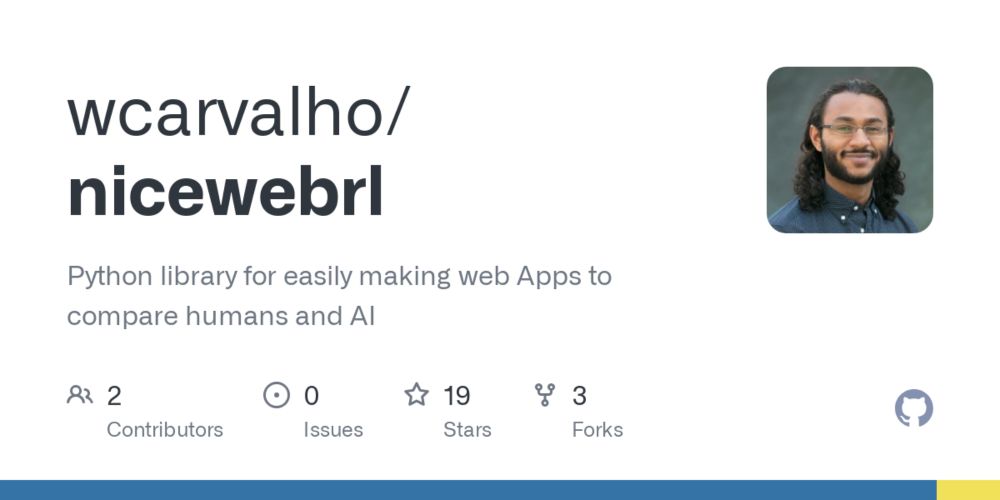

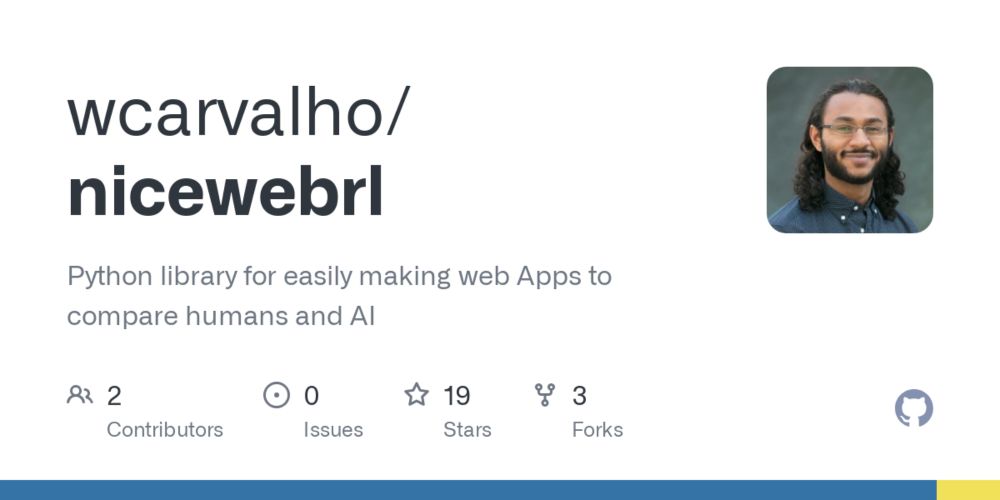

GitHub - wcarvalho/nicewebrl: Python library for easily making web Apps to compare humans and AI

Our work used NiceWebRL, a Python-based package we helped develop for evaluating Human, Human-AI, and Human-Human gameplay on Jax-based RL environments!

This tool makes crowdsourcing data for CS and CogSci studies easier than ever!

Learn more: github.com/wcarvalho/ni...

19.04.2025 00:09 — 👍 3 🔁 0 💬 1 📌 1

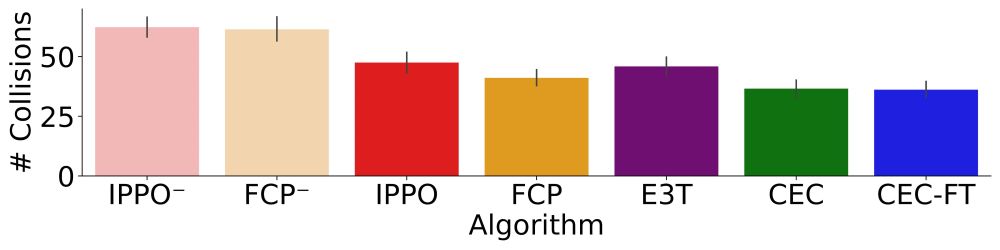

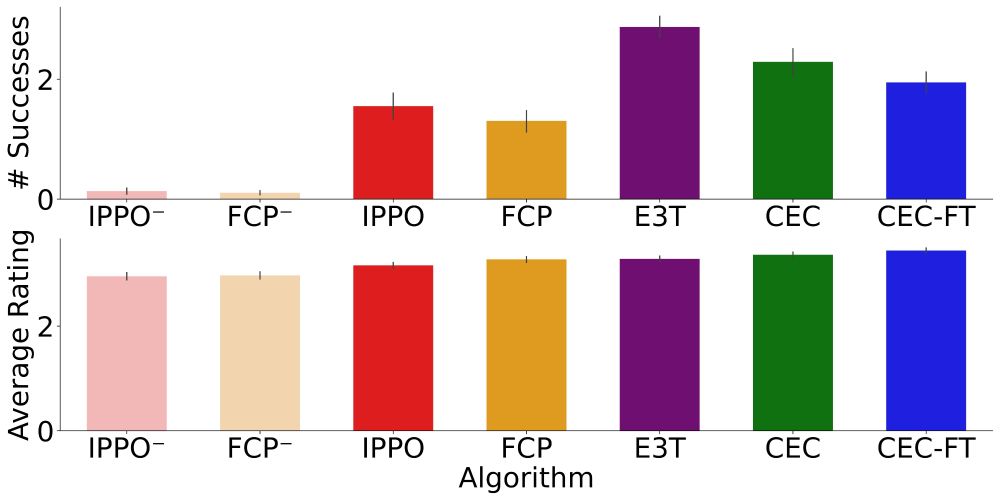

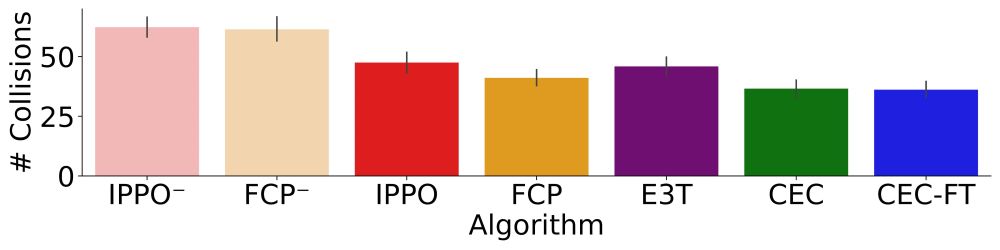

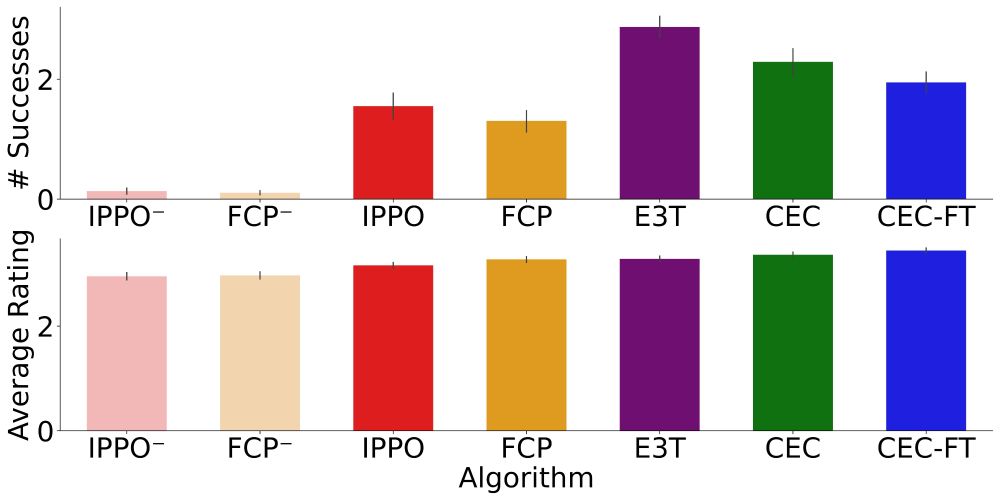

Why do humans prefer CEC agents? They collide less and adapt better to human behavior.

This increased adaptability reflects general norms for cooperation learned across many environments, not just memorized strategies.

19.04.2025 00:09 — 👍 0 🔁 0 💬 1 📌 0

Human studies confirm our findings! CEC agents achieve higher success rates with human partners than population-based methods like FCP, and are rated qualitatively better to collaborate with than the SOTA approach (E3T), despite never having seen the level during training.

19.04.2025 00:08 — 👍 1 🔁 0 💬 1 📌 0

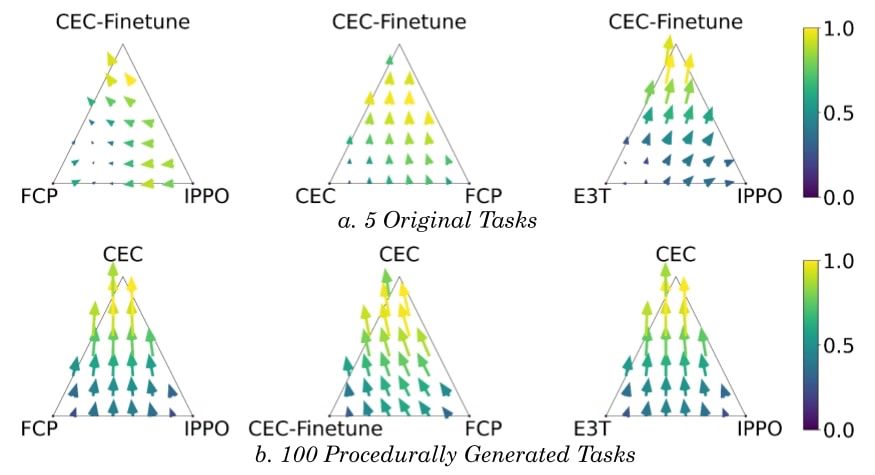

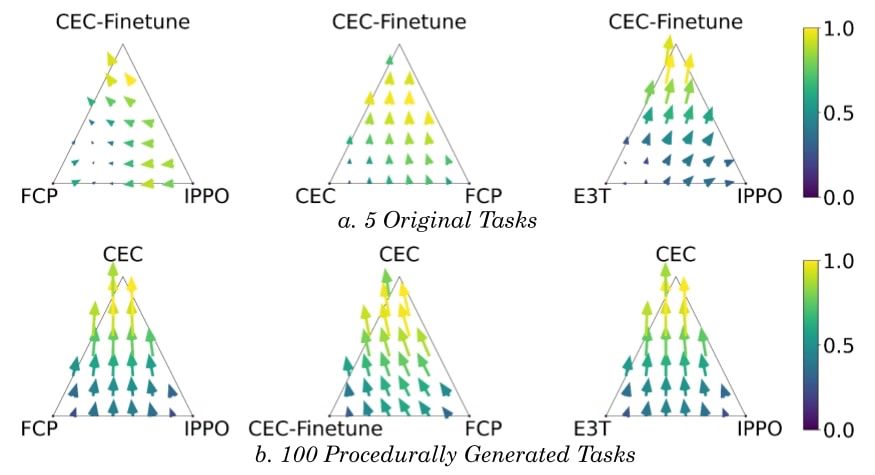

Using empirical game theory analysis, we show CEC agents emerge as the dominant strategy in a population of different agent types during Ad-hoc Teamplay!

When diverse agents must collaborate, the CEC-trained agents are selected for their adaptability and cooperative skills.

19.04.2025 00:08 — 👍 3 🔁 0 💬 1 📌 0
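One common form of empirical game-theoretic analysis runs replicator dynamics over a payoff table estimated from simulated pairings. The sketch below assumes a symmetric table where `payoff[i][j]` is the mean team score when strategy `i` is paired with strategy `j`. The agent labels and numbers in it are made up for illustration and are not results from the paper.

```python
from typing import List


def replicator_dynamics(payoff: List[List[float]], steps: int = 200) -> List[float]:
    """Discrete-time replicator dynamics on a symmetric meta-game.

    payoff[i][j] is the (assumed positive) mean team score when strategy i
    is paired with strategy j. Returns the population mix after `steps` updates.
    """
    n = len(payoff)
    x = [1.0 / n] * n  # start from a uniform population mix
    for _ in range(steps):
        # Fitness of each strategy against the current population.
        fitness = [sum(payoff[i][j] * x[j] for j in range(n)) for i in range(n)]
        mean_fitness = sum(x[i] * fitness[i] for i in range(n))
        # Strategies that beat the population average grow in share.
        x = [x[i] * fitness[i] / mean_fitness for i in range(n)]
    return x


# Hypothetical payoff table: illustrative numbers only, not from the paper.
labels = ["agent_a", "agent_b", "agent_c"]
payoff = [[3.0, 2.5, 2.0],
          [2.5, 1.5, 1.0],
          [2.0, 1.0, 0.5]]
mix = dict(zip(labels, replicator_dynamics(payoff)))
```

In this toy table, `agent_a`'s row beats every other row entry by entry, so its share grows toward 1. With real estimated payoffs, the dynamics can instead settle on a mixed population.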

The result? CEC agents significantly outperform baselines when collaborating zero-shot with novel partners on novel environments.

Even more impressive: CEC agents outperform methods that were specifically trained on the test environment but struggle to adapt to new partners!

19.04.2025 00:08 — 👍 2 🔁 0 💬 1 📌 0

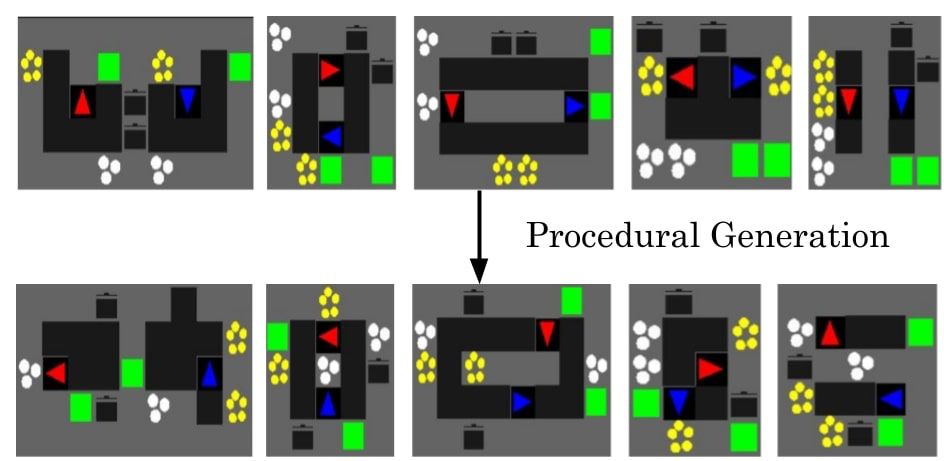

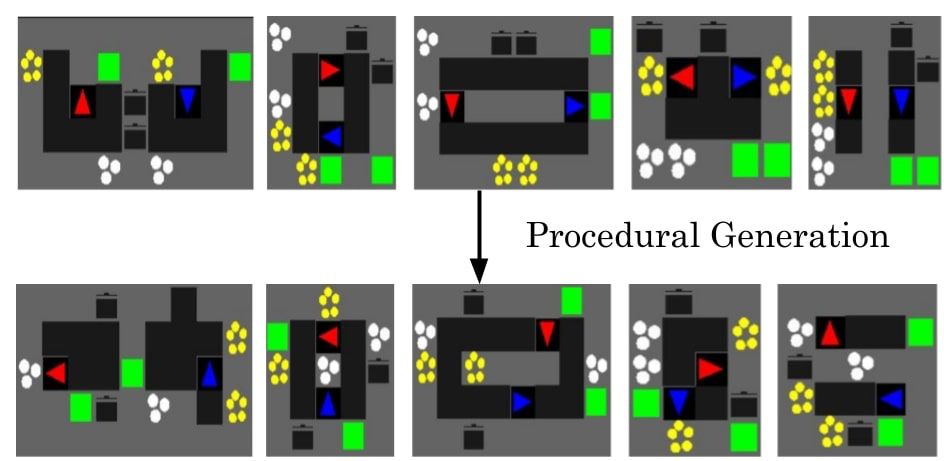

We built a Jax-based procedural generator creating billions of solvable Overcooked challenges.

Unlike prior work studying only 5 layouts, we can now study cooperative skill transfer at unprecedented scale (1.16e17 possible environments)!

Code available at: shorturl.at/KxAjW

19.04.2025 00:07 — 👍 1 🔁 0 💬 1 📌 0
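Here is a rough sketch of generate-and-check layout sampling in plain Python, not JAX. Several details are assumptions for illustration: the tile codes, the 6×7 grid size, and the simplified solvability test (every agent spawn can reach a floor tile next to each station). The generator linked above may define layouts and solvability differently.

```python
import random
from collections import deque
from typing import Iterator, List, Optional, Sequence, Set, Tuple

Cell = Tuple[int, int]

# Hypothetical tile codes: '#' counter/wall, ' ' floor, 'A' agent spawn,
# 'O' onion pile, 'P' pot, 'D' dish pile, 'S' serving spot.
STATIONS = ["O", "P", "D", "S"]
WALKABLE = (" ", "A")


def neighbors(r: int, c: int, h: int, w: int) -> Iterator[Cell]:
    """Yield the in-bounds 4-neighbours of (r, c)."""
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < h and 0 <= nc < w:
            yield nr, nc


def reachable(grid: Sequence[Sequence[str]], start: Cell) -> Set[Cell]:
    """Breadth-first search over walkable tiles from `start`."""
    h, w = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for nr, nc in neighbors(r, c, h, w):
            if (nr, nc) not in seen and grid[nr][nc] in WALKABLE:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen


def is_solvable(grid: Sequence[Sequence[str]]) -> bool:
    """Simplified check: each spawn can stand next to every station type."""
    h, w = len(grid), len(grid[0])
    cells = [(r, c) for r in range(h) for c in range(w)]
    spawns = [(r, c) for r, c in cells if grid[r][c] == "A"]
    if not spawns:
        return False
    for spawn in spawns:
        floor = reachable(grid, spawn)
        for station in STATIONS:
            spots = [(r, c) for r, c in cells if grid[r][c] == station]
            if not any(n in floor for r, c in spots for n in neighbors(r, c, h, w)):
                return False
    return True


def generate_layout(h: int = 6, w: int = 7, wall_prob: float = 0.15,
                    rng: Optional[random.Random] = None,
                    max_tries: int = 1000) -> List[str]:
    """Rejection sampling: draw random layouts until one passes is_solvable."""
    rng = rng or random.Random()
    corners = {(0, 0), (0, w - 1), (h - 1, 0), (h - 1, w - 1)}
    border = [(r, c) for r in range(h) for c in range(w)
              if (r in (0, h - 1) or c in (0, w - 1)) and (r, c) not in corners]
    interior = [(r, c) for r in range(1, h - 1) for c in range(1, w - 1)]
    for _ in range(max_tries):
        grid = [["#"] * w for _ in range(h)]
        for r, c in interior:
            grid[r][c] = "#" if rng.random() < wall_prob else " "
        # Stations go on non-corner border counters, one of each type.
        for station, (r, c) in zip(STATIONS, rng.sample(border, len(STATIONS))):
            grid[r][c] = station
        floor = [(r, c) for r, c in interior if grid[r][c] == " "]
        if len(floor) < 2:
            continue
        for r, c in rng.sample(floor, 2):  # two agent spawns
            grid[r][c] = "A"
        if is_solvable(grid):
            return ["".join(row) for row in grid]
    raise RuntimeError("no solvable layout found")
```

Rejection sampling keeps the generator simple: every returned layout passes the check. The cost is wasted draws when walls are dense.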

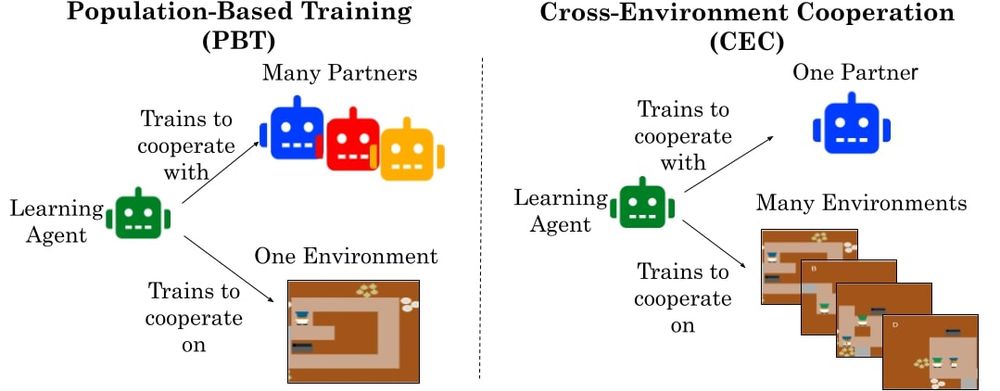

We introduce Cross-Environment Cooperation (CEC), where agents learn through self-play across procedurally generated environments.

CEC teaches robust task representations rather than memorized strategies, enabling zero-shot coordination with humans and other AIs!

19.04.2025 00:06 — 👍 1 🔁 0 💬 1 📌 0

Current AI cooperation algos form brittle strategies by focusing on partner diversity in fixed tasks.

For example, they might learn a specific handshake but fail when greeted with a fist bump.

How can AI learn general norms that work across contexts and partners?

19.04.2025 00:06 — 👍 2 🔁 0 💬 1 📌 0

Our new paper (first one of my PhD!) on cooperative AI reveals a surprising insight: Environment Diversity > Partner Diversity.

Agents trained in self-play across many environments learn cooperative norms that transfer to humans on novel tasks.

shorturl.at/fqsNN

19.04.2025 00:06 — 👍 25 🔁 7 💬 1 📌 5

That's true! I think the significance of not assigning the same meaning to symbols may only matter when we're interacting with the agent, but definitely room to explore what we mean by "understand" here and how anyone can learn to grasp the full affordances of objects with underspecified properties!

07.01.2025 05:03 — 👍 0 🔁 0 💬 0 📌 0

Postdoc at MIT. Cognitive Neuroscience.

Co-Lead, Google DeepMind Neuroscience Lab

Honorary Lecturer, Sainsbury Wellcome Centre, University College London

kevinjmiller.com

NLP research - PhD student at UW

PhD student @ UW, research @ Ai2

Building AI powered tools to augment human creativity and problem solving in San Francisco. Previously @GitHub Copilot, @Google, 🇨🇦

narphorium.com

language, (social) cognition, AI

Computational modeling of human learning: cognitive development, language acquisition, social learning, causal learning... Brown PhD student with @daphnab.bsky.social

pedestrian and straphanger

undergrad jhucompsci.bsky.social

Assoc Prof at UC San Diego, exploring the mind & its origins. Cognitive development, social cognition, music cognition, open science, all of interest. http://madlab.ucsd.edu

Inspired by Cognitive Science and Philosophy.

https://www.juniorokoroafor.com/

Computational Cognitive Science PhD at Johns Hopkins with Leyla Isik

| BS @Stanford|

| 🔗 https://garciakathy.github.io/ |

I study how people solve big problems with small brains. Starting at Dartmouth in 2026—I'm recruiting!

https://fredcallaway.com

Researcher & entrepreneur | Co-founder @cosmik.network | Building https://semble.so/ | collective sensemaking | https://ronentk.me/ | Prev- Open Science Fellow @asterainstitute.bsky.social

Undergrad @ JHU CS | RA @ MIT | Cognitive AI🧠 | Embodied AI🤖

Deep Learning, Bayan Playing

UW NLP (Ark), UIUC

Website: https://andreyrisukhin.github.io/

phd student building computational models of social cognition @ edinburgh | prev imperial, ucl, inria

https://maxtaylordavi.es

Kempner Institute research fellow @Harvard interested in scaling up (deep) reinforcement learning theories of human cognition

prev: deepmind, umich, msr

https://cogscikid.com/

https://ananyahjha93.github.io

Second year PhD at @uwcse.bsky.social with @hanna-nlp.bsky.social and @lukezettlemoyer.bsky.social