Sharpn🔪ss predicts ✅ generalization, except when it doesn't, e.g. ✖️formers

But what's 🔪? Which point is 🔪er?

Find out

*why it's a tricky Q (hint: #symmetry)

*why our answer does let 🔪 predict generalization, even in ✅formers!

@ our #ICML2025 #spotlight E-2001 on Wed 11AM

by MF da Silva and F Dangel

@vectorinstitute.ai

15.07.2025 18:04 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Sageev Oore

Smart Looper DK demo 2025-05-15 T4 montuno - Part 2 teaser

i am so superduper excited to show a little glimpse of the improvising #music system with #AI that we're developing! i can't explain how *actually* fun it is to play with this.

15sec teaser vid: youtu.be/onPetq4gJ18

blog: osageev.github.io/introducing-...

17.06.2025 15:25 — 👍 0 🔁 0 💬 0 📌 0

Yeah! (at least for most of it)

21.05.2025 09:16 — 👍 2 🔁 0 💬 0 📌 0

are you planning to go?

20.05.2025 12:19 — 👍 0 🔁 0 💬 1 📌 0

Is the website info about it incorrect? (Says workshops on 18 & 19)

19.05.2025 18:07 — 👍 1 🔁 0 💬 1 📌 0

[7b/8] We see this even for traditional sharpness measures in the diagonal networks we study, but it is particularly striking in vision transformers, where geodesically sharper minima actually generalize better!

Maybe flatness isn’t universal after all—context matters.

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[7a/8] 🔥 Surprising twist:

Interestingly, flatter is not always better!

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

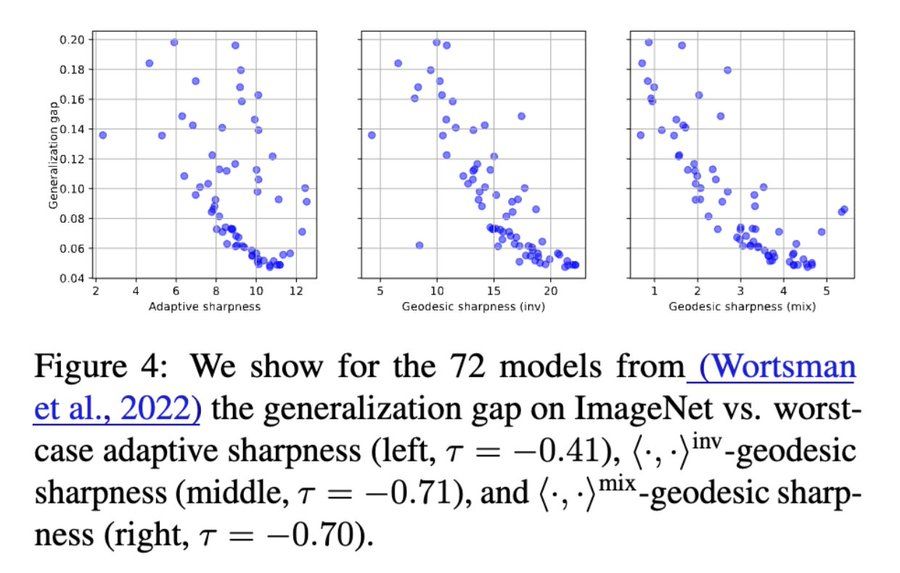

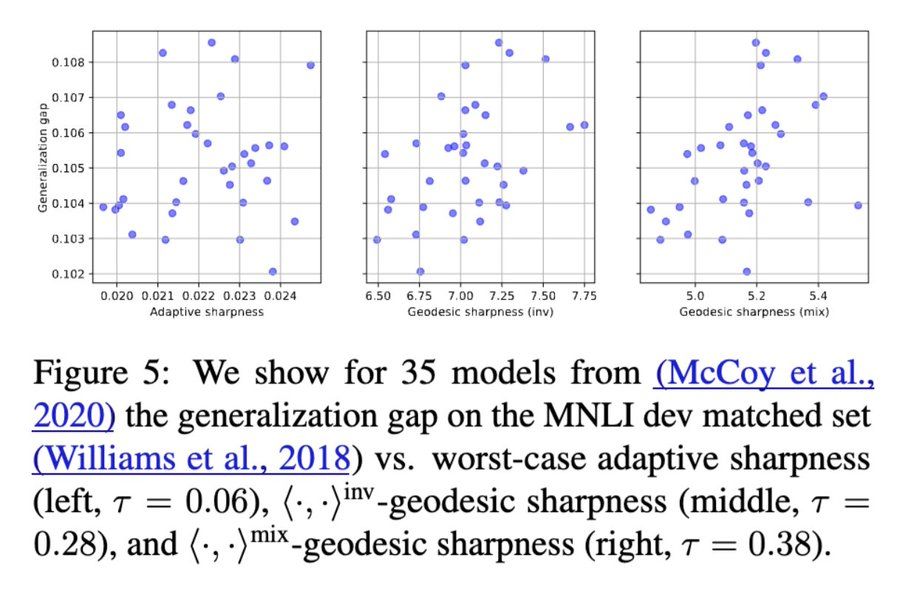

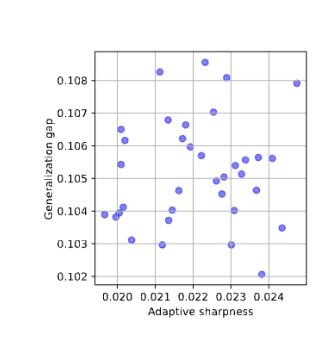

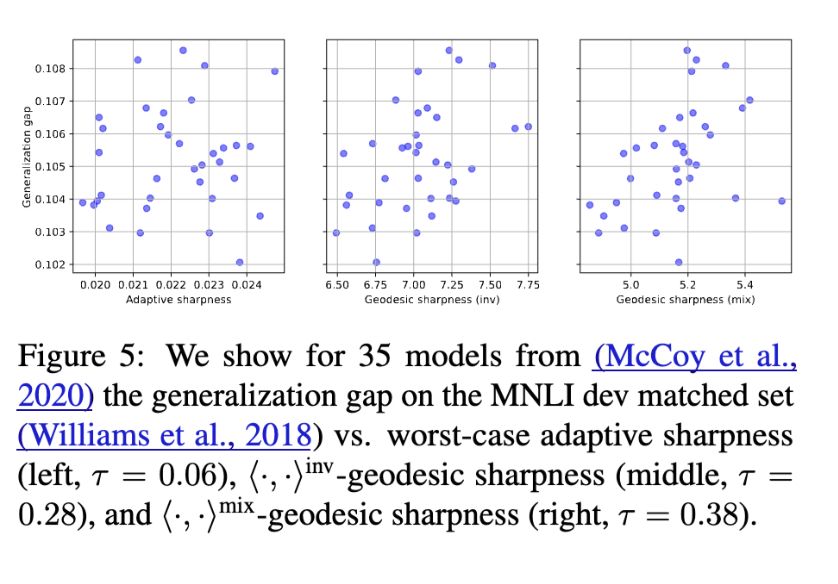

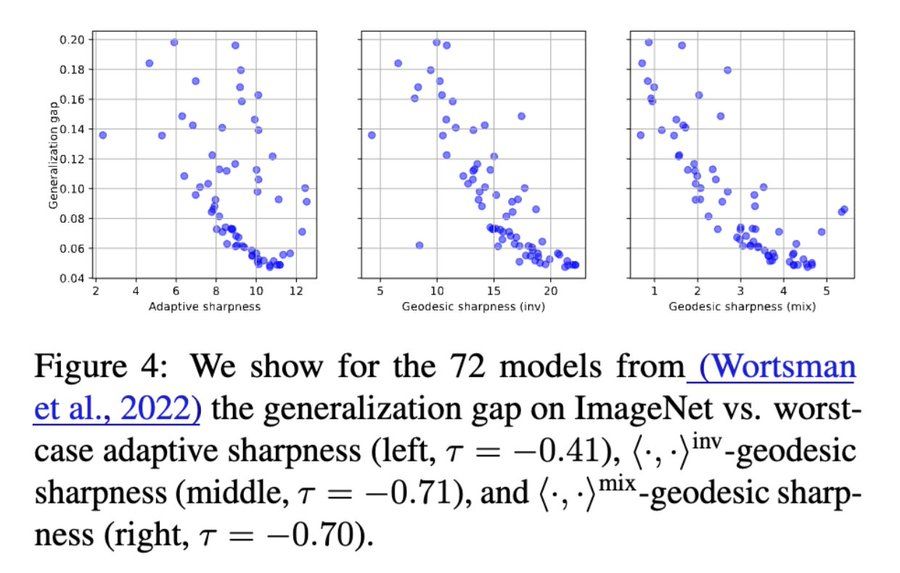

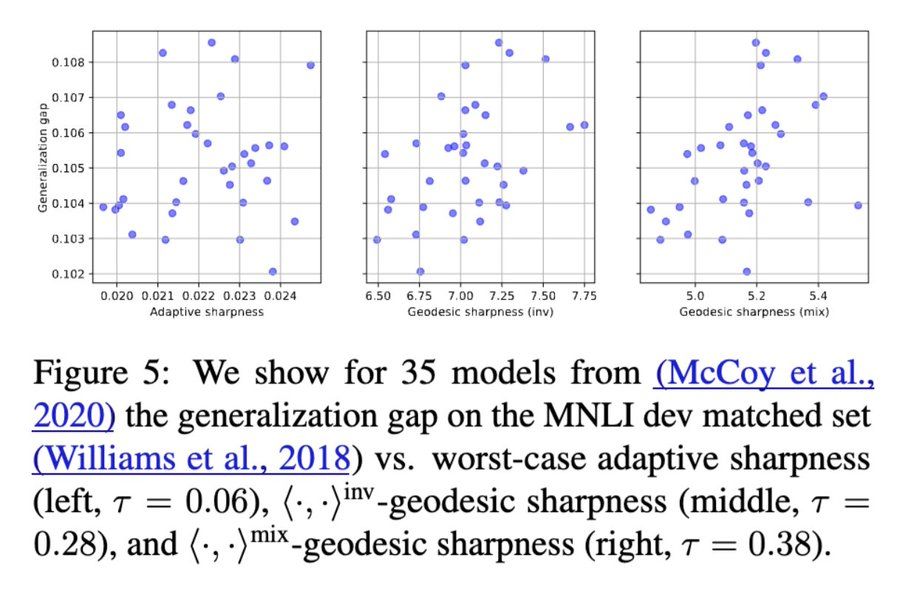

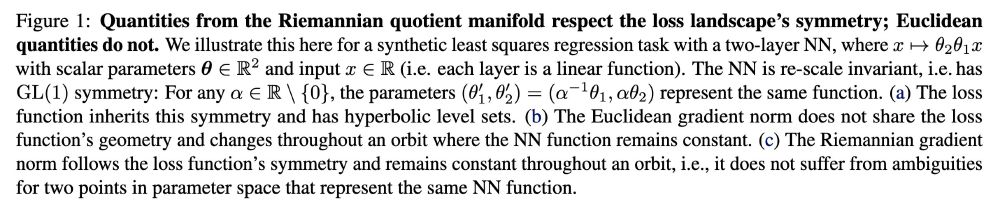

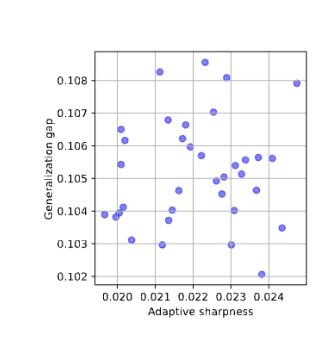

[6b/8] τ correlation with generalization:

🖼️ Vision Transformers (ImageNet): -0.41 (adaptive sharpness) → -0.71 (geodesic sharpness)

💬 BERT fine-tuned on MNLI: 0.06 (adaptive sharpness) → 0.38 (geodesic sharpness)

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[6a/8] 🔦 Why does this matter?

Perturbations are no longer arbitrary—they respect functional equivalences in transformer weights.

Result: Geodesic sharpness shows clearer, stronger correlations (as measured by the tau correlation coefficient) with generalization. 📈
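A minimal sketch of how such a rank correlation can be computed, assuming "tau" refers to Kendall's τ (the tau-a variant here, with no tie correction) and that each trained model contributes one (sharpness, generalization gap) pair. The function name `kendall_tau` and all numbers are hypothetical, made up for illustration; they are not results from the paper.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall's tau-a: (concordant - discordant) / number of pairs.

    Pairs tied in either variable count as neither concordant nor discordant.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        s = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical data: one sharpness value and one generalization gap per model.
sharpness = [0.8, 1.3, 2.1, 2.9, 3.4]
gen_gap = [0.05, 0.04, 0.09, 0.12, 0.11]
print(kendall_tau(sharpness, gen_gap))  # → 0.6
```

τ only looks at whether pairs of models are ranked the same way by both quantities, so it is insensitive to the overall scale of a sharpness measure. A sign flip (as in the ViT numbers) means the ranking is reversed.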

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[5c/8] Geodesic Sharpness

Whereas traditional sharpness measures are evaluated inside an L² ball, we look at a geodesic ball in this symmetry-corrected space, using tools from Riemannian geometry.
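Schematically, the contrast can be written as below. This is a sketch under assumptions, not necessarily the paper's exact definition: ρ is the ball radius, 𝓜 stands for the symmetry quotient equipped with some symmetry-invariant metric g, Exp is its Riemannian exponential map, and the loss is assumed constant on each symmetry orbit so that L([θ]) is well defined.

$$
S_\rho(\theta) = \max_{\|\delta\|_2 \le \rho} \; L(\theta + \delta) - L(\theta)
$$

$$
S^{\mathrm{geo}}_\rho([\theta]) = \max_{\xi \in T_{[\theta]}\mathcal{M},\ \|\xi\|_{g} \le \rho} \; L\big(\mathrm{Exp}_{[\theta]}(\xi)\big) - L([\theta])
$$

The first maximizes over straight-line perturbations of the raw parameters; the second maximizes over points reached by following geodesics of length at most ρ on the quotient, so moving along a symmetry orbit costs nothing and cannot inflate the measure.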

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[5b/8] Geodesic Sharpness

Instead of considering the usual Euclidean metric, we look at metrics invariant both to symmetries of the attention mechanism and to previously studied re-scaling symmetries.
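To make the attention symmetry concrete: in a single head, the logits only depend on the product W_Q W_Kᵀ, so replacing W_Q → W_Q A and W_K → W_K A⁻ᵀ for any invertible A leaves the output unchanged. A minimal NumPy sketch, assuming hypothetical sizes and ignoring value/output projections, masking, and positional encodings:

```python
import numpy as np

rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 4, 8, 3  # hypothetical sizes

X = rng.normal(size=(n_tokens, d_model))
W_q = rng.normal(size=(d_model, d_head))
W_k = rng.normal(size=(d_model, d_head))


def attention_scores(X, W_q, W_k):
    """Row-wise softmax of scaled query-key logits for a single head."""
    logits = (X @ W_q) @ (X @ W_k).T / np.sqrt(W_q.shape[1])
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


# A generic (non-diagonal) invertible matrix acting on the head dimension.
A = rng.normal(size=(d_head, d_head)) + 3 * np.eye(d_head)
W_q2 = W_q @ A
W_k2 = W_k @ np.linalg.inv(A).T  # (X W_q A)(X W_k A^{-T})^T = X W_q W_k^T X^T

same = np.allclose(attention_scores(X, W_q, W_k), attention_scores(X, W_q2, W_k2))
moved = np.linalg.norm(W_q2 - W_q) + np.linalg.norm(W_k2 - W_k)
print(same, moved > 0)
```

The parameters move, possibly a long way in Euclidean distance, yet the function does not. Because A mixes coordinates, this is not an elementwise rescaling.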

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[5a/8] Our solution: ✨Ge🎯desic Sharpness✨

We need to redefine sharpness and measure it not directly in parameter space, but on a symmetry-aware quotient manifold.
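A toy illustration of why measuring on the quotient helps, using the scalar network f(x) = b·a·x, whose parameters (a, b) and (λa, b/λ) give the same function. Instead of the paper's Riemannian quotient construction, this sketch swaps in a simpler trick: pick one canonical representative per orbit (here, the point with |a| = |b|) and measure curvature there. The helper `canonical` and the data point are hypothetical.

```python
import math

x, y = 1.5, 3.0  # hypothetical single training example


def loss(a, b):
    # L(a, b) = 0.5 * (a*b*x - y)^2 for the scalar 2-layer linear net
    return 0.5 * (a * b * x - y) ** 2


def hessian_trace(a, b):
    # diagonal Hessian entries: d2L/da2 = (b*x)^2, d2L/db2 = (a*x)^2
    return (b * x) ** 2 + (a * x) ** 2


def canonical(a, b):
    """Hypothetical helper: the point with |a| == |b| on the orbit
    {(lam*a, b/lam) : lam > 0}. Assumes a and b are nonzero."""
    lam = math.sqrt(abs(b) / abs(a))
    return lam * a, b / lam


p1, p2 = (2.0, 1.0), (0.5, 4.0)  # same product a*b = 2, hence the same function
print(loss(*p1), loss(*p2))  # identical losses
print(hessian_trace(*p1), hessian_trace(*p2))  # differ: depends on the representative
print(hessian_trace(*canonical(*p1)), hessian_trace(*canonical(*p2)))  # agree
```

The raw Hessian trace changes along the orbit even though nothing about the model changes; evaluated at a fixed representative of the orbit, it becomes a property of the function alone.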

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

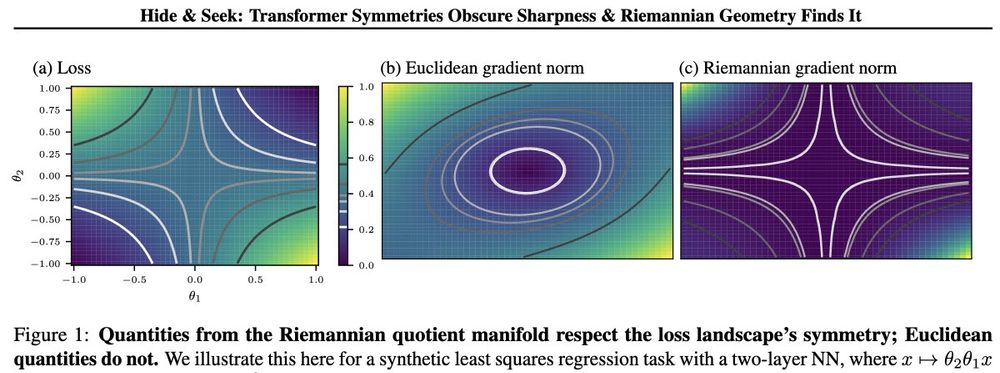

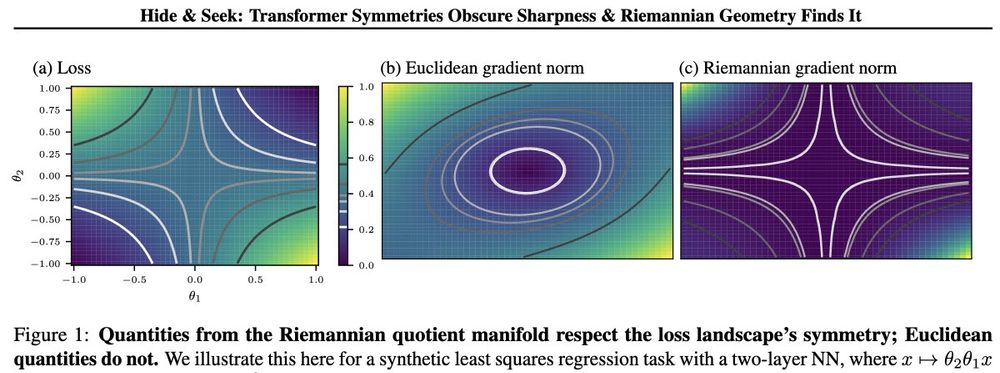

[4/8] The disconnect between the geometry of the loss landscape and the geometry of differential objects (e.g. the loss gradient norm) is already present for extremely simple models, i.e., for linear 2-layer networks with scalar weights.
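A minimal sketch of that disconnect, assuming a squared loss L = ½(b·a·x − y)² on one hypothetical training example: rescaling (a, b) → (λa, b/λ) leaves every prediction unchanged, but the gradient norm at the rescaled point can be made as large as we like.

```python
x, y = 1.0, 2.0  # hypothetical single training example (x, y)


def predict(a, b, x):
    return b * (a * x)  # 2-layer linear net with scalar weights


def grad_norm(a, b):
    r = predict(a, b, x) - y  # residual of L = 0.5 * r**2
    da, db = r * b * x, r * a * x  # dL/da, dL/db
    return (da ** 2 + db ** 2) ** 0.5


a, b = 1.0, 1.0
for lam in (0.1, 1.0, 10.0):
    a2, b2 = lam * a, b / lam  # same function: a2 * b2 == a * b
    print(lam, predict(a2, b2, x), round(grad_norm(a2, b2), 4))
```

All three parameter settings predict the same value, yet the gradient norm at λ = 0.1 or λ = 10 is roughly seven times larger than at λ = 1.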

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[3b/8] This obscures the link between sharpness and generalization. Works such as Kwon et al. (2021) introduce notions of adaptive sharpness that are invariant to re-scaling.
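A sketch of why adaptive sharpness fixes the rescaling issue, on the same scalar network. Assumptions: the elementwise normalization T_w = diag(|w|) with no stabilizing constant, and a first-order (linearized) worst case, for which maximizing ⟨∇L, δ⟩ over ‖δ / |w|‖₂ ≤ ρ gives ρ‖|w| ⊙ ∇L‖₂. Function names and data are hypothetical.

```python
import math

x, y = 1.0, 2.0  # hypothetical single training example


def grad(a, b):
    r = a * b * x - y  # residual of L = 0.5 * r**2
    return r * b * x, r * a * x


def plain_sharpness(a, b, rho=0.05):
    # first-order worst case over the Euclidean ball ||delta||_2 <= rho
    ga, gb = grad(a, b)
    return rho * math.hypot(ga, gb)


def adaptive_sharpness(a, b, rho=0.05):
    # first-order worst case over ||delta / |w| ||_2 <= rho, i.e. rho * || |w| * grad ||
    ga, gb = grad(a, b)
    return rho * math.hypot(abs(a) * ga, abs(b) * gb)


for lam in (0.1, 1.0, 10.0):
    a, b = lam * 1.0, 1.0 / lam  # rescaled copies of the same function
    print(lam, round(plain_sharpness(a, b), 4), round(adaptive_sharpness(a, b), 4))
```

Both normalized gradient entries equal |a·b·r·x|, so the adaptive value is constant along the rescaling orbit while the plain one is not. The diagonal normalization only matches elementwise rescalings, though; a non-diagonal symmetry such as the attention map W_Q → W_Q A mixes coordinates and is not absorbed by it in general.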

Transformers, however, have a richer set of symmetries that aren’t accounted for by traditional adaptive sharpness measures.

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[3a/8] 🔍 But why?

Traditional sharpness metrics (like Hessian-based measures or gradient norms) don't account for symmetries: directions in parameter space that don't change the model's output. They measure sharpness along directions that may be irrelevant, making results noisy or meaningless.

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

[2/8] 📌 Sharpness falls flat for transformers.

The sharpness of minima often predicts how well models generalize: flatter tends to mean better. But Andriushchenko et al. (2023) found that transformers consistently break this intuition. Why?

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 0

🧵 ✨ Hide & Seek: Transformer Symmetries Obscure Sharpness & Riemannian Geometry Finds It ✨

Excited to announce our paper on factoring out param symmetries to better predict generalization in transformers (#ICML25 spotlight! 🎉)

Amazing work by @marvinfsilva.bsky.social and Felix Dangel.

👇

09.05.2025 12:46 — 👍 0 🔁 0 💬 1 📌 1

www.instagram.com/reel/DIVINrS...

12.04.2025 02:54 — 👍 0 🔁 0 💬 0 📌 0

i love when in the middle of a familiar tune suddenly a groove presents itself... with a slow, natural build that takes its time

28.03.2025 01:03 — 👍 2 🔁 0 💬 0 📌 0

Friday night is tango night… I love how mysterious that e minor chord at the end of the video sounds… it always has, and I don’t have a lot of things that sound that way to me. I love that song.

22.03.2025 00:24 — 👍 1 🔁 0 💬 0 📌 0

Practising Rachmaninoff, which turned into a kind of focused improv exercise: a bit of noodling, but with a specific technical intention…

17.03.2025 00:52 — 👍 0 🔁 0 💬 0 📌 0

at one point i was using sibelius a lot, so i preferred it because i got used to it. but i never spent enough time with any of the other options to make a fair comparison. but sibelius could do everything i wanted, as far as i recall.

24.02.2025 06:34 — 👍 1 🔁 0 💬 0 📌 0

a little bit of fiddling around making fun twinkly shit up on a sat night

16.02.2025 01:18 — 👍 1 🔁 0 💬 0 📌 0


We had an ice storm and people can literally skate on the streets here

www.facebook.com/share/r/12GZ...

15.02.2025 17:50 — 👍 1 🔁 0 💬 0 📌 0

today i came across this book that a cousin of mine (3x removed) wrote. i've seen some of his other stuff, but this little elementary introduction to variational problems is new to me🙂. my grandmother knew him in her childhood and he used to show her math stuff for fun.

archive.org/details/lyus...

14.02.2025 04:35 — 👍 1 🔁 0 💬 0 📌 0

Will keep updating this. Might be interesting to compare what I’m posting in about a month or so from now to what I’m posting these days. Exciting and fun for me to do this #human #learning

09.02.2025 19:26 — 👍 0 🔁 0 💬 0 📌 0

Next day. Another section. Played through this ~50x, in different rhythms, hands separate and together, different articulations. All at 68 beats/min. Tmrw: same at 72 bpm. Can feel myself finally learning parts of this I never properly learned before. Would already be able to play much faster if I tried.

09.02.2025 19:21 — 👍 0 🔁 0 💬 0 📌 1

Join us here: https://x.com/novasc0tia


"Nova Scotia - What's New?" is not a media outlet. No journalists or original content. We share open-source info, don’t verify 3rd-party materials. Images auto-added by platform.

Official account for the Halifax Regional Municipality. Not monitored 24/7. Need help? Call 311 or email contactus@311.halifax.ca. Call 911 for emergencies.

representing 1000+ professors, instructors, librarians and counsellors at Dalhousie University

Interpretable Deep Networks. http://baulab.info/ @davidbau

Editorial Cartoonist for The Chronicle Herald sharing his interpretation of local and world events from Nova Scotia, Canada.

PhD student in Machine Learning @Warsaw University of Technology and @IDEAS NCBR

Math Assoc. Prof. at Aix-Marseille (France)

Currently on Sabbatical at CRM-CNRS, Université de Montréal

https://sites.google.com/view/sebastien-darses/welcome

Teaching Project (non-profit): https://highcolle.com/

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Human/AI interaction. ML interpretability. Visualization as design, science, art. Professor at Harvard, and part-time at Google DeepMind.

AI @ OpenAI, Tesla, Stanford

Cofounder & CTO @ Abridge, Raj Reddy Associate Prof of ML @ CMU, occasional writer, relapsing 🎷, creator of d2l.ai & approximatelycorrect.com

PhD student at Vector Institute / University of Toronto. Building tools to study neural nets and find out what they know. He/him.

www.danieldjohnson.com

DeepMind Professor of AI @Oxford

Scientific Director @Aithyra

Chief Scientist @VantAI

ML Lead @ProjectCETI

geometric deep learning, graph neural networks, generative models, molecular design, proteins, bio AI, 🐎 🎶

Senior Lecturer #USydCompSci at the University of Sydney. Postdocs IBM Research and Stanford; PhD at Columbia. Converts ☕ into puns: sometimes theorems. He/him.

Associate Professor, Department of Psychology, Harvard University. Computation, cognition, development.

Integrative research. Human-centred solutions. We're a U of T institute working to ensure that powerful technologies make the world better—for everyone.