🎉 Delighted to start my postdoc at @vuamsterdam.bsky.social, working with Dim Coumou and Eliot Walt on AI for climate & foundation models for weather forecasting. Climate change is one of the most urgent challenges of our time, and I am excited to use my expertise in AI to tackle it. 🌎🌍🌏

07.02.2025 10:31 — 👍 7 🔁 0 💬 0 📌 0

Exciting application of neural graphs for weight nowcasting! With new and improved neural graphs for Transformers!

x.com/BorisAKnyazev/…

16.09.2024 09:35 — 👍 1 🔁 0 💬 0 📌 0

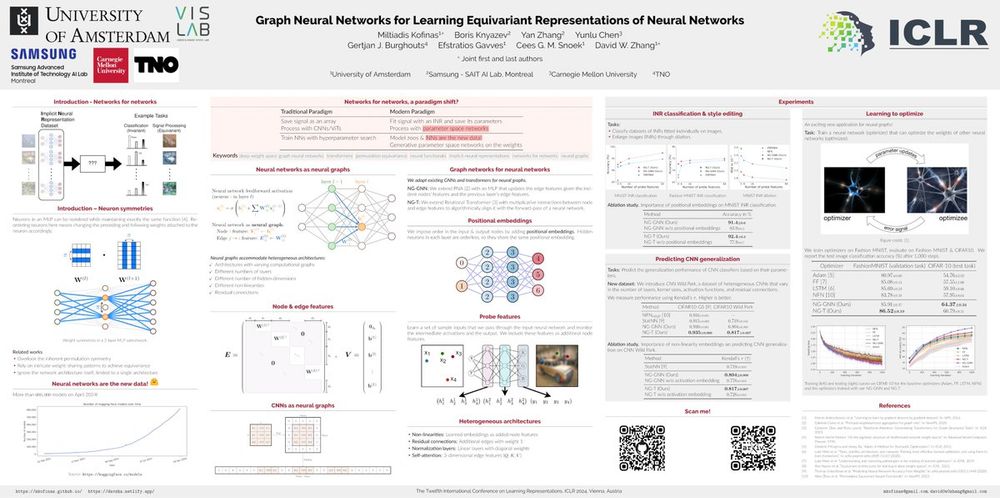

🧐How can we design neural networks that process other neural networks?

The answer is simple: represent them as graphs, a.k.a. neural graphs!

Join our oral presentation and our poster at #ICLR2024 for more details.

Oral session 4B @ Halle A7 and poster #77 session 4 @ Halle B.

06.05.2024 22:46 — 👍 1 🔁 0 💬 0 📌 0

😃 Shoutout to my wonderful collaborators @BorisAKnyazev, @Cyanogenoid, @yunluchen111, @gjburghouts, @egavves, @cgmsnoek, and @davwzha!

[9/9]

21.03.2024 18:39 — 👍 0 🔁 0 💬 0 📌 0

📊We empirically validate our proposed method across a spectrum of tasks, including classification and editing of implicit neural representations, predicting generalization performance, and learning to optimize, while consistently outperforming state-of-the-art approaches.

[8/9]

21.03.2024 18:39 — 👍 0 🔁 0 💬 1 📌 0

📏We adapt existing graph neural networks and transformers to take neural graphs as input, and incorporate inductive biases from neural graphs.

In the context of #geometricdeeplearning, neural graphs constitute a new benchmark for graph neural networks.

[7/9]

21.03.2024 18:38 — 👍 0 🔁 0 💬 1 📌 0

Explicitly integrating the graph structure allows us to process heterogeneous architectures, accommodating different numbers of layers or hidden dimensions, different non-linearities, and different network connectivities such as residual connections.

[6/9]

21.03.2024 18:38 — 👍 0 🔁 0 💬 1 📌 0

We take an alternative approach: we introduce neural graphs, representations of neural networks as computational graphs of parameters.

This allows us to harness powerful graph neural networks and transformers that preserve permutation symmetry.

[5/9]

21.03.2024 18:37 — 👍 0 🔁 0 💬 1 📌 0
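
A minimal sketch of the neural graph idea from the post above, assuming a plain MLP: each neuron becomes a node that carries its bias as a feature, and each weight becomes a directed edge between the two neurons it connects. The function name `mlp_to_neural_graph` and the list-based layout are hypothetical choices for illustration, not the API of the released code.

```python
def mlp_to_neural_graph(weights, biases):
    """Turn MLP parameters into (node_features, edges).

    weights[l] is a list of rows with shape (d_{l+1}, d_l);
    biases[l] has length d_{l+1}. Illustrative layout only.
    """
    layer_sizes = [len(weights[0][0])] + [len(W) for W in weights]

    # Index of the first node of every layer in the flat node list.
    offsets = [0]
    for size in layer_sizes:
        offsets.append(offsets[-1] + size)

    # Node features: the bias of each neuron (input nodes have none, so 0.0).
    node_features = [0.0] * layer_sizes[0]
    for b in biases:
        node_features.extend(b)

    # Edge features: one directed edge (source, target, weight) per weight.
    edges = []
    for l, W in enumerate(weights):
        for i, row in enumerate(W):
            for j, w in enumerate(row):
                edges.append((offsets[l] + j, offsets[l + 1] + i, w))
    return node_features, edges


# A 2-3-1 MLP: 6 nodes, 2*3 + 3*1 = 9 edges.
weights = [[[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]], [[0.7, 0.8, 0.9]]]
biases = [[1.0, 2.0, 3.0], [4.0]]
node_features, edges = mlp_to_neural_graph(weights, biases)
```

Because the graph size simply follows the layer sizes, the same function handles MLPs of any depth or width, which is what makes heterogeneous architectures possible in this representation.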

Recent works propose architectures that respect this symmetry, but rely on intricate weight-sharing patterns.

Further, they ignore the impact of the network architecture itself, and cannot process neural network parameters from diverse architectures.

[4/9]

21.03.2024 18:37 — 👍 0 🔁 0 💬 1 📌 0

🤔Imagine a simple MLP. How can we process its parameters efficiently? Naively flattening and concatenating weights overlooks an essential structure in the parameters: permutation symmetry.

💡Neurons in a layer can be freely reordered while representing the same function.

[3/9]

21.03.2024 18:36 — 👍 0 🔁 0 💬 1 📌 0
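
The permutation symmetry described in the post above can be checked numerically. The sketch below assumes a two-layer MLP with tanh hidden units (the helper names `mlp` and `permute_hidden` are made up for this example): reordering the hidden neurons changes the flattened weight vector but not the function the network computes.

```python
import math
import random


def mlp(x, W1, b1, W2, b2):
    """Two-layer MLP with tanh hidden units; weight matrices are lists of rows."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    return [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(W2, b2)]


def permute_hidden(W1, b1, W2, perm):
    """Reorder hidden neurons: rows of W1, entries of b1, columns of W2."""
    W1_p = [W1[j] for j in perm]
    b1_p = [b1[j] for j in perm]
    W2_p = [[row[j] for j in perm] for row in W2]
    return W1_p, b1_p, W2_p


rng = random.Random(0)
d_in, d_hidden, d_out = 3, 4, 2
W1 = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_hidden)]
b1 = [rng.gauss(0, 1) for _ in range(d_hidden)]
W2 = [[rng.gauss(0, 1) for _ in range(d_hidden)] for _ in range(d_out)]
b2 = [rng.gauss(0, 1) for _ in range(d_out)]
x = [rng.gauss(0, 1) for _ in range(d_in)]

perm = [2, 0, 3, 1]
W1_p, b1_p, W2_p = permute_hidden(W1, b1, W2, perm)

y = mlp(x, W1, b1, W2, b2)        # original network
y_p = mlp(x, W1_p, b1_p, W2_p, b2)  # same function, neurons reordered

# Naive flattening sees two different parameter vectors.
flat = [w for row in W1 for w in row]
flat_p = [w for row in W1_p for w in row]
```

Here `y` and `y_p` agree up to floating-point rounding, while `flat` and `flat_p` differ, which is why a model that consumes flattened weights has to learn this equivalence from data.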

How can we design neural networks that take neural network parameters as input?

This fundamental question arises in applications as diverse as generating neural network weights, processing implicit neural representations, and predicting generalization performance.

[2/9]

21.03.2024 18:35 — 👍 0 🔁 0 💬 1 📌 0

🔍How can we design neural networks that take neural network parameters as input?

🧪Our #ICLR2024 oral on "Graph Neural Networks for Learning Equivariant Representations of Neural Networks" answers this question!

📜: arxiv.org/abs/2403.12143
💻: github.com/mkofinas/neura…
🧵 [1/9]

21.03.2024 18:35 — 👍 0 🔁 0 💬 1 📌 0

Cheers to my wonderful collaborators @BorisAKnyazev, @Cyanogenoid, @yunluchen111, @gjburghouts, @egavves, @cgmsnoek, and @davwzha!

16.01.2024 23:54 — 👍 0 🔁 0 💬 0 📌 0

🎉 Super stoked that our work on "Graph Neural Networks for Learning Equivariant Representations of Neural Networks" was accepted as an oral in #ICLR2024!

Paper details and source code will follow soon.

16.01.2024 23:53 — 👍 0 🔁 0 💬 1 📌 0

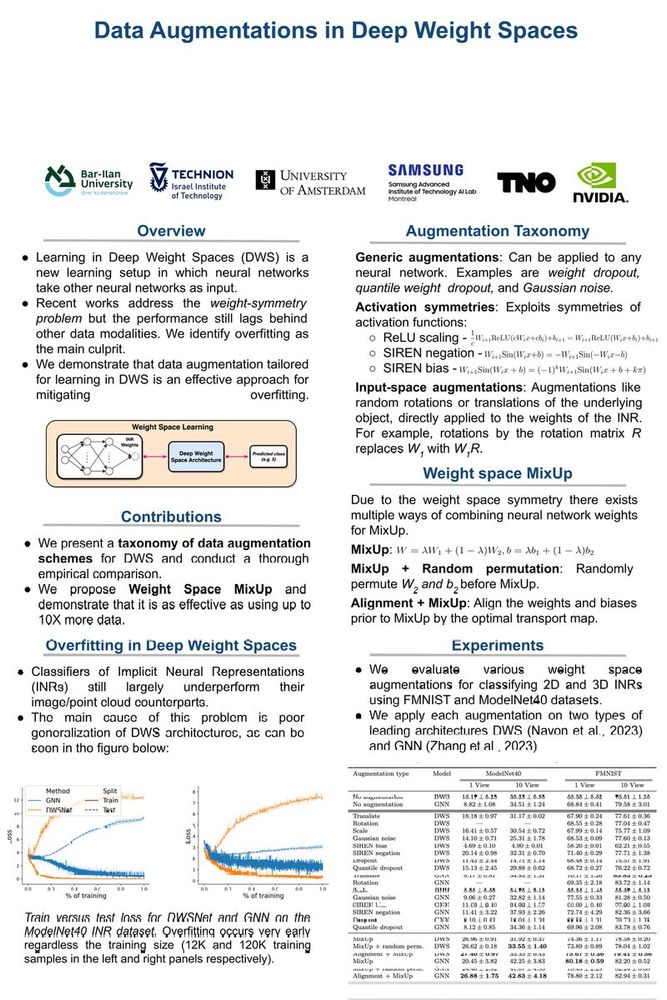

Join our poster on "Data Augmentations in Deep Weight Spaces" at the @neur_reps workshop, #NeurIPS2023, Ballroom A+B at 4pm!

16.12.2023 21:32 — 👍 0 🔁 0 💬 0 📌 0

Shoutout to my collaborators @erikjbekkers, @nsn86, and @egavves! 😊 [8/8]

13.12.2023 08:34 — 👍 0 🔁 0 💬 0 📌 0

We term our method Aether, inspired by the postulated medium that permeates all of space and allows for the propagation of light. [7/8]

13.12.2023 08:28 — 👍 0 🔁 0 💬 1 📌 0

Our experiments show that we can accurately discover the underlying fields in charged-particle settings, real-world traffic scenes, and gravitational n-body problems, and effectively use them to learn the system and forecast future trajectories. [6/8]

13.12.2023 08:28 — 👍 0 🔁 0 💬 1 📌 0

We propose to disentangle equivariant object interactions from external global field effects. We model interactions with equivariant graph networks, and combine them with neural fields in a novel graph network that integrates field forces. [5/8]

13.12.2023 08:27 — 👍 0 🔁 0 💬 1 📌 0

We focus on discovering these fields, and infer them from the observed dynamics alone, without directly observing them. We theorize the presence of latent force fields, and propose neural fields to learn them. [4/8]

13.12.2023 08:26 — 👍 0 🔁 0 💬 1 📌 0

Equivariant networks are inapplicable in the presence of global fields, as they fail to capture global information.

Meanwhile, the observations constitute the net effect of object interactions and field effects, i.e. object interactions are entangled with global fields. [3/8]

13.12.2023 08:25 — 👍 0 🔁 0 💬 1 📌 0

Systems of interacting objects often evolve under the influence of global field effects that govern their dynamics, yet previous works have abstracted away from such effects, and only focused on the in vitro case of systems evolving in a vacuum. [2/8]

13.12.2023 08:02 — 👍 0 🔁 0 💬 1 📌 0

Today at #NeurIPS2023 I will be presenting our work on "Latent Field Discovery in Interacting Dynamical Systems with Neural Fields".

Join us at poster session 3 on Wednesday morning, poster #619.

Paper: arxiv.org/abs/2310.20679
Source code: github.com/mkofinas/aether
[1/8] 🧵

13.12.2023 08:01 — 👍 0 🔁 0 💬 1 📌 0

Thrilled to be in New Orleans for #NeurIPS2023! 🎉

Ping me if you want to grab a coffee and chat about graph networks, geometric deep learning, and neural fields.

13.12.2023 07:50 — 👍 0 🔁 0 💬 0 📌 0

Highly recommended PhD position with @erikjbekkers!

x.com/erikjbekkers/s…

17.10.2023 08:23 — 👍 0 🔁 0 💬 0 📌 0

A little late for the party, but I am truly excited that our work on "Latent Field Discovery in Interacting Dynamical Systems with Neural Fields" was accepted at @NeurIPSConf! 😊

Wonderful collaboration with @erikjbekkers, @nsn86, and @egavves.

Details & source code coming soon!

29.09.2023 15:49 — 👍 0 🔁 0 💬 0 📌 0

Joint work with @davwzha, @Cyanogenoid, @yunluchen111, @gjburghouts, and @cgmsnoek.

28.07.2023 23:56 — 👍 0 🔁 0 💬 0 📌 0

How can we effectively use neural networks to process the parameters of other neural nets?

We propose representing neural networks as computation graphs, enabling the use of standard graph neural networks to preserve permutation symmetries.

Join our poster @TAGinDS in #ICML2023!

28.07.2023 20:59 — 👍 0 🔁 0 💬 1 📌 0

Today at #ICML2023 I will be co-presenting "Graph Switching Dynamical Systems". Join us in poster session 2, poster 636.

Joint work with Yongtuo Liu, @saramagliacane and @egavves.

Arxiv: arxiv.org/abs/2306.00370
Github: github.com/yongtuoliu/Gra…

25.07.2023 22:29 — 👍 0 🔁 0 💬 0 📌 0