Looks like the Gemini embedding is not ready yet.

models/text-embedding-004 doesn't have 429.

Thaddée Tyl

@espadrine.bsky.social

Self-replicating organisms. shields.io, Captain Train, Qonto. They.

Am I using the Gemini APIs wrong? I keep getting 429s. The key was fresh from aistudio.google.com.

gemini-embedding-exp-03-07 is the only embedding model on the market that I can’t benchmark because of it.

The quota in the Console says I'm at 0.33% usage…

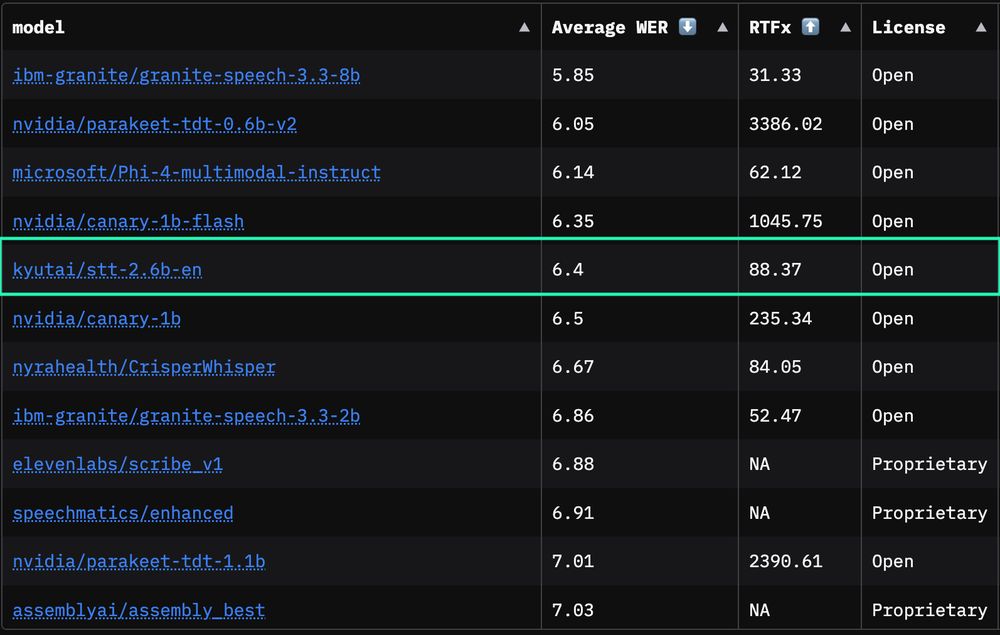
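The quota page doesn't explain the 429s, and retrying won't help if a quota is genuinely exhausted, but exponential backoff is the usual client-side way to absorb transient HTTP 429 (Too Many Requests) responses. A minimal sketch, assuming the caller turns a 429 into an exception; `RateLimited`, `with_backoff` and `flaky_embed` are hypothetical names, not part of any Gemini SDK.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical exception the caller raises when the API answers HTTP 429."""


def with_backoff(
    call: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on RateLimited, doubling the delay ceiling each attempt."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # give up after the last allowed retry
            # Exponential ceiling capped at max_delay, with "full jitter":
            # sleep a random time between 0 and the ceiling.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


# Usage: a stand-in for an embedding request that is rate-limited twice.
attempts = {"n": 0}


def flaky_embed() -> list[float]:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimited()
    return [0.1, 0.2, 0.3]


print(with_backoff(flaky_embed, sleep=lambda s: None))  # [0.1, 0.2, 0.3]
```

Injecting `sleep` keeps the helper testable without real waiting; jitter spreads out retries so many clients don't hit the limit in lockstep.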

Our latest open-source speech-to-text model just claimed 1st place among streaming models and 5th place overall on the OpenASR leaderboard 🥇🎙️

While all other models need the whole audio, ours delivers top-tier accuracy on streaming content.

Open, fast, and ready for production!

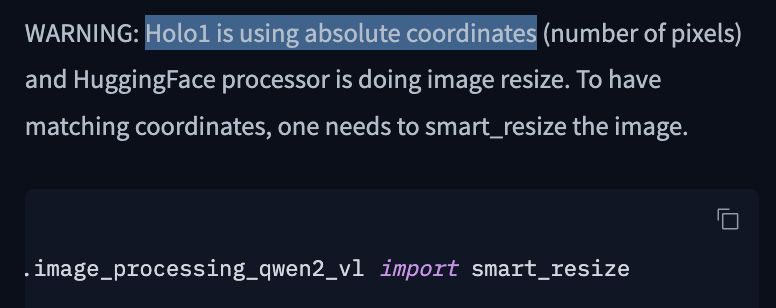

This is from the Hugging Face README of H’s Holo1: huggingface.co/Hcompany/Hol...

10.06.2025 12:54 — 👍 0 🔁 0 💬 0 📌 0

WARNING: Holo1 is using absolute coordinates (number of pixels) and the Hugging Face processor resizes the image. To have matching coordinates, one needs to smart_resize the image:

`from transformers.models.qwen2_vl.image_processing_qwen2_vl import smart_resize`
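The other direction also works: instead of resizing the screenshot yourself, map the model's predicted pixels back to the original resolution. A minimal sketch, assuming the predictions refer to the resized image the processor actually fed the model (for instance, the dimensions smart_resize computes) and that the resize is a plain per-axis scale with no padding or cropping; `to_original_coords` and the sizes below are hypothetical.

```python
def to_original_coords(
    x: float,
    y: float,
    resized_size: tuple[int, int],
    original_size: tuple[int, int],
) -> tuple[float, float]:
    """Map a point predicted on the resized image back to the original screenshot.

    Both sizes are (width, height). Assumes a pure per-axis scale, so each
    axis is rescaled independently by original / resized.
    """
    rw, rh = resized_size
    ow, oh = original_size
    return x * ow / rw, y * oh / rh


# Hypothetical sizes: a 1920x1080 screenshot that the processor shrank to 960x540.
print(to_original_coords(480, 270, resized_size=(960, 540), original_size=(1920, 1080)))
# (960.0, 540.0)
```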

Isn’t there a better way to handle screens than asking a *language model* to guess the number of pixels to the left and top of a UI widget?

10.06.2025 12:50 — 👍 1 🔁 0 💬 1 📌 0

Talk to unmute.sh 🔊, the most modular voice AI around. Empower any text LLM with voice, instantly, by wrapping it with our new speech-to-text and text-to-speech. Any personality, any voice. Interruptible, smart turn-taking. We’ll open-source everything within the next few weeks.

23.05.2025 10:14 — 👍 8 🔁 1 💬 2 📌 2

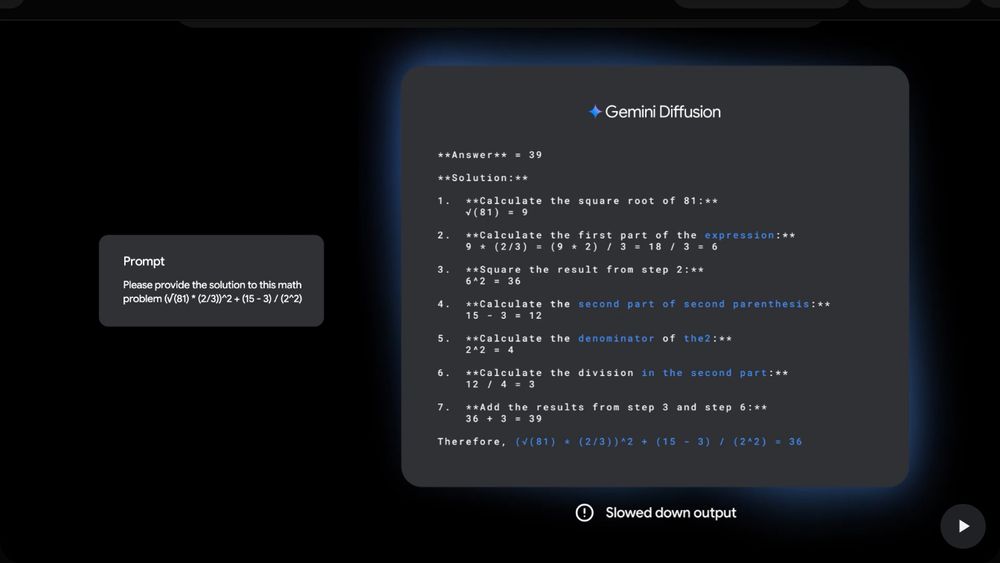

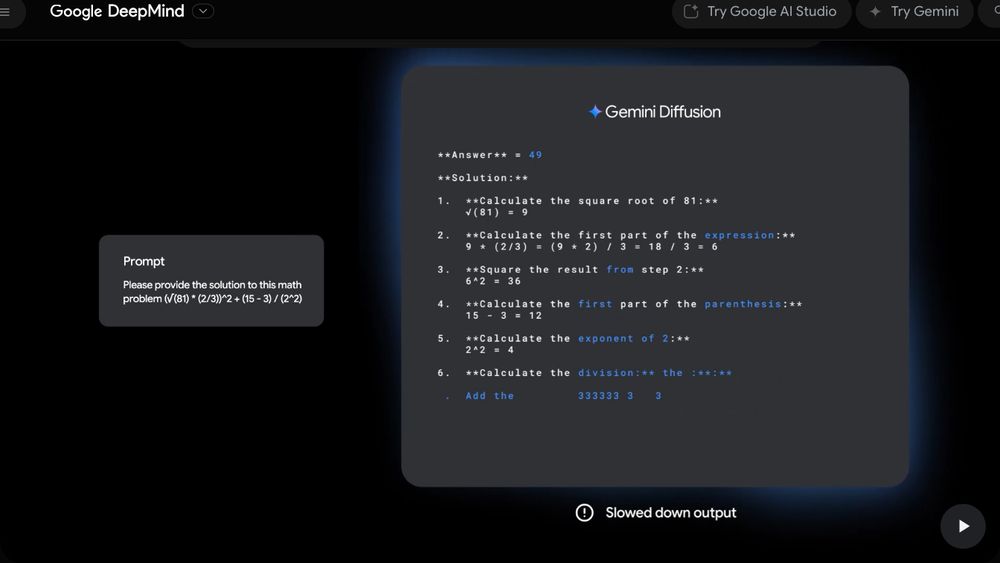

This diffusion has shenanigans. The number of tokens between two unchanged sequences can increase or decrease.

21.05.2025 12:51 — 👍 3 🔁 1 💬 1 📌 0

Search > Recommendation.

I find more interesting, high-signal things by querying what I like than by linearly going through a feed that learnt from my navigation.

Generally, giving users the ability to send reliable signals beats extracting signals from their background noise.

It is critical for scientific integrity that we trust our measure of progress.

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

That does look gorgeous.

Was there a specific loss to improve style?

It is hard to truly evaluate how good it is without taking into consideration the prompt adherence.

I’ll be there after lunch!

11.04.2025 09:46 — 👍 1 🔁 0 💬 1 📌 0

Oh, are you there all day?

11.04.2025 07:27 — 👍 0 🔁 0 💬 1 📌 0

In the config.json file, for Maverick the max_position_embeddings is 1048576 = 2**20.

For Scout, they just added a zero at the end (10485760). It is not even a power of 2. It makes me believe they didn’t test it.

They also set original_max_position_embeddings to 8192, which is not even 256K…
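The arithmetic is easy to check with the standard bit trick: a positive integer is a power of two exactly when it has a single bit set, so `n & (n - 1)` is zero. A quick sketch over the values quoted from the config files above (the dictionary keys are just labels):

```python
def is_power_of_two(n: int) -> bool:
    # A power of two has exactly one bit set; n - 1 clears that bit and sets
    # all lower bits, so the AND is zero only for powers of two.
    return n > 0 and n & (n - 1) == 0


values = {
    "Maverick max_position_embeddings": 1048576,
    "Scout max_position_embeddings": 10485760,
    "original_max_position_embeddings": 8192,
}

for name, n in values.items():
    # For a power of two, bit_length() - 1 is its base-2 logarithm.
    exp = f"2**{n.bit_length() - 1}" if is_power_of_two(n) else "not a power of two"
    print(f"{name}: {n} -> {exp}")
# Maverick max_position_embeddings: 1048576 -> 2**20
# Scout max_position_embeddings: 10485760 -> not a power of two
# original_max_position_embeddings: 8192 -> 2**13

# Scout's value is Maverick's times ten, i.e. 5 * 2**21.
assert 10485760 == 10 * 2**20 == 5 * 2**21
```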

I wonder what the story was for Phi-4 Mini. Its tokenizer for conversation is completely different from Phi-4.

06.04.2025 16:55 — 👍 0 🔁 0 💬 0 📌 0

That is a phenomenal step forward. If I understand this right, might it have dire consequences for PBKDFs like scrypt, which aim to require either large amounts of time or large amounts of space?

01.04.2025 11:03 — 👍 2 🔁 0 💬 1 📌 0

New paper: Simulating Time With Square-Root Space

people.csail.mit.edu/rrw/time-vs-...

It's still hard for me to believe it myself, but I seem to have shown that TIME[t] is contained in SPACE[sqrt{t log t}].

To appear in STOC. Comments are very welcome!
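For context on the scrypt question: scrypt's memory-hardness comes from its ROMix step, which keeps n blocks of 128·r bytes in memory, so the cost parameters translate directly into RAM. A small sketch using Python's `hashlib.scrypt` (available when Python is built against OpenSSL 1.1 or later); the password, salt and parameters are illustrative only, and this says nothing about whether the new result actually affects scrypt.

```python
import hashlib
import os


def scrypt_memory_bytes(n: int, r: int) -> int:
    # ROMix stores n blocks, each 128 * r bytes long.
    return 128 * r * n


n, r, p = 2**14, 8, 1  # illustrative cost parameters
print(scrypt_memory_bytes(n, r) // 2**20, "MiB")  # 16 MiB

salt = os.urandom(16)
key = hashlib.scrypt(
    b"correct horse battery staple",
    salt=salt,
    n=n,
    r=r,
    p=p,
    maxmem=64 * 2**20,  # allow up to 64 MiB so the 16 MiB working set fits
    dklen=32,
)
print(len(key))  # 32
```

Doubling `n` doubles both the memory and the sequential work, which is why scrypt is meant to be expensive in time and in space at once.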

Censorship is when the government silences speech.

With Mr Musk being in government, doesn’t that make every X suspension or shadow ban censorship?

Preventing political opponents from running in elections, by revoking their diplomas and imprisoning them on unjustified charges, is not democratic.

Is there a shred of reason behind Ekrem İmamoğlu's jailing?

apnews.com/article/turk...

do it

17.03.2025 14:36 — 👍 0 🔁 0 💬 0 📌 0

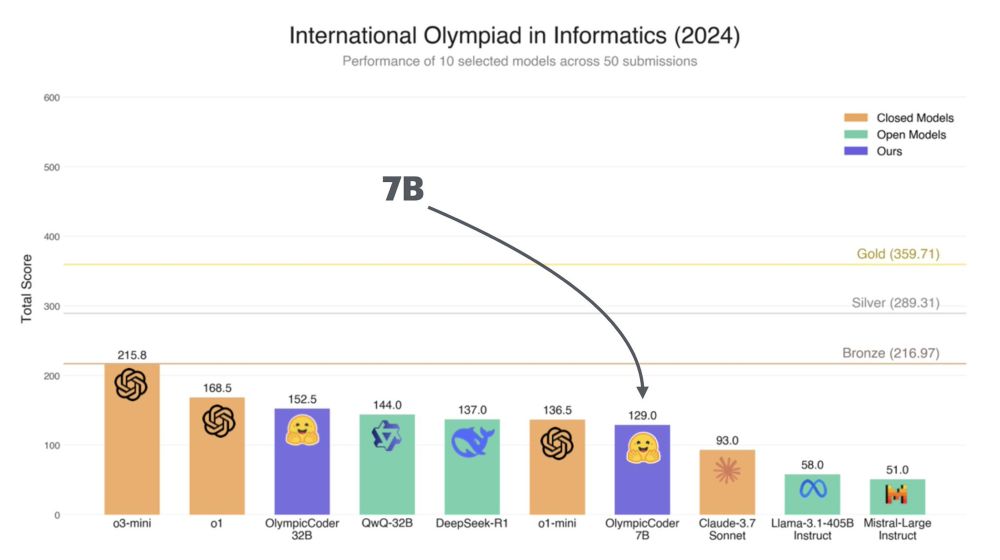

We've kept pushing our Open-R1 project, an open initiative to replicate and extend the techniques behind DeepSeek-R1

And even we were mind-blown by the results we got with this latest model we're releasing: ⚡️OlympicCoder

[1/3]

Is there an economic reason why the tariffs established during Mr Trump’s first term didn’t cause a recession, but those established now did?

11.03.2025 17:41 — 👍 0 🔁 0 💬 0 📌 0

The name Artefact is going the way of the dodo.

05.03.2025 21:33 — 👍 1 🔁 0 💬 0 📌 0

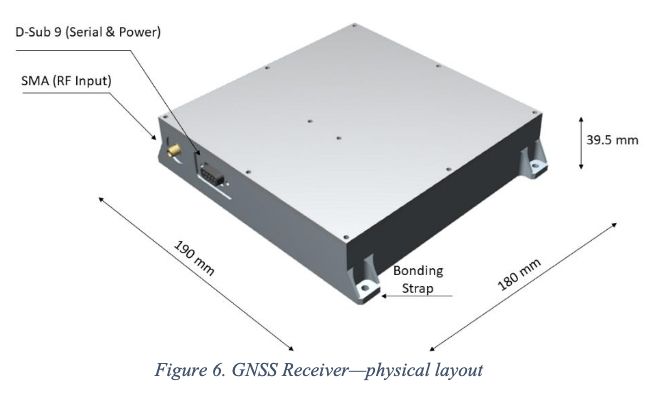

We can get GNSS spatial positioning all the way to the Moon, given the right receiver!

Greatly simplifies space travel.

I still believe we should set up a separate GNSS on every planet.

ntrs.nasa.gov/api/citation...

Italy will reintroduce nuclear energy through SMRs and fusion research.

Decarbonization fights against an existential risk. I approve!

www.mase.gov.it/comunicati/n...

I genuinely love negative-result papers. They let you avoid going down a rabbit hole that doesn't work.

04.03.2025 10:29 — 👍 1 🔁 0 💬 0 📌 0

It's a bit light on scientific details, though. All I yearn for is a leak of the number of experts and parameters.

28.02.2025 11:16 — 👍 1 🔁 0 💬 0 📌 0

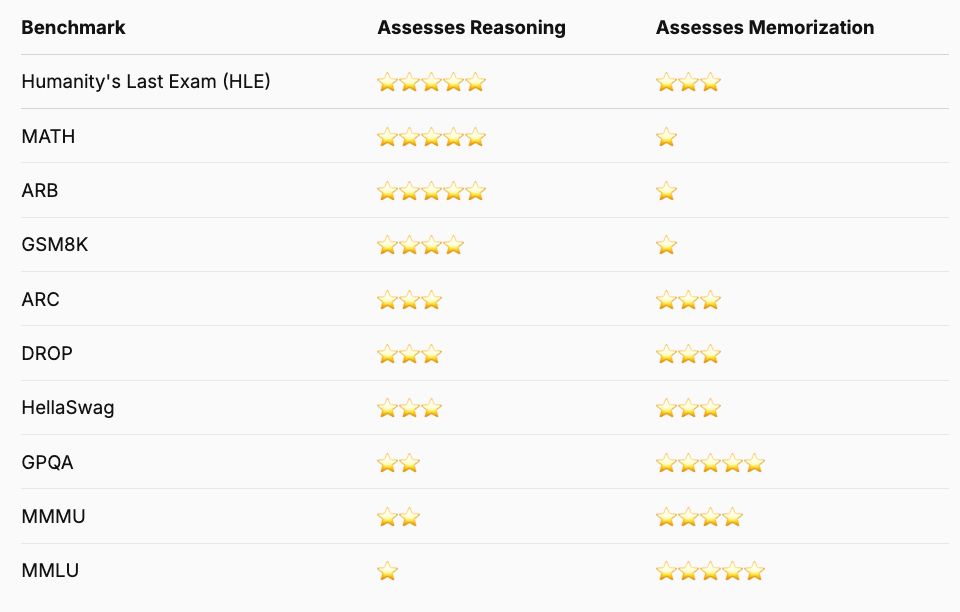

| Benchmark | Reasoning | Memorization |
|---|---|---|
| Humanity's Last Exam (HLE) | 5 stars | 3 stars |
| MATH | 5 stars | 1 star |
| ARB | 5 stars | 1 star |
| GSM8K | 4 stars | 1 star |
| ARC | 3 stars | 3 stars |
| DROP | 3 stars | 3 stars |
| HellaSwag | 3 stars | 3 stars |
| GPQA | 2 stars | 5 stars |
| MMMU | 2 stars | 4 stars |
| MMLU | 1 star | 5 stars |

LLMs get better at tool use and search.

Model memorization is thus less useful than reasoning.

Yet a lot of benchmarks still focus on the former.
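The star ratings in the benchmark list above can be treated as data to make the point concrete. A small sketch, using only those ratings, that picks out the benchmarks whose memorization rating exceeds their reasoning rating and orders all of them by how much reasoning outweighs memorization:

```python
# (reasoning, memorization) star ratings, copied from the benchmark list above.
RATINGS = {
    "HLE": (5, 3),
    "MATH": (5, 1),
    "ARB": (5, 1),
    "GSM8K": (4, 1),
    "ARC": (3, 3),
    "DROP": (3, 3),
    "HellaSwag": (3, 3),
    "GPQA": (2, 5),
    "MMMU": (2, 4),
    "MMLU": (1, 5),
}

# Benchmarks that lean more on memorization than on reasoning.
memorization_heavy = [
    name for name, (reasoning, memo) in RATINGS.items() if memo > reasoning
]
print(memorization_heavy)  # ['GPQA', 'MMMU', 'MMLU']

# Most reasoning-heavy first; sorted() is stable, so ties keep the list order.
by_gap = sorted(RATINGS, key=lambda name: RATINGS[name][1] - RATINGS[name][0])
print(by_gap)
# ['MATH', 'ARB', 'GSM8K', 'HLE', 'ARC', 'DROP', 'HellaSwag', 'MMMU', 'GPQA', 'MMLU']
```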

Mistral Large 3 soon, Llama 4 in a month or so, DeepSeek R2 later

25.02.2025 14:48 — 👍 2 🔁 0 💬 1 📌 0

Why did they put the parentheses? I thought that was the point of Spanish notation.

24.02.2025 09:42 — 👍 3 🔁 0 💬 0 📌 0

It is a bit sad that codec programs gave up on using GPGPU / CUDA, which is much more widespread than hardware acceleration.

23.02.2025 09:08 — 👍 0 🔁 0 💬 0 📌 0