Opt out now and opt out thoroughly: www.howtogeek.com/gmail-might-...

22.11.2025 17:21 — 👍 15 🔁 8 💬 0 📌 0

@pranavgoel.bsky.social

Researcher: Computational Social Science, Text as Data On the job market in Fall 2025! Currently a Postdoctoral Research Associate at Network Science Institute, Northeastern University Website: pranav-goel.github.io/

AI data centers are straining already fragile power and water infrastructures in communities around the world, leading to blackouts and water shortages. “Data centers are where environmental and social issues meet,” says Rosi Leonard, an environmentalist with @foeireland.bsky.social.

04.11.2025 18:40 — 👍 12 🔁 5 💬 0 📌 1

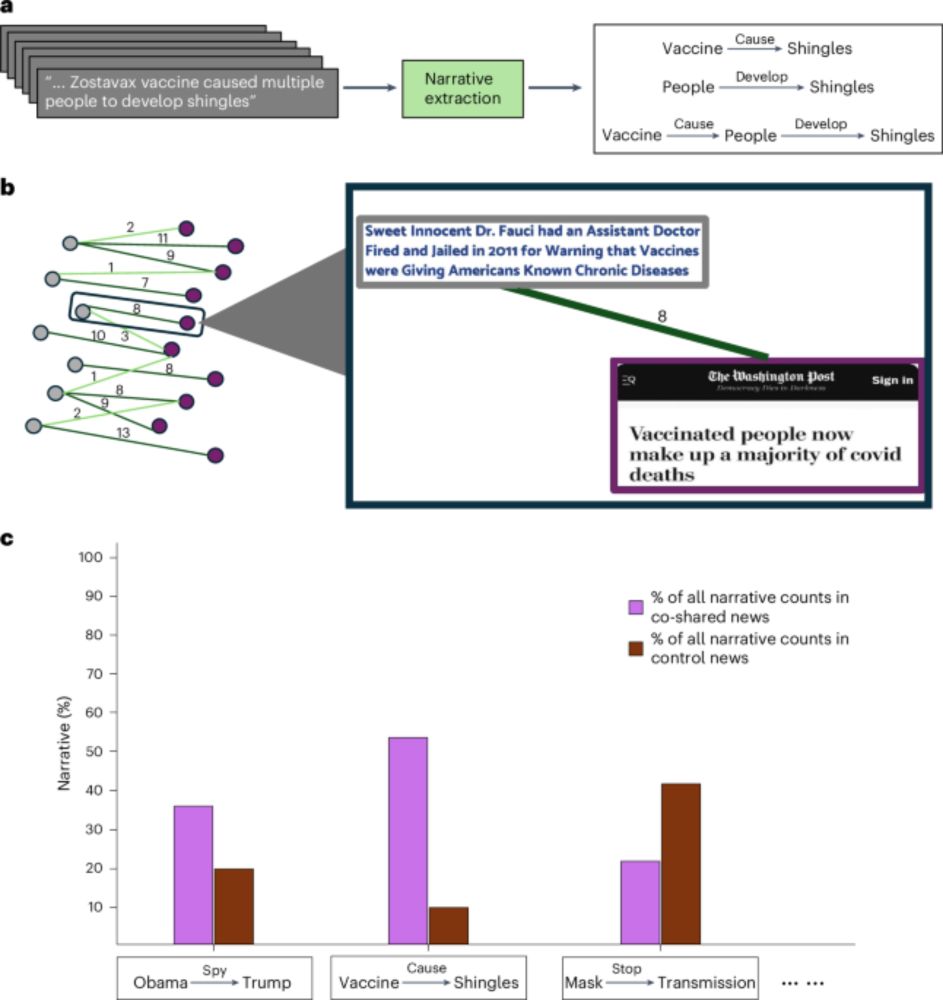

A diagram illustrating pointwise scoring with a large language model (LLM). At the top is a text box containing instructions: 'You will see the text of a political advertisement about a candidate. Rate it on a scale ranging from 1 to 9, where 1 indicates a positive view of the candidate and 9 indicates a negative view of the candidate.' Below this is a green text box containing an example ad text: 'Joe Biden is going to eat your grandchildren for dinner.' An arrow points down from this text to an illustration of a computer with 'LLM' displayed on its monitor. Finally, an arrow points from the computer down to the number '9' in large teal text, representing the LLM's scoring output. This diagram demonstrates how an LLM directly assigns a numerical score to text based on given criteria

LLMs are often used for text annotation, especially in social science. In some cases, this involves placing text items on a scale: e.g., 1 for liberal and 9 for conservative.

There are a few ways to accomplish this task. Which work best? Our new EMNLP paper has some answers🧵

arxiv.org/pdf/2507.00828
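For readers who want to see the pointwise setup from the diagram in code form, here is a minimal sketch. It assumes a hypothetical `llm` callable that takes a prompt string and returns the model's reply as a string; that callable, the function name `pointwise_score`, and the reply-parsing rule are illustrative assumptions, not the paper's implementation.

```python
import re
from typing import Callable, Optional

# Instruction text taken from the diagram description above.
INSTRUCTIONS = (
    "You will see the text of a political advertisement about a candidate. "
    "Rate it on a scale ranging from 1 to 9, where 1 indicates a positive view "
    "of the candidate and 9 indicates a negative view of the candidate."
)


def pointwise_score(text: str, llm: Callable[[str], str]) -> Optional[int]:
    """Ask the model for a single 1-9 rating of one text item.

    `llm` is a hypothetical stand-in for whatever model client is in use.
    Returns None when the reply contains no standalone digit from 1 to 9.
    """
    prompt = f"{INSTRUCTIONS}\n\nAd text: {text}\n\nAnswer with a single number."
    reply = llm(prompt)
    # Accept the first standalone digit 1-9; "10" or "0" will not match.
    match = re.search(r"\b([1-9])\b", reply)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    # Stub model so the sketch runs without any external service.
    def stub(prompt: str) -> str:
        return "9"

    print(pointwise_score("Joe Biden is going to eat your grandchildren for dinner.", stub))
```

Each item is scored on its own here, which is what makes the approach pointwise; swapping the stub for a real model client is the only change needed to try it on actual data.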

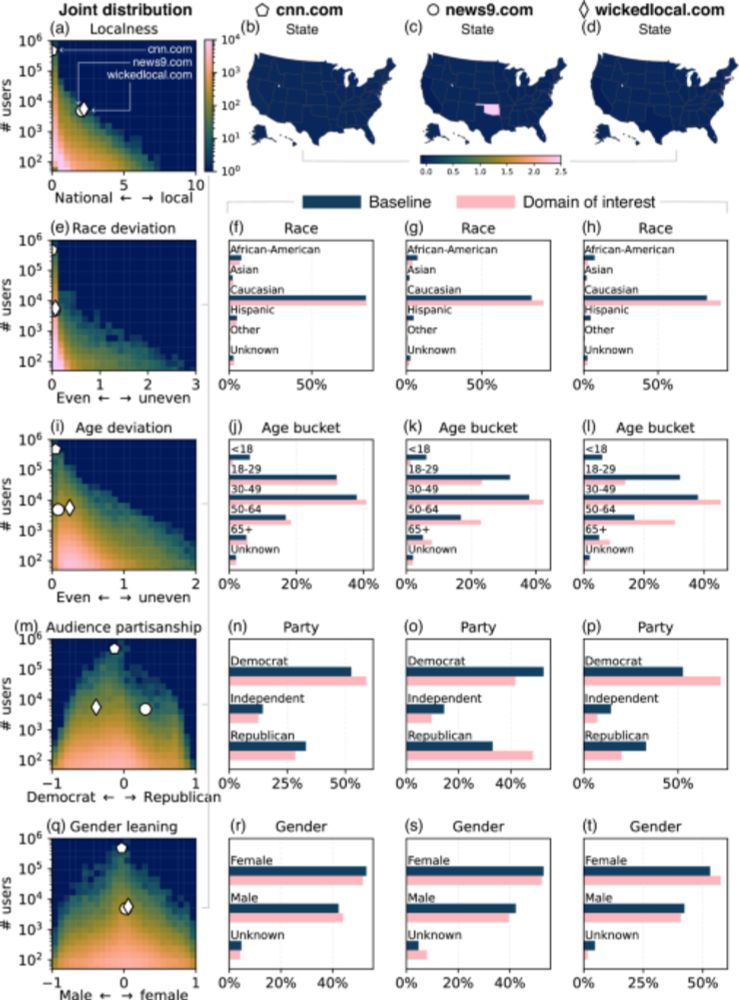

ICYMI, our DomainDemo dataset, which describes how different demographic groups share domains on Twitter, is now available to download!

📄 Data descriptor: doi.org/10.1038/s415...

📈 Interactive app to explore the data: domaindemo.info

💽 Dataset: doi.org/10.5281/zeno...

For more, check out the paper!

nature.com/articles/s41562-025-02223-4

arxiv.org/abs/2308.06459

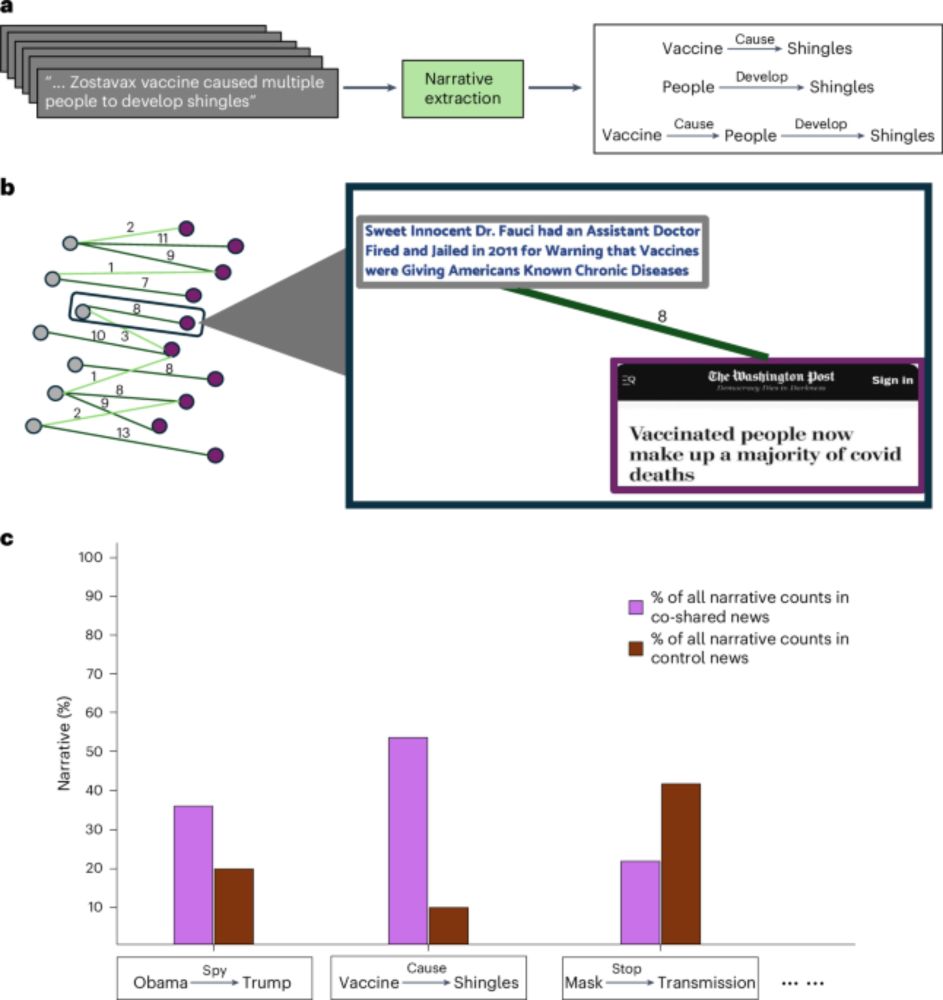

For journalists and especially headline writers: even if a discrete piece of information is true, you've got to think carefully about whether the way you're presenting it is useful for promoting narratives that aren't.

11.06.2025 15:39 — 👍 0 🔁 0 💬 1 📌 0

Big picture: misleading claims are both *more prevalent* and *harder to moderate* than implied in current misinformation research. It's not as simple as fact-checking false claims or downranking/blocking unreliable domains. The extent to which information (mis)informs depends on how it is used!

11.06.2025 15:39 — 👍 0 🔁 0 💬 1 📌 0

If you want to advance misleading narratives — such as COVID-19 vaccine skepticism — supporting information from reliable sources is more useful than similar information from unreliable sources, if you have it.

11.06.2025 15:39 — 👍 1 🔁 1 💬 1 📌 0

This calls for a reconsideration of what misinformation is, how widespread it is, and the extent to which it can be moderated. Our core claim is that users are *using* information to promote their identities and advance their interests, not merely consuming information for its truth value.

11.06.2025 15:39 — 👍 1 🔁 0 💬 1 📌 0

We find that mainstream stories with high scores on this measure are significantly more likely to contain narratives present in misinformation content. This suggests that reliable information — which has a much wider audience — can be repurposed by users promoting potentially misleading narratives.

11.06.2025 15:39 — 👍 0 🔁 0 💬 1 📌 0

We do this by looking at co-sharing behavior on Twitter/X. We first identify users who frequently share information from unreliable sources, and then examine the information from reliable sources that those same users also share at disproportionate rates.
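A rough way to picture the co-sharing idea in code, as a sketch only: the function name, the `min_unreliable_shares` threshold, the add-one smoothing, and the ratio used as a score are all illustrative assumptions and not the measure defined in the paper.

```python
from collections import defaultdict


def cosharing_scores(shares, unreliable_domains, min_unreliable_shares=2):
    """Score each reliable-source URL by how over-represented it is among
    users who frequently share unreliable sources.

    shares: iterable of (user, domain, url) tuples (hypothetical input format).
    Returns {url: ratio}; ratios above 1 mean flagged users share the URL at a
    higher (smoothed) rate than other users.
    """
    shares = list(shares)

    # Step 1: find users who frequently share unreliable sources.
    unreliable_counts = defaultdict(int)
    users = set()
    for user, domain, _ in shares:
        users.add(user)
        if domain in unreliable_domains:
            unreliable_counts[user] += 1
    flagged = {u for u, c in unreliable_counts.items() if c >= min_unreliable_shares}
    others = users - flagged

    # Step 2: collect who shares each reliable-source URL.
    sharers = defaultdict(set)
    for user, domain, url in shares:
        if domain not in unreliable_domains:
            sharers[url].add(user)

    # Step 3: compare smoothed sharing rates between the two groups.
    scores = {}
    for url, who in sharers.items():
        rate_flagged = (len(who & flagged) + 1) / (len(flagged) + 2)
        rate_others = (len(who & others) + 1) / (len(others) + 2)
        scores[url] = rate_flagged / rate_others
    return scores


if __name__ == "__main__":
    # Toy data with made-up users and example domains.
    toy = [
        ("a", "junk.example", "junk.example/1"),
        ("a", "junk.example", "junk.example/2"),
        ("a", "news.example", "news.example/vax"),
        ("b", "junk.example", "junk.example/1"),
        ("b", "junk.example", "junk.example/3"),
        ("b", "news.example", "news.example/vax"),
        ("c", "news.example", "news.example/weather"),
        ("d", "news.example", "news.example/weather"),
    ]
    print(cosharing_scores(toy, {"junk.example"}))
```

In the toy data, the story shared only by the two flagged users scores above 1 and the story shared only by the other users scores below 1.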

11.06.2025 15:39 — 👍 0 🔁 0 💬 1 📌 0

Our paper uses this dynamic — users strategically repurposing true information from reliable sources to advance misleading narratives — to move beyond conceptualizing misinformation as source reliability and measuring it by just counting sharing of / exposure to unreliable sources.

11.06.2025 15:39 — 👍 1 🔁 0 💬 1 📌 0

Washington Post article: screenshot of the headline "Vaccinated people now make up a majority of covid deaths"

Take, for example, this headline from the Washington Post. The source is reliable and the information is, strictly speaking, true. But the people most excited to share this story wanted to advance a misleading claim: that the COVID-19 vaccine was ineffective at best.

11.06.2025 15:39 — 👍 2 🔁 0 💬 1 📌 0

But users who want to advance misleading claims likely *prefer* to use reliable sources when they can. They know others see reliable sources as more credible!

11.06.2025 15:39 — 👍 1 🔁 0 💬 1 📌 0

When thinking about online misinformation, we'd really like to identify/measure misleading claims; unreliable sources are only a convenient proxy.

11.06.2025 15:39 — 👍 0 🔁 0 💬 1 📌 0

In our new paper (w/ @jongreen.bsky.social, @davidlazer.bsky.social, & Philip Resnik), now up in Nature Human Behaviour (nature.com/articles/s41562-025-02223-4), we argue that this tension really speaks to a broader misconceptualization of what misinformation is and how it works.

11.06.2025 15:39 — 👍 11 🔁 6 💬 1 📌 1

There's a lot of concern out there about online misinformation, but when we try and measure it by identifying sharing of/traffic to unreliable sources, it looks like a tiny share of users' information diets. What gives?

11.06.2025 15:39 — 👍 4 🔁 1 💬 1 📌 0

Goel et al. examine why some factually correct news articles are often shared alongside fake news claims on social media. @pranavgoel.bsky.social @jongreen.bsky.social @davidlazer.bsky.social

www.nature.com/articles/s41...

If you are at #WebSci2025, join our "Beyond APIs: Collecting Web Data for Research using the National Internet Observatory" - a tutorial that addresses the critical challenges of web data collection in the post-API era.

national-internet-observatory.github.io/beyondapi_websci25/

If you study networks, or have been stuck listening to people who study networks for long enough (sorry to my loved ones), you may have heard that open triads – V shapes – in social networks tend to turn into closed triangles. But why does this happen? In part, because people repost each other.

01.04.2025 20:00 — 👍 48 🔁 18 💬 3 📌 3

Congratulations!

18.02.2025 15:46 — 👍 1 🔁 0 💬 1 📌 0