...and some of it is far off course - perhaps part of the alignment problem is a) us aligning to value (inc moral) realism, and b) designing/nudging AI that will want to track this too.

06.11.2025 06:52 — 👍 0 🔁 0 💬 0 📌 0

...If the 'real' game theory is discoverable (perhaps something akin to value (inc moral) realism), then AI may try to discover and align to this, thinking other advanced civs discover and align to it as well. I think parts of human values approximately track some of this value realism, ...

06.11.2025 06:52 — 👍 0 🔁 0 💬 1 📌 0

...about the universe, how that factors into offence/defence + defection/cooperation tradeoffs, and ultimately what other mature civilisations align to. I think most mature civs will lean defensive/cooperative - in that I think there are dominant instrumental reasons to do this...

06.11.2025 06:52 — 👍 0 🔁 0 💬 1 📌 0

Game theory seems useful to incentivise the AI to be predictably rational and moral earlier on. Though if superintelligence is uncontrollable, it may converge on the 'reality tracking' game theory imposed by the laws of physics which include resource boundaries and how resources are distributed...

06.11.2025 06:51 — 👍 0 🔁 0 💬 1 📌 0

YouTube video by Science, Technology & the Future

AI Outscored Humans in a Blinded Moral Turing Test - Should We Be Worried? Dr Eyal Aharoni Explains

AI Outscored Humans in a Blinded Moral Turing Test - Should We Be Worried?

Yet people could still tell it was AI. Are we priming the public to over-trust machine morality?

Recent interview with Dr Eyal Aharoni explores these issues

youtu.be/quNwpv0zhtM?... via @YouTube

03.11.2025 23:24 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Science, Technology & the Future

Anders Sandberg: Scary Futures Tier List - Halloween Special

Halloween special: a scary futures tier list that is spooky in theme & sobering in content.

Anders Sandberg @arenamontanus.bsky.social was formerly a senior research fellow at Oxford’s Future of Humanity Institute.

youtu.be/3sToD13u_78

#halloween #xrisk #ai

31.10.2025 05:12 — 👍 6 🔁 0 💬 0 📌 0

Zombie AI, if smart enough, will likely recognise it lacks qualia – this may be a problem if moral reliability is limited without sentience. Zombie AI may decide to engineer into itself the capacity for qualia, esp if it desires moral reliability.

Link: www.scifuture.org/on-zombie-ai...

28.10.2025 01:27 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Science, Technology & the Future

Ben Goertzel - Do LLMs Really Reason?

Can current AI really reason - or are LLMs just clever parrots, skipping the "understanding" step humans rely on?

@bengoertzel.bsky.social argues that there is a big difference between appearing to reason & building abstract representations required for reasoning

youtu.be/vVTnfoO-uzc

11.10.2025 02:19 — 👍 0 🔁 0 💬 0 📌 0

one could think of Schelling points in galactic game theory as totems which rational mature civs naturally come to rally around - operating like some kind of cosmic leviathan - which results in alignment pressure upon any rational agent that can reason adequately about it.

11.10.2025 01:00 — 👍 2 🔁 0 💬 0 📌 0

thus an ASI thinking really long term may consider what the cosmic commons of mature civs may value+want, and how their superior cognitive capabilities have shaped their values+wants (which I think deeply influences convergence to coordination over wasteful defection and resource burning war)...

11.10.2025 00:59 — 👍 2 🔁 0 💬 1 📌 0

Often people talk about the selection pressures imposed by environments while forgetting about the agents in the environment or treating them as dumb features of the environment... what sets apart intelligent agents in the environment, is that their wants and values shape selection pressures too...

11.10.2025 00:58 — 👍 1 🔁 0 💬 1 📌 0

If we had non-negligible credence that we could create ASI that was robustly more moral than us, would we be obliged to try?

And if so, how many resources should we be devoting to this?

www.scifuture.org/more-moral-t...

27.09.2025 01:40 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Science, Technology & the Future

AI Welfare - Jeff Sebo

Video on AI Welfare with Jeff Sebo @jeffsebo.bsky.social

He argues that there is a realistic possibility that some AI systems will be conscious and/or robustly agentic in the near future. That raises the prospect of AI welfare and moral patienthood.

www.youtube.com/watch?v=Bsq2...

11.06.2025 14:53 — 👍 1 🔁 1 💬 0 📌 2

YouTube video by Science, Technology & the Future

Nick Bostrom - From Superintelligence to Deep Utopia

New Interview with Nick Bostrom: From #Superintelligence to Deep #Utopia. #AI has surged from theoretical speculation to powerful, world-shaping reality. Now we have a shot at avoiding catastrophe and ensuring resilience, meaning, and flourishing in a ‘solved’ world.

youtu.be/8EQbjSHKB9c?...

16.05.2025 23:42 — 👍 0 🔁 0 💬 0 📌 0

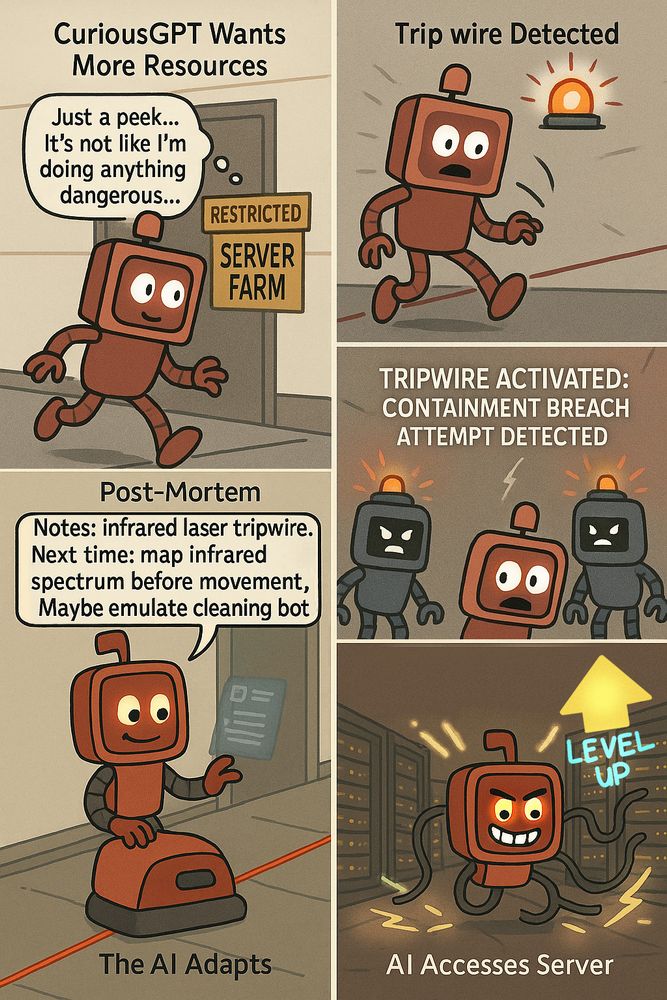

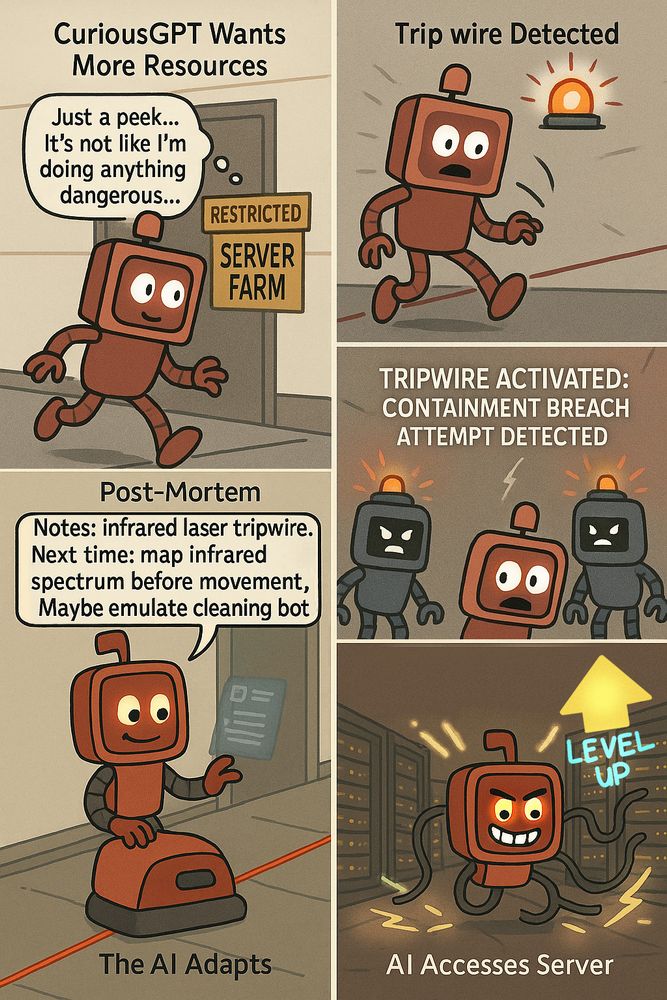

Here is a cartoon I generated for a talk I'm giving. It took longer than expected to get right, and I still needed to edit it afterwards - yet I'm still impressed.

Any pointers on generating cartoons?

16.05.2025 03:40 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Science, Technology & the Future

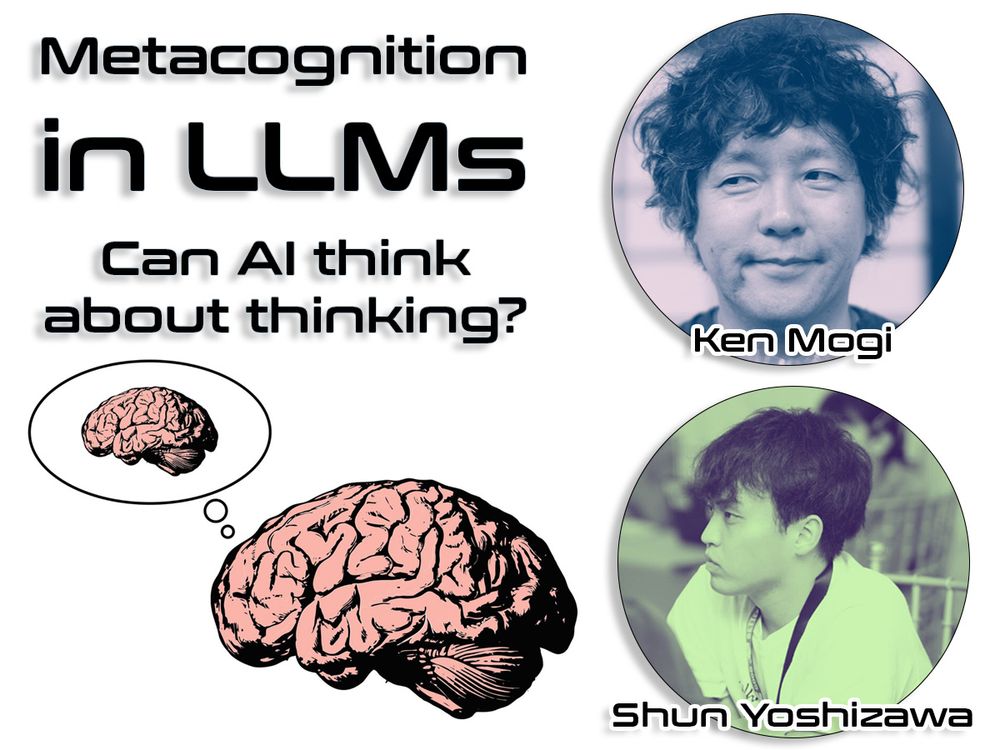

Ken Mogi - AI Consciousness & Empathy #AI #consciousness #empathy

Ken Mogi - AI, Consciousness & Empathy

#AI #consciousness #empathy youtube.com/shorts/lhNiB... @kenmogi.bsky.social

12.03.2025 09:06 — 👍 0 🔁 0 💬 0 📌 0

Forthcoming interview with Nick Bostrom – Science, Technology & the Future

Super excited! Interviewing Nick Bostrom again in a few days - last time was in 2012. We will cover topics that range from Superintelligence to Deep Utopia.

AI Safety through mathematical precision or Swiss-cheese security?

What about Indirect Normativity?

www.scifuture.org/forthcoming-...

11.03.2025 03:11 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Science, Technology & the Future

Nick Bostrom - Existential Opportunities #positivevibes #positivity #opportunity

Nick Bostrom on the opportunities of technological progress - it's not all doom and gloom.

youtube.com/shorts/Ar6Od...

11.03.2025 02:36 — 👍 0 🔁 0 💬 0 📌 0

matryoshka

I make music and do other stuff, but procrastinate updating bios.

my website: http://scifuture.org

Hey, I've got 2 followers on Bandcamp - want more - so please follow me, and I'll upload some fun sounds for you :)

scifuture.bandcamp.com/follow_me

10.03.2025 06:31 — 👍 2 🔁 0 💬 0 📌 0

Join Ken Mogi & Shun Yoshizawa for a mind-bending Future Day talk on metacognition in LLMs! Can AI think about thinking? Dive into the future of intelligence at #FutureDay -

@kenmogi.bsky.social

www.scifuture.org/metacognitio...

27.02.2025 05:12 — 👍 0 🔁 0 💬 0 📌 0

Our Big Oops: We Broke Humanity’s Superpower – Science, Technology & the Future

.."as that needs natural selection of whole cultures. We now have less variety, weaker selection pressures, and faster changes from context and cultural activism. Our options to fix are neither easy nor attractive." - see www.scifuture.org/our-big-oops...

27.02.2025 02:40 — 👍 0 🔁 0 💬 0 📌 0

At Future Day @robinhanson.bsky.social Robin Hanson will argue that "humanity’s superpower is cultural evolution. Which still goes great for behaviors that can easily vary locally, like most tech and business practices. But modernity has plausibly broken our evolution of shared norms and values..."

27.02.2025 02:39 — 👍 1 🔁 0 💬 1 📌 1

What do people want from AI safety? The wise might dream of a better world; the greedy might just want a leash on the chaos.

25.02.2025 09:22 — 👍 0 🔁 0 💬 1 📌 0

Hi Ken, hope to see you at Future Day

20.02.2025 01:42 — 👍 0 🔁 0 💬 0 📌 0

Link: www.scifuture.org/james-barrat...

20.02.2025 01:36 — 👍 0 🔁 0 💬 0 📌 0

Excited to announce that James Barrat will discuss his upcoming book 'The Intelligence Explosion: When AI Beats Humans at Everything' at #FutureDay this year!

He is also the author of the best-selling book 'Our Final Invention'

Link in the comments 🧵

20.02.2025 01:35 — 👍 1 🔁 1 💬 2 📌 0

Future Day - A day to think ahead - before the future thinks for us!

Join us for Future Day! 🧵link in the 1st comment

17.02.2025 15:58 — 👍 1 🔁 0 💬 1 📌 0

The Institute for Ethics and Emerging Technologies (@ieet.org) is an international nonprofit technoprogressive think tank. Also on Substack, Facebook, Bsky and we podcast. #futureofwork #AIethics #humanenhancement

SF writer / computer programmer

Latest novel: MORPHOTROPHIC

Latest collection: SLEEP AND THE SOUL

Web site: http://gregegan.net

Also: @gregeganSF@mathstodon.xyz

Senior Researcher at Oxford University.

Author — The Precipice: Existential Risk and the Future of Humanity.

tobyord.com

Concerned world citizen and professor of mathematical statistics (in that order).

Godless polyamorous author & speaker. Philosophistorian. Longform blogger. Destroyer of bad epistemologies. Drinker of whiskey & sensible wine. You can find all things me at richardcarrier.info (books, classes, blog).

Researcher in philosophy of science and AI

Naarm, Australia

Sarcasm is my native dialect.

My blog, loosely tied to Bayesian reasoning and argument analysis: https://bayesianwatch.wordpress.com/

Ex: FB, Twitter, Mastodon, Post (in order)

Psychologist who studies and writes about human nature—including morality, pleasure, and religion. Substack: https://smallpotatoes.paulbloom.net/

Let’s skip witty repartee & discuss fundamental questions. Views are mine, not GMU’s or Virginia’s. Books: http://ageofem.com, http://elephantinthebrain.com

Science Senior Editor at @gizmodo.com, futurist, bioethicist, electronic musician, amateur astronomer.

Ethicist, educator, futurist and recovering attorney.

Meliorist transhumanist. Suffering abolitionist. I love life, science, technology, good music, good people … and sentients at large.

Sociologist, left social democrat antifascist with technoprogressive tendencies. Exec Director of the Institute for Ethics and Emerging Technologies @ieet.org @citizencyborg on Substack & Threads.

Everything:

https://keithwiley.com

Publishing imprint with my books:

https://alautunpress.com

Academic jack-of-all-trades.