21.03.2025 17:46 — 👍 0 🔁 0 💬 0 📌 0

Erik

@erikkaum.bsky.social

SWE @hf.co

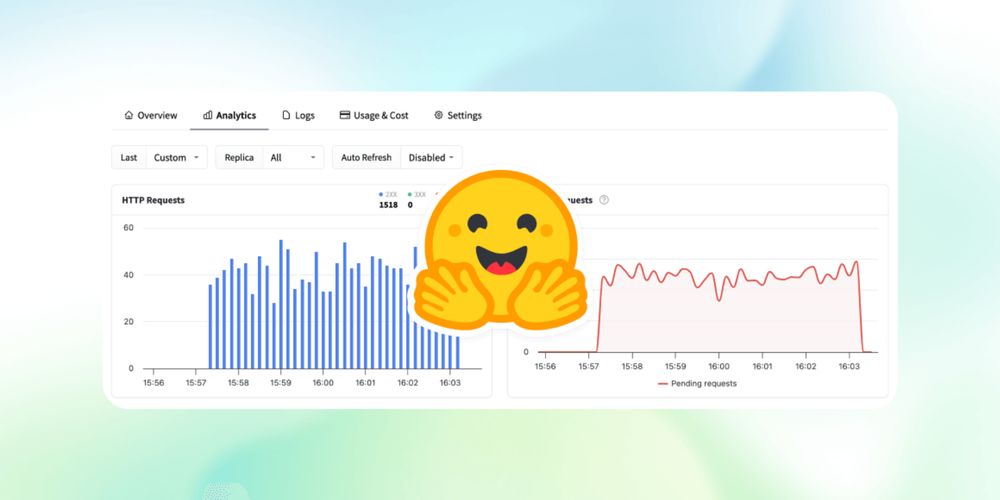

We just refreshed 🍋 our analytics in @hf.co endpoints. More info below!

Morning workout at the @hf.co Paris office is imo one of the best perks.

13.03.2025 11:24 — 👍 3 🔁 0 💬 0 📌 0

Link to deploy:

endpoints.huggingface.co/huggingface/...

Gemma 3 is live 🔥

You can deploy it from endpoints directly with optimally selected hardware and configuration.

Give it a try 👇

Apparently, mom is a better engineer than I am.

24.12.2024 13:03 — 👍 4 🔁 0 💬 0 📌 0

today as part of a course, I implemented a program that takes a bit stream like so:

10001001110111101000100111111011

and decodes the Intel 8088 assembly from it like:

mov si, bx

mov bx, di

only works on the mov instruction, register to register.

code: github.com/ErikKaum/bit...
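For anyone curious what that decoding looks like, here is a minimal Python sketch. It is not the code from the linked repo, just an illustration assuming the standard 8086/8088 encoding of a register-to-register mov: opcode bits 100010, then the D and W flags, followed by a byte holding MOD, REG and R/M. The function name `decode_reg_to_reg_mov` is made up for this example.

```python
# Register names indexed by the 3-bit REG / R/M field of the 8086/8088 encoding.
REGS_8 = ["al", "cl", "dl", "bl", "ah", "ch", "dh", "bh"]    # used when W = 0
REGS_16 = ["ax", "cx", "dx", "bx", "sp", "bp", "si", "di"]   # used when W = 1


def decode_reg_to_reg_mov(bits: str) -> list[str]:
    """Decode a string of '0'/'1' characters into `mov dst, src` lines.

    Hypothetical helper: it only understands the two-byte
    register-to-register form of mov and rejects everything else.
    """
    if len(bits) % 16 != 0 or set(bits) - {"0", "1"}:
        raise ValueError("expected a bit string made of whole 2-byte instructions")

    lines = []
    for i in range(0, len(bits), 16):
        first = int(bits[i:i + 8], 2)
        second = int(bits[i + 8:i + 16], 2)

        # Byte 1: 6-bit opcode, then D (direction) and W (word/byte) flags.
        if first >> 2 != 0b100010:
            raise ValueError(f"not a register/memory mov: {first:08b}")
        d = (first >> 1) & 1
        w = first & 1

        # Byte 2: MOD (2 bits), REG (3 bits), R/M (3 bits).
        if second >> 6 != 0b11:
            raise ValueError("only register-to-register mode (MOD = 11) is handled")
        reg = (second >> 3) & 0b111
        rm = second & 0b111

        names = REGS_16 if w else REGS_8
        # D = 1 means REG is the destination, D = 0 means REG is the source.
        dst, src = (names[reg], names[rm]) if d else (names[rm], names[reg])
        lines.append(f"mov {dst}, {src}")
    return lines


print("\n".join(decode_reg_to_reg_mov("10001001110111101000100111111011")))
# mov si, bx
# mov bx, di
```

The two lookup tables do most of the work: the W flag picks the 8-bit or 16-bit register names, and the D flag decides which of the two fields is the destination.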

Ambition is a paradox.

You should always aim higher, but that easily becomes a state where you're never satisfied. Just reached 10k MRR. Now there's the next goal of 20k.

Sharif has a good talk on this: emotional runway.

How do you deal with this paradox?

video: www.youtube.com/watch?v=zUnQ...

There’s some deep wisdom in that as well!

08.12.2024 14:38 — 👍 1 🔁 0 💬 0 📌 0

Qui Gon Jinn sharing some insightful prompting wisdom 👌🏼

08.12.2024 09:03 — 👍 11 🔁 3 💬 1 📌 0

Exactly.

Suppose we have an algorithm that is guaranteed to give output according to a structure, with the caveat that it might run out of tokens.

Should this still be classified as structured generation?

🤔

05.12.2024 20:22 — 👍 1 🔁 0 💬 0 📌 0

CUDA libraries..? So they have access to GPUs as well? 👀

05.12.2024 19:33 — 👍 1 🔁 0 💬 1 📌 0

A video series on how to develop, profile and compare CUDA kernels would be such a banger.

And allow a lot more tinkerers to enter the field.

Hell yeah 🔥

How would you classify the edge case when running out of tokens?

E.g. if it goes into a "\n" loop and runs out of tokens.
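A toy Python sketch of that edge case, purely for illustration. The fixed structure, the `generate` function and the two "models" are all made up here and don't correspond to any real structured-generation library. At every step the decoder only allows the next structural token or a newline, so each prefix is valid, yet a model stuck on "\n" never completes the structure before the budget runs out.

```python
import json

# Hypothetical fixed structure: the JSON object {"a":1}, token by token.
REQUIRED = ["{", '"a"', ":", "1", "}"]


def generate(choose, max_tokens):
    """Toy constrained decoder.

    `choose` stands in for the model: it receives the allowed tokens and
    returns one of them. Returns (text, complete).
    """
    out, pos = [], 0
    for _ in range(max_tokens):
        if pos == len(REQUIRED):
            break  # structure finished, stop early
        # Whitespace is always legal between JSON tokens, so "\n" is allowed.
        allowed = [REQUIRED[pos], "\n"]
        tok = choose(allowed)
        if tok not in allowed:
            raise ValueError("constraint violated")
        out.append(tok)
        if tok == REQUIRED[pos]:
            pos += 1
    return "".join(out), pos == len(REQUIRED)


def cooperative(allowed):
    return allowed[0]   # always takes the structural token


def newline_loop(allowed):
    return "\n"         # degenerate model stuck on newlines


text, complete = generate(cooperative, max_tokens=8)
print(complete, json.loads(text))   # True {'a': 1}

text, complete = generate(newline_loop, max_tokens=8)
print(complete, repr(text))         # False '\n\n\n\n\n\n\n\n'
```

In the second run no constraint was ever violated, but the output still doesn't parse as the target structure, which is exactly the classification question.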

Hah, fair!

01.12.2024 11:01 — 👍 1 🔁 0 💬 0 📌 0

Interesting, for me it's snappy as hell, maybe things aren't cached as well in Costa Rica? 🤔

01.12.2024 10:15 — 👍 0 🔁 0 💬 1 📌 0

pro tip for the borrow-checker, using .clone() everywhere is okay 🙌

01.12.2024 10:13 — 👍 0 🔁 0 💬 1 📌 0

it's this time of the year 😍

01.12.2024 10:12 — 👍 2 🔁 0 💬 0 📌 0

Or you can let the model run free in a constrained environment.

I’m tinkering on this: bsky.app/profile/erik...

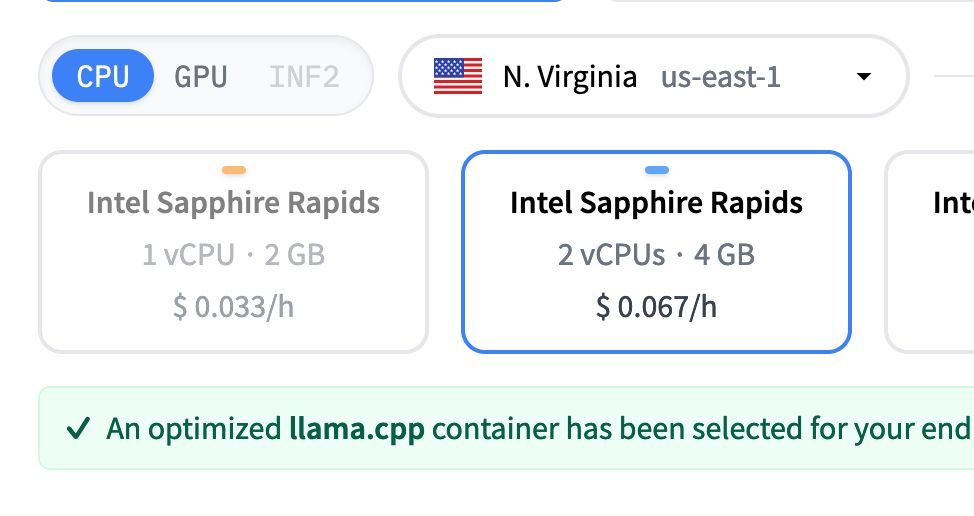

Hugging Face inference endpoints now support CPU deployment for llama.cpp 🚀 🚀

Why is this a huge deal? llama.cpp is well-known for running very well on CPU. If you're running small models like Llama 1B or embedding models, this will definitely save tons of money 💰 💰

Nice! This is so neat 🙌🏽

26.11.2024 22:23 — 👍 1 🔁 0 💬 0 📌 0

Let's go! We are releasing SmolVLM, a smol 2B VLM built for on-device inference that outperforms all models at similar GPU RAM usage and token throughput.

SmolVLM can be fine-tuned on a Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

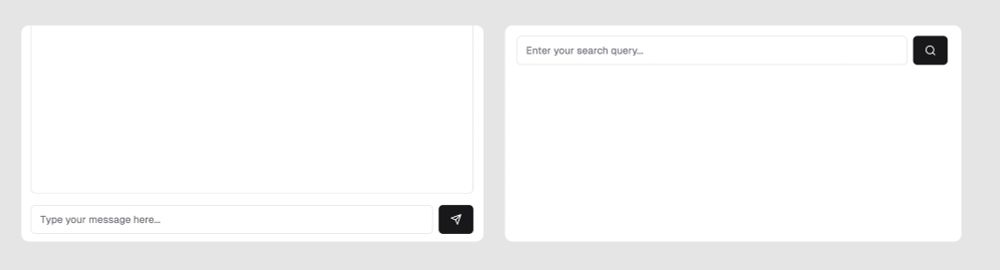

Is it just me or does it intuitively align that chat bars are at the bottom of the page and search bars at the top?

I've noticed that Perplexity positions the question at the top and generates the text below.

Is it because they want to position more as a search engine?

The hope I have with Bluesky is that I as a user can do moderation more efficiently than I could on Twitter 🤞🏼

25.11.2024 18:18 — 👍 12 🔁 0 💬 0 📌 0

Feeds and starter packs helped me a lot, at least. E.g.: bsky.app/profile/did:...

25.11.2024 13:19 — 👍 1 🔁 0 💬 1 📌 0

Indeed, the beauty of open source 🔥

24.11.2024 14:28 — 👍 1 🔁 0 💬 0 📌 0

Can’t wait to have that feature!

It’s kinda mind blowing that it’s not a thing on other social media platforms 🤷🏼‍♂️

code boxes with syntax highlighting 😍

24.11.2024 10:19 — 👍 4 🔁 0 💬 1 📌 0

typical engineer writing copy

in plain English I'd say "2 conversions at the same time"