Expect future LLMs to adopt DroPE in their pre-training, and no longer be limited to their pre-trained context lengths.

12.01.2026 10:30 — 👍 1 🔁 0 💬 0 📌 0

SelfExtend (arxiv.org/abs/2401.01325), a no-fine-tuning context extension method I covered in this video youtu.be/TV7qCk1dBWA, is not even mentioned, but it was evaluated in an earlier eval paper (arxiv.org/abs/2409.12181) and found to be rubbish (top-right).

12.01.2026 10:26 — 👍 0 🔁 0 💬 1 📌 0

This beats all other context extension methods, with or without fine-tuning, by a considerable margin.

12.01.2026 10:21 — 👍 0 🔁 0 💬 1 📌 0

Dropping Positional Embeddings (DroPE), yes, just discarding them towards the end of LLM pre-training, unlocks context generalization in LLMs way beyond their pre-trained context length.

12.01.2026 10:19 — 👍 0 🔁 0 💬 1 📌 0
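To make "dropping positional embeddings" concrete, here is a toy single-query attention score for a RoPE-style model, with the rotary step behind a switch. This is only an illustration under assumptions. The helper names `rope_rotate` and `attn_score`, the `use_rope` flag, and the 10000 base are hypothetical choices for the sketch. It says nothing about when or how DroPE removes the embeddings during training.

```python
import math


def rope_rotate(vec, pos, base=10000.0):
    """Rotate consecutive pairs of `vec` by angles proportional to `pos` (RoPE-style)."""
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(0, d, 2):
        # Pair i//2 rotates with frequency base^(-i/d); the first pair spins fastest.
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out


def attn_score(q, k, q_pos, k_pos, use_rope=True):
    """Scaled dot-product score between one query and one key.

    With use_rope=True the score depends on the offset k_pos - q_pos.
    With use_rope=False (positional embeddings dropped) positions are ignored.
    """
    if use_rope:
        q = rope_rotate(q, q_pos)
        k = rope_rotate(k, k_pos)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))


q = [1.0, 0.0, 0.5, -0.5]
k = [0.3, 0.8, -0.2, 0.4]

# With RoPE: the same offset gives the same score, a different offset does not.
print(attn_score(q, k, 5, 2), attn_score(q, k, 105, 102), attn_score(q, k, 5, 5))
# Without RoPE: the score is identical at any pair of positions.
print(attn_score(q, k, 5, 2, use_rope=False), attn_score(q, k, 5000, 2, use_rope=False))
```

One common intuition, offered as context rather than as the paper's argument, is as follows. With the rotation switched off, there are no position-dependent angles that the model could meet for the first time beyond its training length. A causal decoder can still pick up word order through its attention mask.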

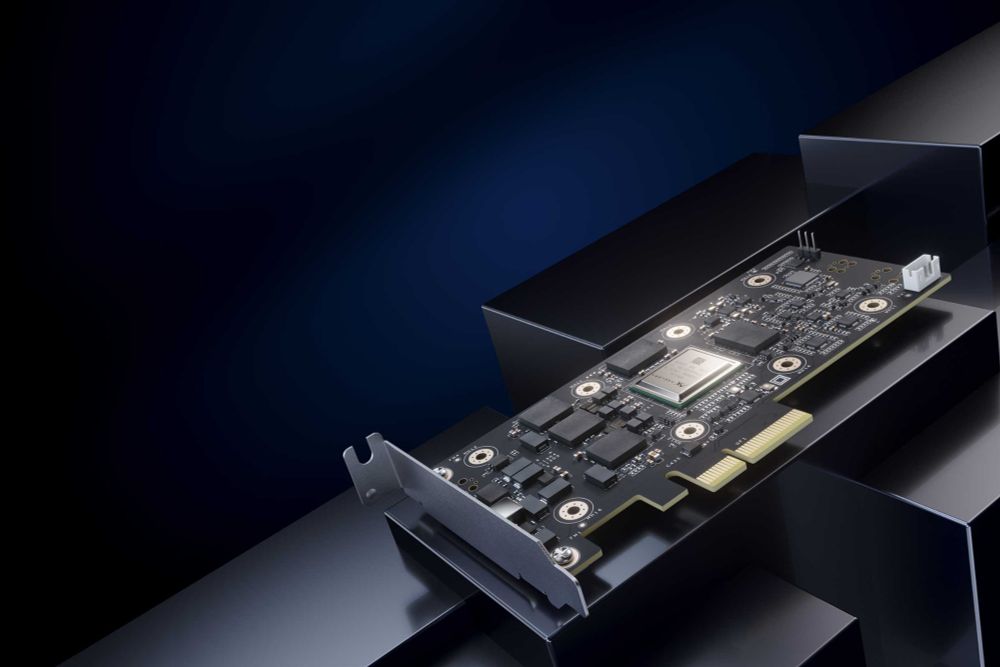

Announcing our next-gen chip: axelera.ai/news/axelera...

• 628 TOPS

• in-memory compute (IMC) matrix multipliers <- this is Axelera's tech edge

• 16 RISC-V vector cores that will handle pre- and post-processing directly on chip.

Full report here: www.hottech.com/industry-cov...

16.07.2025 15:51 — 👍 1 🔁 0 💬 0 📌 0

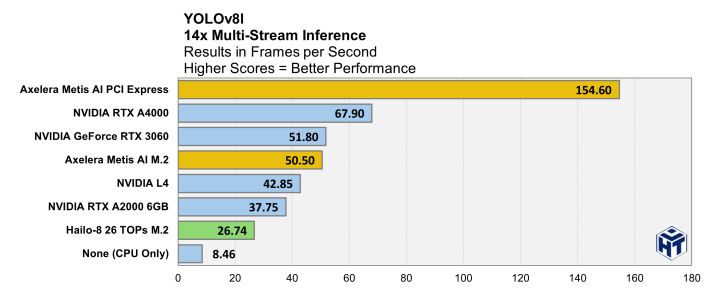

and check out the full report, which has data about more modern models like YOLOv8L. For that model, compared to the best NVIDIA card tested, Axelera's Metis is:

- 230% faster

- 330% more power efficient

and also about 3x cheaper

The secret sauce works!

www.forbes.com/sites/daveal...

4-chip Metis accelerator PCIe card coming soon:

store.axelera.ai/collections/...

PCIe and M.2 Metis boards available now:

- PCIe: axelera.ai/ai-accelerat...

- M.2: axelera.ai/ai-accelerat...

50+ models pre-configured in the model zoo are ready to run:

github.com/axelera-ai-h...

Blog post by A-Tang Fan and Doug Watt about Axelera.ai's Voyager SDK: community.axelera.ai/product-upda...

30.05.2025 14:35 — 👍 2 🔁 1 💬 1 📌 0

I am impressed and humbled by what the Axelera team was able to bring to market in only three years with the Metis chip and Voyager SDK. And it's just the beginning. We have an exciting roadmap ahead! axelera.ai

07.05.2025 14:22 — 👍 2 🔁 0 💬 0 📌 0

The explosion of new AI models and capabilities in advanced vision, speech recognition, language models, reasoning, etc., needs a novel, energy-efficient approach to AI acceleration to deliver truly magical AI experiences, at the edge and in the datacenter.

07.05.2025 14:22 — 👍 2 🔁 0 💬 1 📌 0

I'm delighted to share that I joined the Axelera team this week to deliver the next generation AI compute platform. axelera.ai

07.05.2025 14:21 — 👍 3 🔁 0 💬 2 📌 0

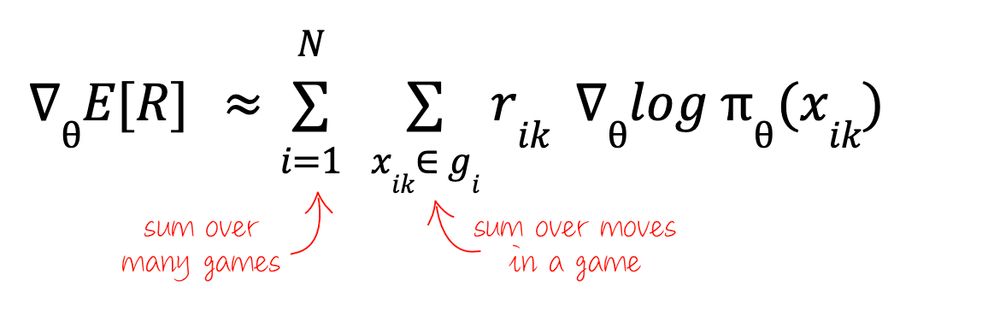

You'll see it in this form in Karpathy's original "Pong from Pixels" post karpathy.github.io/2016/05/31/rl/ as well as in my "RL without a PhD" video from a while ago youtu.be/t1A3NTttvBA.... They also explore a few basic reward assignment strategies.

Have fun, don't despair and RL!

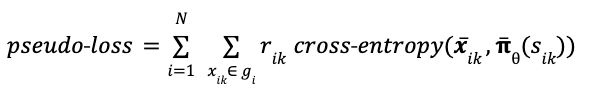

It is usually written in vector form, using the cross-entropy function. This time, I use 𝛑̅(sᵢₖ) for the *vector* of all move probabilities predicted from game state sᵢₖ, while 𝒙̅ᵢₖ is the one-hot encoded *vector* representing the move actually played in game i, move k.

11.03.2025 01:40 — 👍 0 🔁 0 💬 1 📌 0
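Here is a small numeric check of the vector form. The cross-entropy between the one-hot move vector 𝒙̅ᵢₖ and the predicted probabilities 𝛑̅(sᵢₖ) keeps only one term: −log of the probability of the move actually played. So the reward-weighted cross-entropy and the reward-weighted negative log-probability give the same number. The sketch assumes the usual "loss to minimize" sign. The helper names and all numbers are made up for illustration.

```python
import math


def cross_entropy(one_hot, probs):
    """H(x, p) = -sum_j x_j * log(p_j). Zero entries of x are skipped (0 * log p = 0)."""
    return -sum(x * math.log(p) for x, p in zip(one_hot, probs) if x > 0)


def one_hot(index, size):
    """One-hot vector of length `size` with a 1 at `index`."""
    return [1.0 if j == index else 0.0 for j in range(size)]


# Made-up data for three moves: predicted probability vectors pi(s_ik),
# index of the move actually played, and the reward r_ik for that move.
move_probs = [[0.2, 0.5, 0.3], [0.6, 0.1, 0.3], [0.25, 0.25, 0.5]]
played = [1, 0, 2]
rewards = [0.0, 0.0, 1.0]  # zeros, then the final reward

# Vector form: reward-weighted cross-entropy against one-hot targets.
vector_form = sum(r * cross_entropy(one_hot(a, len(p)), p)
                  for p, a, r in zip(move_probs, played, rewards))
# Scalar form: reward-weighted negative log-probability of the played moves.
scalar_form = -sum(r * math.log(p[a]) for p, a, r in zip(move_probs, played, rewards))
print(vector_form, scalar_form)  # the two forms agree
```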

One more thing: In modern autograd libraries like PyTorch or JAX, the RL gradient can be computed from the following “pseudo-loss”. Don’t try to find the meaning of this function: it does not have any. It’s just a function that has the gradient we want.

11.03.2025 01:40 — 👍 0 🔁 0 💬 1 📌 0
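In PyTorch or JAX, autograd differentiates the pseudo-loss for you. The dependency-free sketch below makes the same point with a hypothetical tabular softmax policy (one row of logits per state). Central finite differences stand in for autograd. The check shows that the gradient of −Σ rᵢₖ log 𝛑(xᵢₖ | sᵢₖ) is exactly minus the reward-weighted sum of ∇ log 𝛑. Minimizing the pseudo-loss therefore follows the policy gradient. The policy shape, the minus-sign convention, and the data are assumptions of this sketch.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pseudo_loss(theta, states, moves, rewards):
    """-sum_k r_k * log pi_theta(x_k | s_k); theta[s] holds the logits of state s.

    The value itself means nothing, only its gradient matters.
    """
    return -sum(r * math.log(softmax(theta[s])[a])
                for s, a, r in zip(states, moves, rewards))


def policy_gradient(theta, states, moves, rewards):
    """The gradient we want: sum_k r_k * grad log pi(x_k | s_k).

    For a softmax, d log pi(a|s) / d theta[s][j] = 1[j == a] - pi(j|s).
    """
    grad = [[0.0] * len(row) for row in theta]
    for s, a, r in zip(states, moves, rewards):
        p = softmax(theta[s])
        for j in range(len(p)):
            grad[s][j] += r * ((1.0 if j == a else 0.0) - p[j])
    return grad


def numerical_gradient(f, theta, eps=1e-6):
    """Central finite differences, standing in for what autograd would compute."""
    grad = [[0.0] * len(row) for row in theta]
    for s, row in enumerate(theta):
        for j in range(len(row)):
            plus = [t_row[:] for t_row in theta]
            minus = [t_row[:] for t_row in theta]
            plus[s][j] += eps
            minus[s][j] -= eps
            grad[s][j] = (f(plus) - f(minus)) / (2 * eps)
    return grad


theta = [[0.1, -0.3, 0.2], [0.0, 0.5, -0.5]]  # 2 states x 3 moves (made up)
states, moves, rewards = [0, 1, 0], [2, 1, 0], [0.0, 0.5, 1.0]

g_loss = numerical_gradient(lambda t: pseudo_loss(t, states, moves, rewards), theta)
g_want = policy_gradient(theta, states, moves, rewards)
# grad(pseudo-loss) == -(policy gradient), so gradient descent on it is gradient ascent on reward.
for row_loss, row_want in zip(g_loss, g_want):
    print([round(x, 6) for x in row_loss], [round(-x, 6) for x in row_want])
```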

So in conclusion, math tells us that Reinforcement Learning is possible, even in multi-turn games where you cannot differentiate across multiple moves. But math tells us nothing about how to do it in practice. Which is why it is hard.

11.03.2025 01:40 — 👍 0 🔁 0 💬 1 📌 0

What "rewards"? Well the "good" ones, that encourage the "correct" moves!

This is pretty much like a delicious recipe saying you should mix "great" ingredients in the "correct" proportions 😭. NOT HELPFUL AT ALL 🤬 !!!

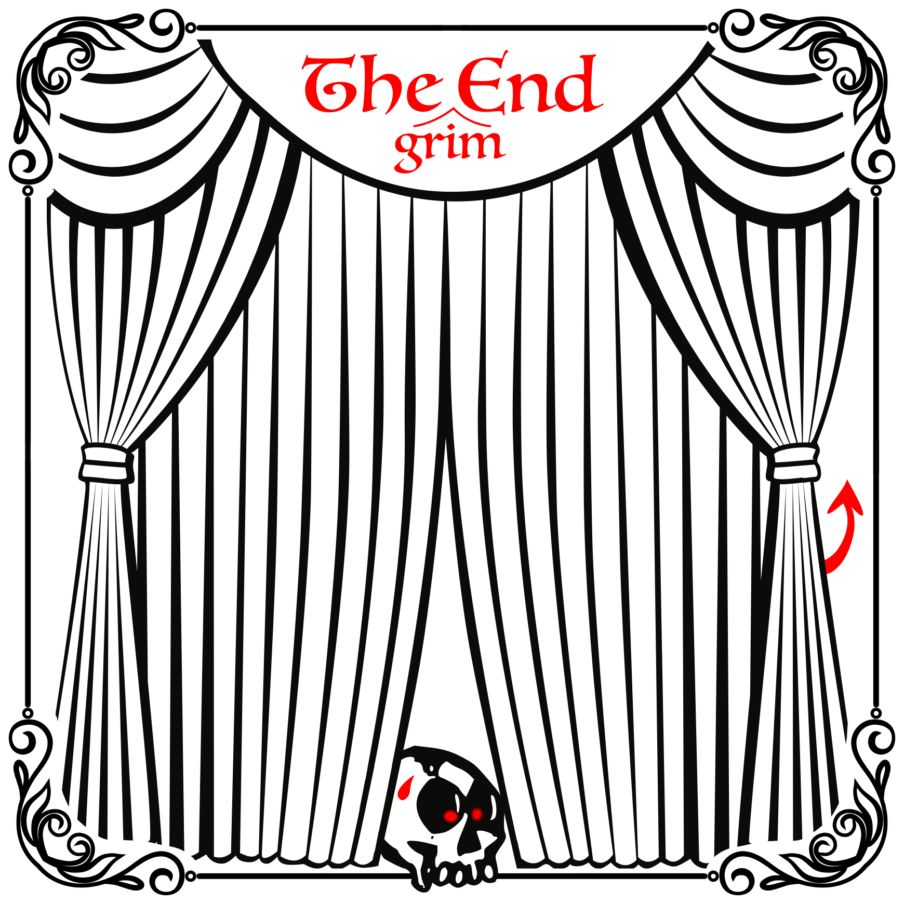

Now the bad news: what this equation really means is that the gradient we are looking for is the weighted sum of the gradients of our policy network's log-probabilities over many individual games and moves, weighted by an unspecified set of “rewards”.

11.03.2025 01:40 — 👍 0 🔁 0 💬 1 📌 0

We wanted to maximize the expected reward and managed to approximate the gradients from successive runs of the game, as played by our policy network. We can run backprop after all ... at least in theory 🙁.

11.03.2025 01:40 — 👍 1 🔁 0 💬 1 📌 0
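The weighted sum described above can be written in the thread's notation as follows. The notation is: policy 𝛑 with parameters θ, game i, move k, state sᵢₖ, played move xᵢₖ, and per-move reward rᵢₖ with the 1/N already folded in. This is a reconstruction from the surrounding text, and J(θ) is a name chosen here for the expected reward.

```latex
\nabla_\theta J(\theta) \;\approx\; \sum_{i} \sum_{k} r_{ik}\, \nabla_\theta \log \pi_\theta(x_{ik} \mid s_{ik})
```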

... string of zeros followed by the final reward - but we may have finer-grained rewarding strategies. The 1/N constant was folded into the rewards.

The good news: yay, this is computable 🎉🥳🎊! The expression only involves our policy network and our rewards.

For a more practical application, let's unroll the log-probabilities into individual moves using eq. (1) and rearrange a little. We use the fact that the log of a product is a sum of logs. I have also split the game reward into separate game steps rewards rᵢₖ - worst case a ...

11.03.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0
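Here is the "log of a product is a sum of logs" step, checked numerically. As in the thread, the probability of a game is treated as the product of the policy's probabilities for the moves played. The per-move probabilities below are made up. The second part shows a practical bonus of working in log space: for long games, the raw product underflows to zero in floating point, while the sum of logs stays finite.

```python
import math

# Made-up probabilities pi(x_ik | s_ik) of the moves played in one short game.
move_probs = [0.9, 0.4, 0.7, 0.25]

log_of_product = math.log(math.prod(move_probs))
sum_of_logs = sum(math.log(p) for p in move_probs)
print(log_of_product, sum_of_logs)  # equal up to rounding

# A long game: 2000 moves, each played with probability 0.5.
long_game = [0.5] * 2000
print(math.prod(long_game))                  # 0.0: the product underflows
print(sum(math.log(p) for p in long_game))  # about -1386.29, still usable
```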

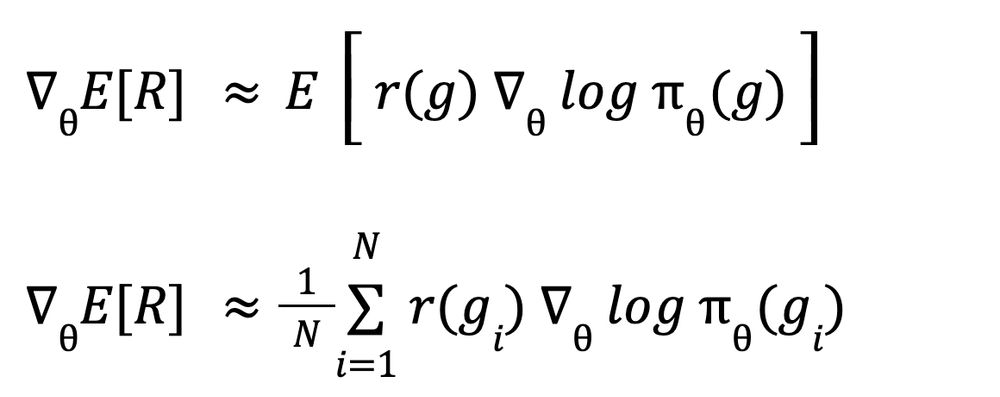

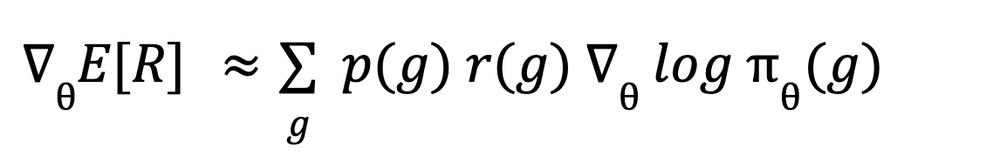

But look, this is a sum of a probability × some value. That's an expectation! Which means that instead of computing it directly, we can approximate it from multiple games gᵢ:

11.03.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0
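Here is the same step spelled out. Both names are chosen here rather than taken from the thread: p_θ(g) is the probability of a game g, and R(g) is its reward. The first line is the expectation. The second line is its sample approximation from N played games g_1 to g_N.

```latex
\sum_{g} p_\theta(g)\, R(g)\, \nabla_\theta \log p_\theta(g)
  \;=\; \mathbb{E}_{g \sim p_\theta}\!\left[ R(g)\, \nabla_\theta \log p_\theta(g) \right]
\\
\;\approx\; \frac{1}{N} \sum_{i=1}^{N} R(g_i)\, \nabla_\theta \log p_\theta(g_i)
```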

And now we can start approximating like crazy - and abandon any pretense of doing exact math 😅.

First, we use our policy network 𝛑 to approximate the move probabilities.

Combining the last two equations we get:

11.03.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

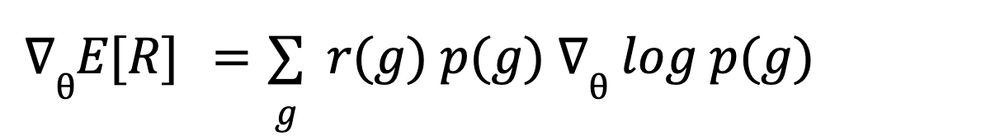

We now use a mathematical cheap trick based on the fact that the derivative of log(x) is 1/x. With gradients, this cheap trick reads:

11.03.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0
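Here is the trick in symbols, applied to the probability p_θ(g) of a game g (notation chosen here). The chain rule gives the left identity, valid wherever p_θ(g) > 0. Multiplying both sides by p_θ(g) gives the form used in the derivation.

```latex
\nabla_\theta \log p_\theta(g) = \frac{\nabla_\theta p_\theta(g)}{p_\theta(g)}
\qquad\Longrightarrow\qquad
\nabla_\theta p_\theta(g) = p_\theta(g)\, \nabla_\theta \log p_\theta(g)
```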

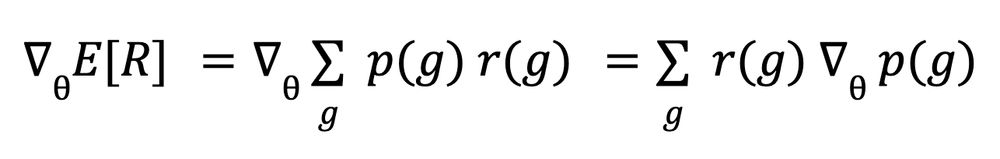

To maximize the expectation (3), we compute its gradient. The notation ∇ is the "gradient", or list of partial derivatives with respect to the parameters θ. Differentiation is a linear operation, so we can move it inside the sum Σ. Also, rewards do not depend on θ, so:
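Here is a sketch of this step. It assumes the expectation (3) is a sum over possible games g of the game probability p_θ(g) times the game reward R(g); both names are chosen here.

```latex
\nabla_\theta \sum_{g} p_\theta(g)\, R(g)
  \;=\; \sum_{g} \nabla_\theta \big( p_\theta(g)\, R(g) \big)
  \;=\; \sum_{g} R(g)\, \nabla_\theta p_\theta(g)
```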

11.03.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

For example, for a single die, the possible values are 1, 2, 3, 4, 5, 6, and the probability of each outcome is p=⅙, which gives us an expectation of 3.5. And you get roughly the same number by rolling the die many times and averaging the results. It's the "law of large numbers".

11.03.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0
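Here is a quick simulation of the dice example. The exact expectation sums each face times its probability ⅙, and the average of many simulated rolls lands close to it. The number of rolls and the random seed are arbitrary choices.

```python
import random
from fractions import Fraction

faces = [1, 2, 3, 4, 5, 6]

# Exact expectation: sum of value x probability, with p = 1/6 for every face.
exact = sum(Fraction(face, 6) for face in faces)
print(exact)  # 7/2, i.e. 3.5

# Law of large numbers: the average of many rolls approaches the expectation.
rng = random.Random(0)
n_rolls = 100_000
average = sum(rng.choice(faces) for _ in range(n_rolls)) / n_rolls
print(average)  # close to 3.5
```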