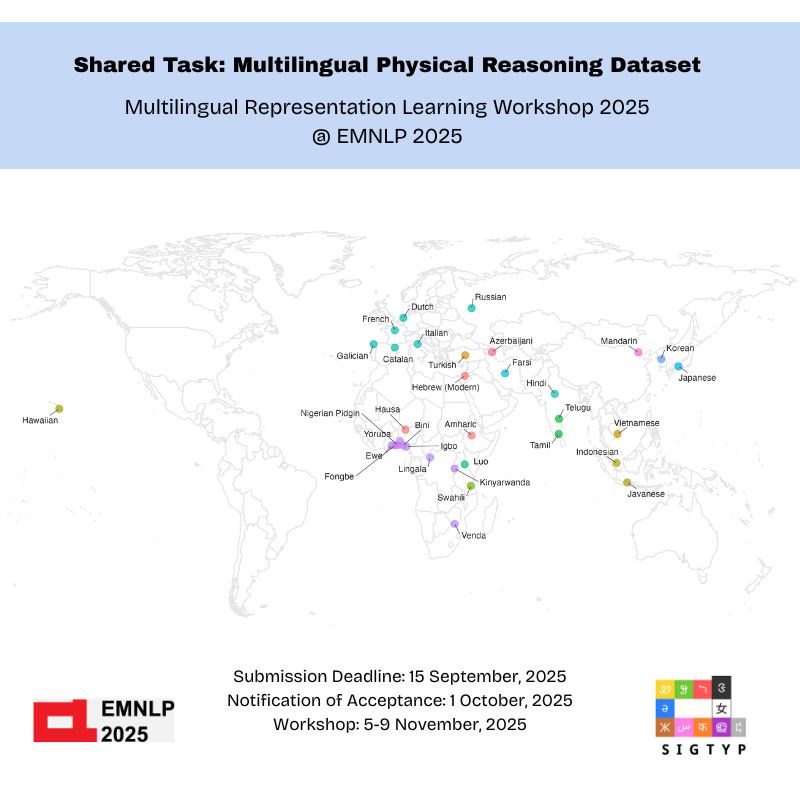

Very very excited that Global PIQA is out! This was an incredible effort by 300+ researchers from 65 countries. The resulting dataset is a high-quality, participatory, and culturally-specific benchmark for over 100 languages.

29.10.2025 16:08 — 👍 3 🔁 0 💬 0 📌 0

Did you know?

❌77% of language models on @hf.co are not tagged for any language

📈For 95% of languages, most models are multilingual

🚨88% of models with tags are trained on English

In a new blog post, @tylerachang.bsky.social and I dig into these trends and why they matter! 👇

19.09.2025 14:53 — 👍 13 🔁 2 💬 1 📌 0

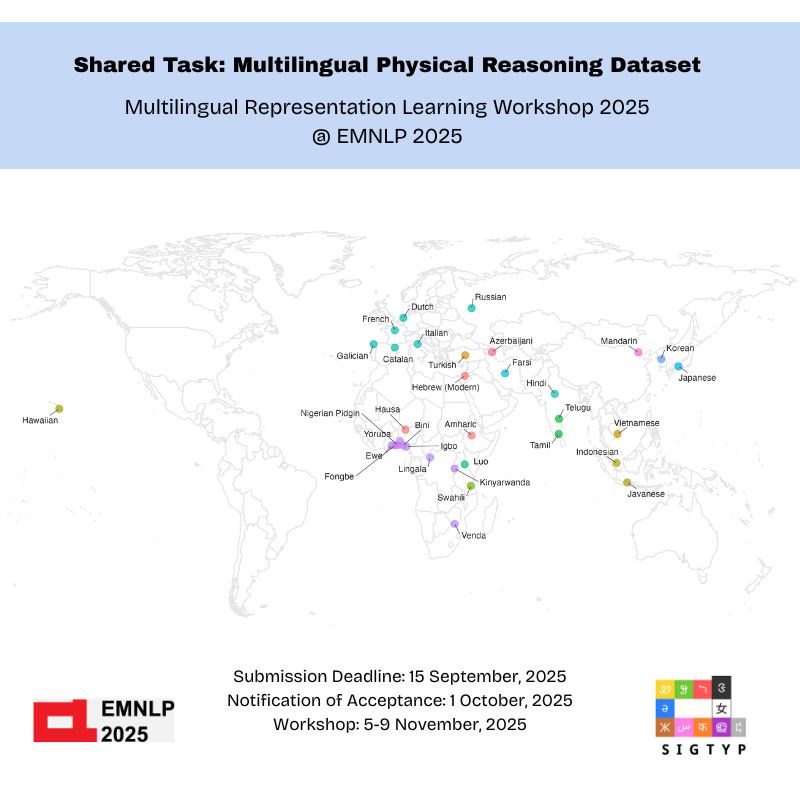

We have over 200 volunteers now for 90+ languages! We are hoping to expand the diversity of our language coverage and are still looking for participants who speak these languages. Check out how to get involved below, and please help us spread the word!

18.08.2025 15:52 — 👍 3 🔁 3 💬 1 📌 0

With six weeks left before the deadline, we have had over 50 volunteers sign up to contribute for over 30 languages. If you don’t see your language represented on the map, this is your sign to get involved!

05.08.2025 15:13 — 👍 3 🔁 2 💬 1 📌 1

We're organizing a shared task to develop a multilingual physical commonsense reasoning evaluation dataset! Details on how to submit are at: sigtyp.github.io/st2025-mrl.h...

25.06.2025 03:28 — 👍 4 🔁 0 💬 0 📌 0

of course, there are some scenarios where you would want to really check all the training examples, e.g. for detecting data contamination, or for rare facts, etc.

25.04.2025 14:44 — 👍 1 🔁 0 💬 0 📌 0

I think you could still make interesting inferences about what *types* of training examples influence the target! You'd essentially be getting a sample of the actual top-k retrievals

25.04.2025 14:43 — 👍 1 🔁 0 💬 1 📌 0

The biggest compute cost is computing gradients for every training example (~= cost of training) -- happy to chat more, especially if you know anyone interested in putting together an open-source implementation!

25.04.2025 08:57 — 👍 1 🔁 0 💬 1 📌 0
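To make the cost point concrete, here is a minimal sketch of generic gradient-similarity attribution on a toy logistic regression model. It is not TrackStar and not the paper's implementation; the model, the data, and every function name below are assumptions chosen for illustration. It only shows why the work scales with the training set: one gradient has to be computed per training example before anything can be ranked against the query.

```python
import math

def example_gradient(weights, features, label):
    """Gradient of the logistic loss for a single example w.r.t. the weights."""
    logit = sum(w * x for w, x in zip(weights, features))
    prob = 1.0 / (1.0 + math.exp(-logit))
    return [(prob - label) * x for x in features]

def cosine(u, v):
    """Cosine similarity between two gradient vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def top_k_influential(weights, train_set, query, k=2):
    """Rank training examples by gradient similarity to the query (hypothetical helper)."""
    query_grad = example_gradient(weights, *query)
    scores = []
    for idx, (features, label) in enumerate(train_set):
        # One gradient per training example: this loop is the dominant cost.
        grad = example_gradient(weights, features, label)
        scores.append((cosine(grad, query_grad), idx))
    scores.sort(reverse=True)
    return [idx for _, idx in scores[:k]]

# Toy data, made up for illustration only.
weights = [0.0, 0.0]
train_set = [
    ([1.0, 0.0], 1),
    ([0.0, 1.0], 1),
    ([1.0, 0.1], 1),
    ([-1.0, 0.0], 1),
]
query = ([1.0, 0.0], 1)
top = top_k_influential(weights, train_set, query, k=2)
```

In this toy setup the examples whose gradients point the same way as the query's come out on top; scoring only a random subset of `train_set` would give a sample of that ranking rather than the full top-k.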

Presenting our work on training data attribution for pretraining this morning: iclr.cc/virtual/2025... -- come stop by in Hall 2/3 #526 if you're here at ICLR!

24.04.2025 23:55 — 👍 4 🔁 0 💬 1 📌 1

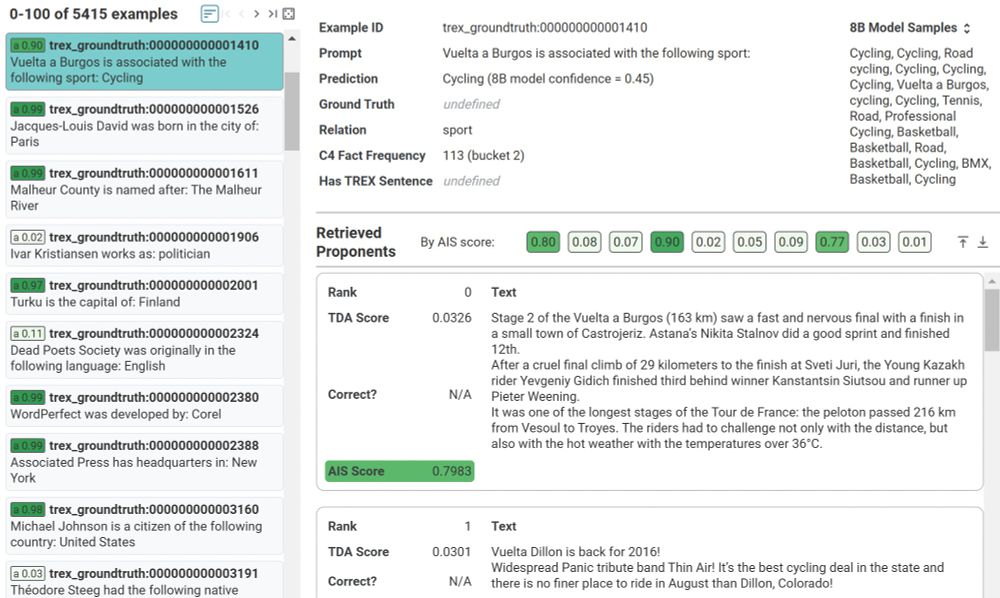

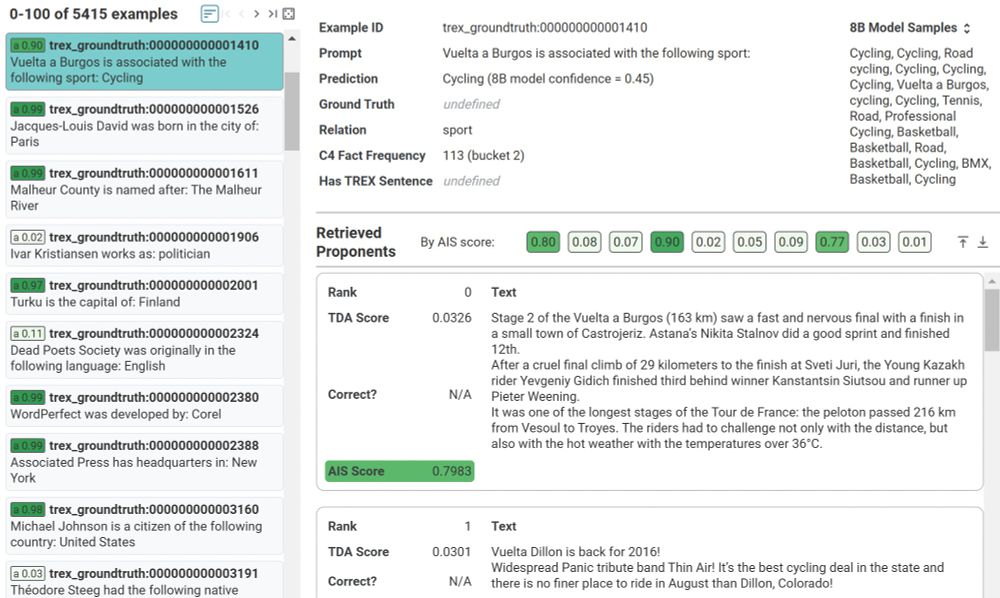

Play with it yourself: see influential pretraining examples from our method for facts, factual errors, commonsense reasoning, arithmetic, and open-ended generation: github.com/PAIR-code/pr...

13.12.2024 18:57 — 👍 5 🔁 0 💬 1 📌 0

As models increase in size and pretraining tokens, "influence" more closely resembles "attribution". I.e. "better" models do seem to rely more on entailing examples.

13.12.2024 18:57 — 👍 3 🔁 0 💬 1 📌 0

Many influential examples do not entail a fact, but instead appear to reflect priors on common entities for certain relation types, or guesses based on first or last names.

13.12.2024 18:57 — 👍 3 🔁 0 💬 1 📌 0

In a fact tracing task, we find that classical retrieval methods (e.g. BM25) are still much better for retrieving examples that *entail* factual predictions (factual "attribution"), but TDA methods retrieve examples that have greater *influence* on model predictions.

13.12.2024 18:57 — 👍 3 🔁 0 💬 1 📌 0

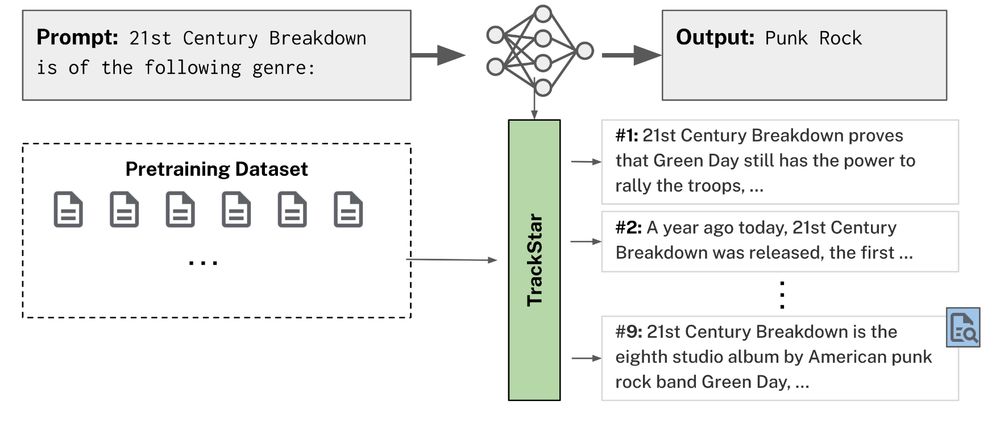

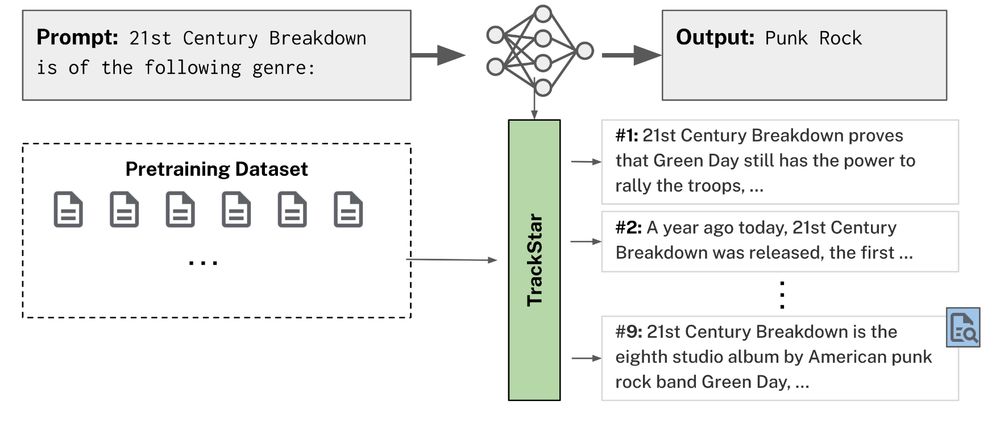

Our method, TrackStar, refines existing gradient-based approaches to scale to much larger settings: over 100x more queries and a 30x larger retrieval corpus than previous work at this model size.

13.12.2024 18:57 — 👍 3 🔁 0 💬 1 📌 0

We scaled training data attribution (TDA) methods ~1000x to find influential pretraining examples for thousands of queries in an 8B-parameter LLM over the entire 160B-token C4 corpus!

medium.com/people-ai-re...

13.12.2024 18:57 — 👍 36 🔁 8 💬 2 📌 5

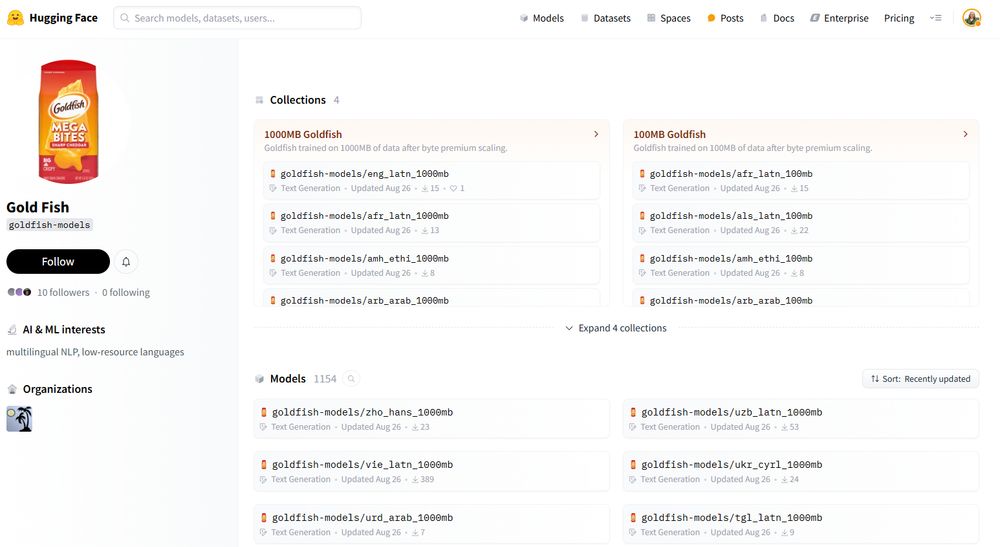

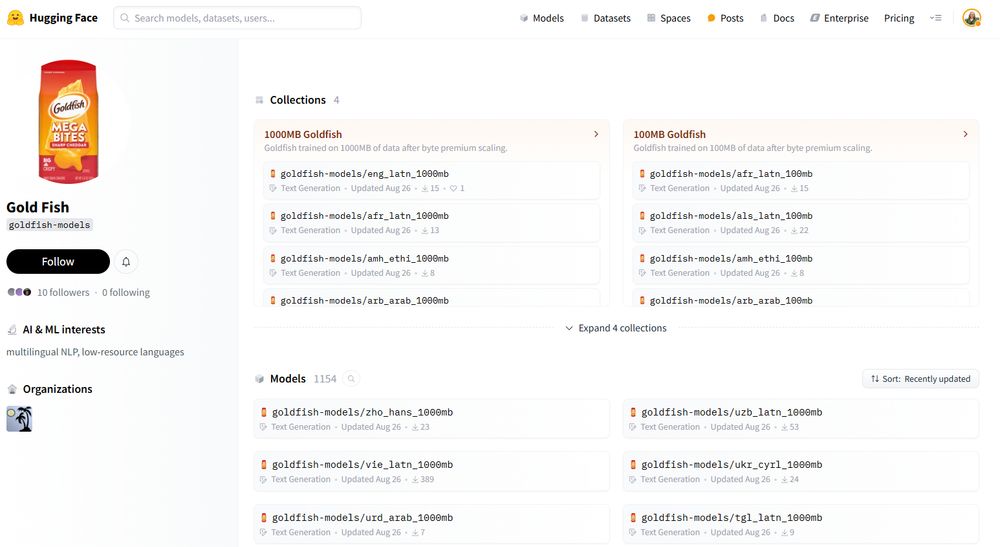

The Goldfish models were trained on byte-premium-scaled dataset sizes: if a language needs more bytes to encode a given amount of information, we scaled up the dataset according to the byte premium. Read about how we (@tylerachang.bsky.social) trained the models: arxiv.org/pdf/2408.10441

22.11.2024 15:03 — 👍 5 🔁 1 💬 1 📌 0
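A rough sketch of what byte-premium scaling could look like in code, assuming the byte premium is a ratio of bytes needed relative to a reference language. The function name and the premium values below are hypothetical placeholders, not numbers from the paper.

```python
def scaled_dataset_bytes(base_bytes: int, byte_premium: float) -> int:
    """Bytes to collect so a language carries roughly as much content
    as `base_bytes` of the reference language (assumed premium = 1.0)."""
    if byte_premium <= 0:
        raise ValueError("byte_premium must be positive")
    return round(base_bytes * byte_premium)

# Hypothetical premiums for illustration only; real values come from measurement.
example_premiums = {"lang_a": 1.0, "lang_b": 1.5, "lang_c": 2.0}
budgets = {
    lang: scaled_dataset_bytes(10_000_000, premium)
    for lang, premium in example_premiums.items()
}
```

Under these assumptions, a language with a premium of 2.0 gets twice the byte budget of the reference language, so the amount of underlying content is kept roughly comparable across languages.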

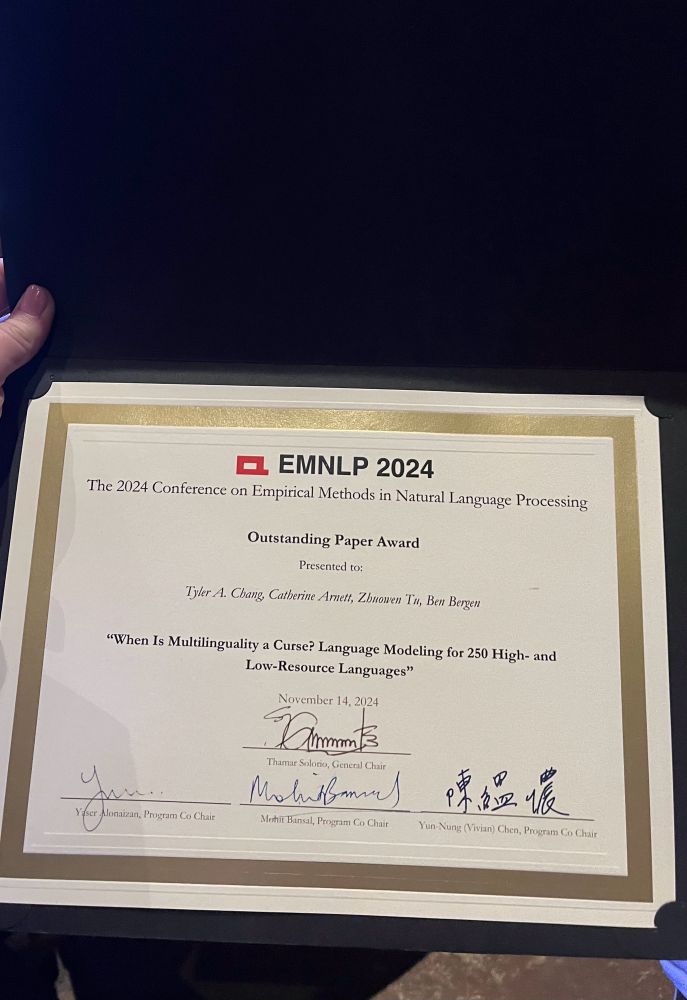

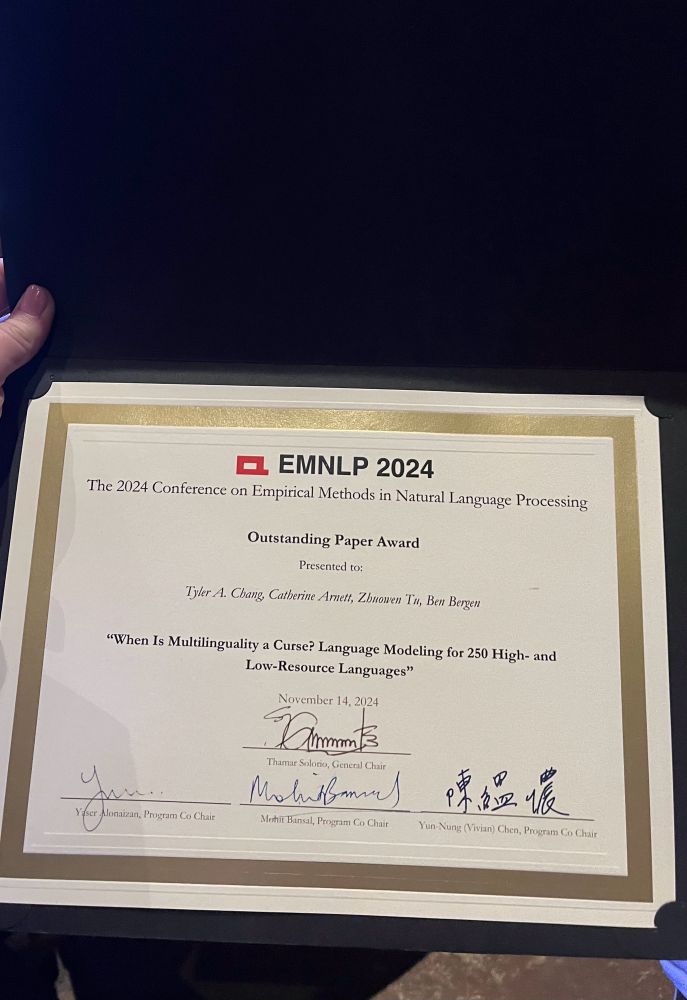

My paper with Tyler Chang received an outstanding paper award at #EMNLP2024! Thanks to the award committee for the recognition!

15.11.2024 02:23 — 👍 32 🔁 1 💬 1 📌 0

I work on speech and language technologies at Google. I like languages, history, maps, traveling, cycling, and buying way too many books.

The 5th edition of our workshop will be co-located with EMNLP in Suzhou, China!

https://sigtyp.github.io/ws2025-mrl.html

Asst Prof. @ UCSD | PI of LeM🍋N Lab | Former Postdoc at ETH Zürich, PhD @ NYU | computational linguistics, NLProc, CogSci, pragmatics | he/him 🏳️‍🌈

alexwarstadt.github.io

Philosopher of Artificial Intelligence & Cognitive Science

https://raphaelmilliere.com/

Human–computer interaction researcher. PhD from University of Minnesota. Tacoma, WA. Mastodon: zwlevonian@hci.social

Aspiring 10x reverse engineer at Google DeepMind

Research Scientist at GoogleDeepMind (formerly at Google Research). UPenn graduate.

PhD Student at @gronlp.bsky.social 🐮, core dev @inseq.org. Interpretability ∩ HCI ∩ #NLProc.

gsarti.com

Currently Google DeepMind. Previously NVIDIA, Whisper.ai, Skydio, IBM Research, Stanford, UCSD, and Clemson.

AI Evaluation and Interpretability @MicrosoftResearch, Prev PhD @CMU.

#NLP Postdoc at Mila - Quebec AI Institute & McGill University

mariusmosbach.com

Junior Professor CNRS (previously EPFL, TU Darmstadt) -- AI Interpretability, causal machine learning, and NLP. Currently visiting @NYU

https://peyrardm.github.io

I hate slop and yet I work on generative models

PhD from UT Austin, applied scientist @ AWS

He/him • https://bostromk.net

Research Scientist, People + AI Research (PAIR) team at Google DeepMind.

Research Scientist @ Google Deepmind. Opinions are my own.

minsukchang.com

natural language processing and computational linguistics at google deepmind.

Research Scientist at Google DeepMind, interested in multiagent reinforcement learning, game theory, games, and search/planning.

Lover of Linux 🐧, coffee ☕, and retro gaming. Big fan of open-source. #gohabsgo 🇨🇦

For more info: https://linktr.ee/sharky6000

Staff Research Scientist, People + AI Research (PAIR) team at Google DeepMind. Interpretability, analysis, and visualizations for LLMs. Opinions my own.

iftenney.github.io