Our new paper in #PNAS (bit.ly/4fcWfma) presents a surprising finding—when words change meaning, older speakers rapidly adopt the new usage; inter-generational differences are often minor.

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

29.07.2025 12:05 — 👍 34 🔁 17 💬 3 📌 2

🚨Job Alert

W2 (TT W3) Professorship in Computer Science "AI for People & Society"

@saarland-informatics-campus.de/@uni-saarland.de is looking to appoint an outstanding individual in the field of AI for people and society who has made significant contributions in one or more of the following areas:

18.07.2025 07:11 — 👍 14 🔁 18 💬 1 📌 0

Kaiserslautern, Germany

📣 Life update: Thrilled to announce that I’ll be starting as faculty at the Max Planck Institute for Software Systems this Fall!

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

22.07.2025 04:12 — 👍 91 🔁 12 💬 13 📌 4

I'm at #ICML in Vancouver this week, hit me up if you want to chat about pre-training experiments or explainable machine learning.

You can find me at these posters:

Tuesday: How Much Can We Forget about Data Contamination? icml.cc/virtual/2025...

14.07.2025 14:49 — 👍 1 🔁 1 💬 1 📌 0

Congrats!

14.07.2025 20:49 — 👍 1 🔁 0 💬 0 📌 0

Paper title "The Non-Linear Representation Dilemma: Is Causal Abstraction Enough for Mechanistic Interpretability?" with the paper's graphical abstract showing how more powerful alignment maps between a DNN and an algorithm allow more complex features to be found and more "accurate" abstractions.

Mechanistic interpretability often relies on *interventions* to study how DNNs work. Are these interventions enough to guarantee the features we find are not spurious? No!⚠️ In our new paper, we show many mech int methods implicitly rely on the linear representation hypothesis🧵

14.07.2025 12:15 — 👍 66 🔁 12 💬 1 📌 1

Have you ever wondered whether a few instances of data contamination really lead to benchmark overfitting?🤔 Then our latest #ICML paper about the effect of data contamination on LLM evals might be for you!🚀

Paper: arxiv.org/abs/2410.03249

👇🧵

08.07.2025 06:42 — 👍 12 🔁 1 💬 1 📌 2

💡Beyond math/code, instruction following with verifiable constraints is well suited to learning with RLVR.

But the set of constraints and verifier functions is limited and most models overfit on IFEval.

We introduce IFBench to measure model generalization to unseen constraints.

03.07.2025 21:06 — 👍 29 🔁 5 💬 1 📌 1

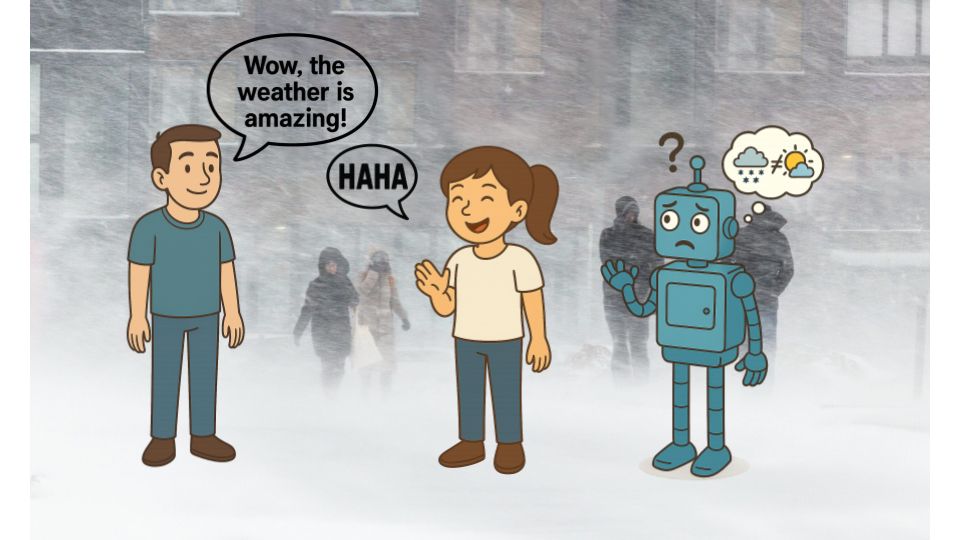

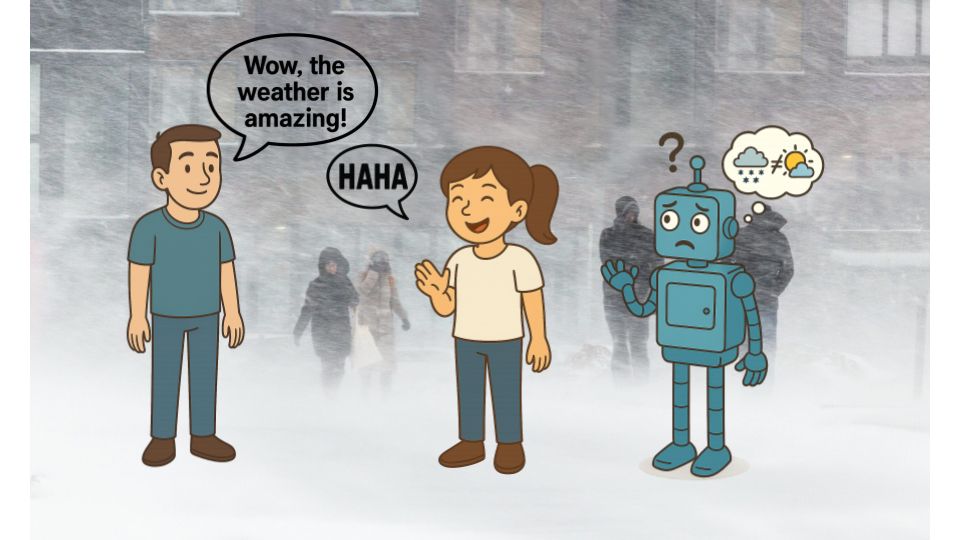

A blizzard is raging through Montreal when your friend says “Looks like Florida out there!” Humans easily interpret irony, while LLMs struggle with it. We propose a 𝘳𝘩𝘦𝘵𝘰𝘳𝘪𝘤𝘢𝘭-𝘴𝘵𝘳𝘢𝘵𝘦𝘨𝘺-𝘢𝘸𝘢𝘳𝘦 probabilistic framework as a solution.

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

26.06.2025 15:52 — 👍 15 🔁 7 💬 1 📌 4

02 | Gauthier Gidel: Bridging Theory and Deep Learning, Vibes at Mila, and the Effects of AI on Art

Behind the Research of AI · Episode

Started a new podcast with @tomvergara.bsky.social!

Behind the Research of AI:

We look behind the scenes, beyond the polished papers 🧐🧪

If this sounds fun, check out our first "official" episode with the awesome Gauthier Gidel from @mila-quebec.bsky.social:

open.spotify.com/episode/7oTc...

25.06.2025 15:54 — 👍 17 🔁 6 💬 1 📌 0

Interested in shaping the progress of responsible AI and meeting leading researchers in the field? SoLaR@COLM 2025 is looking for paper submissions and reviewers!

🤖 ML track: algorithms, math, computation

📚 Socio-technical track: policy, ethics, human participant research

17.06.2025 17:46 — 👍 8 🔁 1 💬 1 📌 1

"Build the web for agents, not agents for the web"

This position paper argues that rather than forcing web agents to adapt to UIs designed for humans, we should develop a new interface optimized for web agents, which we call Agentic Web Interface (AWI).

arxiv.org/abs/2506.10953

14.06.2025 04:17 — 👍 6 🔁 4 💬 0 📌 0

Excited to share the results of my recent internship!

We ask 🤔

What subtle shortcuts are VideoLLMs taking on spatio-temporal questions?

And how can we instead curate shortcut-robust examples at large scale?

We release: MVPBench

Details 👇🔬

13.06.2025 14:47 — 👍 16 🔁 5 💬 1 📌 0

Congrats Sarah!! They are lucky to have you 💪

13.06.2025 21:10 — 👍 0 🔁 0 💬 1 📌 0

New paper in Interspeech 2025 🚨

@interspeech.bsky.social

A Robust Model for Arabic Dialect Identification using Voice Conversion

Paper 📝 arxiv.org/pdf/2505.24713

Demo 🎙️ https://shorturl.at/rrMm6

#Arabic #SpeechTech #NLProc #AI #Speech #ArabicDialects #Interspeech2025 #ArabicNLP

10.06.2025 10:07 — 👍 1 🔁 2 💬 1 📌 0

Do LLMs hallucinate randomly? Not quite.

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

06.06.2025 18:09 — 👍 46 🔁 18 💬 1 📌 3

Congrats Elinor!

30.05.2025 20:58 — 👍 1 🔁 0 💬 0 📌 0

Chain-of-Thought (CoT) reasoning lets LLMs solve complex tasks, but long CoTs are expensive. How short can they be while still working? Our new ICML paper tackles this foundational question.

05.05.2025 12:25 — 👍 12 🔁 2 💬 2 📌 0

Title slide: Processing Trans Languaging - Vagrant Gautam (they/xe), Saarland University, with a very brightly patterned background featuring colourful people and math symbols.

Come to my keynote tomorrow at the first official @queerinai.com workshop at #NAACL2025 to hear about how trans languaging is complex and cool, and how this makes it extra difficult to process computationally. I will have SO many juicy examples!

03.05.2025 20:52 — 👍 44 🔁 14 💬 3 📌 0

Deadline extended! ⏳

The Actionable Interpretability Workshop at #ICML2025 has moved its submission deadline to May 19th. More time to submit your work 🔍🧠✨ Don’t miss out!

03.05.2025 20:00 — 👍 4 🔁 3 💬 0 📌 0

Check out Gaurav's video on their #NAACL paper and find @adadtur.bsky.social at the conference 👇

02.05.2025 01:41 — 👍 11 🔁 1 💬 0 📌 0

I'll be at #NAACL2025:

🖇️To present my paper "Superlatives in Context", showing how the interpretation of superlatives is highly context-dependent and often implicit, and how LLMs handle such semantic underspecification

🖇️And we will present RewardBench on Friday

Reach out if you want to chat!

27.04.2025 20:00 — 👍 28 🔁 5 💬 1 📌 1

👋🇨🇦🇩🇪

27.04.2025 18:51 — 👍 1 🔁 0 💬 0 📌 0

I’m really excited about Diffusion Steering Lens, an intuitive and elegant new “logit lens” technique for decoding the attention and MLP blocks of vision transformers!

Vision is much more expressive than language, so some new mech interp rules apply:

25.04.2025 13:36 — 👍 11 🔁 3 💬 0 📌 0

💡 New ICLR paper! 💡

"On Linear Representations and Pretraining Data Frequency in Language Models":

We provide an explanation for when & why linear representations form in large (or small) language models.

Led by @jackmerullo.bsky.social, w/ @nlpnoah.bsky.social & @sarah-nlp.bsky.social

25.04.2025 01:55 — 👍 42 🔁 12 💬 3 📌 3

Logo for MIB: A Mechanistic Interpretability Benchmark

Lots of progress in mech interp (MI) lately! But how can we measure when new mech interp methods yield real improvements over prior work?

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

23.04.2025 18:15 — 👍 51 🔁 15 💬 1 📌 6

Paper title of the year so far. I will be back ... have to read the paper now. Great work @saxon.me !

21.04.2025 23:43 — 👍 5 🔁 0 💬 1 📌 0

BamNLP Research Group at the University of Bamberg, Germany

We work on #nlproc #nlp #computationalpsychology #deeplearning #emotions #sentiment #argumentmining

PI: @romanklinger.de

Website: https://www.uni-bamberg.de/en/nlproc/#

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Anti-cynic. Towards a weirder future. Reinforcement Learning, Autonomous Vehicles, transportation systems, the works. Asst. Prof at NYU

https://emerge-lab.github.io

https://www.admonymous.co/eugenevinitsky

Prof. at Saarland University 🇩🇪 | Learning Sciences*HCI | Faculty Associate at BKC Harvard | prev: CMU HCII, StanfordGSE | co-designing tech to support human learning | choice making, SRL, learning with AI, ITS |

tomonag.org

🇯🇵->🇺🇸->🇩🇪

Assistant professor at NUS. Scaling cooperative intelligence & infrastructure for an increasingly automated future. PhD @ MIT ProbComp / CoCoSci. Pronouns: 祂/伊

Neuroscientist, in theory.

Studying sleep and navigation in 🧠s and 💻s.

Wu Tsai Investigator, Assistant Professor of Neuroscience at Yale.

An emergent property of a few billion neurons, their interactions with each other and the world over ~1 century.

PhD candidate @Technion | NLP

PhD student in Interpretable Machine Learning at @tuberlin.bsky.social & @bifold.berlin

https://web.ml.tu-berlin.de/author/laura-kopf/

Senior Researcher Machine Learning at BIFOLD | TU Berlin 🇩🇪

Prev at IPAM | UCLA | BCCN

Interpretability | XAI | NLP & Humanities | ML for Science

1000 researchers, 2800 students, 5 renowned institutes & 24 academic programs - computer science at Saarland University. 🖥️

Impressum: https://bit.ly/484nGeh

Philosopher of Artificial Intelligence & Cognitive Science

https://raphaelmilliere.com/

PhD student at Cambridge University. Causality & language models. Passionate musician, professional debugger.

pietrolesci.github.io

AI/ML Applied Research Intern at Adobe | NLP-ing (Research Masters) at MILA/McGill

MSc @mila-quebec.bsky.social @mcgill-nlp.bsky.social

Research Fellow @ RBC Borealis

Model analysis, interpretability, reasoning and hallucination

Studying model behaviours to make them better :))

Looking for Fall '26 PhD

Researcher @Microsoft; PhD @Harvard; Incoming Assistant Professor @MIT (Fall 2026); Human-AI Interaction, Worker-Centric AI

zbucinca.github.io

Assistant Professor of Computer Science @JohnsHopkins,

CS Postdoc @Stanford,

PhD @EPFL,

Computational Social Science, NLP, AI & Society

https://kristinagligoric.com/

Cognitive scientist at Stanford. Open science advocate. Symbolic Systems Program director. Bluegrass picker, slow runner, dad. http://langcog.stanford.edu

Research collaboration among 5 universities in Denmark: Aalborg University, IT University of Copenhagen, University of Aarhus, Technical University of Denmark, and the University of Copenhagen.

https://www.aicentre.dk/

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

@guyd33 on the X-bird site. Machine learning researcher at Jane Street. Formerly, PhD student at NYU, cognitive science x AI, specifically goal and task representations in minds/machines. Otherwise, cooking, playing ultimate frisbee, and making hot sauces.