Daniel Beaglehole, David Holzmüller, Adityanarayanan Radhakrishnan, Mikhail Belkin: xRFM: Accurate, scalable, and interpretable feature learning models for tabular data https://arxiv.org/abs/2508.10053 https://arxiv.org/pdf/2508.10053 https://arxiv.org/html/2508.10053

15.08.2025 06:32 — 👍 3 🔁 3 💬 0 📌 0

Thanks!

30.07.2025 12:35 — 👍 0 🔁 0 💬 0 📌 0

I got 3rd out of 691 in a tabular Kaggle competition – with only neural networks! 🥉

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

29.07.2025 11:10 — 👍 11 🔁 2 💬 2 📌 0

Excited to have co-contributed the SquashingScaler, which implements the robust numerical preprocessing from RealMLP!

24.07.2025 16:00 — 👍 8 🔁 4 💬 0 📌 0
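For readers wondering what "robust numerical preprocessing" can look like, here is a minimal standard-library sketch. It assumes the scheme described in the RealMLP paper: center each column at its median, scale by the interquartile range (falling back to the min-max range, then to zero for constant columns), and apply a smooth clip that keeps values inside (-3, 3). The function name `squash_column` and the parameter `max_abs` are hypothetical, and the actual skrub SquashingScaler may differ in details.

```python
import math
import statistics


def squash_column(values, max_abs=3.0):
    """Robustly scale one numeric column, then smoothly clip it to (-max_abs, max_abs).

    Illustrative sketch only; not the skrub or pytabkit implementation.
    """
    med = statistics.median(values)
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    if q3 != q1:
        scale = 1.0 / (q3 - q1)  # usual case: interquartile range
    elif max(values) != min(values):
        scale = 2.0 / (max(values) - min(values))  # fallback: full range
    else:
        scale = 0.0  # constant column maps to all zeros
    squashed = []
    for v in values:
        z = scale * (v - med)
        # Smooth clipping: roughly the identity near 0, saturating at +/- max_abs.
        squashed.append(z / math.sqrt(1.0 + (z / max_abs) ** 2))
    return squashed


column = [1.0, 2.0, 3.0, 4.0, 5.0, 1000.0]  # one extreme outlier
squashed = squash_column(column)
constant = squash_column([7.0, 7.0, 7.0])
```

The outlier at 1000 ends up just below 3 instead of dominating the column, while the ordering of the values is preserved.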

Is it because mathematicians think in terms of the number of assumptions that are satisfied, while physicists think in terms of the number of things that satisfy them?

23.07.2025 21:04 — 👍 1 🔁 0 💬 1 📌 0

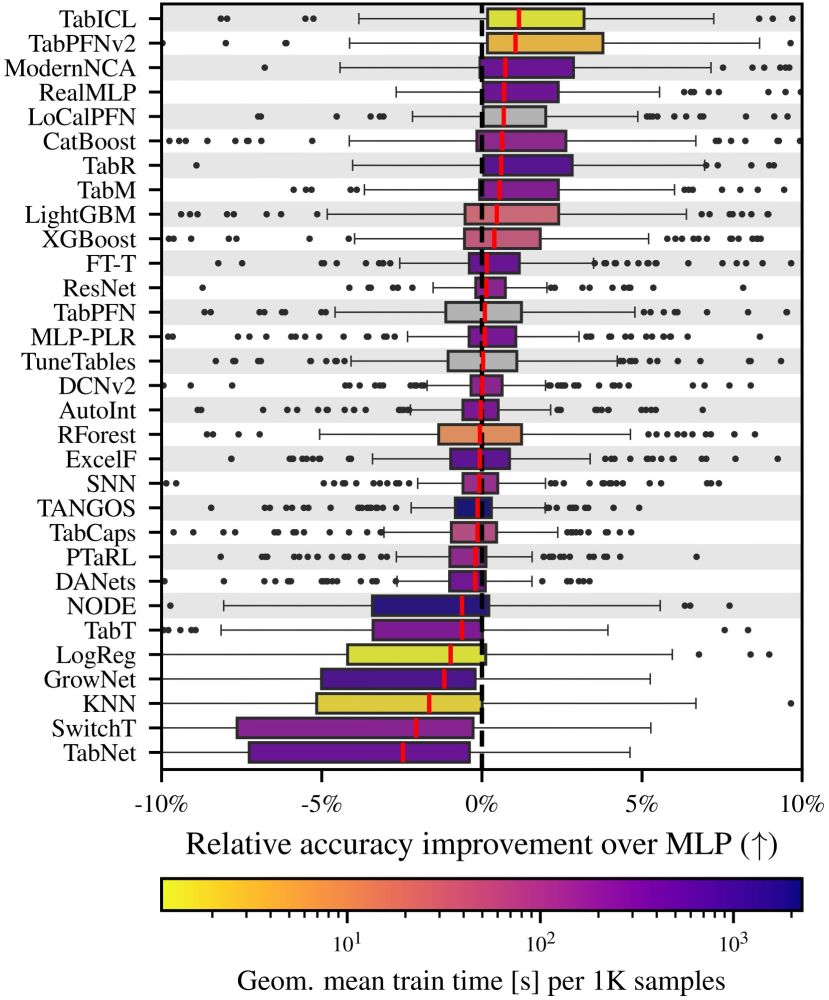

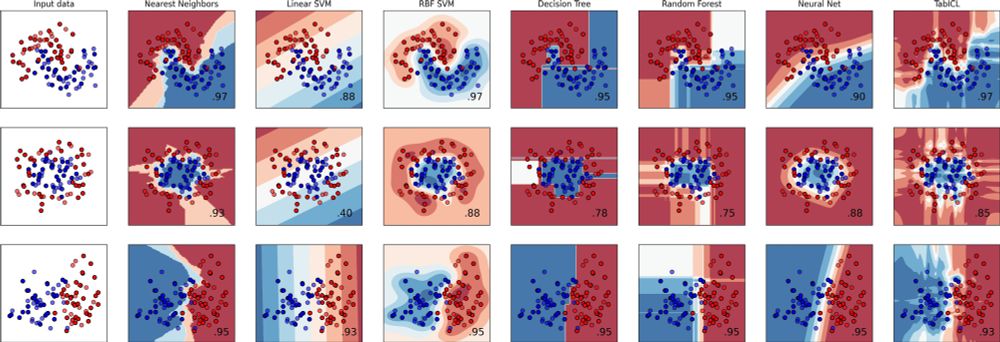

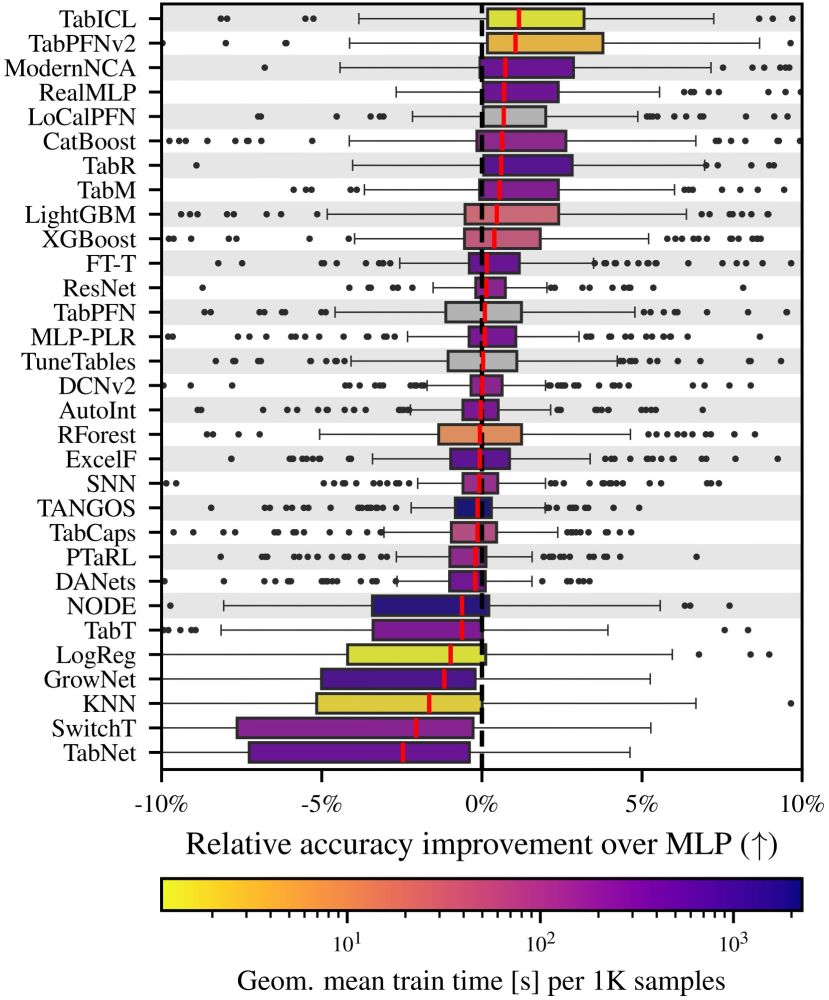

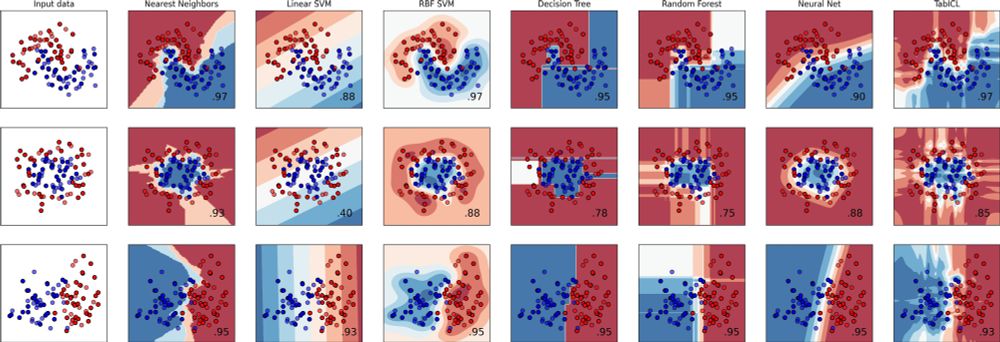

👨🎓🧾✨#icml2025 Paper: TabICL, A Tabular Foundation Model for In-Context Learning on Large Data

With Jingang Qu, @dholzmueller.bsky.social, and Marine Le Morvan

TL;DR: a well-designed architecture and pretraining give the best tabular learner, and a more scalable one

On top, it's 100% open source

1/9

09.07.2025 18:41 — 👍 50 🔁 15 💬 1 📌 0

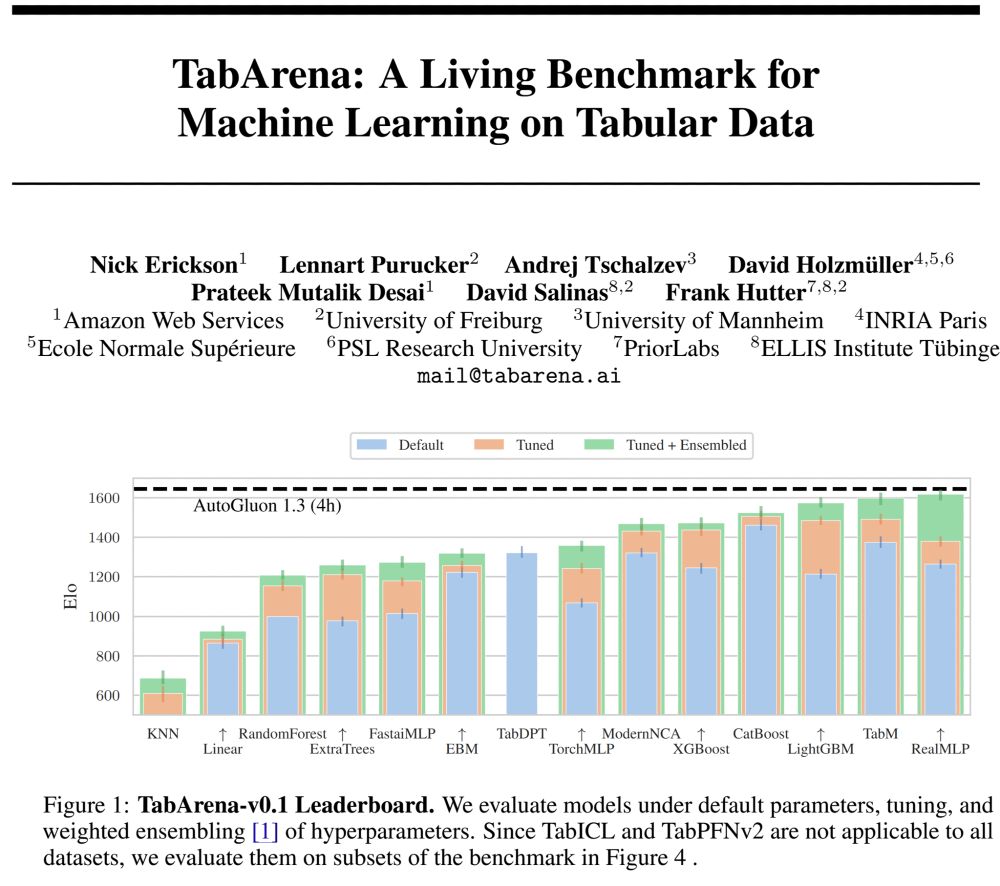

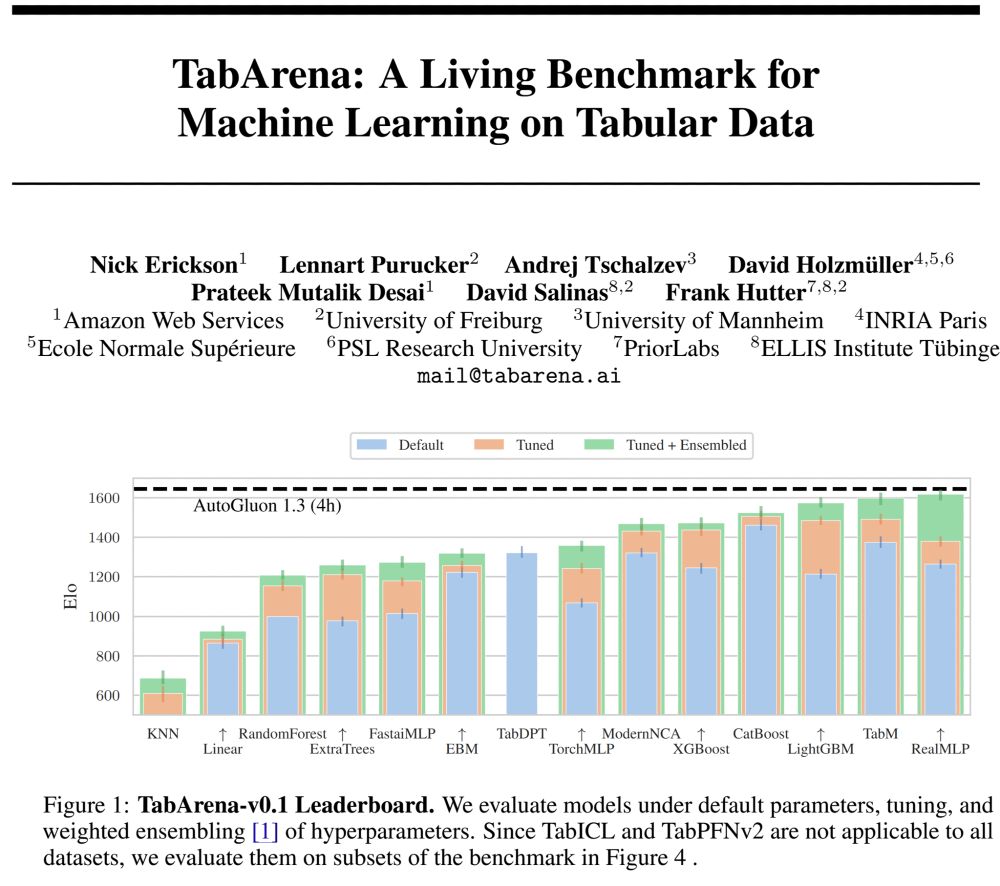

🚨What is SOTA on tabular data, really? We are excited to announce 𝗧𝗮𝗯𝗔𝗿𝗲𝗻𝗮, a living benchmark for machine learning on IID tabular data with:

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

23.06.2025 10:14 — 👍 13 🔁 8 💬 1 📌 0

📝 The skrub TextEncoder brings the power of HuggingFace language models to embed text features in tabular machine learning, for all those use cases that involve text-based columns.

28.05.2025 08:43 — 👍 6 🔁 3 💬 2 📌 0

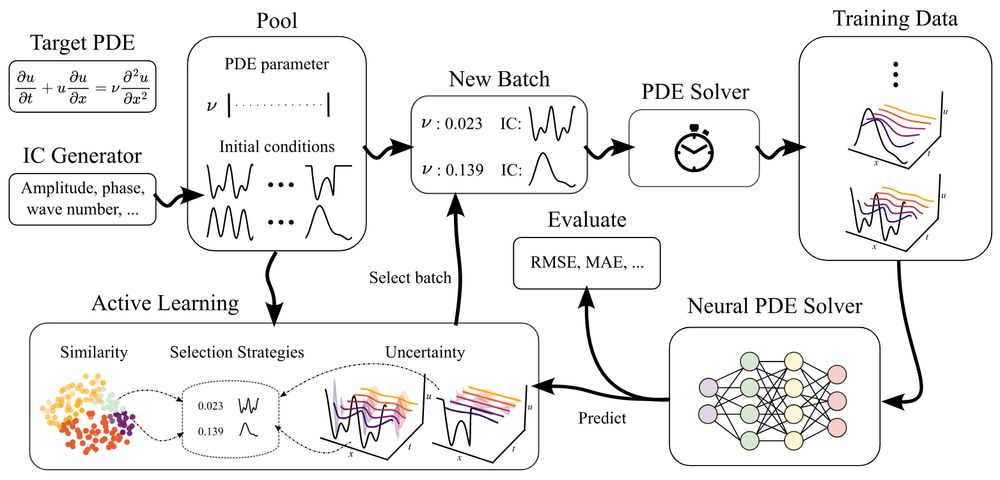

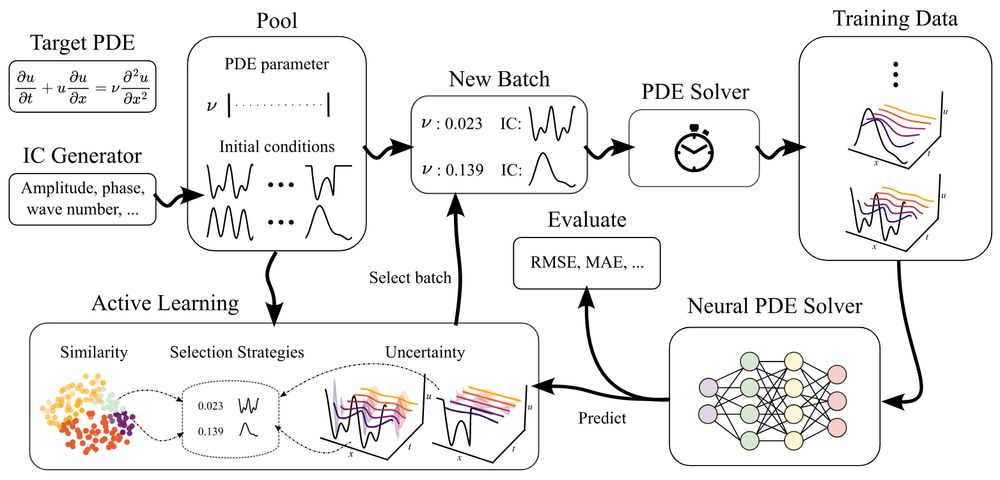

🚨ICLR poster in 1.5 hours, presented by @danielmusekamp.bsky.social :

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

24.04.2025 00:38 — 👍 11 🔁 2 💬 1 📌 0

The skrub TableReport is a lightweight tool that gives you a rich overview of a table quickly and easily.

✅ Filter columns

🔎 Look at each column's distribution

📊 Get a high level view of the distributions through stats and plots, including correlated columns

🌐 Export the report as HTML

23.04.2025 11:49 — 👍 6 🔁 4 💬 1 📌 1

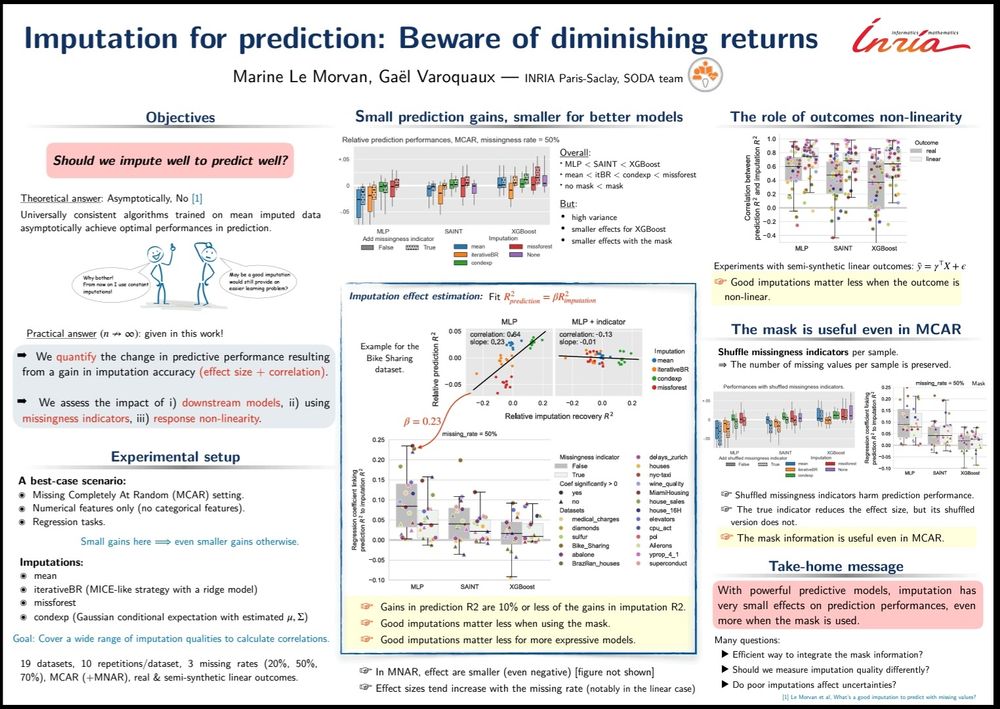

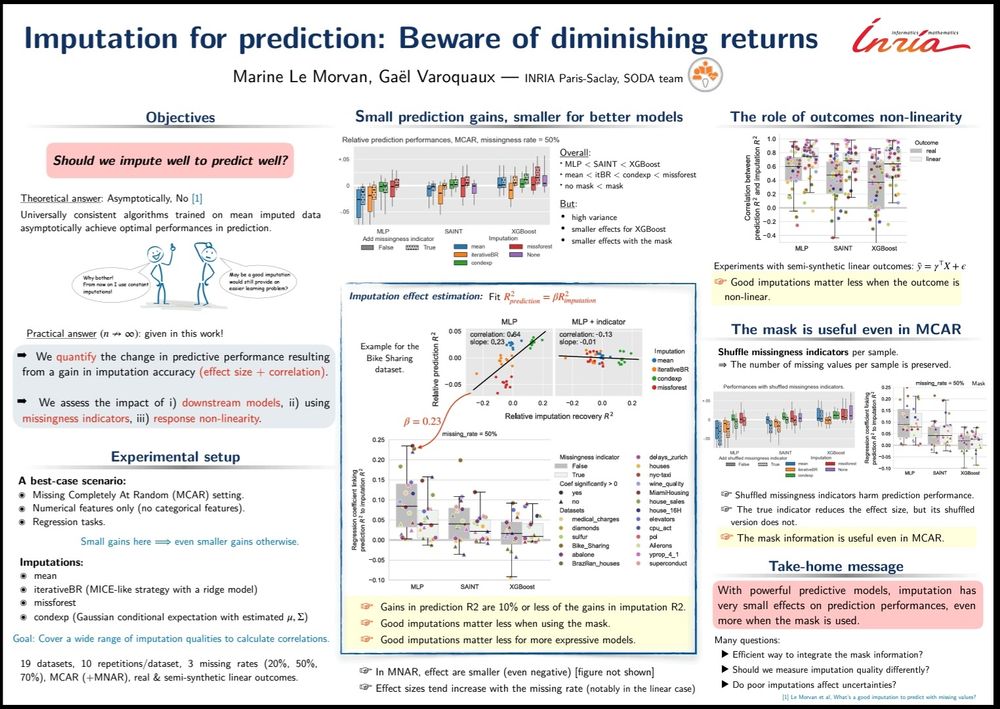

#ICLR2025 Marine Le Morvan presents "Imputation for prediction: beware of diminishing returns": poster Thu 24th

arxiv.org/abs/2407.19804

Concludes 6 years of research on prediction with missing values: Imputation is useful but improvements are expensive, while better learners yield easier gains.

23.04.2025 07:55 — 👍 48 🔁 8 💬 1 📌 0

Details about the seminar talk titled TabICL: A Tabular Foundation Model for In-Context Learning on Large Data by Marine Le Morvan

Excited to share the new monthly Table Representation Learning (TRL) Seminar under the ELLIS Amsterdam TRL research theme! To recur every 2nd Friday.

Who: Marine Le Morvan, Inria (in-person)

When: Friday 11 April 4-5pm (+drinks)

Where: L3.36 Lab42 Science Park / Zoom

trl-lab.github.io/trl-seminar/

02.04.2025 09:42 — 👍 12 🔁 3 💬 0 📌 1

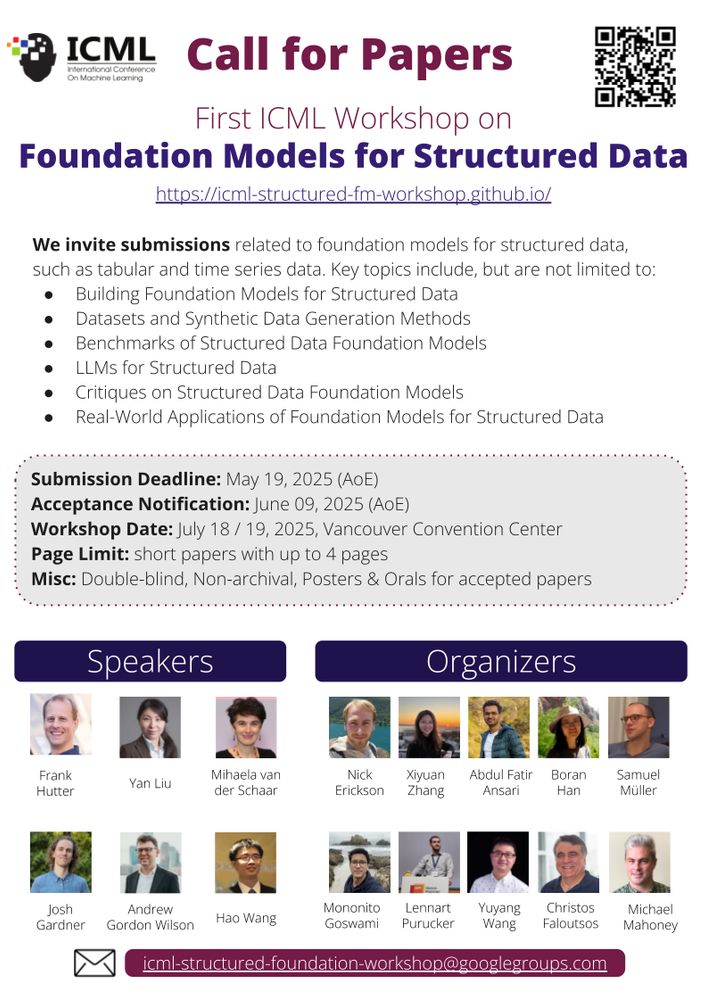

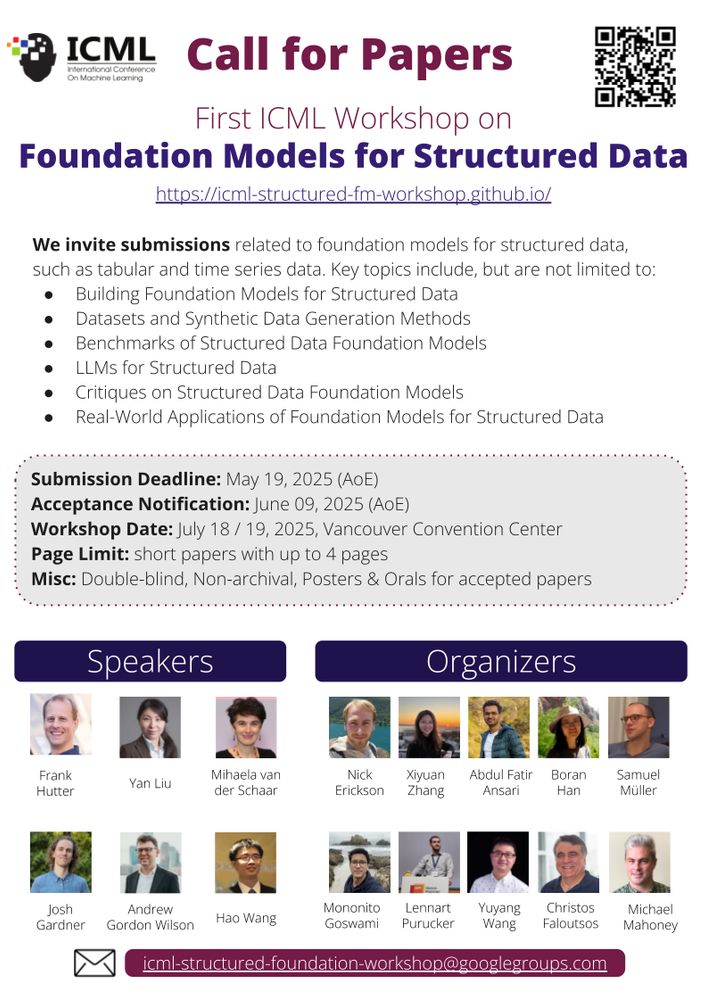

We are excited to announce #FMSD: "1st Workshop on Foundation Models for Structured Data" has been accepted to #ICML 2025!

Call for Papers: icml-structured-fm-workshop.github.io

25.03.2025 17:59 — 👍 16 🔁 10 💬 0 📌 2

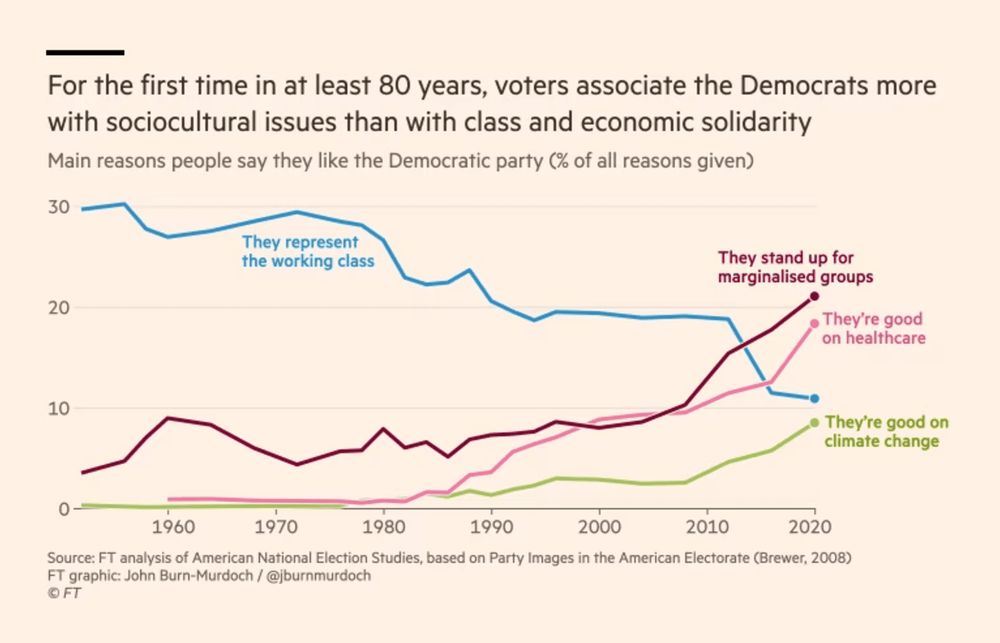

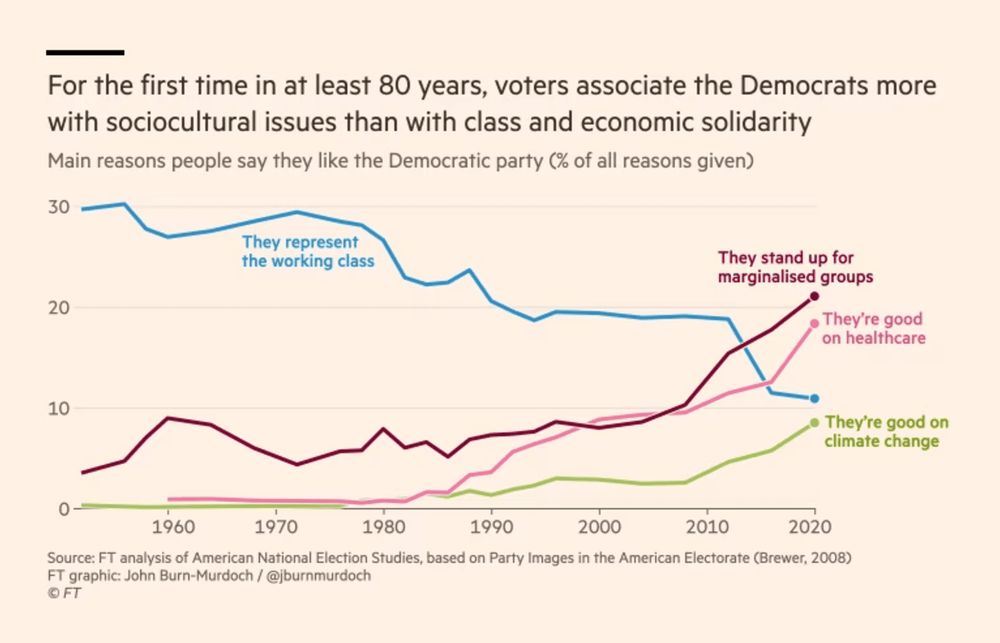

Trying something new:

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

20.11.2024 17:02 — 👍 1592 🔁 463 💬 92 📌 96

🚀Continuing the spotlight series with the next @iclr-conf.bsky.social MLMP 2025 Oral presentation!

📝LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators

📷 Join us on April 27 at #ICLR2025!

#AI #ML #ICLR #AI4Science

18.03.2025 08:12 — 👍 2 🔁 2 💬 1 📌 0

Links:

www.kaggle.com/competitions...

www.kaggle.com/competitions...

Link to the repo: github.com/dholzmueller...

PS: The newest pytabkit version now includes multiquantile regression for RealMLP and a few other improvements.

bsky.app/profile/dhol...

10.03.2025 15:53 — 👍 1 🔁 0 💬 0 📌 0

Rohlik Sales Forecasting Challenge

Use historical product sales data to predict future sales.

Practitioners are often sceptical of academic tabular benchmarks, so I am elated to see that our RealMLP model outperformed boosted trees in two 2nd place Kaggle solutions, for a $10,000 forecasting challenge and a research competition on survival analysis.

10.03.2025 15:53 — 👍 7 🔁 1 💬 1 📌 0

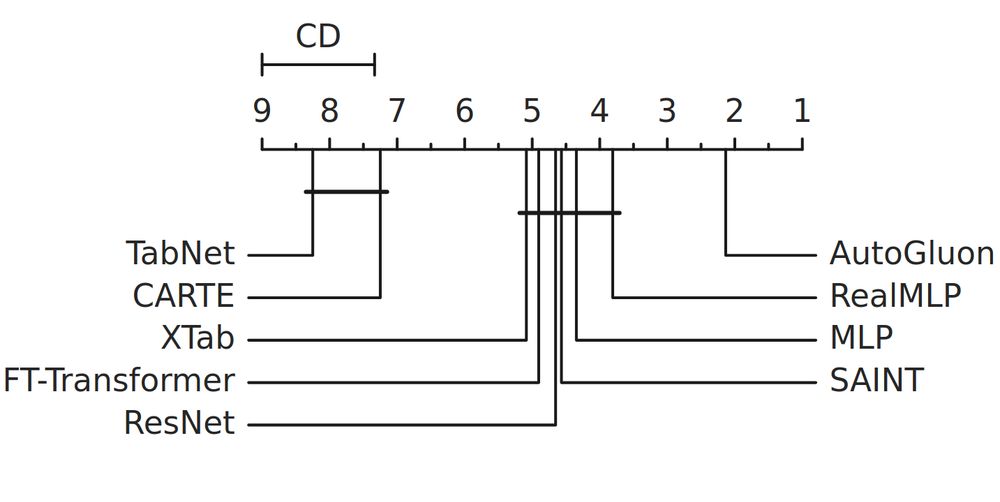

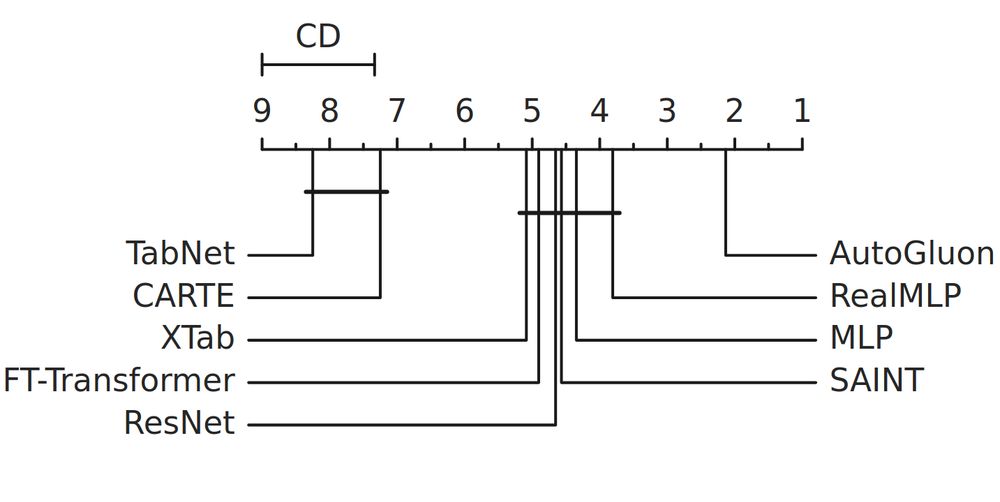

A new tabular classification benchmark provides another independent evaluation of our RealMLP. RealMLP is the best classical DL model, although some other recent baselines are missing. TabPFN is better on small datasets and boosted trees on larger datasets, though.

04.03.2025 14:25 — 👍 2 🔁 0 💬 1 📌 0

What about work on adaptive learning rates (in the sense of convergence rates, not step sizes) that studies methods with hyperparameter optimization on a holdout set to achieve optimal/good convergence rates simultaneously for different classes of functions? E.g.

projecteuclid.org/journals/ann...

12.02.2025 07:31 — 👍 0 🔁 0 💬 0 📌 0

By the way, I think an intercept in this case is necessary because the logistic regression model does not have an intercept. For more realistic models that can learn an intercept themselves, I think an intercept for TS is probably not very important.

08.02.2025 11:21 — 👍 2 🔁 0 💬 0 📌 0

Finally, if you just want to have the best performance for a given (large) time budget, AutoGluon combines many tabular models. It does not include some of the latest models (yet), but has a very good CatBoost, for example, and will likely outperform individual models.

07.02.2025 14:39 — 👍 1 🔁 0 💬 0 📌 0

The library offers the same for XGBoost and LightGBM. Plus, the library includes some of the best tabular DL models like RealTabR, TabR, RealMLP, and TabM that could also be interesting to try. (ModernNCA is also very good but not included.)

07.02.2025 14:39 — 👍 1 🔁 0 💬 1 📌 0

Using my library github.com/dholzmueller...

you could, for example, use CatBoost_TD_Regressor(n_cv=5), which will use better default parameters for regression, train five models in a cross-validation setup, select the best iteration for each, and ensemble them.

07.02.2025 14:39 — 👍 0 🔁 0 💬 1 📌 0
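To make the recipe behind `CatBoost_TD_Regressor(n_cv=5)` concrete (cross-validation, best-iteration selection per fold, then ensembling), here is a toy standard-library sketch. It is not pytabkit's or CatBoost's implementation: it boosts decision stumps on a single synthetic feature, and every name in it (`fit_stump`, `fit_fold`, `cv_ensemble`, `LEARNING_RATE`, and so on) is hypothetical.

```python
import random
import statistics

LEARNING_RATE = 0.3  # shrinkage applied to every stump (assumed value)


def fit_stump(xs, residuals):
    """Return (threshold, left_mean, right_mean) minimizing squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = statistics.fmean(left), statistics.fmean(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    return best[1:]


def predict(stumps, x):
    return sum(LEARNING_RATE * (lm if x <= t else rm) for t, lm, rm in stumps)


def mse(stumps, xs, ys):
    return statistics.fmean((predict(stumps, x) - y) ** 2 for x, y in zip(xs, ys))


def fit_fold(train, valid, max_rounds=50):
    """Boost on `train`, then keep only the rounds up to the best validation MSE."""
    xs, ys = zip(*train)
    vx, vy = zip(*valid)
    stumps, best_n, best_err = [], 0, mse([], vx, vy)
    for n in range(1, max_rounds + 1):
        residuals = [y - predict(stumps, x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
        err = mse(stumps, vx, vy)
        if err < best_err:
            best_n, best_err = n, err
    return stumps[:best_n]  # truncate to the best iteration


def cv_ensemble(data, n_cv=5):
    """Train one model per fold, each validated on its held-out fold."""
    folds = [data[i::n_cv] for i in range(n_cv)]
    models = []
    for i in range(n_cv):
        train = [row for j, fold in enumerate(folds) if j != i for row in fold]
        models.append(fit_fold(train, folds[i]))
    return models


def ensemble_predict(models, x):
    return statistics.fmean(predict(m, x) for m in models)


# Synthetic regression task: y = x^2 plus a little noise.
rng = random.Random(0)
data = []
for _ in range(100):
    x = rng.uniform(-1.0, 1.0)
    data.append((x, x * x + rng.gauss(0.0, 0.1)))

models = cv_ensemble(data, n_cv=5)
ens_mse = statistics.fmean((ensemble_predict(models, x) - y) ** 2 for x, y in data)
```

Each fold's model is cut back to the round where its held-out error was lowest, which is the same idea as early stopping, and the final prediction averages the five truncated models.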

Interesting! Would be cool to have these datasets on OpenML as well so they are easy to use in tabular benchmarks.

Here are some more recommendations for stronger tabular baselines:

1. For CatBoost and XGBoost, you'd want at least early stopping to select the best iteration.

07.02.2025 14:39 — 👍 0 🔁 0 💬 1 📌 0
