with Julian Forsyth, @thomasfel.bsky.social, @matthewkowal.bsky.social, @csprofkgd.bsky.social

Demo: yorkucvil.github.io/UniversalSAE/

@hthasarathan.bsky.social

PhD student @YorkUniversity @LassondeSchool. I work on computer vision and interpretability.

🌌🛰️🔭Want to explore universal visual features? Check out our interactive demo of concepts learned from our #ICML2025 paper "Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment".

Come see our poster at 4pm on Tuesday in East Exhibition Hall A-B, E-1208!

Our work finding universal concepts in vision models is accepted at #ICML2025!!!

My first major conference paper with my wonderful collaborators and friends @matthewkowal.bsky.social @thomasfel.bsky.social

@Julian_Forsyth

@csprofkgd.bsky.social

Working with y'all is the best 🥹

Preprint ⬇️!!

Accepted at #ICML2025! Check out the preprint.

HUGE shoutout to Harry (1st PhD paper, in 1st year), Julian (1st ever, done as an undergrad), Thomas and Matt!

@hthasarathan.bsky.social @thomasfel.bsky.social @matthewkowal.bsky.social

Check out Neehar Kondapaneni's upcoming ICLR 2025 work, which proposes a new approach for understanding how two neural networks differ by discovering the shared and unique concepts they learn.

Representational Similarity via Interpretable Visual Concepts

arxiv.org/abs/2503.15699

A very interesting work that explores the possibility of having a unified interpretation across multiple models

09.02.2025 09:13

This was joint work with my wonderful collaborators @Julian_Forsyth @thomasfel.bsky.social @matthewkowal.bsky.social and my supervisor @csprofkgd.bsky.social. Couldn’t ask for better mentors and friends🫶!!!

(9/9)

We hope this work contributes to the growing discourse on universal representations. As the zoo of vision models increases, a canonical, interpretable concept space could be crucial for safety and understanding. Code coming soon!

(8/9)

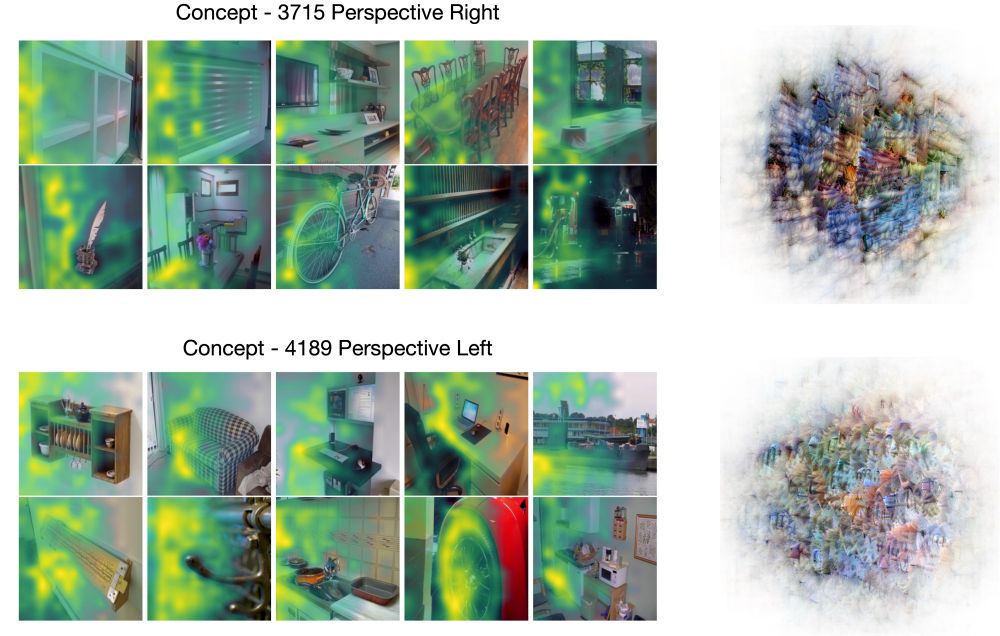

Our method reveals model-specific features too: DinoV2 (left) shows specialized geometric concepts (depth, perspective), while SigLIP (right) captures unique text-aware visual concepts.

This opens new paths for understanding model differences!

(7/9)

Using coordinated activation maximization on universal concepts, we can visualize how each model independently represents the same concept, allowing us to further explore model similarities and differences. Below are concepts visualized for DinoV2, SigLIP, and ViT.

(6/9)

Using co-firing and firing entropy metrics, we uncover universal features ranging from basic primitives (colors, textures) to complex abstractions (object interactions, hierarchical compositions). We find that universal concepts are important for reconstructing model activations!

(5/9)
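
To make the two metrics above a bit more concrete, here is a rough sketch of how co-firing and firing entropy could be computed. The definitions below are simplified assumptions for illustration and may differ from the ones in the paper: `fires` is a hypothetical boolean array recording whether each concept is active when the same input is encoded from each model. Here "firing entropy" is the normalized entropy of a concept's firing counts across models (1 means spread evenly, 0 means one model only), and "co-firing" is the fraction of inputs where a concept fires for every model, out of the inputs where it fires for at least one.

```python
import numpy as np


def firing_entropy(fires):
    """Normalized entropy of each concept's firing counts across models.

    fires: bool array of shape (n_models, n_samples, n_concepts).
    Returns an array of shape (n_concepts,) with values in [0, 1].
    """
    counts = fires.sum(axis=1).astype(float)          # (n_models, n_concepts)
    total = counts.sum(axis=0, keepdims=True)         # (1, n_concepts)
    # Share of a concept's firings attributed to each model (0 if it never fires).
    p = np.divide(counts, total, out=np.zeros_like(counts), where=total > 0)
    with np.errstate(divide="ignore", invalid="ignore"):
        logp = np.where(p > 0, np.log(p), 0.0)
    return -(p * logp).sum(axis=0) / np.log(fires.shape[0])


def co_firing(fires):
    """Fraction of inputs where a concept fires for all models,
    among inputs where it fires for at least one model."""
    all_fire = fires.all(axis=0).sum(axis=0).astype(float)
    any_fire = fires.any(axis=0).sum(axis=0).astype(float)
    return np.divide(all_fire, any_fire,
                     out=np.zeros_like(all_fire), where=any_fire > 0)


# Toy data (made up): 2 models, 4 inputs, 3 concepts.
fires = np.zeros((2, 4, 3), dtype=bool)
fires[:, :, 0] = True      # concept 0 fires everywhere, for both models
fires[0, :2, 1] = True     # concept 1 fires only for model 0
                           # concept 2 never fires

entropy = firing_entropy(fires)
cofire = co_firing(fires)
```

Under these toy definitions, a concept that scores high on both metrics behaves like a universal feature, while one with entropy near zero looks model-specific.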

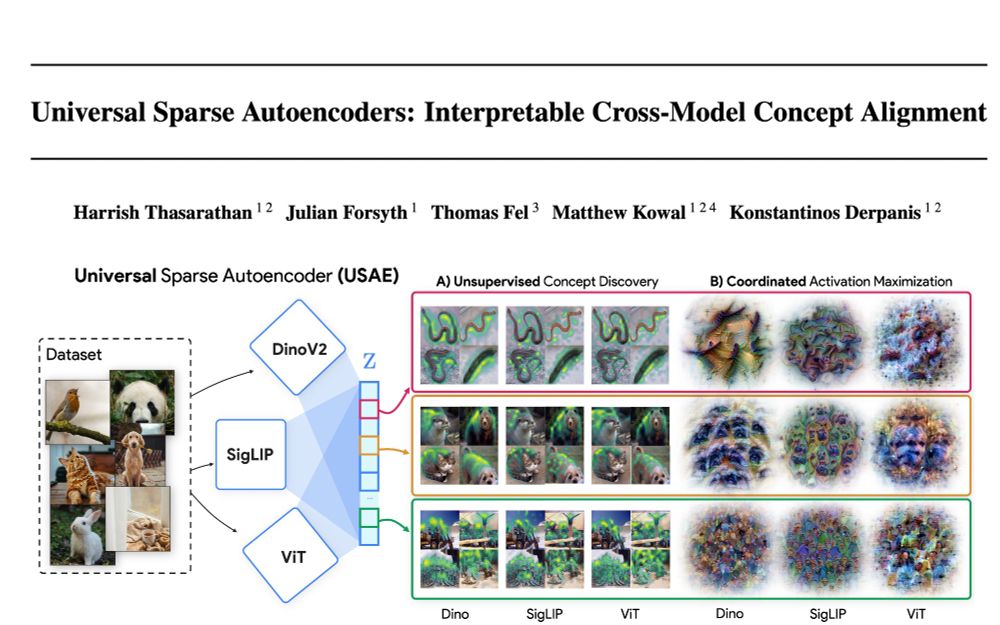

Previous approaches found universal features by post-hoc mining or similarity analysis - but this scales poorly. Our solution: extend Sparse Autoencoders to learn a shared concept space directly, encoding one model's activations and reconstructing all others from this unified vocabulary.

(4/9)
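
For readers who want a feel for the idea above, here is a minimal forward-pass sketch of "encode one model's activations, reconstruct all others". Everything in it is an assumption for illustration, not the released code: the model names, dimensions, random weights, the top-k sparsity rule, and the mean-squared-error objective are all placeholders, and no training loop is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical activation widths for three models (placeholders).
dims = {"model_a": 16, "model_b": 24, "model_c": 32}
n_concepts = 64   # size of the shared concept space (assumed)
k = 8             # active concepts kept per input (assumed top-k sparsity)
batch = 5

# One encoder and one decoder per model, all tied to the same concept space.
enc = {m: rng.normal(0, 0.1, size=(d, n_concepts)) for m, d in dims.items()}
dec = {m: rng.normal(0, 0.1, size=(n_concepts, d)) for m, d in dims.items()}


def encode(x, model):
    """Map one model's activations to a sparse code in the shared space."""
    pre = np.maximum(x @ enc[model], 0.0)                 # ReLU
    idx = np.argpartition(pre, -k, axis=1)[:, -k:]        # top-k per row
    z = np.zeros_like(pre)
    np.put_along_axis(z, idx, np.take_along_axis(pre, idx, axis=1), axis=1)
    return z


def reconstruct_all(z):
    """Decode the shared code back into every model's activation space."""
    return {m: z @ dec[m] for m in dims}


def universal_loss(acts, source):
    """Encode from `source`, then sum reconstruction error over all models."""
    z = encode(acts[source], source)
    recon = reconstruct_all(z)
    loss = sum(np.mean((acts[m] - recon[m]) ** 2) for m in dims)
    return loss, z, recon


# Stand-in activations for the same batch of inputs seen by each model.
acts = {m: rng.normal(size=(batch, d)) for m, d in dims.items()}
loss, z, recon = universal_loss(acts, source="model_a")
```

In a training setup one would presumably vary the source model across steps so that every encoder learns to write into the same concept space, but that choice is also an assumption here.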

If vision models seem to learn the same fundamental visual concepts, what are these universal features, and how can we find them?

(3/9)

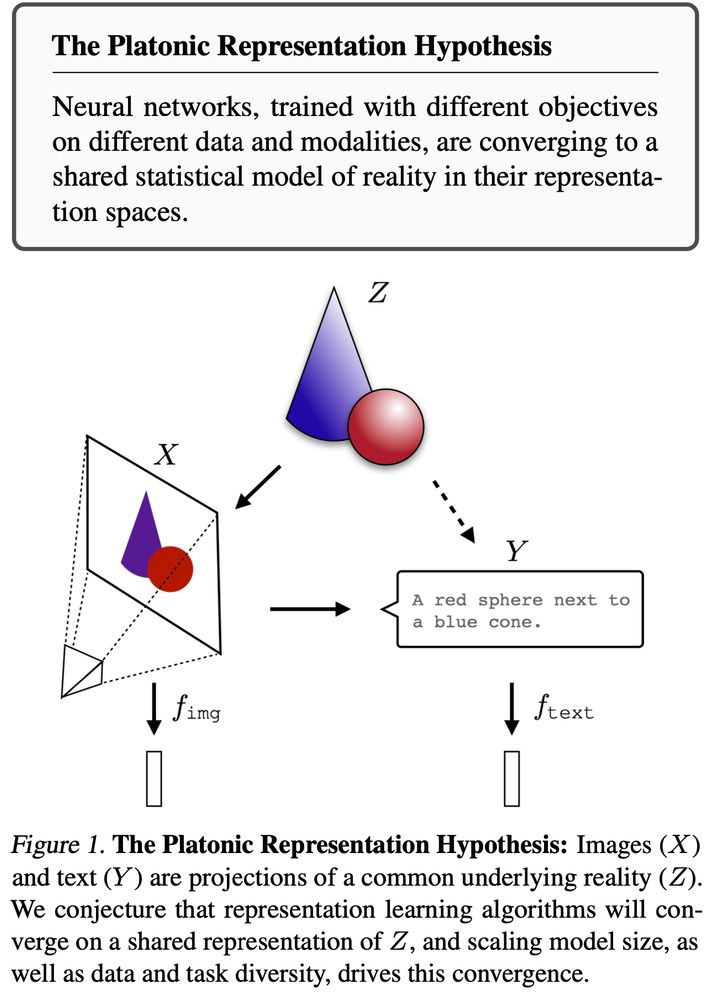

Vision models (backbones & foundation models alike) seem to learn transferable features that are relevant across many tasks. Recent work even suggests we are converging towards the same "Platonic" representation of the world. (Image from arxiv.org/abs/2405.07987)

(2/9)

🌌🛰️🔭Wanna know which features are universal vs unique in your models and how to find them? Excited to share our preprint: "Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment"!

arxiv.org/abs/2502.03714

(1/9)