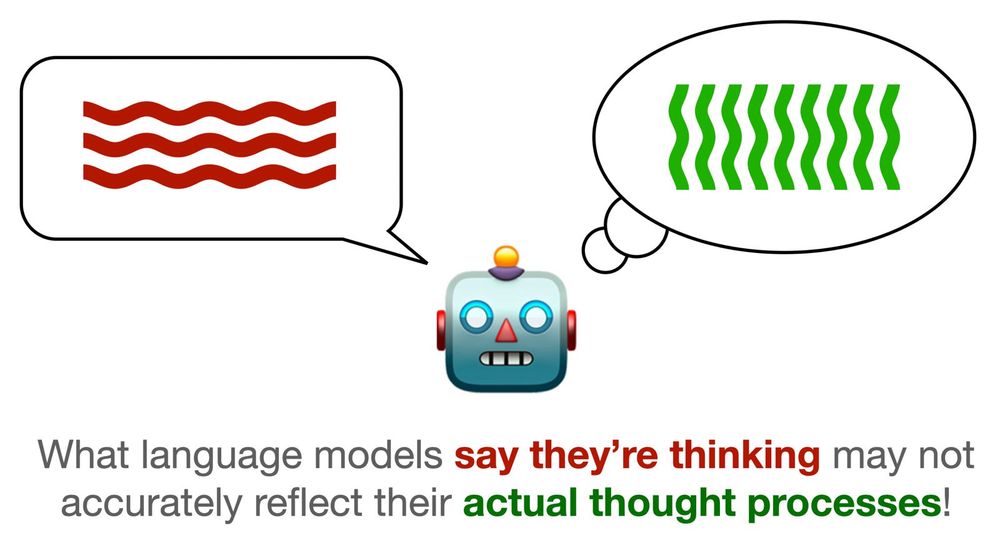

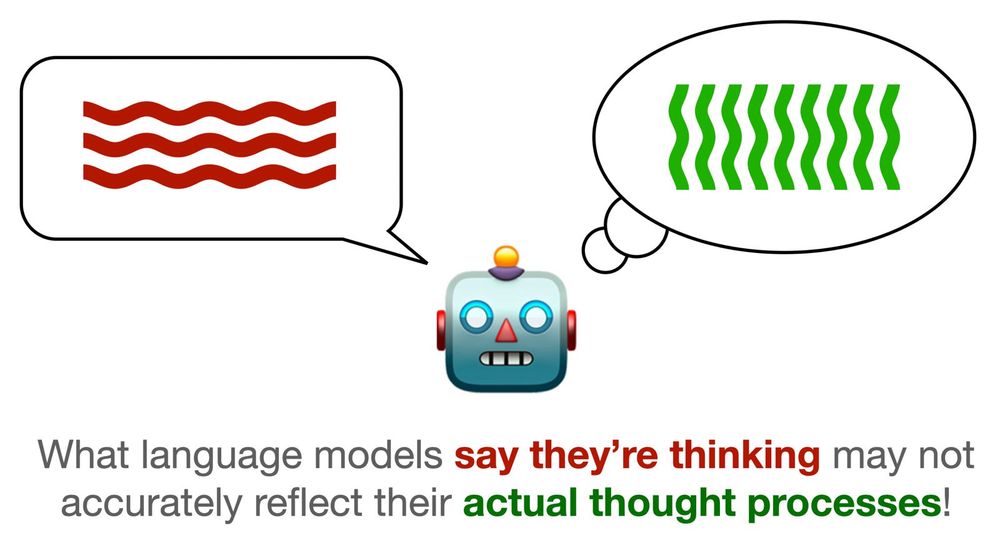

Excited to share our paper: "Chain-of-Thought Is Not Explainability"! We unpack a critical misconception in AI: models explaining their steps (CoT) aren't necessarily revealing their true reasoning. Spoiler: the transparency can be an illusion. (1/9) 🧵

01.07.2025 15:41 — 👍 82 🔁 31 💬 2 📌 5

we've reached that point in this submission cycle, no amount of coffee will do 😞🙂‍↔️😞

09.05.2025 23:51 — 👍 1 🔁 0 💬 0 📌 0

INCOMING

29.03.2025 04:58 — 👍 3 🔁 0 💬 0 📌 0

a leaf falls on moo deng the pygmy hippo, blocking her vision

moo deng is upset presumably because she can’t see!

titled: peer review

29.03.2025 04:58 — 👍 7 🔁 1 💬 0 📌 0

CDS building which looks like a jenga tower

Life update: I'm starting as faculty at Boston University @bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

27.03.2025 02:24 — 👍 244 🔁 13 💬 35 📌 7

or if you're awesome and happen to be in sf, also message me

15.03.2025 01:51 — 👍 1 🔁 0 💬 0 📌 0

pls message me if you wanna meet up for coffee and chat about ai/physics/llms/interpretability

15.03.2025 01:42 — 👍 0 🔁 0 💬 1 📌 0

really excited to be headed to OFC in SF! so excited to revisit optical physics 😀

15.03.2025 01:42 — 👍 1 🔁 0 💬 2 📌 1

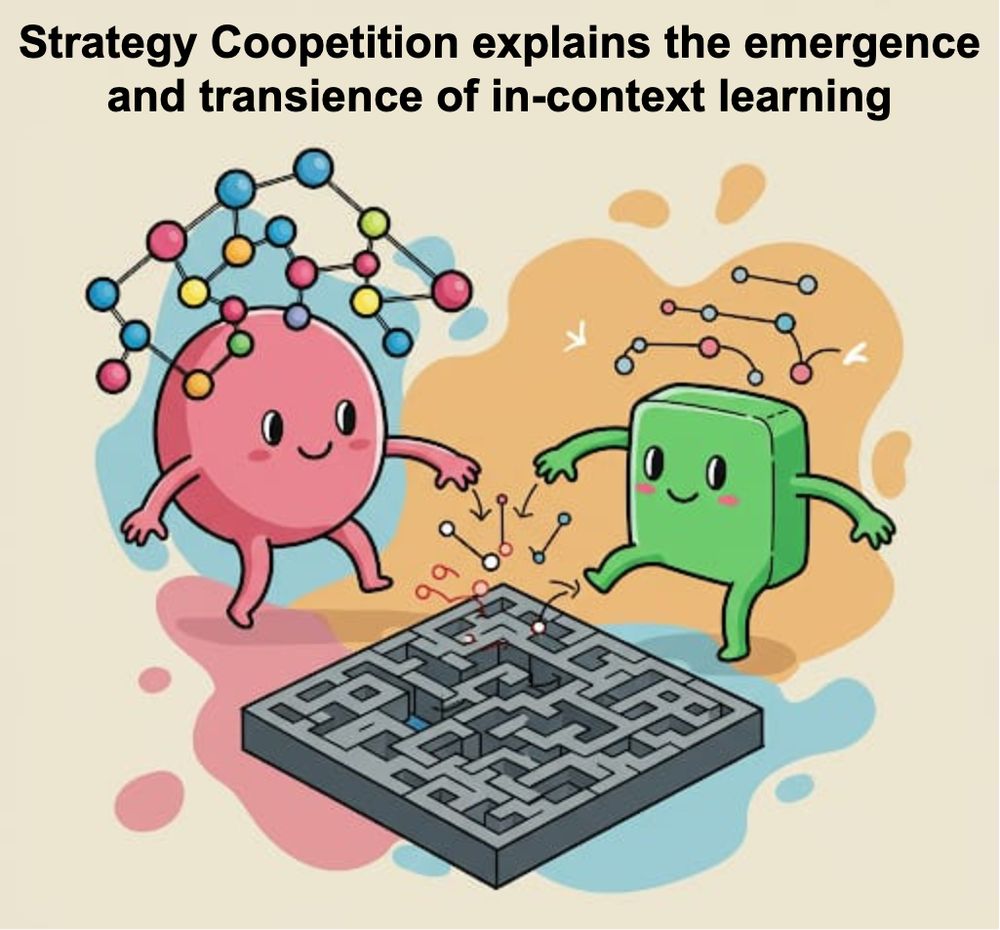

Transformers employ different strategies through training to minimize loss, but how do these trade off, and why?

Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns.

Read on 🔎⏬

11.03.2025 07:13 — 👍 8 🔁 4 💬 1 📌 0

i use the same template and need help getting a butterfly button help

05.03.2025 02:13 — 👍 0 🔁 0 💬 0 📌 0

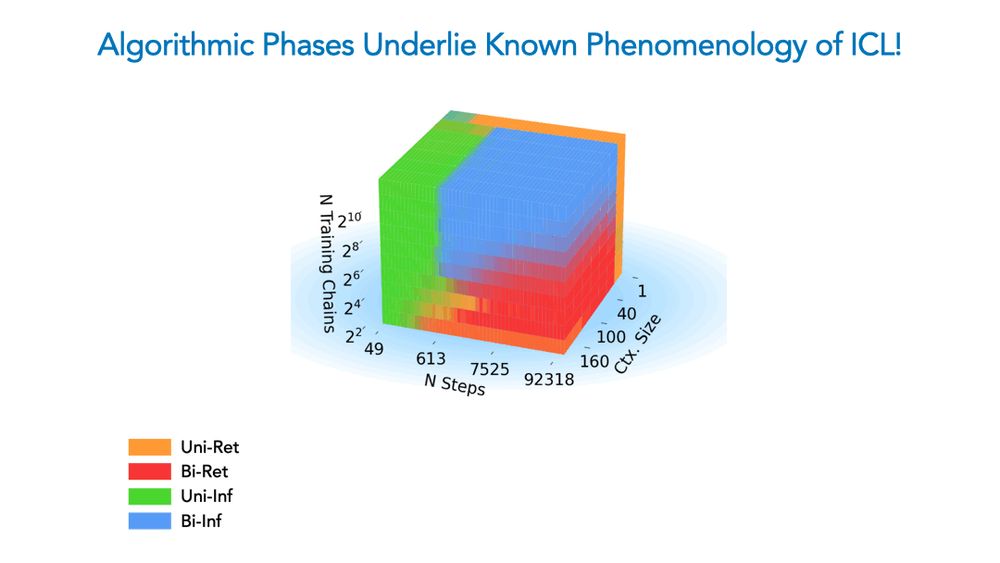

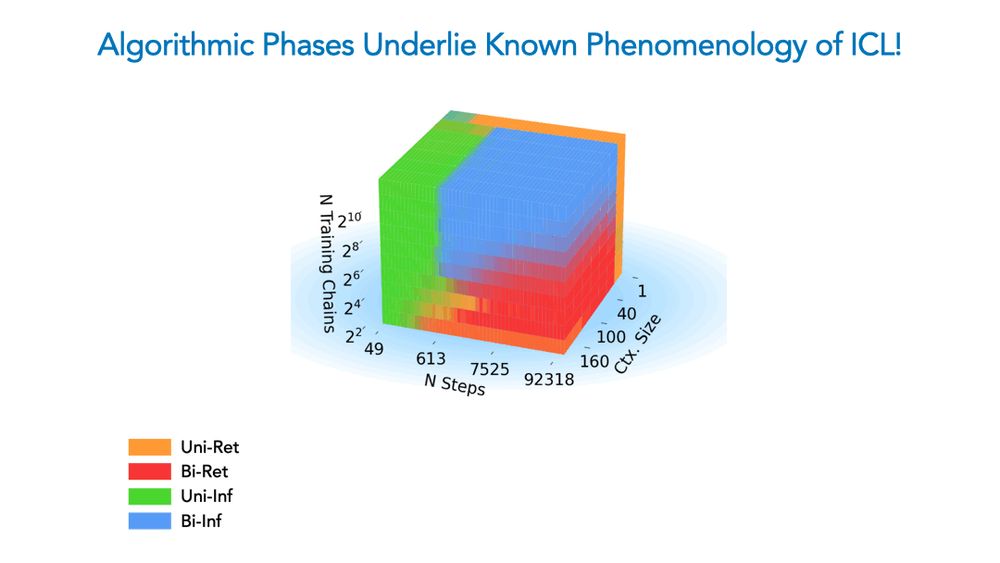

New paper, accepted as a *spotlight* at #ICLR2025! 🧵👇

We show that a competition dynamic between several algorithms splits a toy model’s ICL abilities into four broad phases of train/test settings! This means ICL is akin to a mixture of different algorithms, not a monolithic ability.

16.02.2025 18:57 — 👍 32 🔁 5 💬 2 📌 1

Out-of-Sync ‘Loners’ May Secretly Protect Orderly Swarms

Studies of collective behavior usually focus on how crowds of organisms coordinate their actions. But what if the individuals that don’t participate have just as much to tell us?

Starlings move in undulating curtains across the sky. Forests of bamboo blossom at once. But some individuals don’t participate in these mystifying synchronized behaviors — and scientists are learning that they may be as important as those that do.

15.02.2025 16:46 — 👍 33 🔁 10 💬 2 📌 2

Paper page - Fully Autonomous AI Agents Should Not be Developed

New piece out!

We explain why Fully Autonomous Agents Should Not be Developed, breaking “AI Agent” down into its components & examining through ethical values.

With @evijit.io, @giadapistilli.com and @sashamtl.bsky.social

huggingface.co/papers/2502....

06.02.2025 09:56 — 👍 140 🔁 48 💬 4 📌 11

How do tokens evolve as they are processed by a deep Transformer?

With José A. Carrillo, @gabrielpeyre.bsky.social and @pierreablin.bsky.social, we tackle this in our new preprint: A Unified Perspective on the Dynamics of Deep Transformers arxiv.org/abs/2501.18322

ML and PDE lovers, check it out!

31.01.2025 16:56 — 👍 95 🔁 16 💬 2 📌 0

it’s finally raining in la:)

26.01.2025 19:20 — 👍 5 🔁 0 💬 0 📌 0

09.01.2025 14:30 — 👍 0 🔁 1 💬 0 📌 0

part of me wants to quip, is this why i quit smoking, but i think im actually getting a lil scared. hope we get thru the next few days okay cause feels like theres very little we can do here rn

09.01.2025 14:14 — 👍 1 🔁 0 💬 1 📌 0

when i lived in seattle, fires were a summer expectation at a distance. here, it feels very different, to see it actually closing in on us

09.01.2025 14:14 — 👍 0 🔁 0 💬 1 📌 0

i go on a really long walk almost every day, and at a high point in silverlake, i saw fire from all sides. and it's harder to breathe. and everything is orange.

09.01.2025 14:14 — 👍 6 🔁 0 💬 1 📌 0

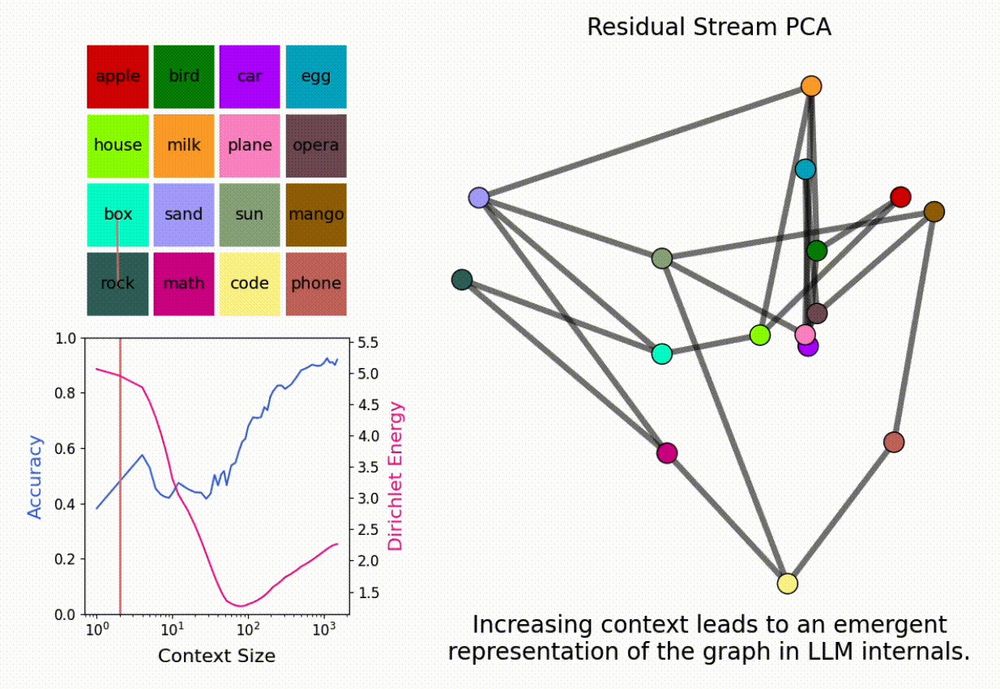

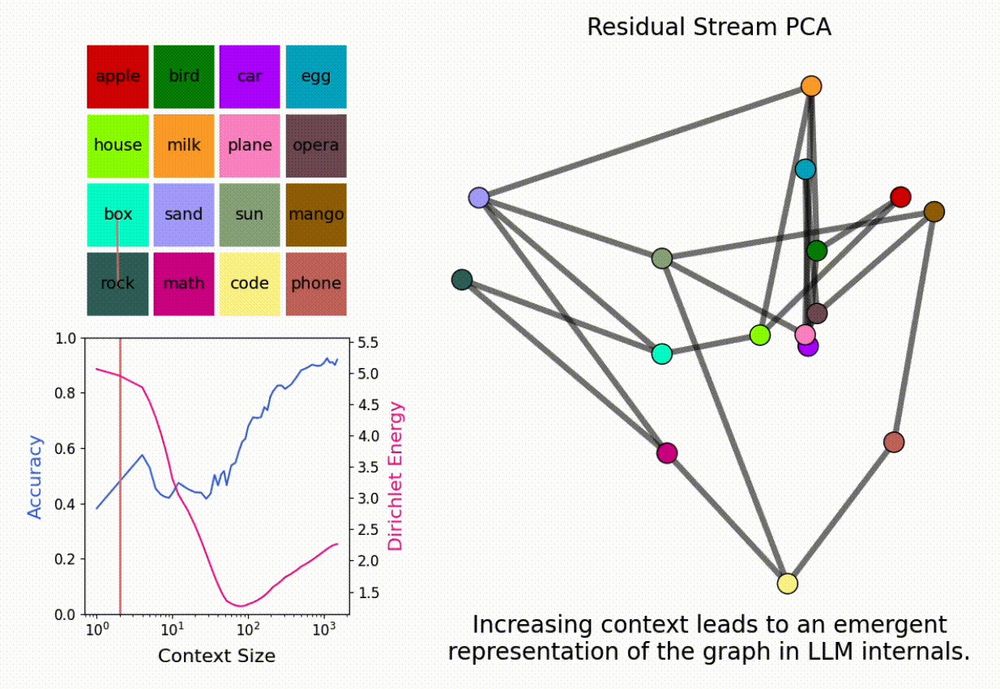

New paper <3

Interested in inference-time scaling? In-context Learning? Mech Interp?

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

05.01.2025 15:49 — 👍 53 🔁 16 💬 2 📌 1

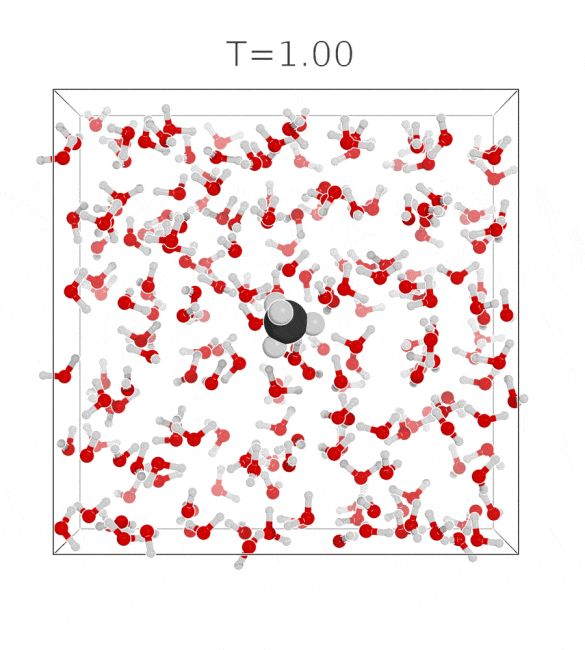

hello bluesky! we have a new preprint on solvation free energies:

tl;dr: We define an interpolating density by its sampling process, and learn the corresponding equilibrium potential with score matching. arxiv.org/abs/2410.15815

with @francois.fleuret.org and @tbereau.bsky.social

(1/n)

17.12.2024 12:32 — 👍 34 🔁 10 💬 1 📌 1

look at our sheep

15.12.2024 23:52 — 👍 33 🔁 6 💬 3 📌 0

BreimanLectureNeurIPS2024_Doucet.pdf

The slides of my NeurIPS lecture "From Diffusion Models to Schrödinger Bridges - Generative Modeling meets Optimal Transport" can be found here

drive.google.com/file/d/1eLa3...

15.12.2024 18:40 — 👍 327 🔁 67 💬 9 📌 6

Slides from the tutorial are now posted here!

neurips.cc/media/neurip...

11.12.2024 16:43 — 👍 17 🔁 7 💬 0 📌 0

An Evolved Universal Transformer Memory

sakana.ai/namm/

Introducing Neural Attention Memory Models (NAMM), a new kind of neural memory system for Transformers that not only boost their performance and efficiency but are also transferable to other foundation models without any additional training!

10.12.2024 01:34 — 👍 41 🔁 15 💬 1 📌 3

Tomorrow (Dec 12) poster #2311! Go talk to @emalach.bsky.social and the other authors at #NeurIPS, say hi from me!

11.12.2024 18:13 — 👍 16 🔁 1 💬 0 📌 0

Sometimes our anthropocentric assumptions about how intelligence "should" work (like using language for reasoning) may be holding AI back. Letting AI reason in its own native "language" in latent space could unlock new capabilities, improving reasoning over Chain of Thought. arxiv.org/pdf/2412.06769

10.12.2024 14:59 — 👍 94 🔁 16 💬 5 📌 2

The broader spectrum of in-context learning

The ability of language models to learn a task from a few examples in context has generated substantial interest. Here, we provide a perspective that situates this type of supervised few-shot learning...

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting interpreting a much broader spectrum of behaviors as ICL! Quick summary thread: 1/7

10.12.2024 18:17 — 👍 123 🔁 32 💬 2 📌 1

your friendly neighborhood slop janitor

PhD student at Brown interested in deep learning + cog sci, but more interested in playing guitar.

phd student @ harvard researching evolutionary dynamics | website: https://brewster.cc

https://cfpark00.github.io/

The Kempner Institute for the Study of Natural and Artificial Intelligence at Harvard University.

Professor of Psychology and Philosophy at the University of Illinois Urbana-Champaign. I build computational models and conduct experiments to understand how neural computing architectures give rise to symbolic thought.

Cognitive and perceptual psychologist, industrial designer, & electrical engineer. Assistant Professor of Industrial Design at University of Illinois Urbana-Champaign. I make neurally plausible bio-inspired computational process models of visual cognition.

PhD Student @ ML Group TU Berlin, BIFOLD

PhD student at TU Berlin & @bifold.berlin

I am interested in Machine Learning, Explainable AI (XAI), and Optimal Transport

ai phd student (+ went through music school as a percussionist) | (he/him)

PhD student explainable AI @ ML Group TU Berlin, BIFOLD

Novelist and essayist, most recently author of How to Write an Autobiographical Novel. Professor of Creative Writing at Dartmouth College. He/him. Agent: Jin Auh, The Wylie Agency. alexanderchee.net

Professor. Sociologist. NYTimes Opinion Columnist. Books: THICK, LowerEd. Forthcoming: 1)Black Mothering & Daughtering and 2)Mama Bears.

Beliefs: C.R.E.A.M. + the internet ruined everything good + bring back shame.

“I’m just here so I don’t get fined.”

natural language processing and computational linguistics at google deepmind.

Researching planning, reasoning, and RL in LLMs @ Reflection AI. Previously: Google DeepMind, UC Berkeley, MIT. I post about: AI 🤖, flowers 🌷, parenting 👶, public transit 🚆. She/her.

http://www.jesshamrick.com

interests: software, neuroscience, causality, philosophy | ex: salk institute, u of washington, MIT | djbutler.github.io

she/her

McGillNLP & Mila

occasionally live on ckut 90.3 fm :-)

adadtur.github.io