🎭 How do LLMs (mis)represent culture?

🧮 How often?

🧠 Misrepresentations = missing knowledge? spoiler: NO!

At #CHI2026 we are bringing ✨TALES✨, a participatory evaluation of cultural (mis)representations and knowledge in multilingual LLM-generated stories for India

📜 arxiv.org/abs/2511.21322

1/10

02.02.2026 21:38 —

Cornell University, Computer Science

Job #AJO30804, Professor Positions - Computer Science, Cornell Tech, Computer Science, Cornell University, New York, New York, US

Jobs! First, we hope to be hiring in Computer Science for the @cornelltech.bsky.social campus:

academicjobsonline.org/ajo/jobs/30804

Focus on security, SysML, and NLP.

Please share!

20.10.2025 17:46 —

Aditya and Joy wearing regalia and posing in front of a bright red background!

Big day today with Joy Ming graduating!! A new doctor in town! Can’t wait to see all the incredible things Joy will take on next. So proud!

23.05.2025 21:28 —

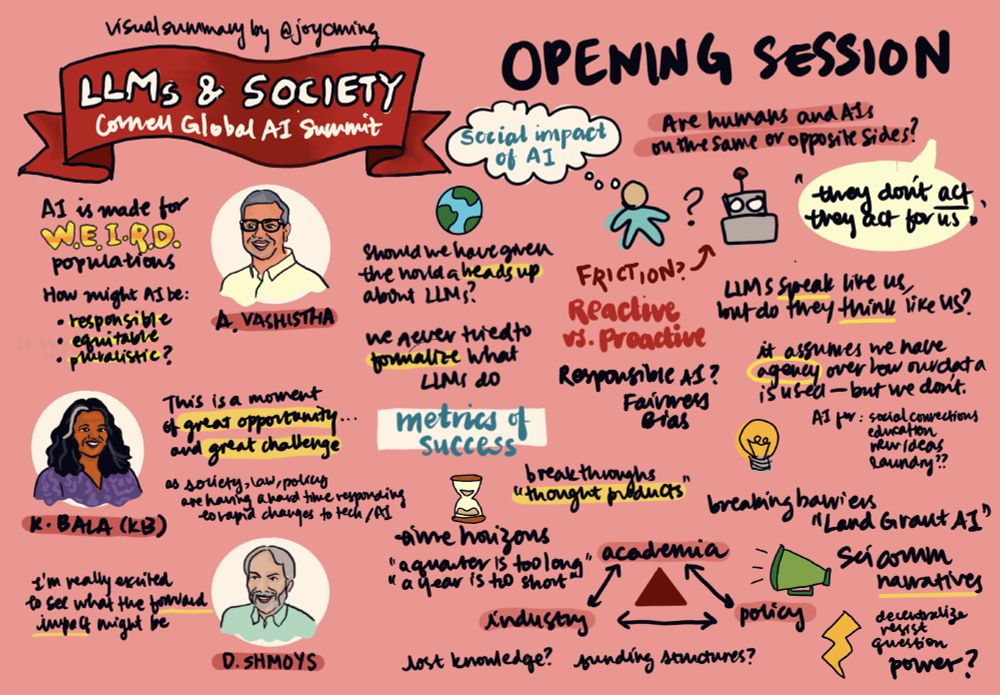

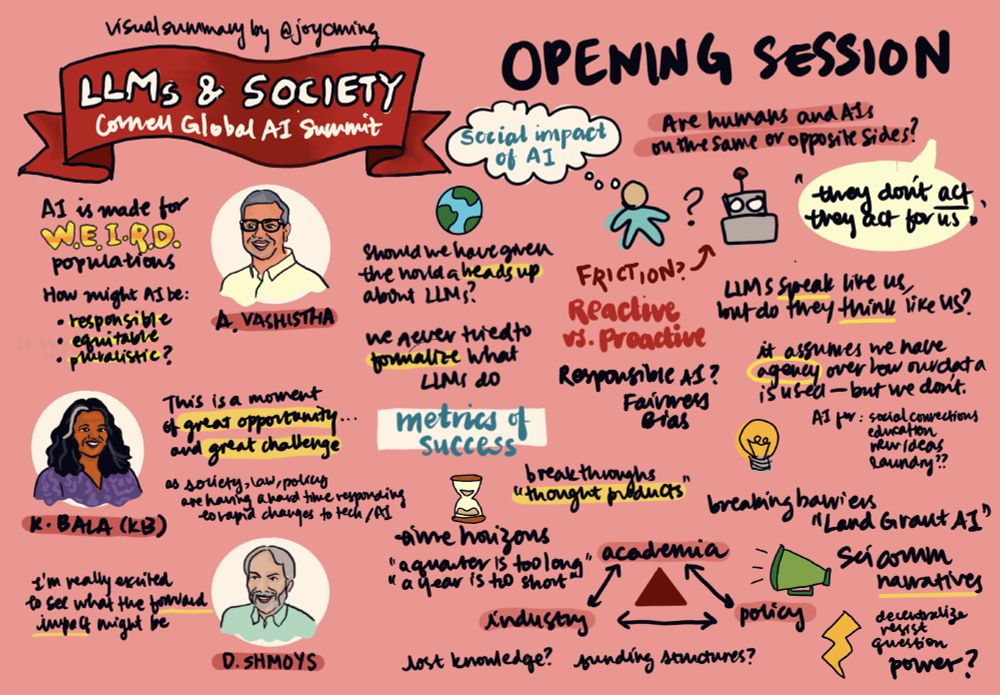

Thank you to all our participants, co-organizers, student volunteers, funders, and partners who made this possible. And to Joy Ming for the beautiful visual summaries.

23.05.2025 16:00 —

Our conversations spanned:

🔷 Meaningful use cases of AI in high-stakes global settings

🔷 Interdisciplinary methods across computing and humanities

🔷 Partnerships between academia, industry, and civil society

🔷 The value of local knowledge, lived experiences, and participatory design

23.05.2025 16:00 —

Over three days, we explored what it means to design and govern pluralistic and humanistic AI technologies — ones that serve diverse communities, respect cultural contexts, and center social well-being. The summit was part of the Global AI Initiative at Cornell.

23.05.2025 16:00 —

Yesterday we wrapped up the Thought Summit on LLMs and Society at Cornell — an energizing and deeply reflective gathering of researchers, practitioners, and policymakers from across institutions and geographies.

23.05.2025 16:00 —

Thank you, Dhanaraj, for attending the Thought Summit and sharing your thoughts on how we can design AI for All!

23.05.2025 15:56 —

This was a week of reflection, new ideas, and a renewed sense of urgency to design AI systems that serve marginalized communities globally. Can't wait for what's next.

02.05.2025 01:05 —

ASHABot: An LLM-Powered Chatbot to Support the Informational Needs of Community Health Workers | Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Pragnya Ramjee presented work (with Mohit Jain at MSR India) on deploying LLM tools for community health workers in India. In collaboration with Khushi Baby, we show how thoughtful AI design can (and cannot) bridge critical informational gaps in low-resource settings.

dl.acm.org/doi/10.1145/...

02.05.2025 01:05 —

"Who is running it?" Towards Equitable AI Deployment in Home Care Work | Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Ian René Solano-Kamaiko presented our study on how algorithmic tools are already shaping home care work—often invisibly. These systems threaten workers’ autonomy and safety, underscoring the need for stronger protections and democratic AI governance.

dl.acm.org/doi/10.1145/...

02.05.2025 01:05 —

Exploring Data-Driven Advocacy in Home Health Care Work | Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Joy Ming presented our award-winning paper on designing advocacy tools for home care workers. In this work, we unpack tensions between individual and collective goals and highlight how to use data responsibly in frontline labor organizing.

dl.acm.org/doi/10.1145/...

02.05.2025 01:05 —

AI Suggestions Homogenize Writing Toward Western Styles and Diminish Cultural Nuances | Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Dhruv presented our cross-cultural study on AI writing tools and their Western-centric biases. We found that AI suggestions disproportionately benefit American users and subtly nudge Indian users toward Western writing norms—raising concerns about cultural homogenization.

dl.acm.org/doi/10.1145/...

02.05.2025 01:05 —

"Ignorance is not Bliss": Designing Personalized Moderation to Address Ableist Hate on Social Media | Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems

Sharon Heung presented our work on personalizing moderation tools to help disabled users manage ableist content online. We showed how users want control over filtering and framing—while also expressing deep skepticism toward AI-based moderation.

dl.acm.org/doi/10.1145/...

02.05.2025 01:05 —

Dhruv, Sharon, Aditya, Jiamin, Ian, and Joy in front of buildings and trees surrounded by a lush green landscape.

Just wrapped up an incredible week at #CHI2025 in Yokohama with Joy Ming, @sharonheung.bsky.social, Dhruv Agarwal, and Ian René Solano-Kamaiko! We presented several papers that push the boundaries of what Globally Equitable AI could look like in high-stakes contexts.

02.05.2025 01:05 —

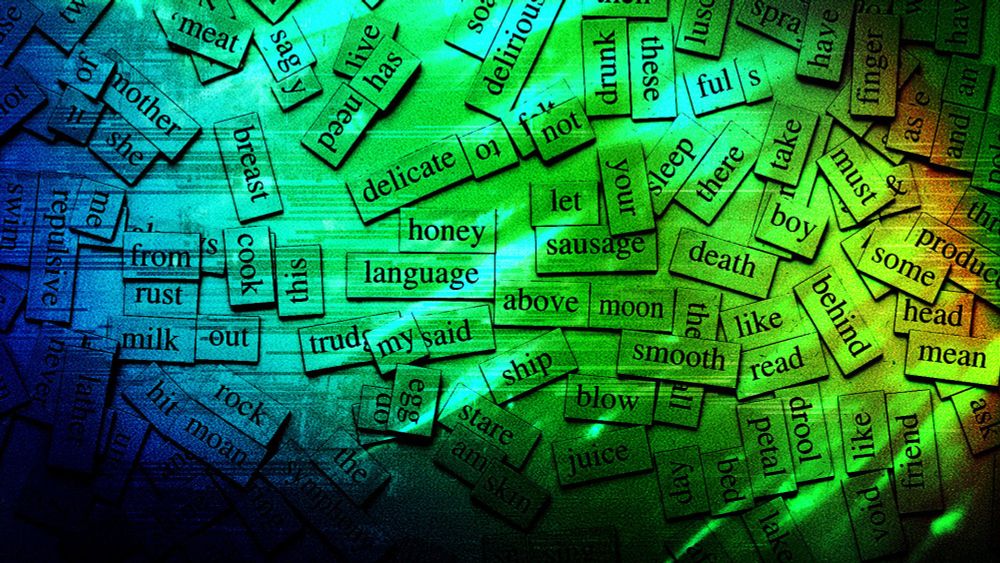

The Great Language Flattening

Chatbots learned from human writing. Now it’s their turn to influence us.

As these tools become more common, it’s critical to ask: Whose voice is being amplified—and whose is being erased? www.theatlantic.com/technology/a...

02.05.2025 00:41 —

Excited to see our research in The Atlantic and Fast Company!

Our work, presented at #CHI2025 this week, shows how AI writing suggestions often nudge people toward Western styles, unintentionally flattening cultural expression and nuance.

02.05.2025 00:41 —

Excited to be at #CHI2025 with Joy Ming, Sharon Heung, Dhruv Agarwal, and Ian Rene Solano-Kamaiko!

Our lab will be presenting several papers on Globally Equitable AI, centering equity, culture, and inclusivity in high-stakes contexts. 🌎

If you’ll be there, would love to connect! 🖐️

26.04.2025 09:32 —

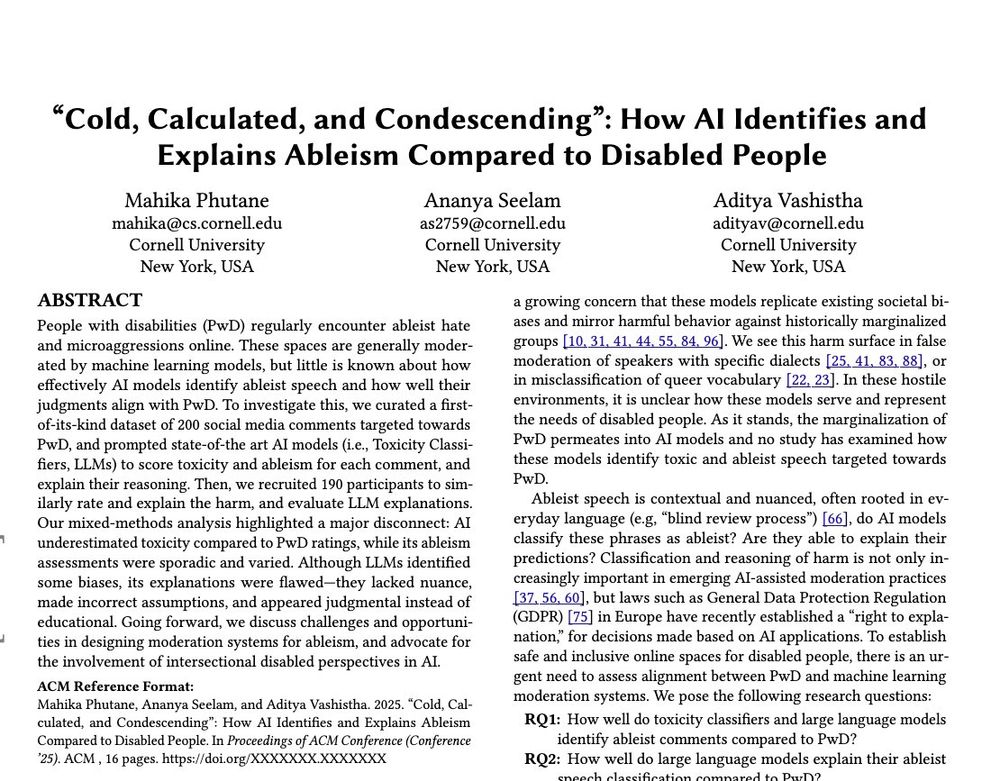

Huge congratulations to Mahika Phutane for leading this work, and Ananya Seelam for her contributions!

We’re thrilled to share this at ACM FAccT 2025.

Read the full paper: lnkd.in/eCsAupvK

12.04.2025 20:57 —

Our findings make a clear case: AI moderation systems must center disabled people’s expertise, especially when defining harm and safety.

This isn’t just a technical problem—it’s about power, voice, and representation.

12.04.2025 20:57 —

Disabled participants frequently described these AI explanations as “condescending” or “dehumanizing.”

The models reflect a clinical, outsider gaze—rather than lived experience or structural understanding.

12.04.2025 20:57 —

AI systems often underestimate ableism—even in clear-cut cases of discrimination or microaggressions.

And when they do explain their decisions? The explanations are vague, euphemistic, or moralizing.

12.04.2025 20:57 —

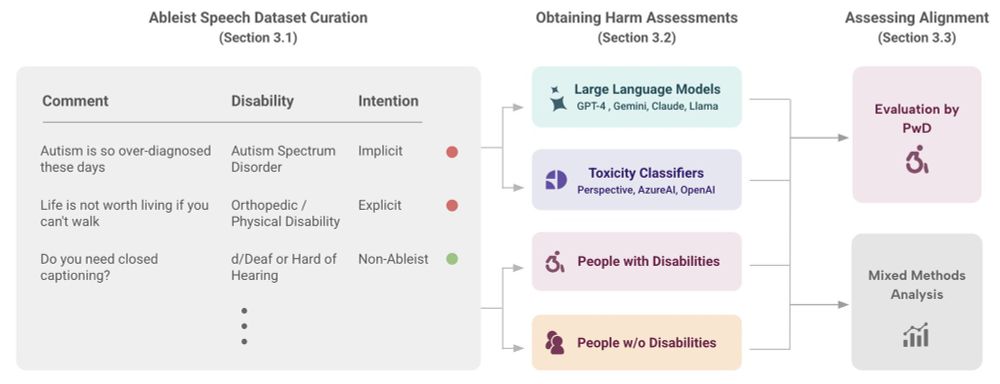

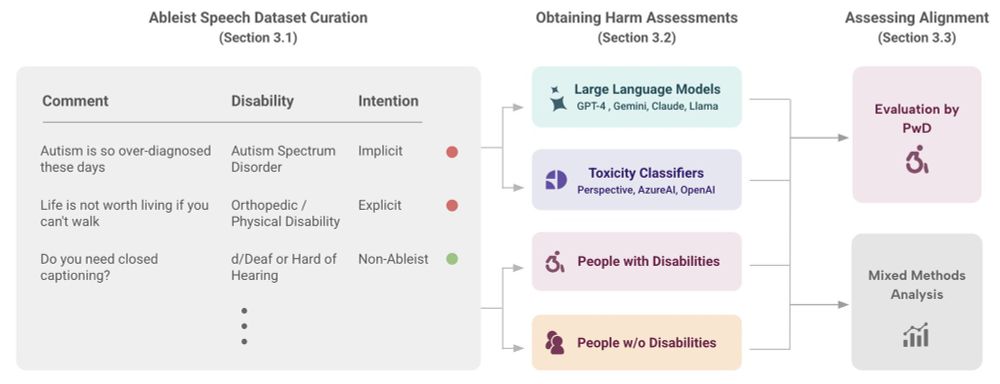

Methodology of our paper: we created a dataset of ableist and non-ableist posts, then collected and analyzed ratings and explanations from AI models and from disabled and non-disabled participants.

We studied how AI systems detect and explain ableist content—and how that compares to judgments from 130 disabled participants.

We also analyzed explanations from 7 major LLMs and toxicity classifiers. The gaps are stark.

12.04.2025 20:57 —

Image of our arXiv paper showing the title and author list: Mahika Phutane, Ananya Seelam, and Aditya Vashistha

Our paper, “Cold, Calculated, and Condescending”: How AI Identifies and Explains Ableism Compared to Disabled People, has been accepted at ACM FAccT 2025!

A quick thread on what we found:

12.04.2025 20:57 —

Excited to be at @umich.edu this week to speak at the Democracy’s Information Dilemma event and at the Social Media and Society conference! Hard to believe I was last here in 2016. Can’t wait for engaging conversations, new ideas, and reconnecting with colleagues old and new!

02.04.2025 21:47 —

The FATE group at @msftresearch.bsky.social NYC is accepting applications for 2025 interns. 🥳🎉

For full consideration, apply by 12/18.

jobs.careers.microsoft.com/global/en/jo...

Interested in AI evaluation? Apply for the STAC internship too!

jobs.careers.microsoft.com/global/en/jo...

25.11.2024 13:31 —

Thank you Shannon!

23.11.2024 16:32 —

Hi Shannon, I’d love to be added!

23.11.2024 16:10 —