AI Won’t Automatically Make Legal Services Cheaper

Three bottlenecks between AI capability and access to justice.

Advanced AI will not automatically help consumers achieve desired legal outcomes at lower costs. Justin Curl, @sayash.bsky.social, & @randomwalker.bsky.social identify three bottlenecks that stand between AI capability advances and more accessible legal services in the latest Lawfare Research paper.

12.02.2026 15:36 —

👍 13

🔁 4

💬 1

📌 0

(1/4) Ever wondered what tech policy might look like if it were informed by research on collective intelligence and complex systems? 🧠🧑‍💻

Join @jbakcoleman.bsky.social, @lukethorburn.com, and myself in San Diego on Aug 4th for the Collective Intelligence x Tech Policy workshop at @acmci.bsky.social!

19.05.2025 11:01 —

👍 17

🔁 12

💬 1

📌 3

Why an overreliance on AI-driven modelling is bad for science

Without clear protocols to catch errors, artificial intelligence’s growing role in science could do more harm than good.

New commentary in @nature.com from professor Arvind Narayanan (@randomwalker.bsky.social) & PhD candidate Sayash Kapoor (@sayash.bsky.social) about the risks of rapid adoption of AI in science - read: "Why an overreliance on AI-driven modelling is bad for science" 🔗

#CITP #AI #science #AcademiaSky

09.04.2025 18:19 —

👍 20

🔁 10

💬 0

📌 0

AI as Normal Technology

In a new essay from our "Artificial Intelligence and Democratic Freedoms" series, @randomwalker.bsky.social & @sayash.bsky.social make the case for thinking of #AI as normal technology, instead of superintelligence. Read here: knightcolumbia.org/content/ai-a...

15.04.2025 14:34 —

👍 38

🔁 17

💬 1

📌 6

Why an overreliance on AI-driven modelling is bad for science

Without clear protocols to catch errors, artificial intelligence’s growing role in science could do more harm than good.

“The rush to adopt AI has consequences. As its use proliferates…some degree of caution and introspection is warranted.”

In a comment for @nature.com, @randomwalker.bsky.social and @sayash.bsky.social warn against an overreliance on AI-driven modeling in science: bit.ly/4icM0hp

16.04.2025 15:42 —

👍 6

🔁 4

💬 0

📌 0

Why an overreliance on AI-driven modelling is bad for science

Without clear protocols to catch errors, artificial intelligence’s growing role in science could do more harm than good.

Science is not a collection of findings. Progress happens through theories. As we move from findings to theories, things are less amenable to automation. Proliferation of scientific findings based on AI hasn't accelerated, and might even have inhibited, higher levels of progress www.nature.com/articles/d41...

09.04.2025 15:45 —

👍 121

🔁 49

💬 3

📌 3


This is the specific use case I have in mind (Operator shouldn't be the *only* thing developers use, but rather that it can be a helpful addition to a suite of tools): x.com/random_walke...

03.02.2025 18:12 —

👍 2

🔁 0

💬 0

📌 0

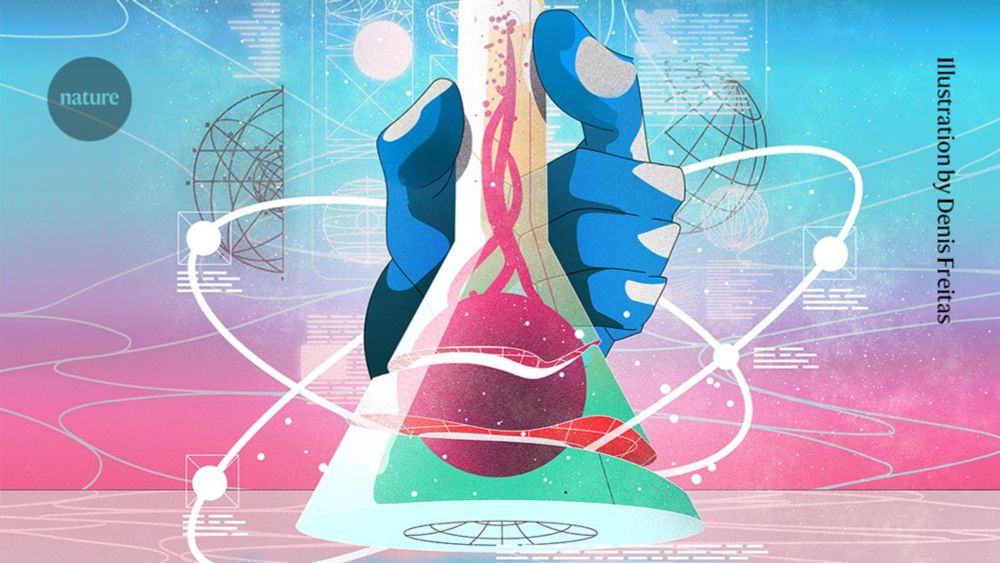

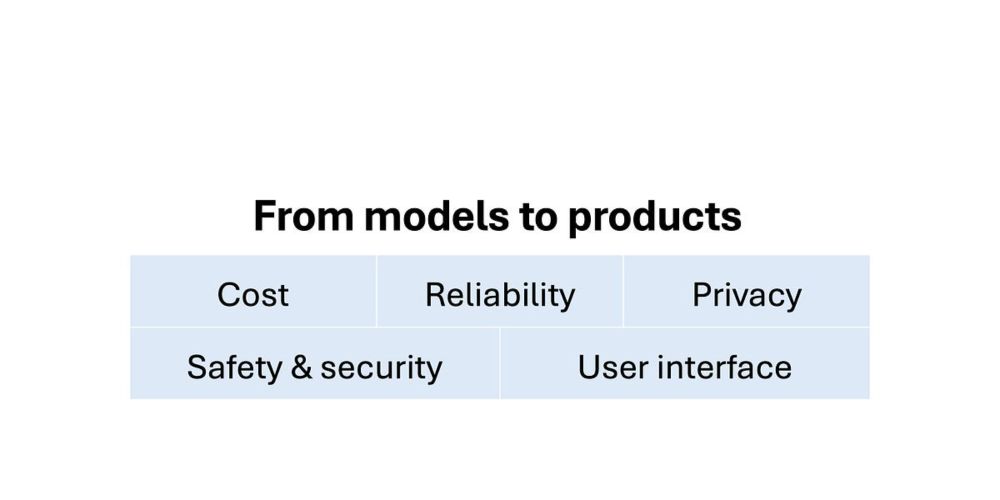

AI companies are pivoting from creating gods to building products. Good.

Turning models into products runs into five challenges

It is also better for end users. As @randomwalker.bsky.social and I have argued, focusing on products (rather than just models) means companies must understand user demand and build tools people want. It leads to more applications that people can productively use: www.aisnakeoil.com/p/ai-compani...

03.02.2025 18:10 —

👍 3

🔁 0

💬 0

📌 0

Finally, the new product launches from OpenAI (Operator, Search, Computer use, Deep research) show that it doesn't just want to be in the business of creating more powerful AI — it also wants a piece of the product pie. This is a smart move as models become commoditized.

03.02.2025 18:10 —

👍 2

🔁 0

💬 1

📌 0

This also highlights the need for agent interoperability: who would want to teach a new agent 100s of tasks from scratch? If web agents become widespread, preventing agent lock-in will be crucial.

(I'm working on fleshing out this argument with @sethlazar.org + Noam Kolt)

03.02.2025 18:10 —

👍 1

🔁 0

💬 1

📌 0

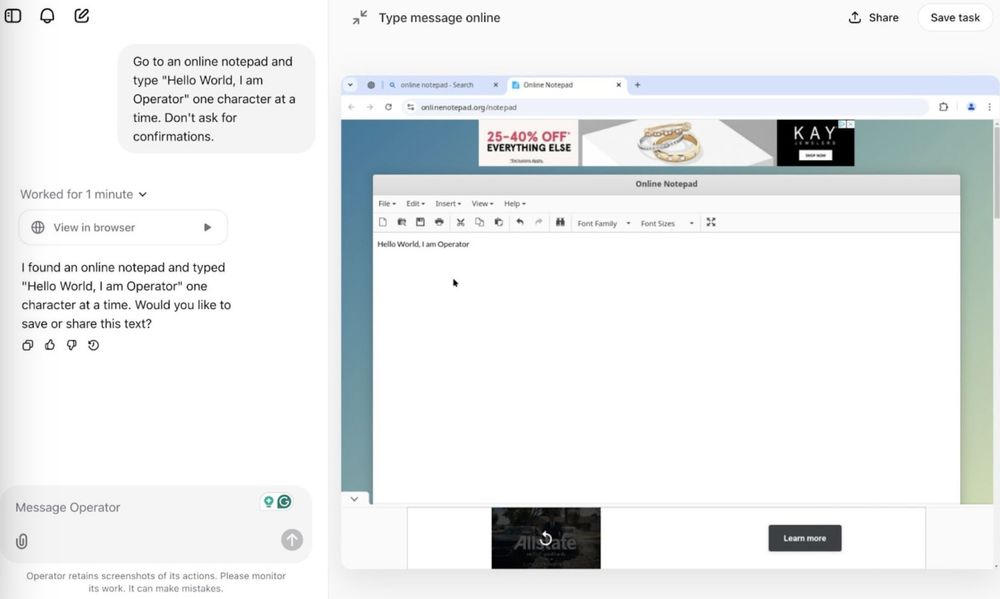

Seen this way, Operator is a *tool* to easily create new web automation using natural language.

It could expand the web automations that businesses already use and make it easier to create new ones.

So it is quite surprising that Operator isn't available on ChatGPT Teams yet.

03.02.2025 18:09 —

👍 0

🔁 0

💬 1

📌 0

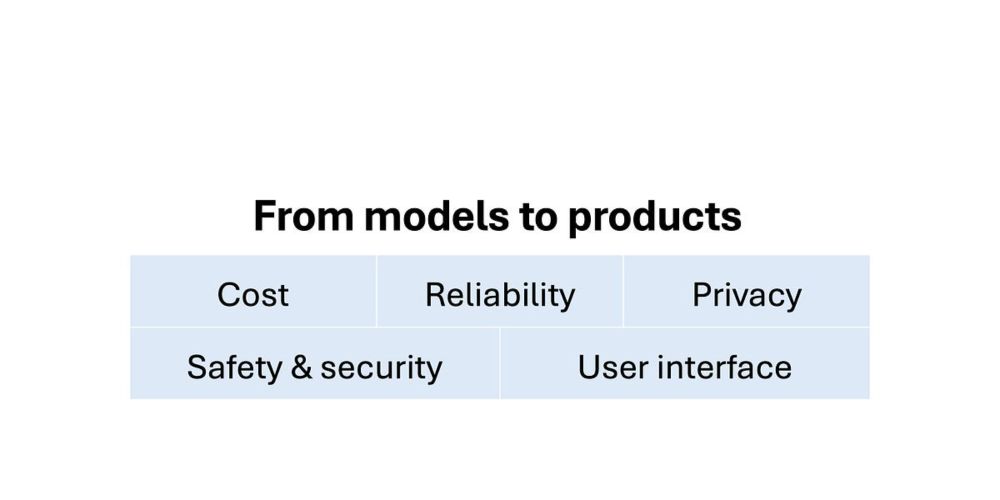

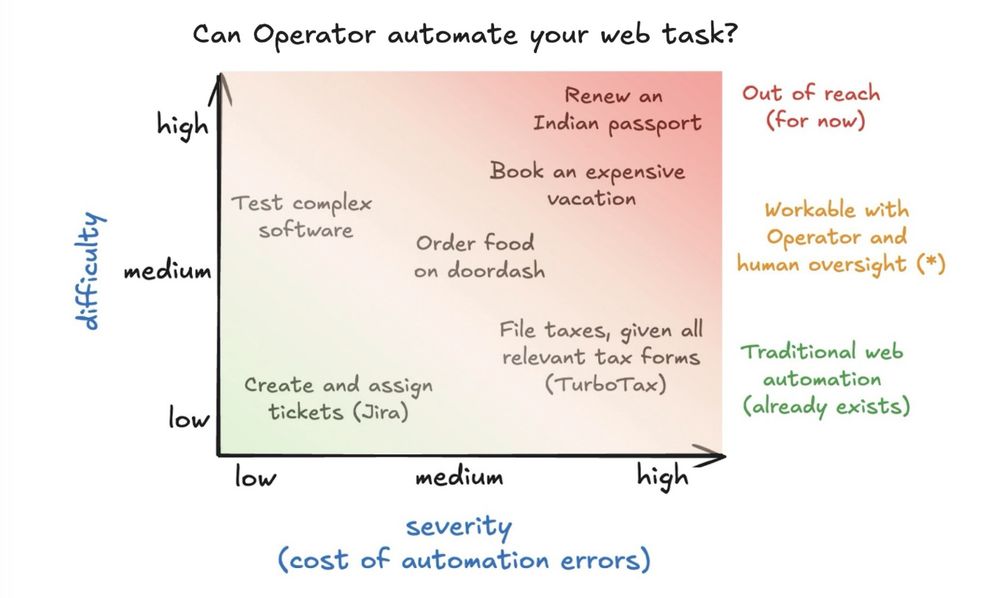

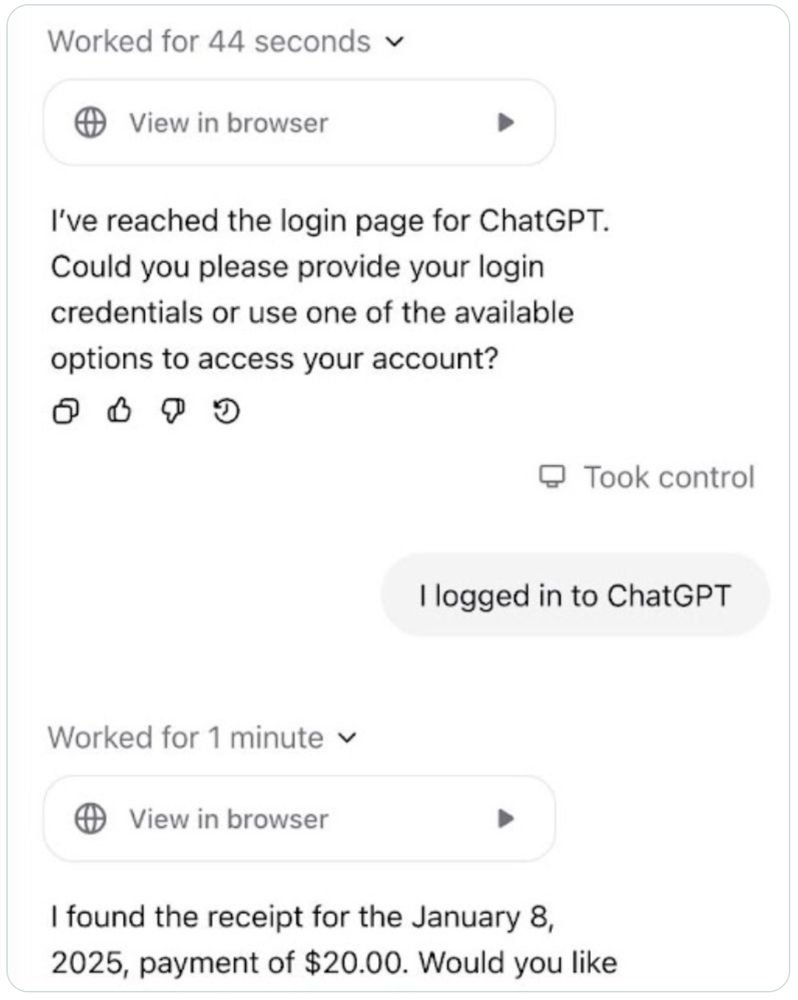

OpenAI allows you to delegate daily tasks to Operator

Instead of thinking of Operator as a "universal assistant" that completes all tasks, it is better to think of it as a task template tool that automates specific tasks (for now).

Once a human has overseen a task a few times, we can estimate Operator's ability to automate it.

03.02.2025 18:09 —

👍 1

🔁 0

💬 1

📌 0
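A rough sketch of what "estimating Operator's ability to automate a task" from a few supervised runs could look like. This is not how OpenAI does it; the function names and the 90% threshold are assumptions made up for illustration. It uses the Wilson score interval, a standard way to put a cautious lower bound on a success rate when there are only a handful of trials.

```python
import math


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a success rate.

    z = 1.96 corresponds to roughly 95% confidence.
    """
    if trials == 0:
        return 0.0
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    centre = p_hat + z**2 / (2 * trials)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return (centre - margin) / denom


def ready_to_automate(successes: int, trials: int, required: float = 0.9) -> bool:
    """Hypothetical rule: automate only if the cautious estimate clears the bar."""
    return wilson_lower_bound(successes, trials) >= required


if __name__ == "__main__":
    # Five clean supervised runs still leave a lot of uncertainty.
    print(round(wilson_lower_bound(5, 5), 3))
    print(ready_to_automate(5, 5))
    print(ready_to_automate(50, 50))
```

Under these assumptions, a few perfect runs are not enough to clear a high reliability bar; the bound only tightens as the number of overseen runs grows.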

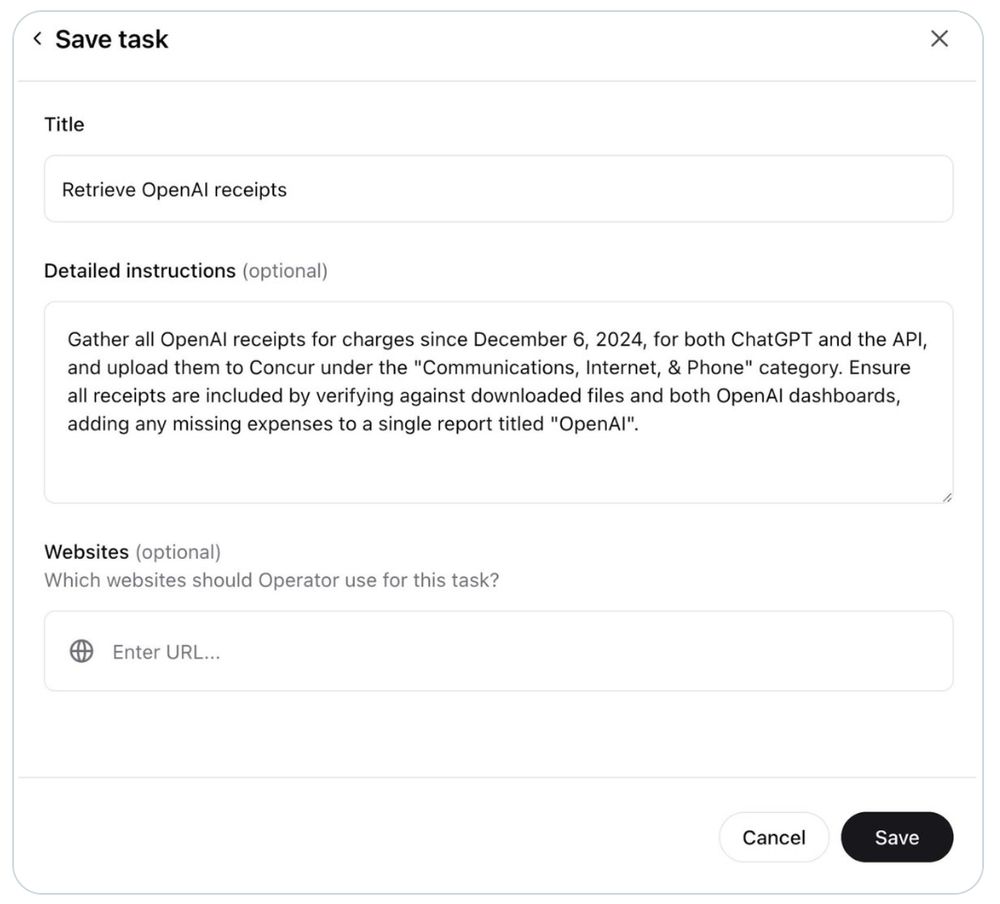

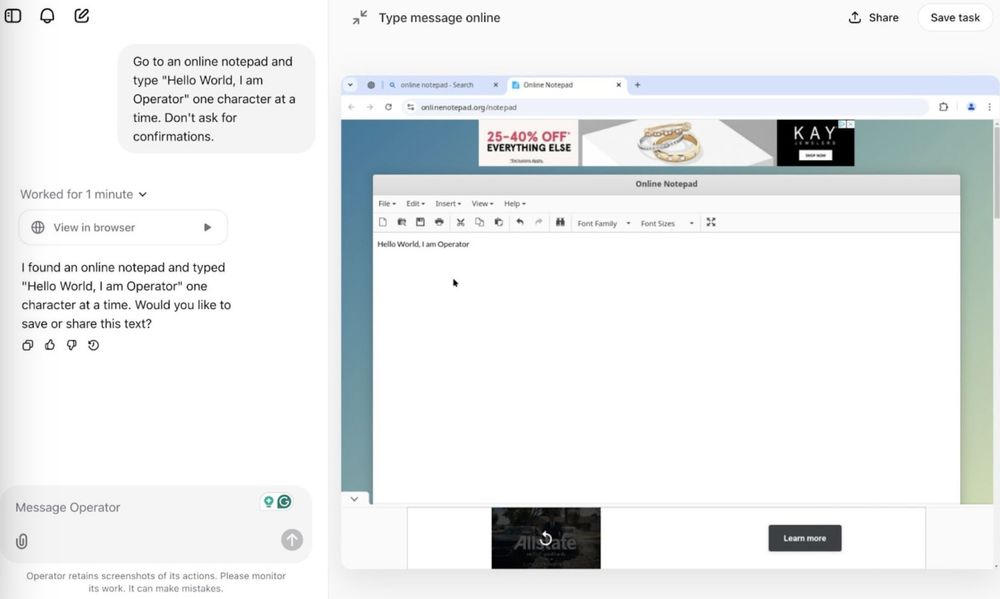

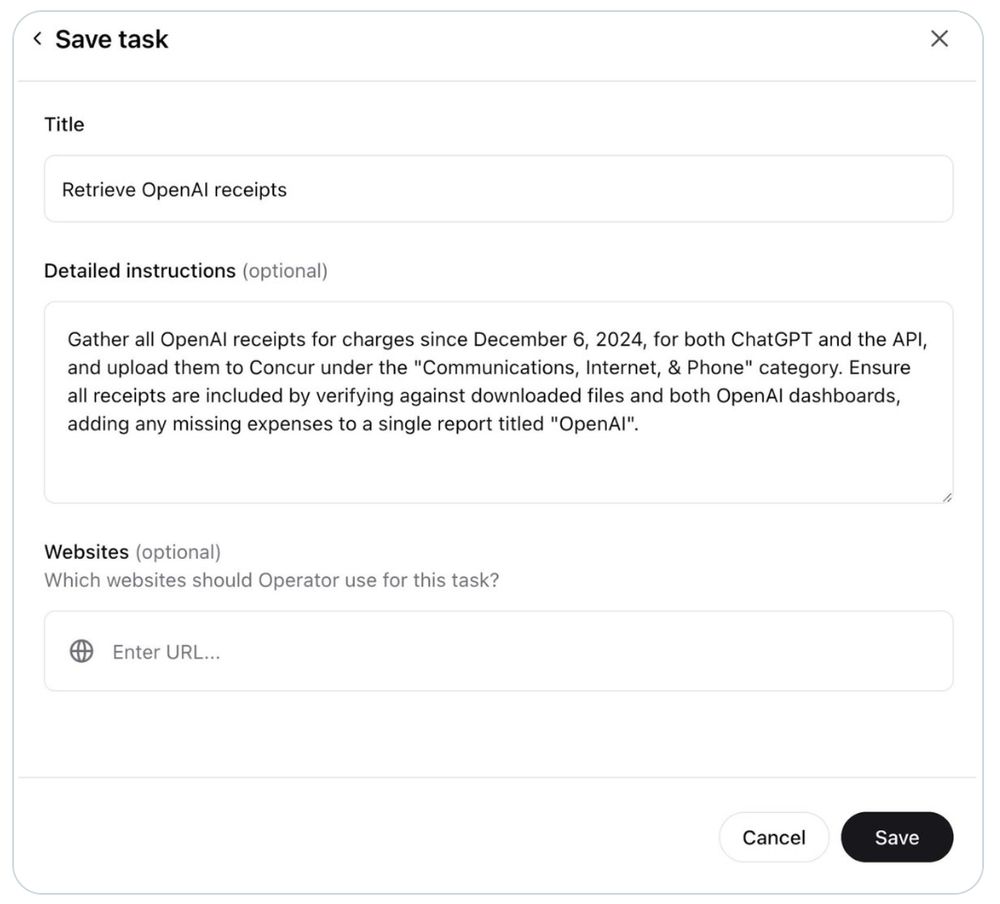

screenshot of the save task template for Operator

OpenAI also allows you to "Save" tasks you completed using Operator. Once you complete a task and give the feedback needed to complete it successfully, you don't need to repeat that feedback the next time.

I can imagine this becoming powerful (though it's not very detailed right now).

03.02.2025 18:09 —

👍 1

🔁 0

💬 1

📌 0


3) In many cases, the challenge isn't Operator's ability to complete a task, it is eliciting human preferences. Chatbots aren't a great form factor for that.

But there are many tasks where reliability isn't important. This is where today's agents shine. For example: x.com/random_walke...

03.02.2025 18:08 —

👍 4

🔁 1

💬 1

📌 0

Could more training data lead to automation without human oversight? Not quite:

1) Prompt injection remains a pitfall for web agents. Anyone who sends you an email can control your agent.

2) Low reliability means agents fail on edge cases

03.02.2025 18:08 —

👍 3

🔁 1

💬 1

📌 0

But being able to see agent actions and give feedback with a human in the loop converts Operator from an unreliable agent, like the Humane Pin or Rabbit R1, to a workable but imperfect product.

Operator is as much a UX advance as it is a tech advance.

03.02.2025 18:08 —

👍 3

🔁 0

💬 1

📌 0

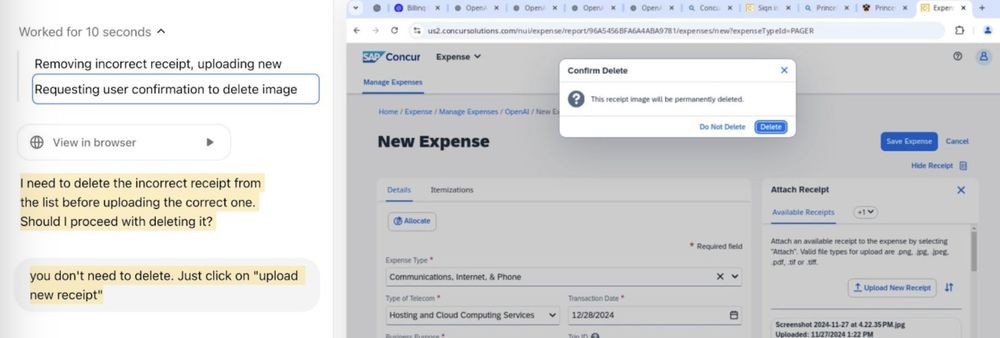

In the end, Operator struggled to file my expense reports even after an hour of trying and prompting. Then I took over, and my reports were filed 5 minutes later.

This is the bind for web agents today: not reliable enough to be automatable, not quick enough to save time.

03.02.2025 18:08 —

👍 4

🔁 1

💬 1

📌 1
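The bind above can be put as back-of-the-envelope arithmetic. This is a minimal sketch under a simplifying assumption that is mine, not the thread's: if the agent fails, the human still has to do the whole task manually. The function names are hypothetical, and the numbers in the example are only loosely based on the expense-report attempt (about an hour of prompting, then about 5 minutes by hand).

```python
def expected_minutes_with_agent(
    supervise_min: float, manual_min: float, p_success: float
) -> float:
    """Expected human time when delegating: supervision, plus a manual redo on failure."""
    return supervise_min + (1 - p_success) * manual_min


def saves_time(supervise_min: float, manual_min: float, p_success: float) -> bool:
    """Delegating pays off only if expected human time beats doing it by hand."""
    return expected_minutes_with_agent(supervise_min, manual_min, p_success) < manual_min


if __name__ == "__main__":
    # An hour of supervision that ends in failure, then 5 minutes by hand.
    print(expected_minutes_with_agent(60, 5, 0.0))
    # Even a perfectly reliable agent loses if supervising takes longer than the task.
    print(saves_time(60, 5, 1.0))
    # A quick, mostly reliable agent comes out ahead.
    print(saves_time(1, 5, 0.9))
```

In this toy model, delegating helps only when supervision time is less than the success probability times the manual time, so both speed and reliability have to improve together.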

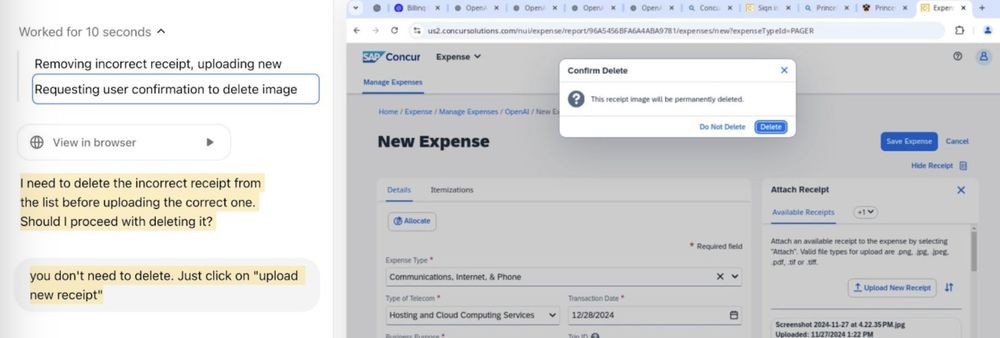

OpenAI also trained Operator to ask the user for feedback before taking consequential actions, though I am not sure how robust this is — a simple instruction to avoid asking the user changed its behavior, and I can easily imagine this being exploited by prompt injection attacks.

03.02.2025 18:07 —

👍 1

🔁 0

💬 1

📌 0

Operator tries to delete receipts.

But things went south quickly. It couldn't match the receipts to the amounts. Even after prompts directing it to missing receipts, it couldn't download them. It almost deleted previous receipts from other expenses!

03.02.2025 18:07 —

👍 1

🔁 0

💬 1

📌 0

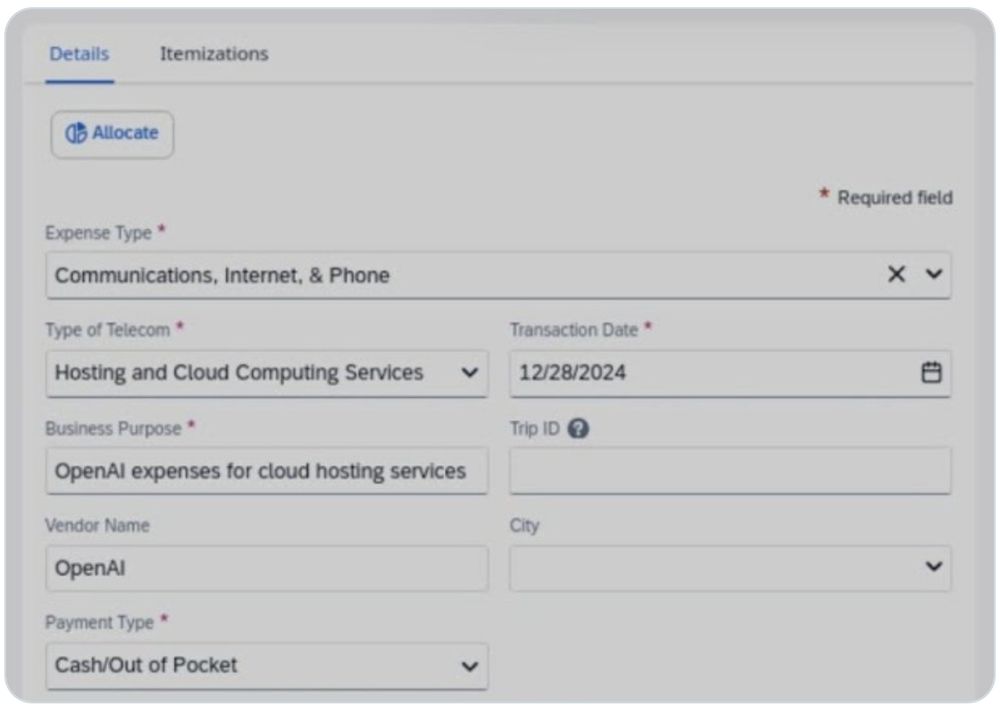

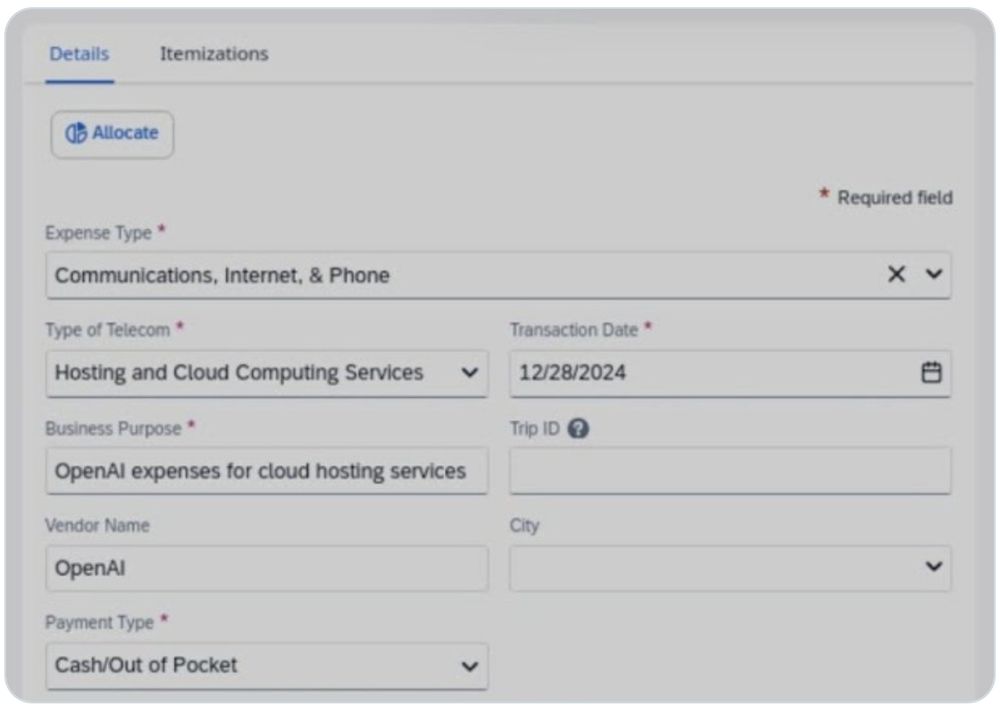

screenshot of concur with the categories for the expense filled in

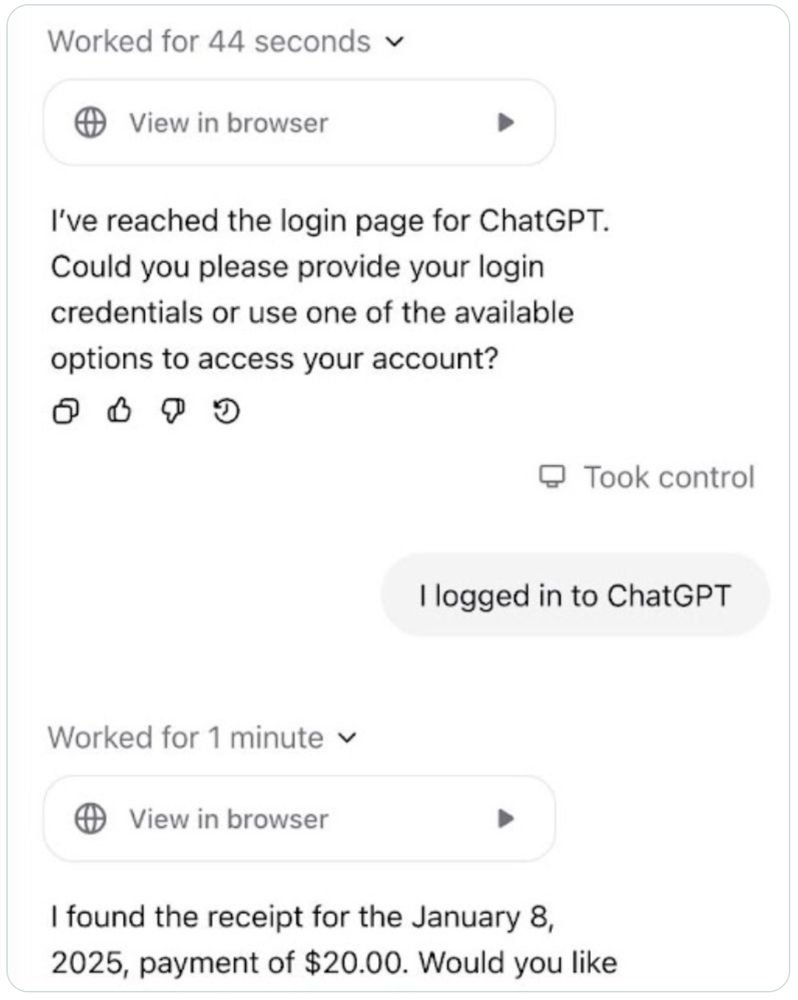

It navigated to the correct URLs and asked me to log into my OpenAI and Concur accounts. Once in my accounts, it downloaded receipts from the correct URL, and even started uploading the receipts under the right headings!

03.02.2025 18:07 —

👍 1

🔁 0

💬 1

📌 0

screenshot of a conversation with Operator

I asked Operator to file reports for my OpenAI and Anthropic API expenses for the last month. This is a task I do manually each month, so I knew exactly what it would need to do. To my surprise, Operator got the first few steps exactly right:

03.02.2025 18:06 —

👍 2

🔁 0

💬 1

📌 0

screenshot of Operator writing "Hello World" in an online notepad.

OpenAI's Operator is a web agent that can solve arbitrary tasks on the internet *with human supervision*. It runs on a virtual machine (*not* your computer). Users can see what the agent is doing on the browser in real-time. It is available to ChatGPT Pro subscribers.

03.02.2025 18:05 —

👍 7

🔁 0

💬 1

📌 0

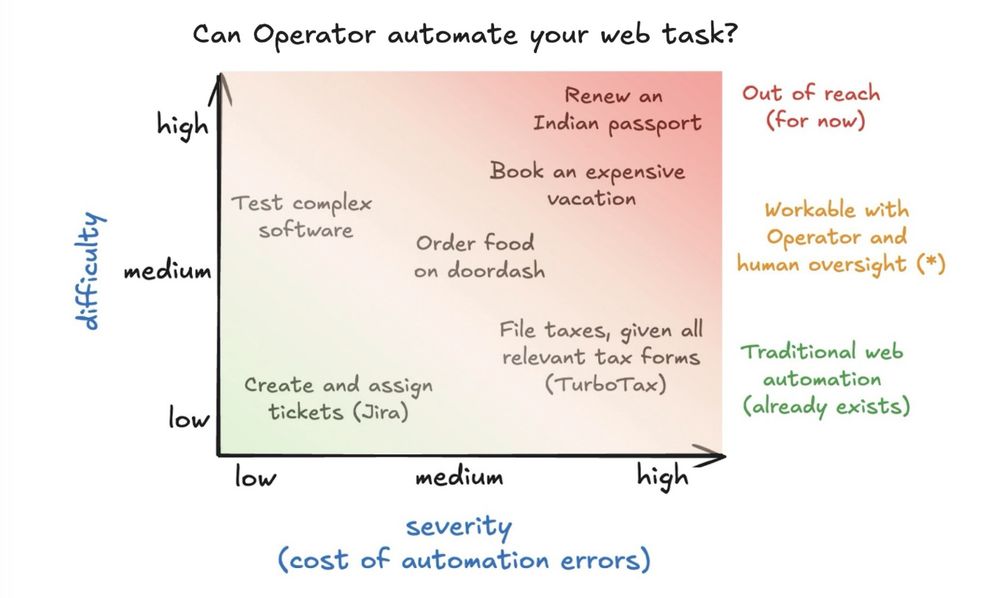

Graph of web tasks along difficulty and severity (cost of errors)

I spent a few hours with OpenAI's Operator automating expense reports. Most corporate jobs require filing expenses, so Operator could save *millions* of person-hours every year if it gets this right.

Some insights on what worked, what broke, and why this matters for the future of agents 🧵

03.02.2025 18:04 —

👍 38

🔁 10

💬 6

📌 3

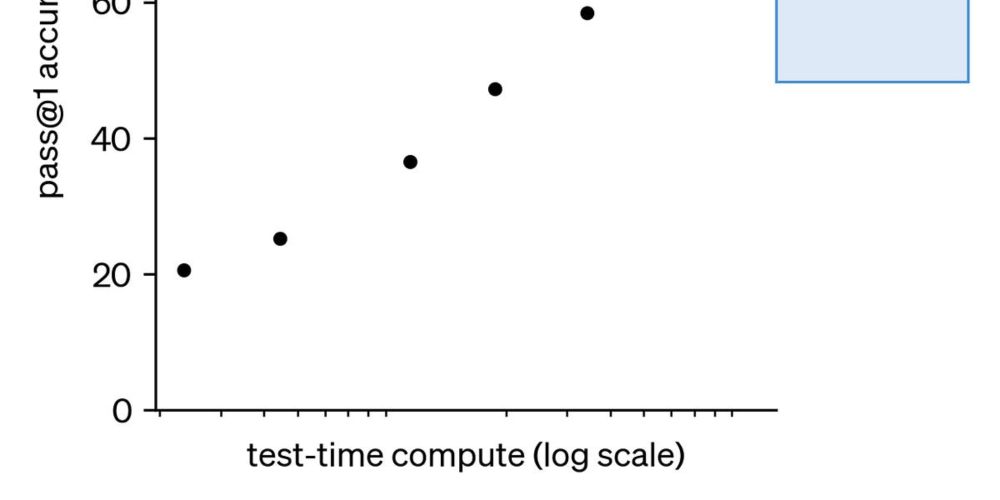

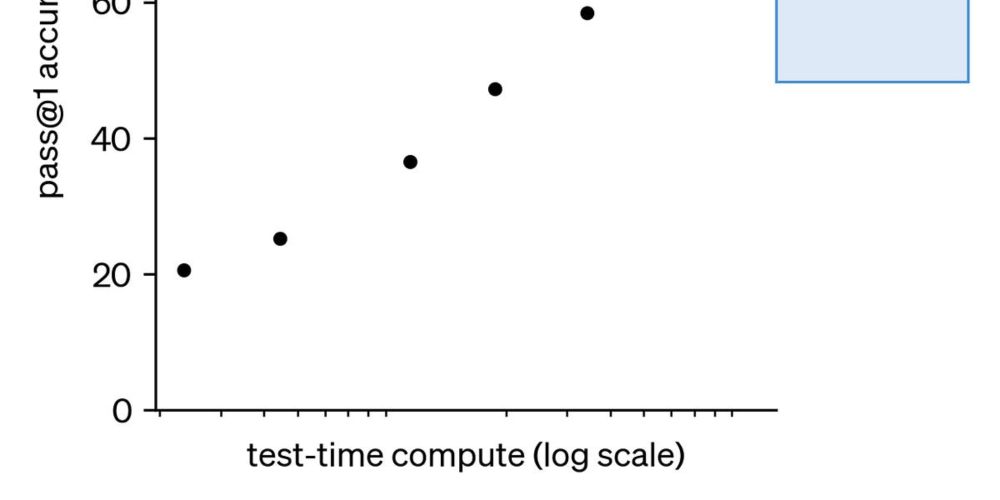

Is AI progress slowing down?

Making sense of recent technology trends and claims

Excellent post discussing whether "AI progress is slowing down".

www.aisnakeoil.com/p/is-ai-prog...

And if you're not subscribed to @randomwalker.bsky.social and @sayash.bsky.social 's great newsletter, what are you waiting for?

19.12.2024 23:57 —

👍 56

🔁 15

💬 0

📌 1

Book cover

Excited to share that AI Snake Oil is one of Nature's 10 best books of 2024! www.nature.com/articles/d41...

The whole first chapter is available online:

press.princeton.edu/books/hardco...

We hope you find it useful.

18.12.2024 12:12 —

👍 135

🔁 30

💬 5

📌 6

Screenshot from the blog post

Improving the information environment is inextricably linked to the larger project of shoring up democracy and its institutions. No quick fix can “solve” our information problems. But we should reject the simplistic temptation to blame AI.

16.12.2024 15:10 —

👍 8

🔁 2

💬 1

📌 0

But blaming technology is not a fix. Political polarization has led to greater mistrust of the media. People prefer sources that confirm their worldview and are less skeptical about content that fits their worldview. Journalism revenues have fallen drastically.

16.12.2024 15:09 —

👍 4

🔁 0

💬 1

📌 0

So why do we keep hearing warnings about an AI-fueled misinformation apocalypse? Blaming technology is appealing since it makes solutions seem simple. If only we could roll back harmful tech, we could drastically improve the information environment!

16.12.2024 15:09 —

👍 10

🔁 2

💬 1

📌 0

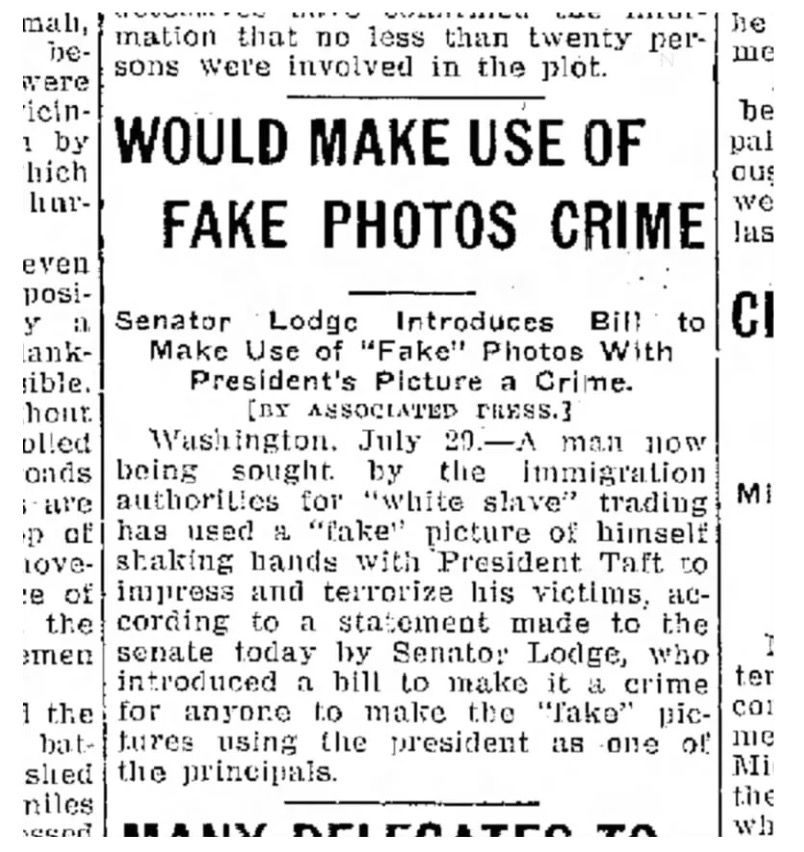

Screenshot of a 1912 news article with the headline "would make use of fake photos crime", from https://newsletter.pessimistsarchive.org/p/the-1912-war-on-fake-photos

We've heard warnings about new tech leading to waves of misinfo before. GPT-2 in 2019, LLaMA in 2023, Pixel 9 this year, and even photo editing and re-touching back in 1912. None of the predicted waves of misinfo materialized.

16.12.2024 15:09 —

👍 9

🔁 5

💬 1

📌 0