Ever wanted to run MD simulations of entire proteins in water with DFT accuracy?

Meet AMPv3-BMS25, the latest iteration of our AMP multiscale neural network potential by

@rinikerlab.bsky.social

Read more in the preprints:

doi.org/10.26434/che...

doi.org/10.26434/che...

12.01.2026 06:48 — 👍 14 🔁 5 💬 1 📌 0

I'll be at Pacifichem this week, hit me up if you wanna have a coffee! ☕

15.12.2025 17:22 — 👍 1 🔁 0 💬 0 📌 0

Join us today from 4:30 to 7:30 PM @neuripsconf.bsky.social Hall C,D,E #1006 for our poster on SmoothDiff, a novel XAI method leveraging automatic differentiation.

🧵1/6

03.12.2025 19:36 — 👍 11 🔁 6 💬 1 📌 0

I'm in San Diego this week for NeurIPS, hit me up if you wanna have a coffee ☕

02.12.2025 04:29 — 👍 1 🔁 0 💬 0 📌 0

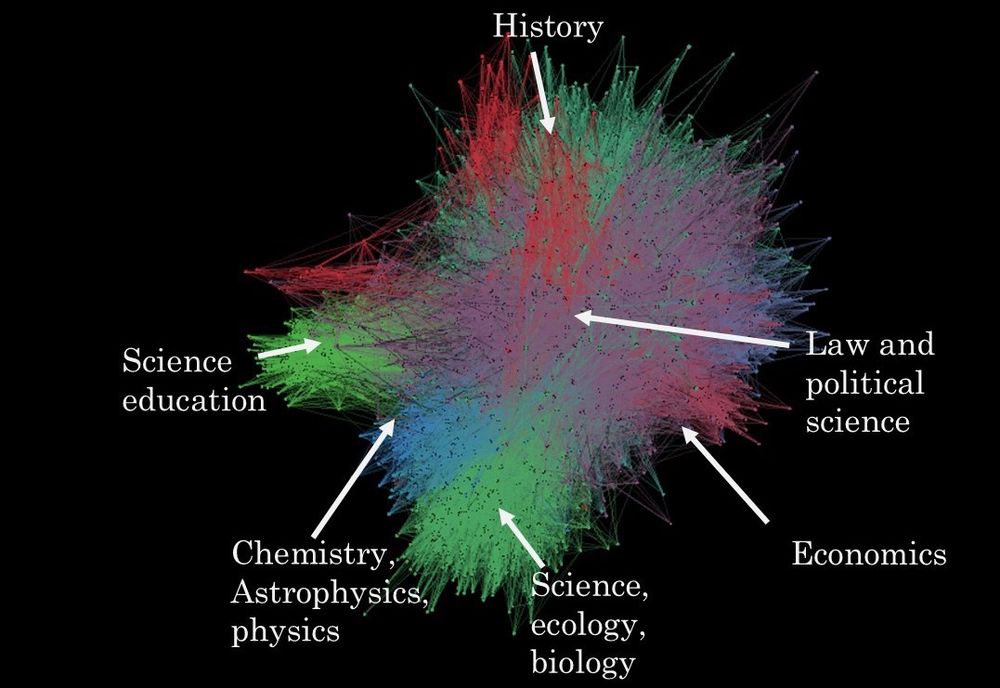

physics is a branch of chemistry

18.11.2025 16:35 — 👍 2 🔁 0 💬 0 📌 0

I'm in Oslo this week for WATOC, hit me up if you wanna have a coffee ☕

22.06.2025 20:22 — 👍 1 🔁 0 💬 1 📌 0

GitHub - khaledkah/tv-snr-diffusion


9/ Check it yourself:

🔗: github.com/khaledkah/tv...

📄: www.arxiv.org/abs/2502.08598

Thanks to Khaled, Winnie, Oliver, Klaus, and Shin for the cool collaboration as well as @bifold.berlin, TU Berlin, RIKEN, and DeepMind

12.03.2025 15:55 — 👍 2 🔁 1 💬 0 📌 0

8/ Takeaway: Exploding TV isn’t needed. Control TV + SNR separately for faster, better sampling. Method generalizes across domains (molecules, images).

12.03.2025 15:55 — 👍 1 🔁 0 💬 1 📌 0

7/ Why does it work? Our empirical analysis shows:

1. Straight trajectories near data (t ≈ 0) are important (see the inset plot)

2. Broad support of pₜ(𝐱) early on → robust to errors (note how SMLD goes from small to huge range instead of staying the same)

12.03.2025 15:55 — 👍 0 🔁 0 💬 1 📌 0

6/ Images: Matches EDM with uniform grid

No fancy time grids like in EDM needed! VP-ISSNR on CIFAR-10/FFHQ ≈ EDM but with fewer hyperparameters!

12.03.2025 15:55 — 👍 0 🔁 0 💬 1 📌 0

5/ Molecules in 8 Steps:

VP-ISSNR achieves 74% stability with 8 steps, 95% with 64 (SDE). Beats all baselines!

12.03.2025 15:55 — 👍 0 🔁 0 💬 1 📌 0

4/ We propose a new VP schedule 📈:

Exponential inverse sigmoid SNR (ISSNR) → rapid decay at start/end. Generalizes Optimal Transport Flow Matching.

12.03.2025 15:55 — 👍 1 🔁 0 💬 1 📌 0

3/ VP variants improve existing schedules:

Take SMLD/EDM (exploding TV) → force TV=1. Result: +30% stability for molecules with 8 steps

(x-axis is NFE=number of function evals).
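The "force TV=1" step can be sketched in a few lines. This is only an illustration under stated assumptions, not the paper's code: it assumes a forward process x_t = a_t·x_0 + b_t·ε with TV = a_t² + b_t² and SNR = a_t²/b_t², and the helper name `coefficients_from_tv_snr` and the sigma values are made up for the example.

```python
import numpy as np

def coefficients_from_tv_snr(tv, snr):
    """Return (a, b) such that a**2 + b**2 == tv and a**2 / b**2 == snr.

    Assumes x_t = a * x_0 + b * eps (hypothetical helper, not from the paper's repo).
    """
    tv = np.asarray(tv, dtype=float)
    snr = np.asarray(snr, dtype=float)
    a = np.sqrt(tv * snr / (1.0 + snr))  # signal scale
    b = np.sqrt(tv / (1.0 + snr))        # noise scale
    return a, b

# Variance-exploding (SMLD-style) schedule: x_t = x_0 + sigma_t * eps.
sigma = np.geomspace(0.01, 50.0, num=8)  # illustrative values only
snr = 1.0 / sigma**2                     # a_t = 1, b_t = sigma_t
tv_exploding = 1.0 + sigma**2            # TV grows with sigma_t

# VP variant: keep the same SNR schedule, but force TV = 1 at every step.
a_vp, b_vp = coefficients_from_tv_snr(np.ones_like(sigma), snr)
```

Passing `tv_exploding` instead of ones recovers a_t = 1 and b_t = sigma_t, so both schedules share one SNR curve and differ only in TV.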

12.03.2025 15:55 — 👍 0 🔁 0 💬 1 📌 0

1/ Problem: Diffusion models are slow due to repeated evals but reducing steps hurts quality if the noise schedule isn’t optimal. Other schedules passively adjust variance. Can we do better?

🔑 Insight: control Total Variance (TV) and signal-to-noise ratio (SNR) independently!

12.03.2025 15:55 — 👍 0 🔁 0 💬 1 📌 0

We have a new paper on diffusion!📄

Faster diffusion models with total variance/signal-to-noise ratio disentanglement! ⚡️

Our new work shows how to generate stable molecules sometimes in as few as 8 steps and match EDM’s image quality with a uniform time grid. 🧵

12.03.2025 15:55 — 👍 5 🔁 1 💬 1 📌 0

We are already on Day 3 of the workshop "Density Functional Theory and Artificial Intelligence learning from each other" in sunny CECAM-HQ.

This afternoon, Fang Liu (Emory University) & Michael Herbst (EPFL) will present their talks.

05.03.2025 10:48 — 👍 6 🔁 1 💬 0 📌 0

our GPU cluster tonight after the ICML deadline

31.01.2025 17:55 — 👍 4 🔁 0 💬 0 📌 0

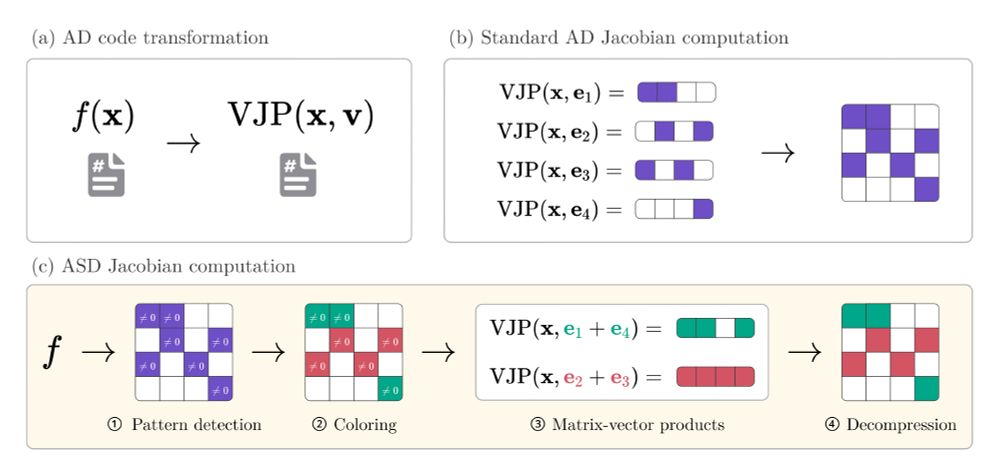

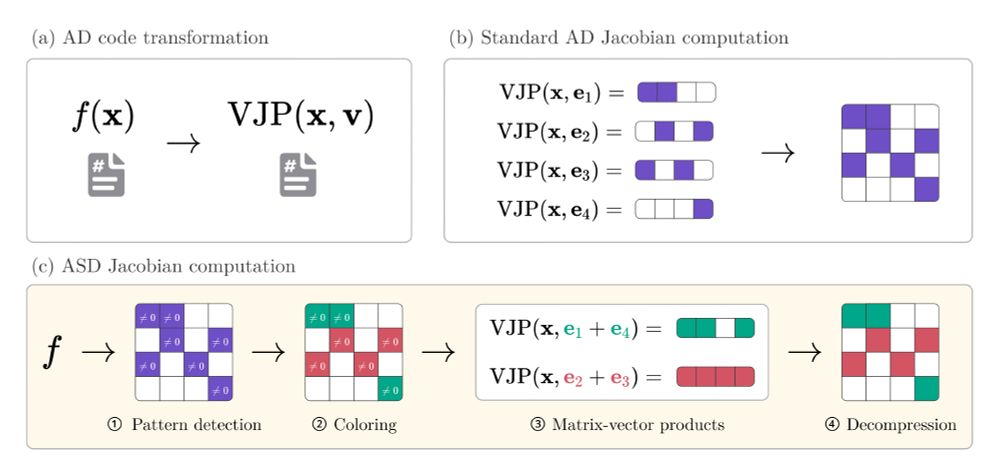

Figure comparing automatic differentiation (AD) and automatic sparse differentiation (ASD).

(a) Given a function f, AD backends return a function computing vector-Jacobian products (VJPs). (b) Standard AD computes Jacobians row-by-row by evaluating VJPs with all standard basis vectors. (c) ASD reduces the number of VJP evaluations by first detecting a sparsity pattern of non-zero values, coloring orthogonal rows in the pattern and simultaneously evaluating VJPs of orthogonal rows. The concepts shown in this figure directly translate to forward-mode, which computes Jacobians column-by-column instead of row-by-row.
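The idea in panels (b) and (c) can be sketched with plain NumPy. Everything here is an assumption made for illustration: the toy function `f`, its hand-written `vjp` (standing in for an AD backend), the hand-specified sparsity pattern (a real ASD pipeline would detect it), and the greedy row coloring. It is not the implementation from the preprint.

```python
import numpy as np

def f(x):
    # Toy function: f_i = x_i**2 + x_{i+1}, so the Jacobian is bidiagonal.
    y = x**2
    y[:-1] += x[1:]
    return y

def vjp(x, v):
    # Hand-written vector-Jacobian product v^T J(x) for f (stand-in for AD).
    out = 2.0 * x * v
    out[1:] += v[:-1]
    return out

def jacobian_dense(x):
    # Standard AD: one VJP per row, using every standard basis vector.
    return np.stack([vjp(x, e) for e in np.eye(x.size)])

def color_rows(pattern):
    # Greedy coloring: rows sharing a nonzero column get different colors.
    colors = -np.ones(pattern.shape[0], dtype=int)
    for i in range(pattern.shape[0]):
        conflicts = pattern[:, pattern[i]].any(axis=1)
        used = set(colors[conflicts & (colors >= 0)])
        c = 0
        while c in used:
            c += 1
        colors[i] = c
    return colors

def jacobian_sparse(x, pattern):
    # ASD: one VJP per color, then decompress using the known pattern.
    colors = color_rows(pattern)
    n_vjps = int(colors.max()) + 1
    J = np.zeros(pattern.shape)
    for c in range(n_vjps):
        seed = (colors == c).astype(float)  # sum of basis vectors of one color
        compressed = vjp(x, seed)
        for i in np.flatnonzero(colors == c):
            J[i, pattern[i]] = compressed[pattern[i]]
    return J, n_vjps

n = 6
x = np.arange(1.0, n + 1.0)
pattern = np.eye(n, dtype=bool) | np.eye(n, k=1, dtype=bool)  # given by hand here
J_dense = jacobian_dense(x)
J_sparse, n_vjps = jacobian_sparse(x, pattern)
```

For this bidiagonal pattern, two colors suffice, so the sparse route needs 2 VJPs where the dense route needs 6, and the count stays at 2 as `n` grows.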

You think Jacobian and Hessian matrices are prohibitively expensive to compute on your problem? Our latest preprint with @gdalle.bsky.social might change your mind!

arxiv.org/abs/2501.17737

🧵1/8

30.01.2025 14:32 — 👍 142 🔁 29 💬 3 📌 4

We submitted our stuff to ICML today at lunch and I am so happy about it. It's juicy, it might get rejected, but it has its heart in the right place :]

31.01.2025 14:39 — 👍 3 🔁 0 💬 1 📌 0

acab includes the raclette police 💡

25.12.2024 23:44 — 👍 4 🔁 0 💬 0 📌 0

i guess their claim would be that it blows up for mysterious NN reasons rather than integrator or time step. 2 fs is a bit of a chonky step, i agree, but if it explodes at say 0.1 fs i'd start wondering about the NN more than about the time step

17.12.2024 19:11 — 👍 0 🔁 0 💬 1 📌 0

stuff like this, (Ala)_2 at 2 fs or water at 1 fs? at <=0.5 fs they wouldn't explode for curl free forces, i assume?

17.12.2024 16:50 — 👍 0 🔁 0 💬 1 📌 0

why was that paper bad? i thought it was more of a benchmark than proposing their own thing anyways?

17.12.2024 16:14 — 👍 1 🔁 0 💬 1 📌 0

i raise you to ful midammis oml

26.11.2024 16:46 — 👍 0 🔁 0 💬 0 📌 0

Seems like it refers to DFT. They actually give an intuition from metallurgy in the appendix about simulated annealing. I didn't know it's about removing impurities 🙉

20.11.2024 18:44 — 👍 0 🔁 0 💬 0 📌 0

Excellent question. Alas, I can't say i like mcmc (or tmcmc?) as a word either. A string of non-descript names is just ... Eugh

20.11.2024 17:09 — 👍 0 🔁 0 💬 1 📌 0

Ah yes, 'annealing', i do it every day and have a super intuitive understanding of what it is. In fact, im annealing right now.

20.11.2024 16:23 — 👍 0 🔁 0 💬 1 📌 0

Is "simulated annealing" really the best word for what it describes?

20.11.2024 15:58 — 👍 1 🔁 0 💬 2 📌 0

Riniker research group, ETH Zurich

Senior Research Scientist @ Microsoft Research | PhD and postdoctoral studies @ ETH Zurich.

🎓👩🏻🎓 PhD | Curiosity-driven computational chemist | Unveiling the mysteries of biomolecules, compounds and chemical reactions ✨

Computational Chemist. Associate Professor at the University of Oxford. Organic Chemistry Fellow at Hertford College. 🇨🇱

Computational biochemist (ligand binding kinetics, crowding)

Junior group leader at the Technical University Berlin

Associate editor at the Journal of Chemical Information and Modeling

Brazilian in Germany

PhD Student in the Haas lab at Berlin Institute of Health, Charité Berlin @bihatcharite.bsky.social and MDC-BIMSB @mdc-bimsb.bsky.social

🫧 Single Cell Technologies

🩸 Hematology

🧪 Immunology

💻 Bioinformatics

Head of Div. Intelligent Medical Systems (IMSY) at DKFZ, director of the National Center of Tumor Diseases (NCT) Heidelberg and @ellis.eu Health board member. Excited about Medical Imaging AI, Surgical Data Science, and Validation of A(G)Is.

web @ https://argmin.xyz

interests: machine learning, ai4science, algorithms, coding

member of technical staff @ https://cusp.ai

past @ MSR, DeepMind, MPI-IS

home @ Heimbach (Gilserberg), Berlin, Europe

born @ 353 ppm

block toxicity

he/him

This account might not be very active for a while.

I'm a female (yes, feminist) mathematician and a physicist with growing interests in Astrophysics, Materials Science and all areas of Earth Science.

Computational chemist 🖥️ ⌬👩🏻🔬🧬 | Fifth-year i2 BS-MS Student |

IISER TVM

Promoting fundamental research on advanced computational methods

Professor at the University of Chicago. Previously at U Minnesota and U Geneva. From Italy. I value a culture of diversity and solidarity.

https://gagliardigroup.uchicago.edu/

We perform research in theoretical chemistry, specifically classical and quantum statistical mechanics, mathematical approaches, and machine learning methods applied to condensed phases.

PhD Student @TU Berlin @BIFOLD working on ML applications for chemistry

Associate Professor

Dept. of Chemistry, Grad. School of Science, The University of Osaka

Organic Chemistry, Coordination Chemistry, Supramolecular Chemistry, Porphyrinoid

https://orcid.org/0000-0001-7270-1234

A #philosophy podcast for surviving the worst possible timelines. Hosted by ethicist aaronrabinowitz.net. Ethics director and credentialed creator at creatoraccountabilitynetwork.org. Obsessed with luck. Sibling show: https://0gphilosophy.libsyn.com/

Associate Prof at Georgia Tech. Low-cost semiconductors #solar #optoelectronics. Previously: UConn -> EPFL -> MIT. Opinions my own. He/his

Chair of Theoretical Chemistry and Head of the School of Chemistry at Trinity College Dublin