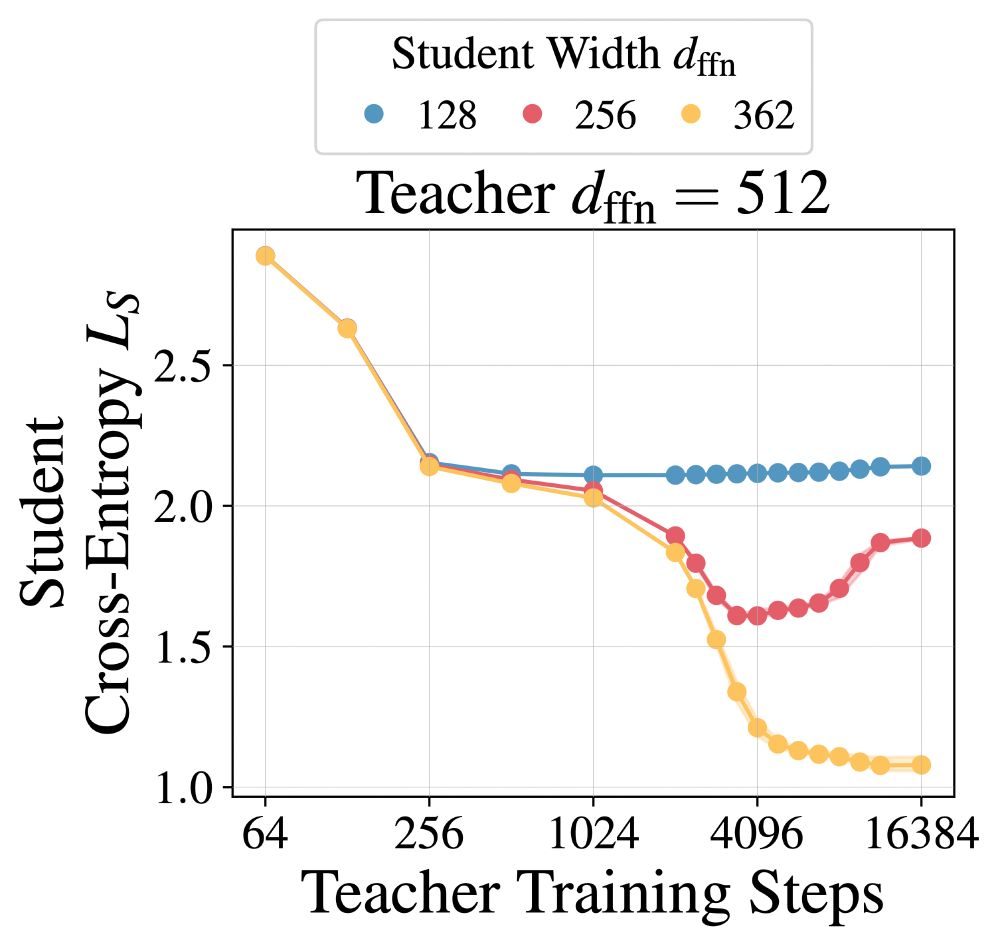

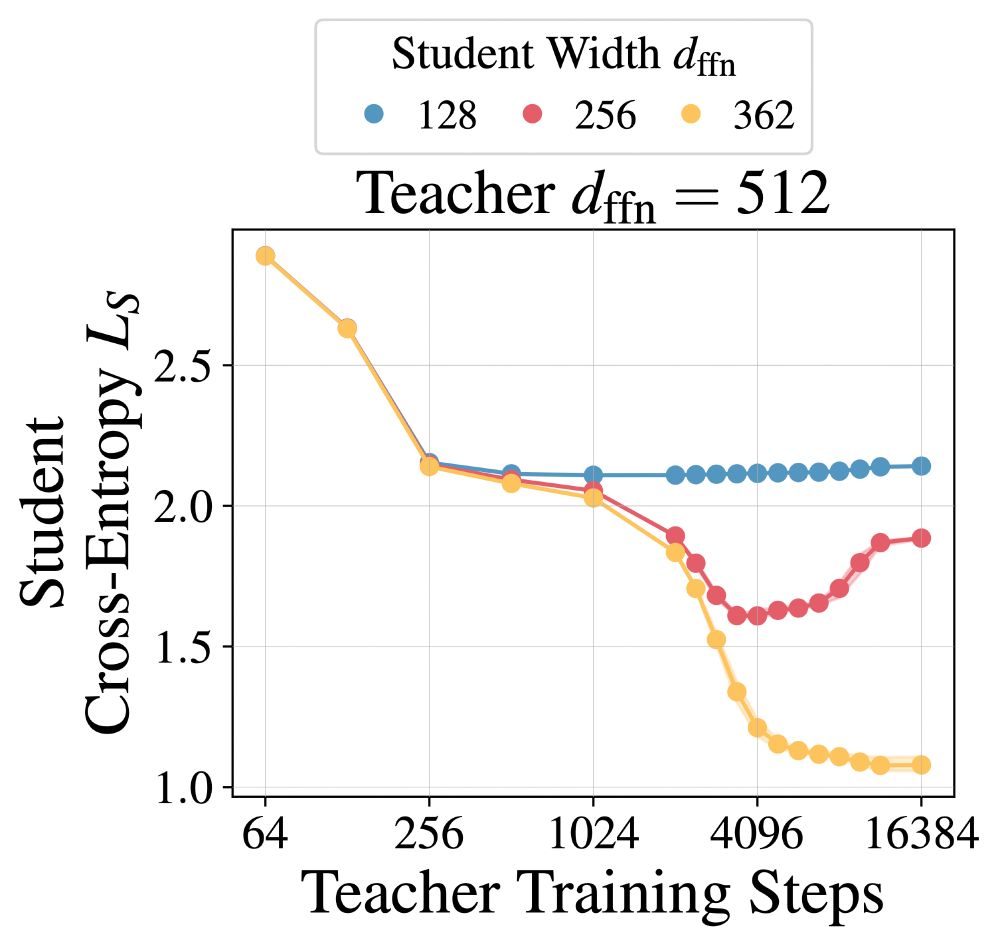

We do observe that students improve with longer distillation training (i.e., patient teaching works). Additionally, with particularly long distillation durations, we approach what supervised learning can achieve (as limited by model capacity, in our experimental setting).

13.02.2025 21:52 — 👍 1 🔁 0 💬 0 📌 0

In contrast, our teachers and students are trained on the same data distribution, and we compare with supervised models that can access the same distribution. This lets us make statements about what distillation can do given access to the same resources.

13.02.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

I.e., Beyer et al.'s students do not see the teacher training distribution, and there is no supervised baseline where, for example, a model has access to both INet21k and the smaller datasets. This type of comparison was not the focus of their work.

13.02.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

Beyer et al.'s teachers are trained on a large, diverse dataset (e.g., INet21k) then fine-tuned for the target datasets (e.g., Flowers102 or ImageNet1k). Students are distilled on the target datasets and only access the teacher's training distribution indirectly.

13.02.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

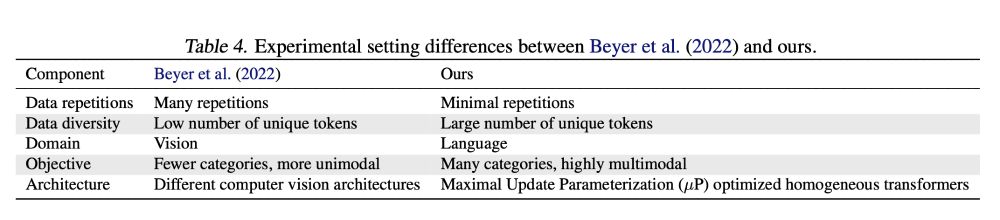

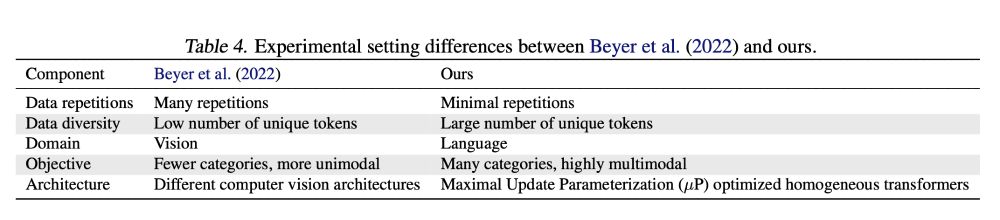

These differences, outlined in Table 4, relate primarily to the relationship between student and teacher.

13.02.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

As is often the case with distillation, the situation is nuanced, which is why we provide a dedicated discussion in Appendix D.1.

The key message is that despite an apparent contradiction, our findings are consistent because our baselines and experimental settings differ.

13.02.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

Several people have asked me to comment further on the connection between our work and the Patient and Consistent Teachers study by Beyer et al., as in Section 5.1 we note that our findings appear to contradict theirs.

arxiv.org/abs/2106.05237

13.02.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

This investigation answered many long-standing questions I’ve had about distillation. I am deeply grateful for my amazing collaborators Amitis Shidani, Floris Weers, Jason Ramapuram, Etai Littwin, and Russ Webb, whose expertise and support brought this project to life.

13.02.2025 21:50 — 👍 0 🔁 0 💬 0 📌 0

So, distillation does work.

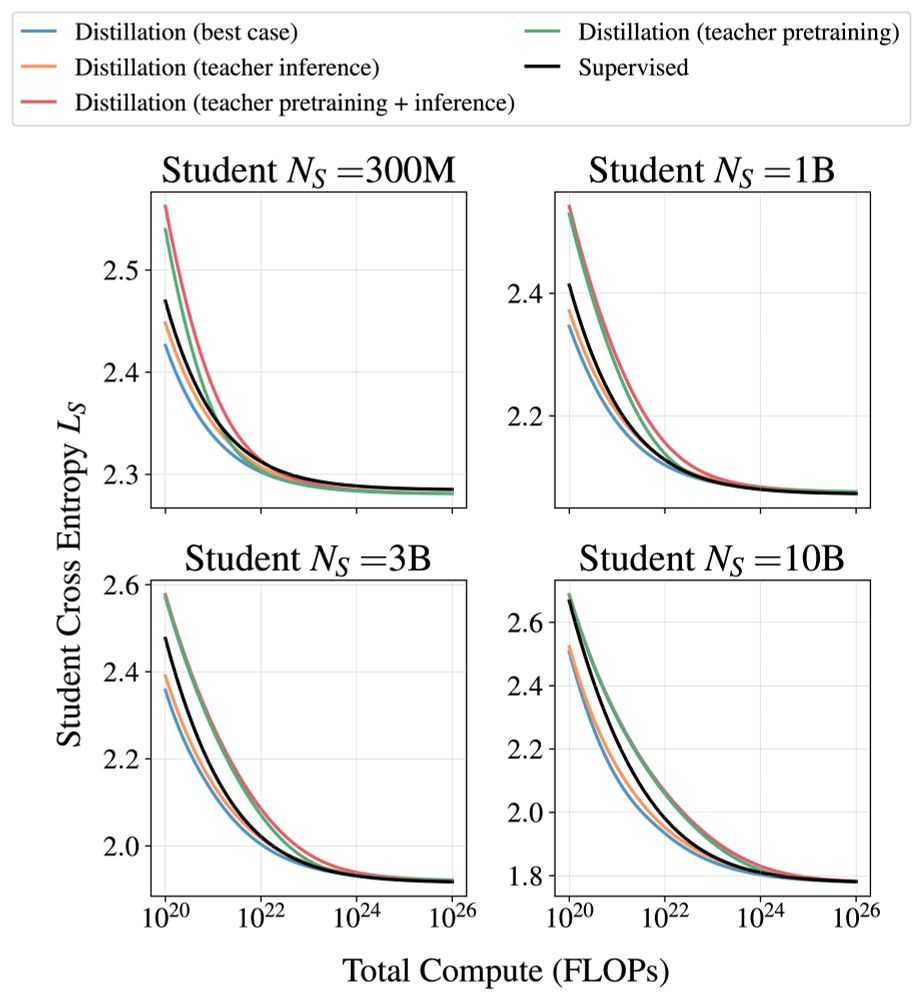

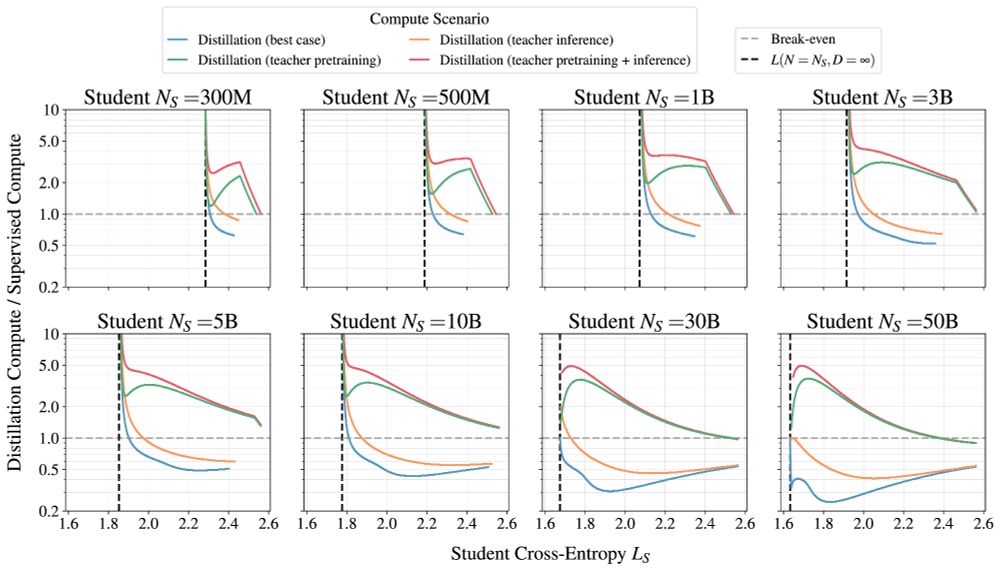

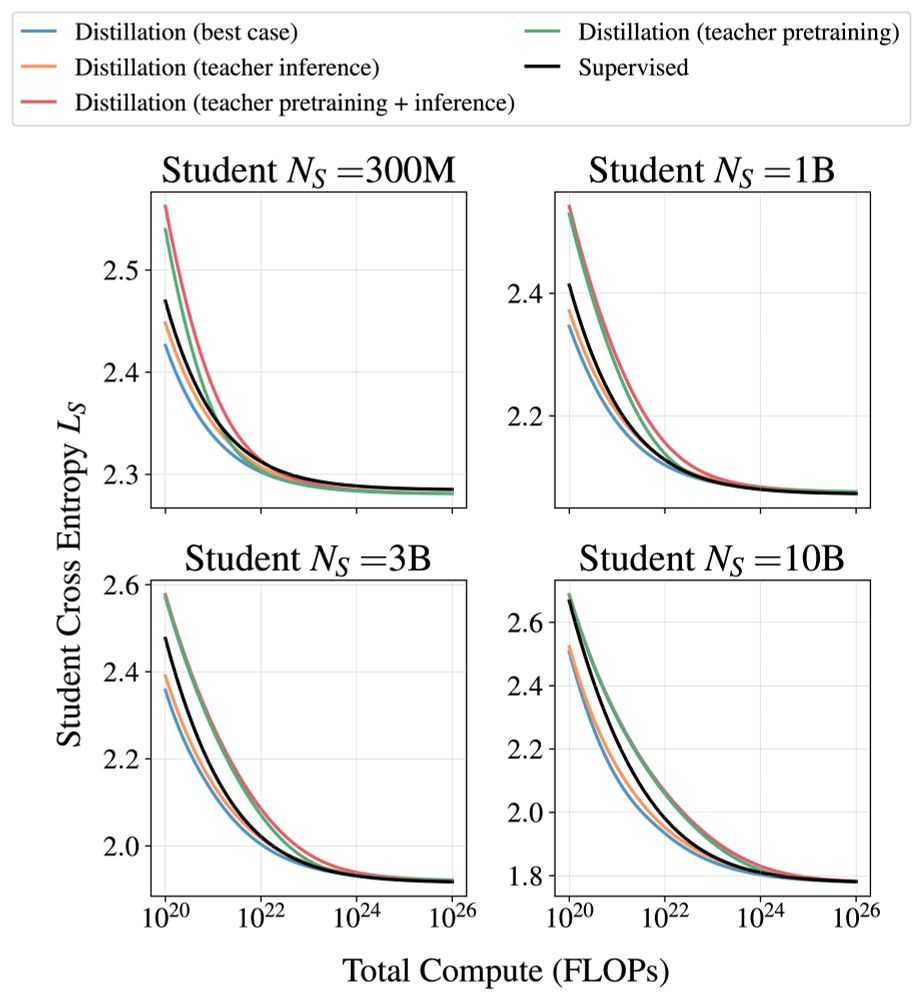

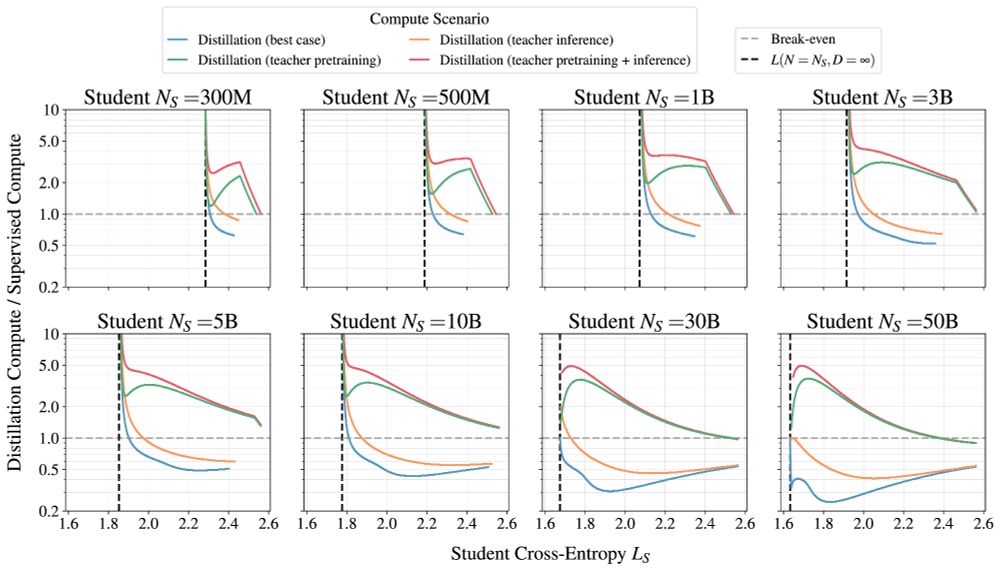

If you have access to a decent teacher, distillation is more efficient than supervised learning. Efficiency gains lessen with increasing compute.

If you need to train a teacher, it may not be worth it, depending on other uses for the teacher.

13.02.2025 21:50 — 👍 1 🔁 0 💬 1 📌 0

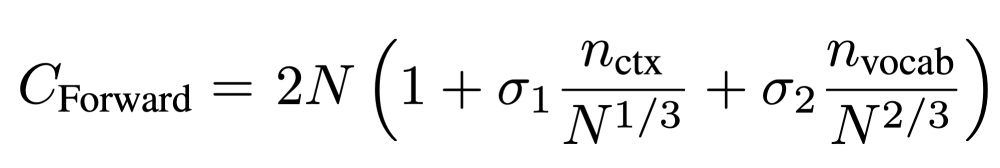

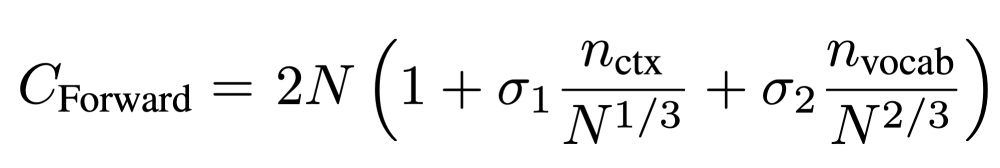

5. Using fixed aspect ratio (width/depth) models enables using N as non-embedding model parameters, and allows a simple, accurate expression for FLOPs/token which works for large contexts. I think this would be useful for the community to adopt generally.

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

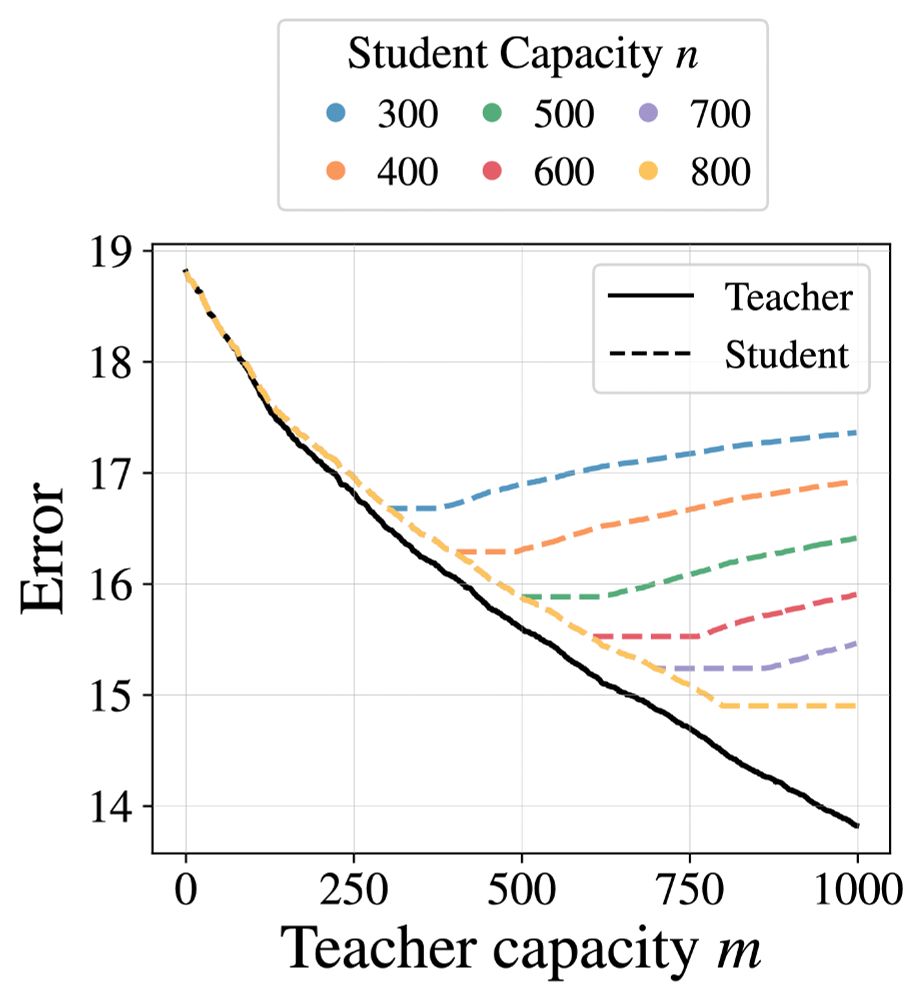

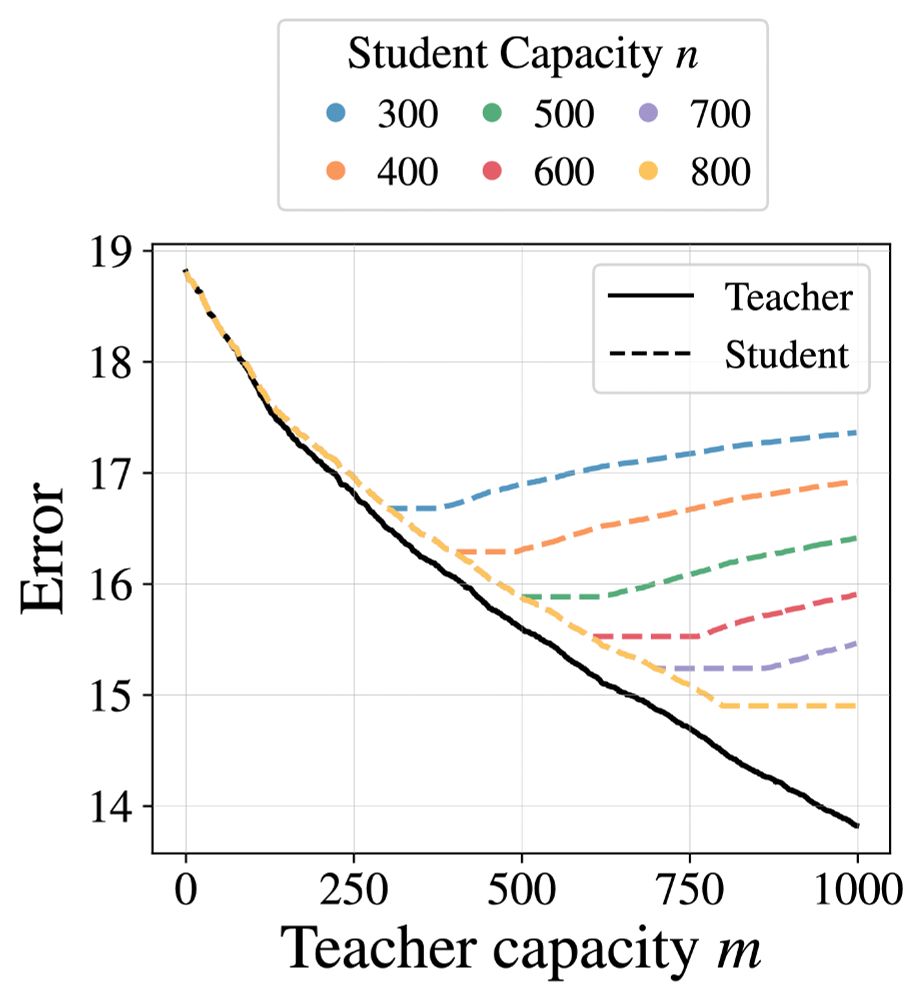

4. We present the first synthetic demonstrations of the distillation capacity gap phenomenon, using kernel regression (left) and pointer mapping problems (right). This will help us better understand why making a teacher too strong can harm student performance.

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

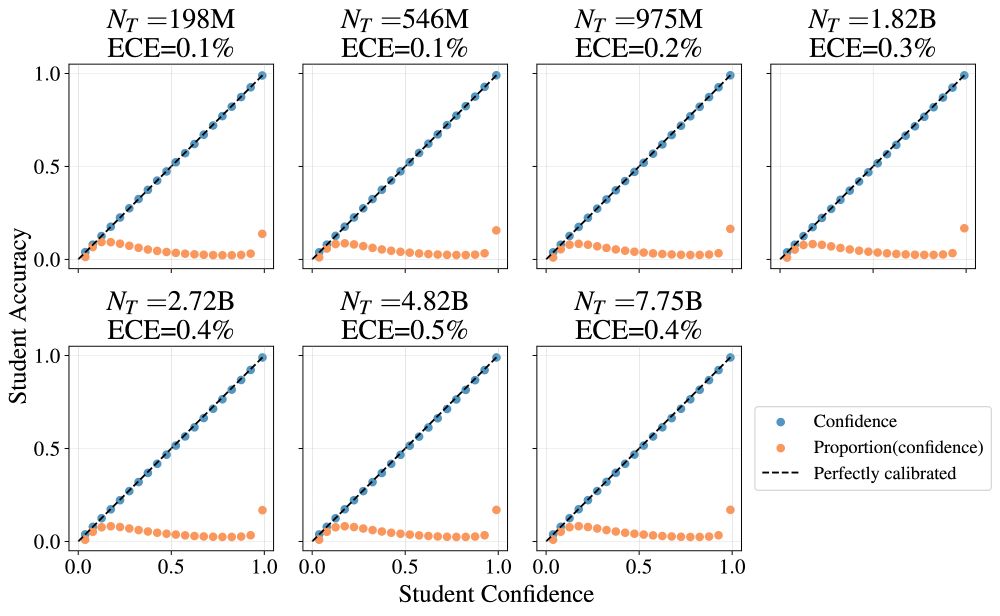

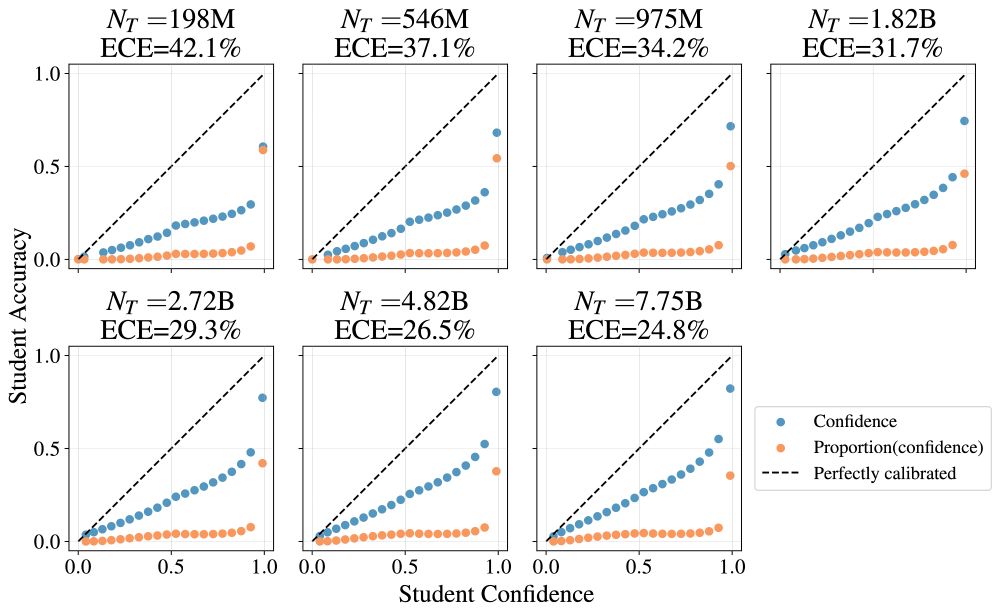

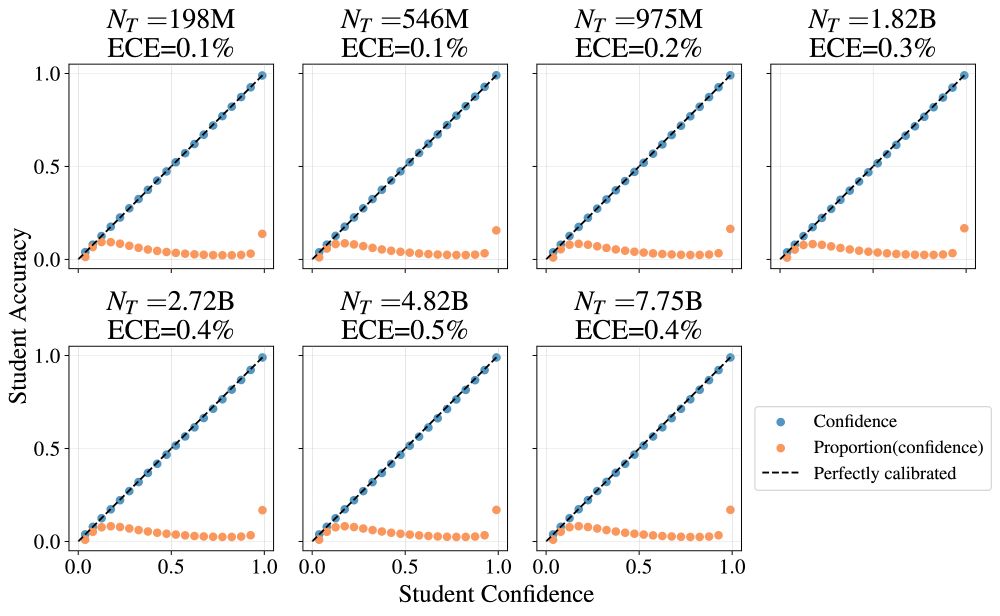

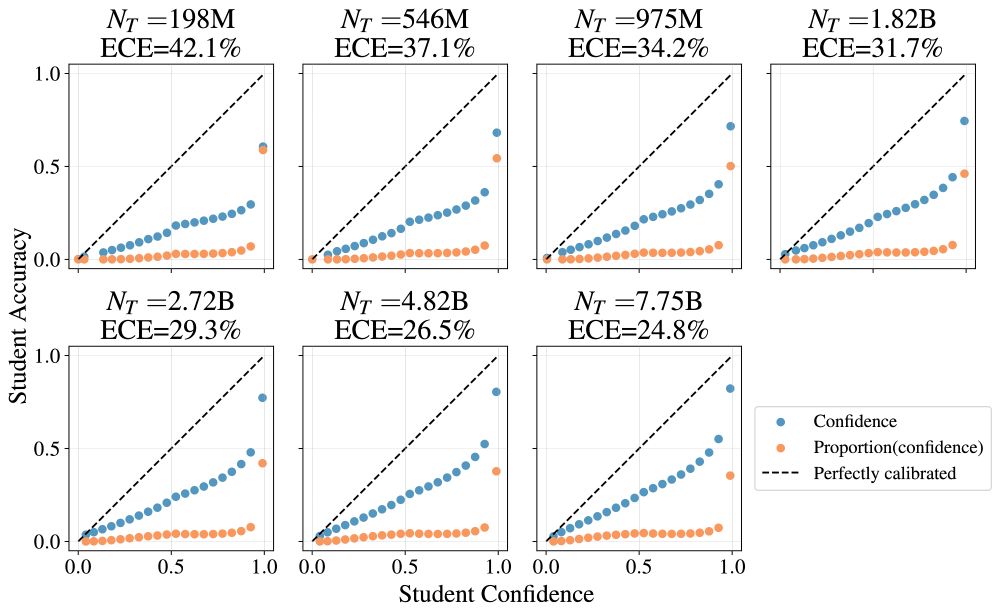

3. Training on the teacher logit signal produces a calibrated student (left) whereas training on the teacher top-1 does not (right). This can be understood using proper scoring metrics, but it is nice to observe clearly.
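
The proper-scoring argument can be illustrated with a toy example. The sketch below uses made-up probabilities (not values from the paper) and assumes "top-1" means a one-hot target at the teacher's argmax. Cross-entropy against the full teacher distribution is lowest when the student matches that distribution, while cross-entropy against the top-1 target rewards an overconfident student.

```python
import numpy as np

def cross_entropy(target, q):
    """Cross-entropy H(target, q) for a single categorical prediction."""
    return float(-(target * np.log(q)).sum())

# Hypothetical teacher distribution over three tokens (illustrative numbers).
teacher_probs = np.array([0.6, 0.3, 0.1])
# Top-1 target: one-hot vector at the teacher's most likely token.
top1_target = np.eye(3)[teacher_probs.argmax()]

# Two candidate students: one matching the teacher, one overconfident.
calibrated = teacher_probs.copy()
overconfident = np.array([0.98, 0.01, 0.01])

# Soft targets: the student that matches the teacher has the lower loss.
soft_prefers_calibrated = (
    cross_entropy(teacher_probs, calibrated)
    < cross_entropy(teacher_probs, overconfident)
)
# Top-1 targets: the overconfident student has the lower loss.
top1_prefers_overconfident = (
    cross_entropy(top1_target, overconfident)
    < cross_entropy(top1_target, calibrated)
)
print(soft_prefers_calibrated, top1_prefers_overconfident)
```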

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

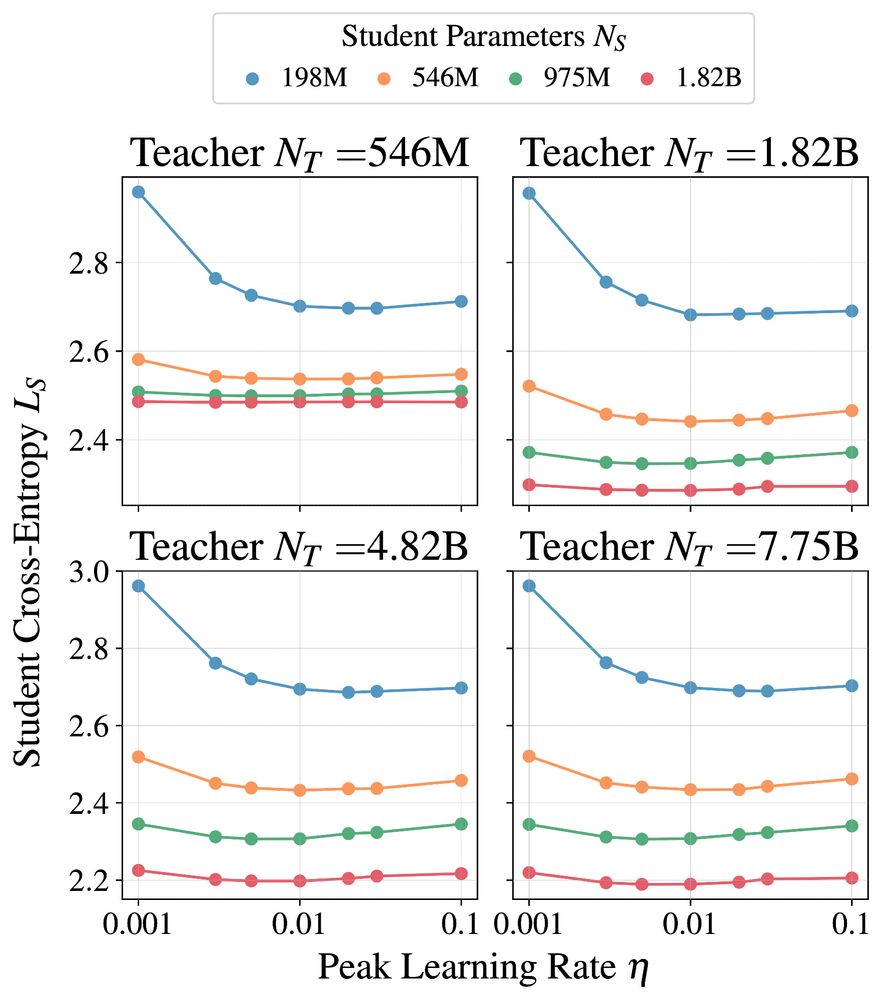

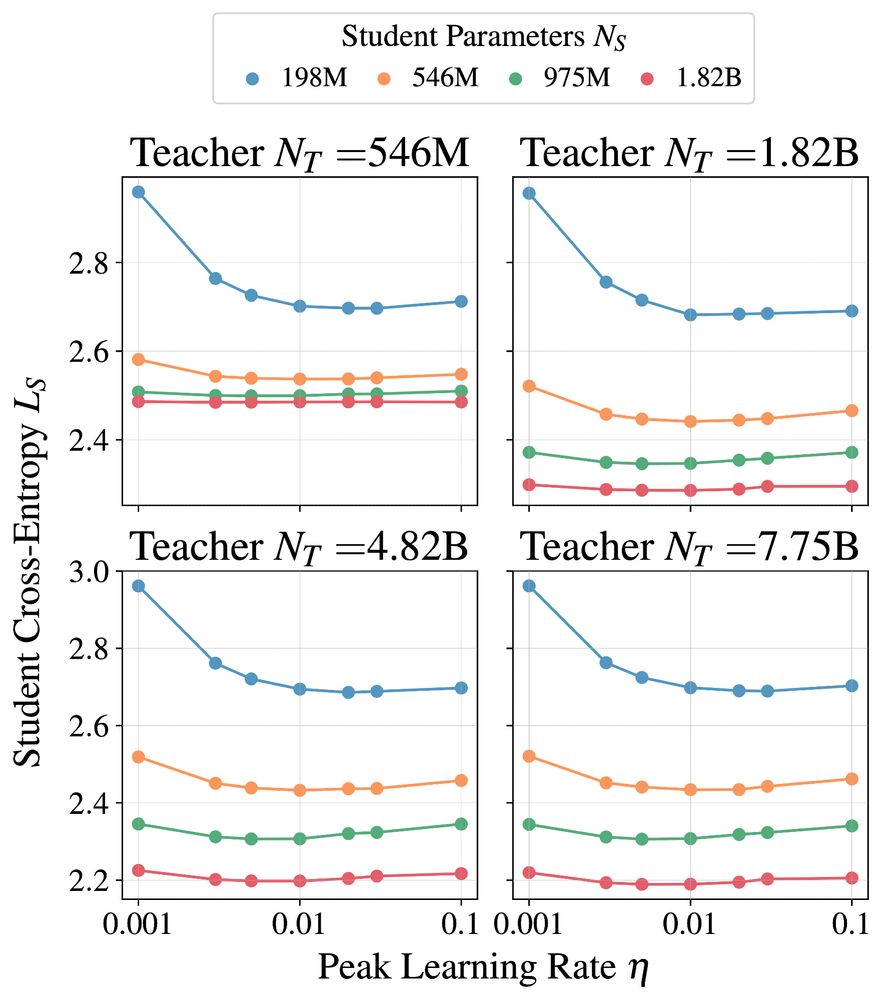

2. Maximum Update Parameterization (MuP, arxiv.org/abs/2011.14522) works out of the box for distillation. The distillation optimal learning rate is the same as the base learning rate for teacher training. This simplified our setup a lot.

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

We also investigated many other aspects of distillation that should be useful for the community.

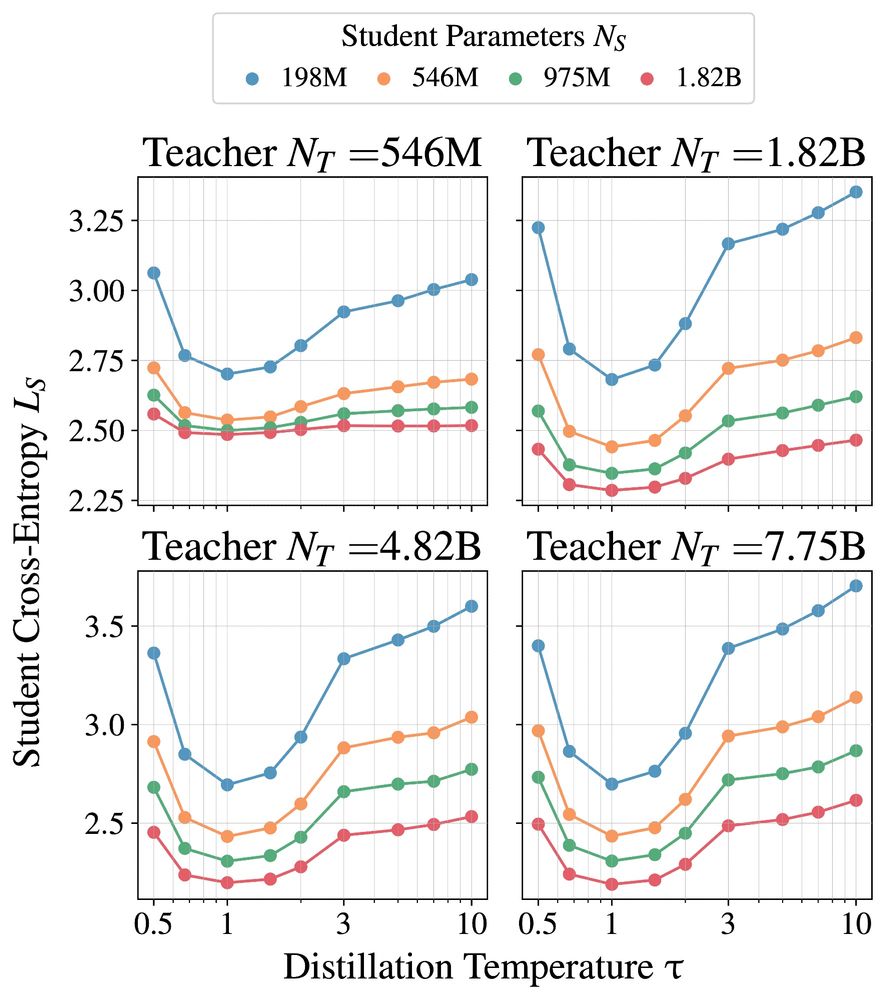

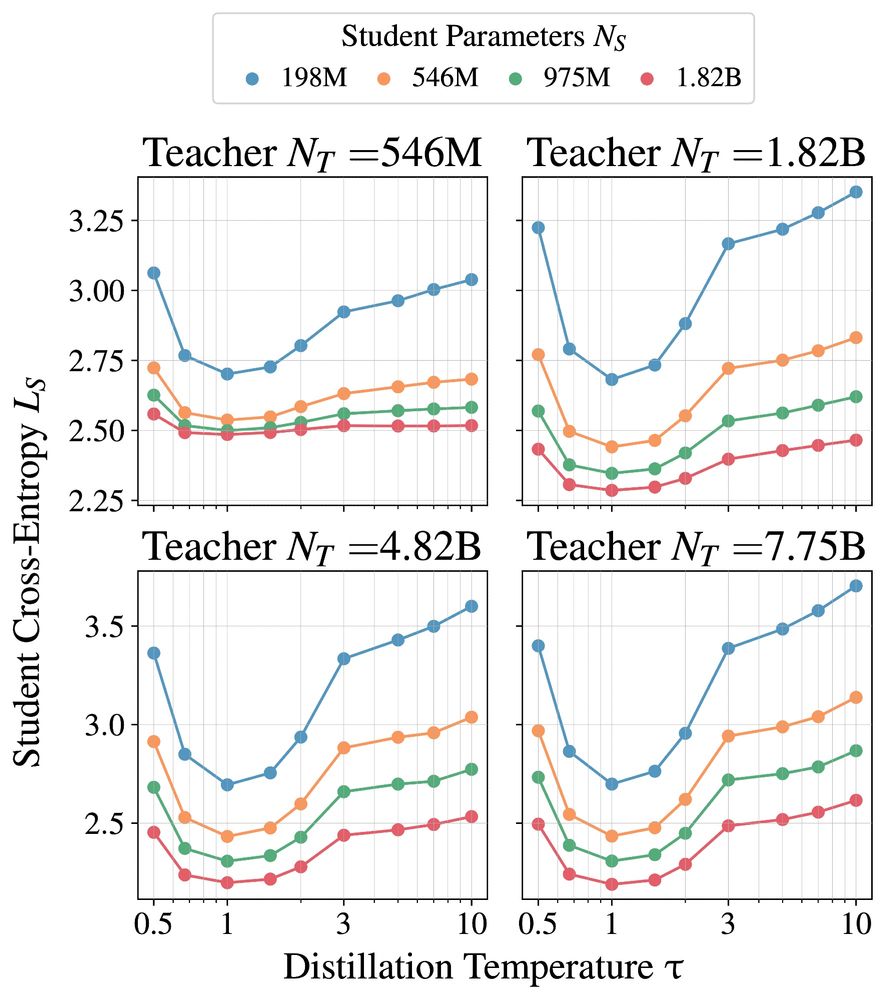

1. Language model distillation should use temperature = 1. Dark knowledge is less important, as the target distribution of natural language is already complex and multimodal.
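
For reference, a temperature-scaled distillation loss can be sketched as below. This is a generic NumPy illustration, not the paper's training code: the function names, the choice of KL(teacher || student) as the objective, and the example logits are all assumptions. Setting `temperature=1.0` leaves the teacher distribution unsoftened, which is the setting recommended above.

```python
import numpy as np

def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of logits / temperature."""
    z = np.asarray(logits, dtype=np.float64) / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """Mean KL(teacher || student) over tokens, both softened by `temperature`."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_q_student = log_softmax(student_logits, temperature)
    p_teacher = np.exp(log_p_teacher)
    kl_per_token = (p_teacher * (log_p_teacher - log_q_student)).sum(axis=-1)
    return float(kl_per_token.mean())

# Made-up logits for two tokens over a three-word vocabulary.
teacher = np.array([[2.0, 1.0, 0.1], [0.5, 0.5, 3.0]])
student = np.array([[1.5, 1.2, 0.3], [0.2, 0.9, 2.5]])

loss_t1 = distillation_loss(student, teacher, temperature=1.0)
loss_t4 = distillation_loss(student, teacher, temperature=4.0)
print(loss_t1, loss_t4)
```

Higher temperatures flatten both distributions, which emphasizes low-probability tokens (the "dark knowledge") at the cost of distorting the teacher's actual predictive distribution.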

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

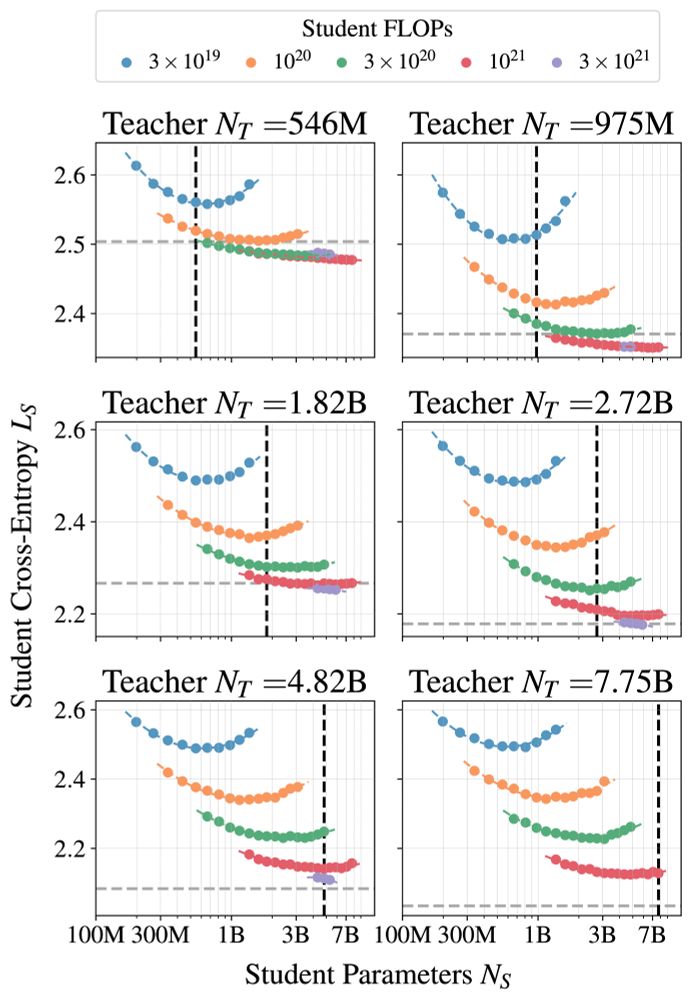

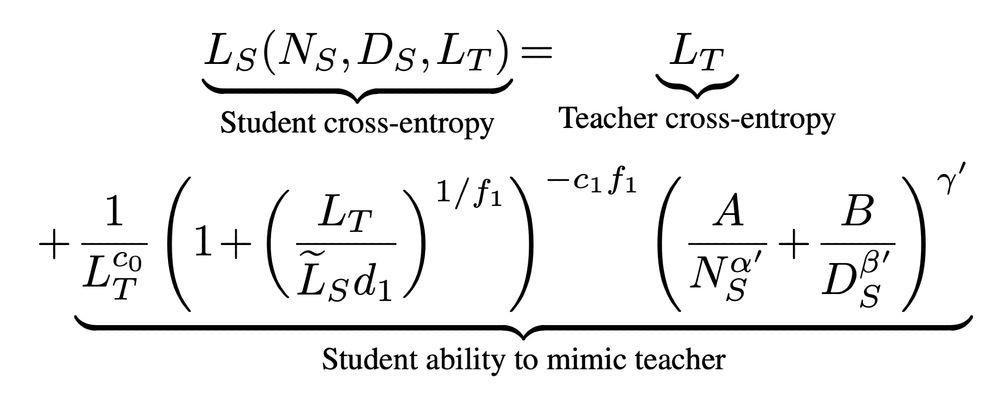

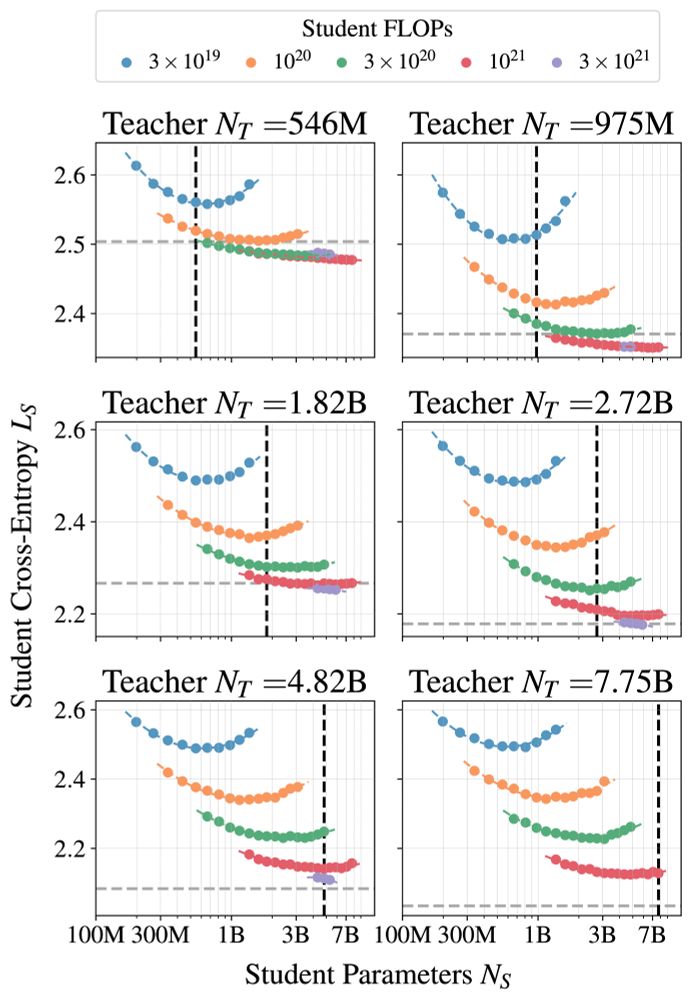

When using an existing teacher, distillation overhead comes from generating student targets. Compute-optimal distillation shows it's better to choose a smaller teacher, slightly more capable than the target student capability, rather than a large, powerful teacher.
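
To get a feel for this overhead, here is a back-of-the-envelope sketch. It assumes the common rule of thumb of roughly 6·N·D FLOPs for training (forward plus backward) and 2·N·D FLOPs for a forward pass; the paper uses its own FLOPs/token expression, which is not reproduced here. The function names and model sizes are hypothetical.

```python
def student_training_flops(n_student, tokens):
    """Approximate training cost: forward + backward pass, ~6 FLOPs per parameter per token."""
    return 6.0 * n_student * tokens

def teacher_inference_flops(n_teacher, tokens):
    """Approximate cost of generating targets: forward pass only, ~2 FLOPs per parameter per token."""
    return 2.0 * n_teacher * tokens

def distillation_overhead_fraction(n_student, n_teacher, tokens):
    """Fraction of total distillation compute spent on teacher forward passes."""
    teacher = teacher_inference_flops(n_teacher, tokens)
    student = student_training_flops(n_student, tokens)
    return teacher / (teacher + student)

# Hypothetical sizes: a 1B-parameter student distilled on 100B tokens.
same_size_teacher = distillation_overhead_fraction(1e9, 1e9, 1e11)
larger_teacher = distillation_overhead_fraction(1e9, 3e9, 1e11)
print(same_size_teacher, larger_teacher)
```

Under these assumptions the overhead fraction is N_T / (N_T + 3·N_S), independent of the token count, so a teacher three times the student's size already consumes half of the distillation compute.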

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

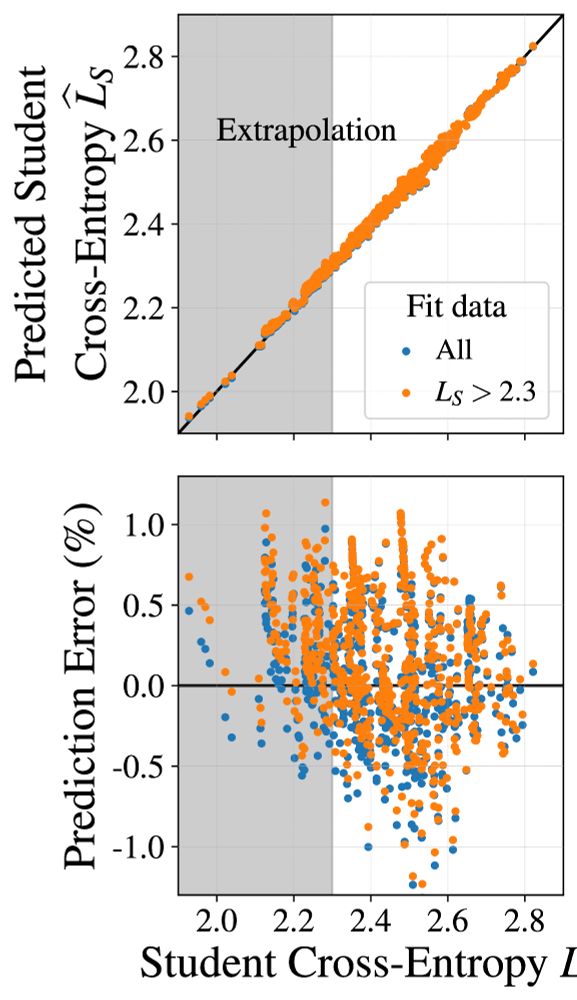

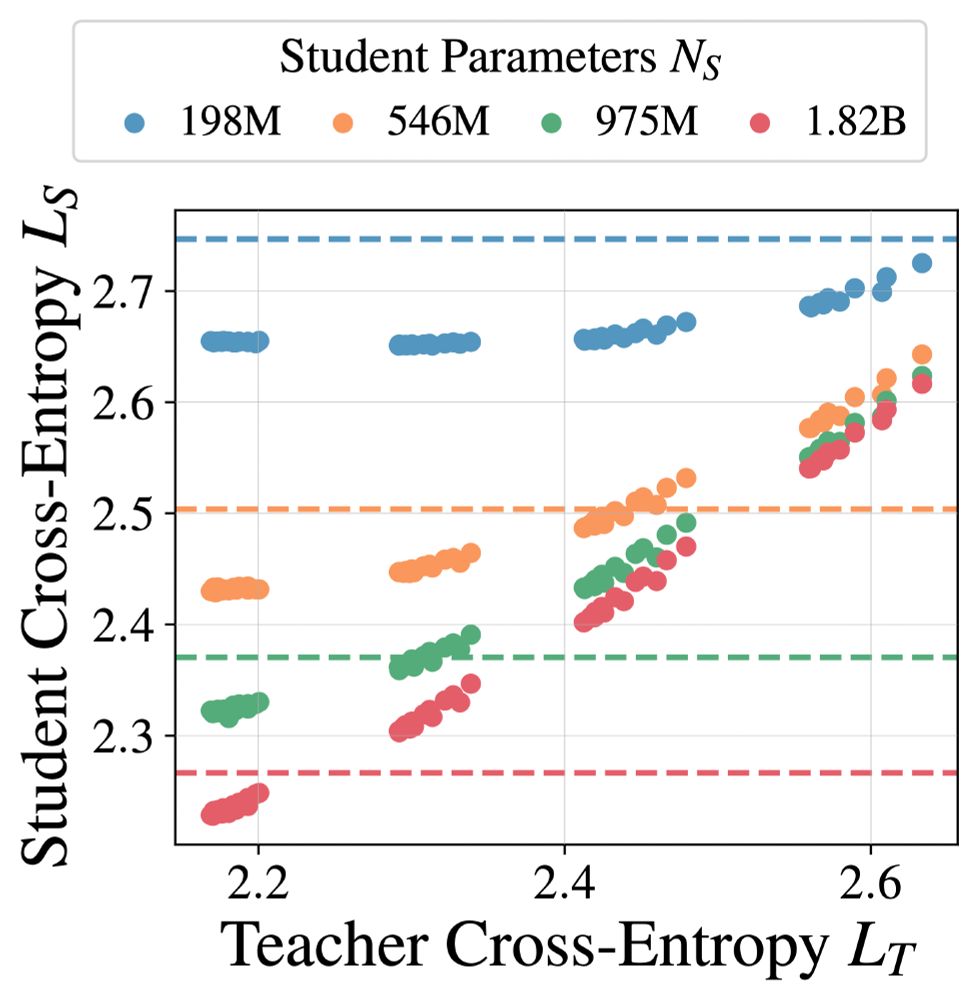

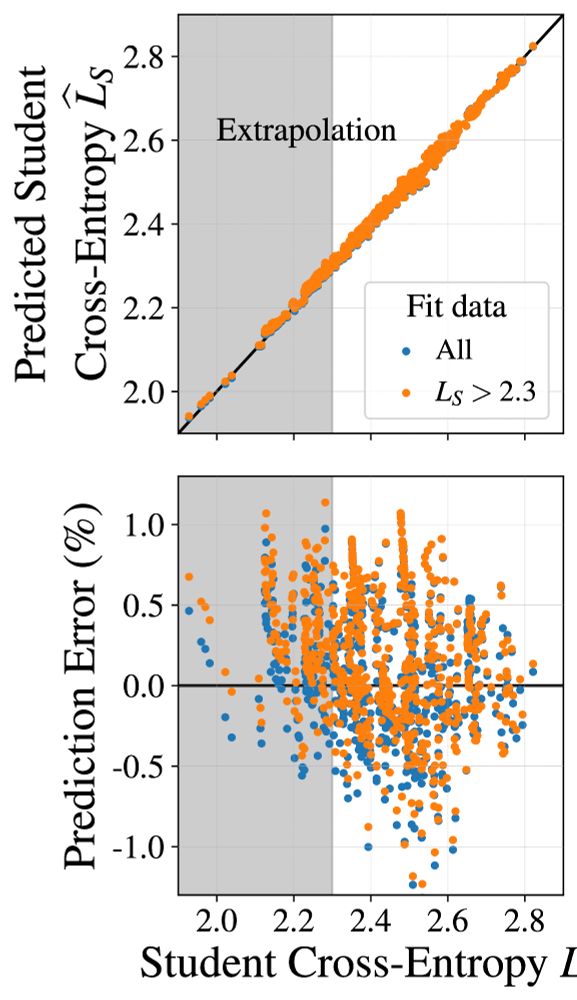

The distillation scaling law extrapolates with ~1% error in student cross-entropy prediction. We use our law in an extension of Chinchilla, giving compute-optimal distillation for settings of interest to the community, and our conclusions about when distillation is beneficial.

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

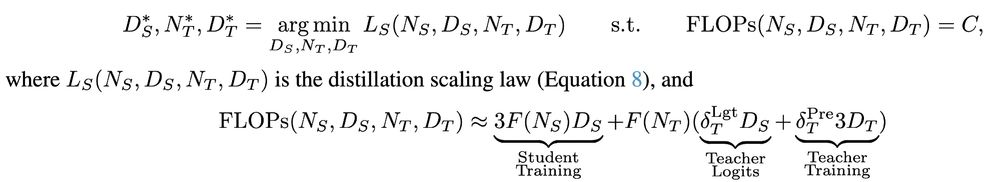

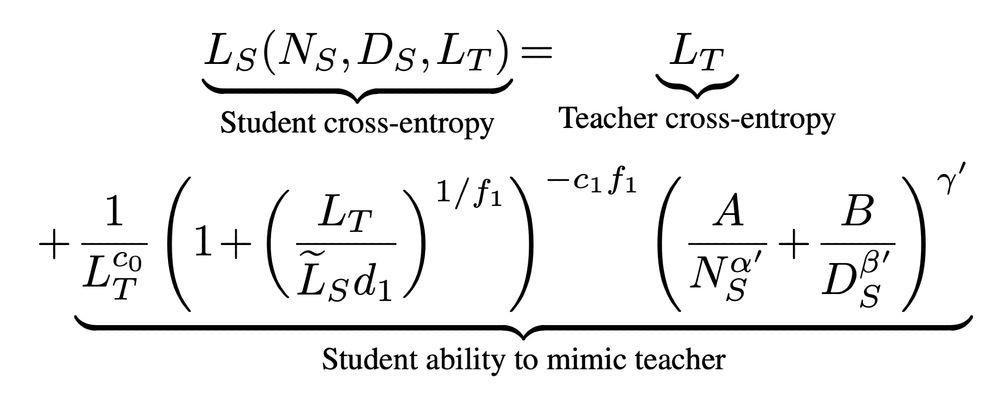

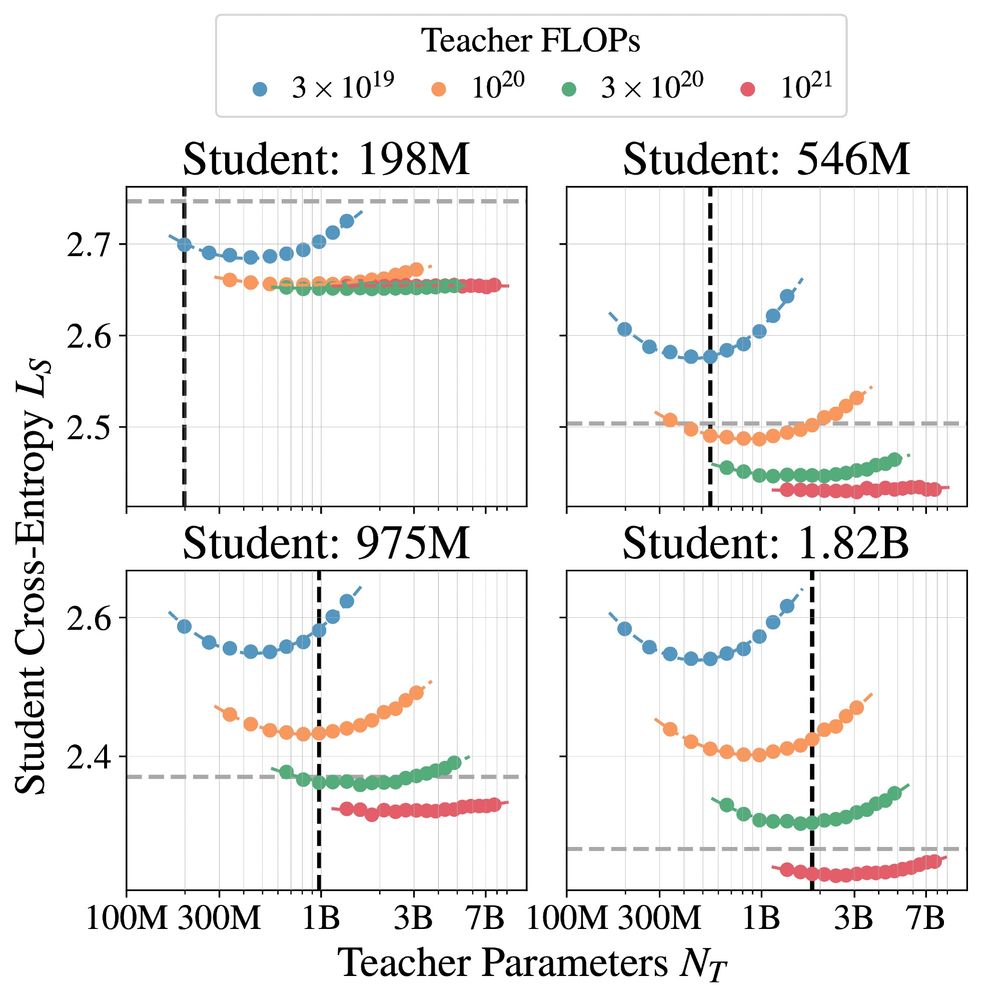

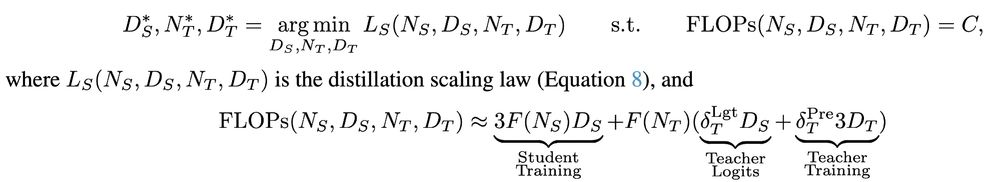

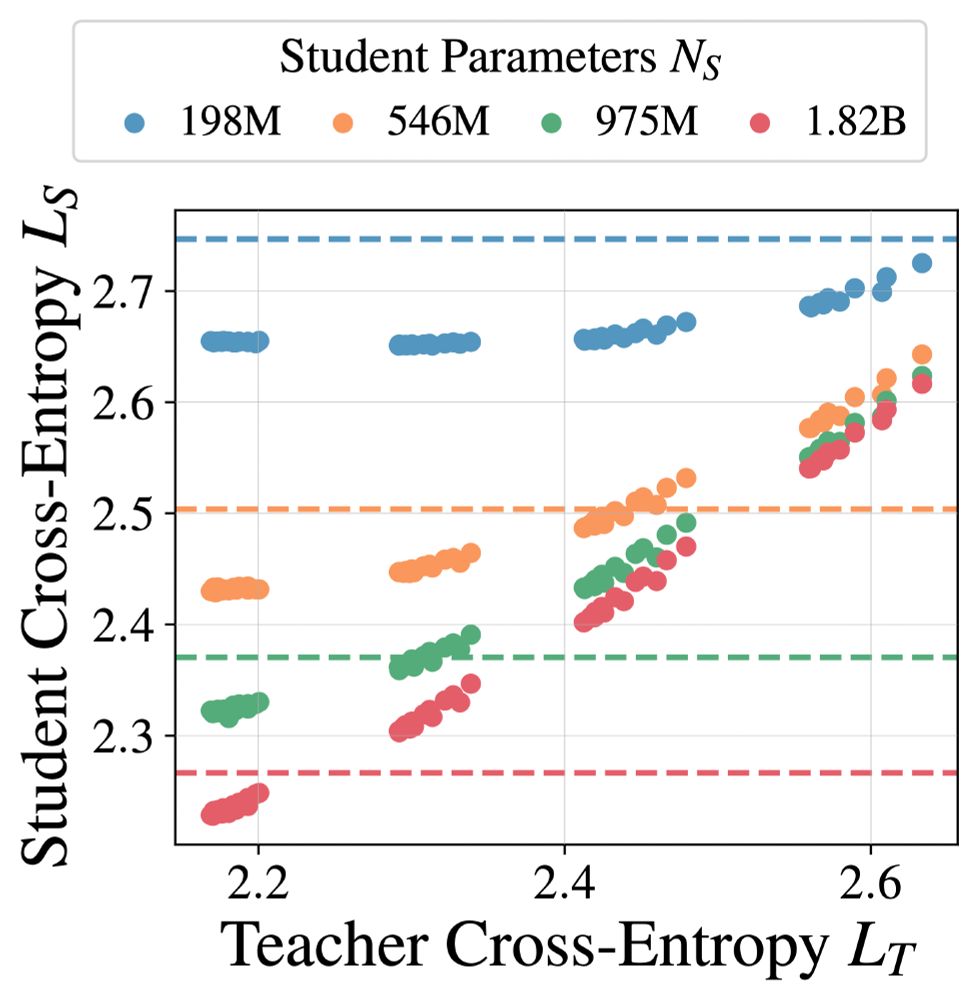

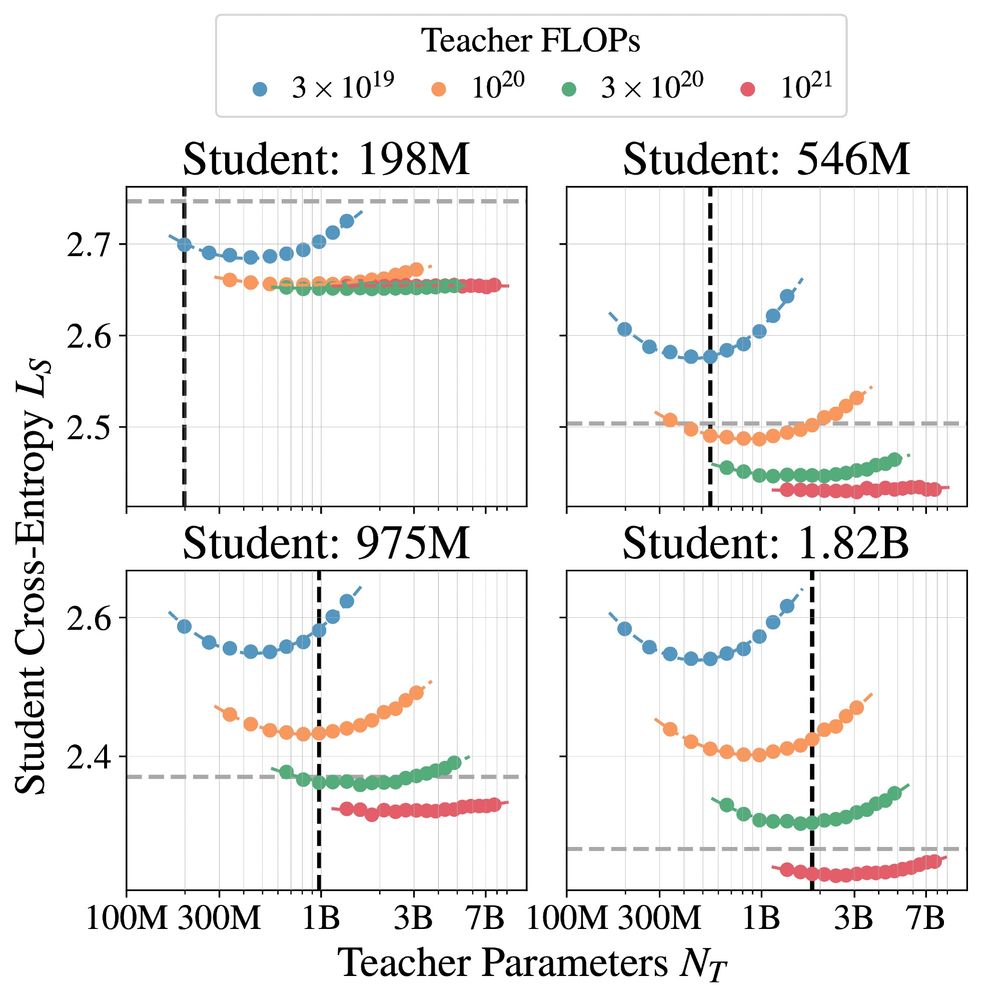

To arrive at our distillation scaling law, we noticed that teacher size influences student cross-entropy only through the teacher cross-entropy, leading to a scaling law in student size, distillation tokens, and the teacher cross-entropy. Teacher size isn't directly important.
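
Schematically, this dependence structure can be written as follows. The symbols are notation assumed here for illustration ($L_S$, $L_T$ for student and teacher cross-entropy, $N_S$, $N_T$ for parameter counts, $D_S$ for distillation tokens, $D_T$ for teacher training tokens), and $f$ and $g$ stand in for the fitted functional forms given in the paper:

$$
L_S = f(N_S, D_S, L_T), \qquad L_T = g(N_T, D_T)
\quad\Longrightarrow\quad
\frac{\partial L_S}{\partial N_T} = \frac{\partial f}{\partial L_T}\,\frac{\partial g}{\partial N_T}.
$$

Two teachers of different sizes that reach the same $L_T$ are therefore predicted to yield the same student cross-entropy.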

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

These results follow the largest study of distillation we are aware of, with 100s of student/teacher/data combinations, model sizes ranging from 143M to 12.6B parameters, and the number of distillation tokens ranging from 2B to 512B.

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

Compared to supervised training:

1. It is only more efficient to distill if the teacher training cost does not matter.

2. Efficiency benefits vanish when enough compute/data is available.

3. Distillation cannot produce lower cross-entropies when enough compute/data is available.

13.02.2025 21:50 — 👍 0 🔁 0 💬 1 📌 0

Distillation Scaling Laws

We provide a distillation scaling law that estimates distilled model performance based on a compute budget and its allocation between the student and teacher. Our findings reduce the risks associated ...

Reading "Distilling Knowledge in a Neural Network" left me fascinated and wondering:

"If I want a small, capable model, should I distill from a more powerful model, or train from scratch?"

Our distillation scaling law shows, well, it's complicated... 🧵

arxiv.org/abs/2502.08606

13.02.2025 21:50 — 👍 3 🔁 3 💬 1 📌 2
