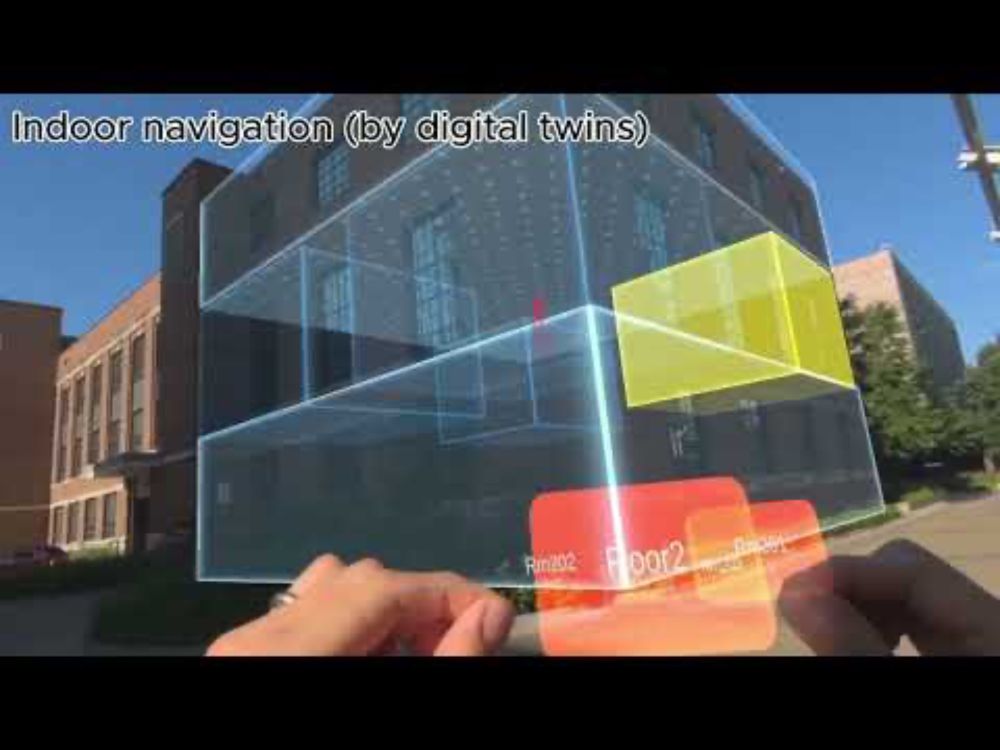

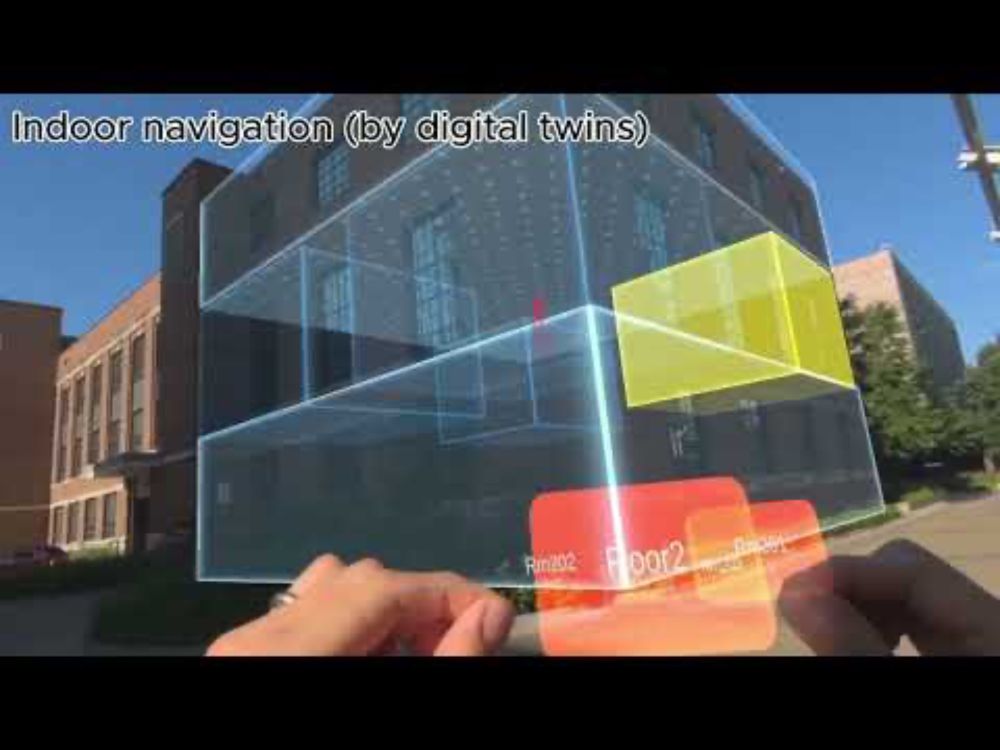

With that in mind, we envision Reality Proxy. Check out the video teaser of a world interactable via proxies: youtu.be/F2ul_68PrD0, and stay tuned for more at the ACM UIST 2025 conference in Busan, Korea.

Paper: lnkd.in/dFXdc2Bw

@twimar.bsky.social

Computer Scientist and Neuroscientist. Lead of the Blended Intelligence Research & Devices (BIRD) @GoogleXR. ex- Extended Perception Interaction & Cognition (EPIC) @MSFTResearch. ex- @Airbus applied maths

Over the last two years we have seen an acceleration in AI, but if AI is going to help humans in their day-to-day tasks, it will most probably be via XR. The issue, then, is that if a selection has real-world consequences, we will need great precision to interact.

05.08.2025 08:15 — 👍 9 🔁 1 💬 1 📌 0

At least they’re being honest, right?

03.05.2025 20:05 — 👍 48 🔁 4 💬 5 📌 2

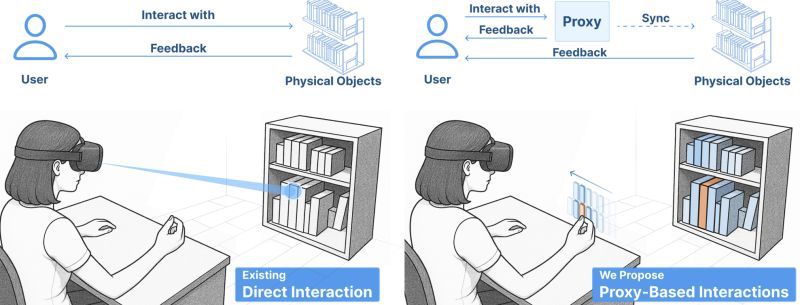

This precisely was something we tried to address and quantify in the VALID avatars. We found that ingroup participants were much better at determining the ethnicity of the avatars. And outgroups just had low accuracy labeling MENA, AIAN, NHPI and Hispanics. github.com/xrtlab/Valid...

28.04.2025 19:13 — 👍 6 🔁 0 💬 0 📌 0

It lights up my day whenever I see new research enabled by the ease of use of the @msftresearch.bsky.social #RocketboxAvatars

21.04.2025 17:51 — 👍 7 🔁 0 💬 0 📌 0

To me, experiments (on a homogeneous population) with 40+ participants are suspicious. Maybe they didn’t find significance with fewer, just trends, so they added people until it was significant.

Sometimes a larger sample means higher probability of significance. murphyresearch.com/sample-size-...
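The "add people until it is significant" worry can be illustrated with a small simulation. This is only a sketch under stated assumptions, not anything from the linked article: the data have no true effect, a z-test with known variance stands in for whatever test a real study would use, and the helper names and the batch sizes (start at 20, add 5 at a time, stop at 60) are hypothetical.

```python
import math
import random


def two_sided_p(sample):
    """Two-sided z-test p-value for 'mean == 0', assuming known sigma = 1."""
    n = len(sample)
    z = (sum(sample) / n) * math.sqrt(n)
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(peek, n_start=20, n_max=60, step=5,
                        sims=2000, alpha=0.05, seed=0):
    """Share of null experiments (no real effect) that end up 'significant'.

    peek=True  : test after every batch and stop as soon as p < alpha.
    peek=False : collect all n_max participants, then test once.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(sims):
        sample = [rng.gauss(0, 1) for _ in range(n_start)]
        if peek:
            significant = two_sided_p(sample) < alpha
            while not significant and len(sample) < n_max:
                sample.extend(rng.gauss(0, 1) for _ in range(step))
                significant = two_sided_p(sample) < alpha
        else:
            sample.extend(rng.gauss(0, 1) for _ in range(n_max - n_start))
            significant = two_sided_p(sample) < alpha
        hits += significant
    return hits / sims


if __name__ == "__main__":
    print("fixed sample size:", false_positive_rate(peek=False))
    print("add until significant:", false_positive_rate(peek=True))
```

Under these assumptions, the fixed-size design should stay near the nominal 5% false-positive rate, while testing after every batch pushes it well above that, even though no effect exists.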

Come work with Teresa Hirzle and me in a fully-funded PhD fellowship on extended reality and behavior change. The full ad is at employment.ku.dk/phd/?show=16..., the application deadline is May 12th, and we will both be at #chi2025 to discuss. If you are not, feel free to reach out in other ways.

13.04.2025 14:23 — 👍 11 🔁 6 💬 0 📌 0

»Researchers Catalog 170+ Text Input Techniques to Improve Typing in XR« https://www.roadtovr... #Metaverse #VirtualWorlds #XR #VR #MR #AR #BeyondPictures

08.04.2025 18:40 — 👍 1 🔁 1 💬 0 📌 0

Very cool to have the work on XR Text Input reach a broader audience on Road to VR: www.roadtovr.com/xr-text-trov...

Thanks @ben.roadtovr.com for inviting Max for a piece. Making VR research a general public interest.

Researchers Catalog 170+ Text Input Techniques to Improve Typing in XR Efficient text entry witho...

https://www.roadtovr.com/xr-text-trove-vr-ar-text-input-typing-technique-catalog-max-di-luca/

#Guest #Articles #News #XR #Industry #News

Just ported all my Twitter/X posts to BlueSky with @en.blueark.app for $6.

It’s a small action: @bsky.app is open for everybody to train their AIs. Now everyone can use the history of my human-created content, and not only xAI. Data dignity, they say…

We have a new position for someone with a strong computer science VR background to work on the PRESENCE project. This would be at the Event Lab, Institute of Neurosciences of the University of Barcelona.

If you are interested, you must apply through this website:

lnkd.in/d6QYQKYA

#VRcademicSky

Can AR-guided tunnels improve motor precision? New research tested HoloLens 2 hand-tracking with 3D holographic trajectories, showing improved performance and positive clinician acceptance.

doi.org/10.1007/s100...

Interested in a Visualization/HCI PhD in France? Come join us. We have 2 positions and will accept dual-career couples as well: www.linkedin.com/jobs/view/41...

jobs.inria.fr/public/class...

You can check out the work next month at @iclr-conf.bsky.social Bi-Align (bialign-workshop.github.io) and FM-wild (fm-wild-community.github.io) workshops:

“PARSE-Ego4D: Personal Action Recommendation Suggestions for Egocentric Videos”

Or start playing right now :)

But how do we know if our data is any good? We asked 35k humans on Prolific how correct and useful they found the suggested actions in reference to every video. Now we open-source the new dataset alongside the human ranks and all the data generation prompts: github.com/google/parse...

25.03.2025 02:30 — 👍 3 🔁 0 💬 1 📌 0

But really, as happens with all datasets, the key is “How do we make the data useful?” That was our goal with PARSE-Ego4D (parse-ego4d.github.io): we augment the existing narrations and videos by providing 18k suggested actions using the latest VLMs. This can be useful to train future AI agents.

25.03.2025 02:30 — 👍 1 🔁 1 💬 1 📌 0

Ego4D is an incredible anthropological dataset. It has over 3,600 hours of video (5 months of human activities) in first-person view, with written narrations, from all over the world: ego4d-data.org/fig1.html. (Always reminds me of this movie by #IsabelCoixet youtu.be/bYI3A6WLMBU)

25.03.2025 02:30 — 👍 7 🔁 1 💬 1 📌 0

But also: Not all avatars are created equal ⚠️. We did a deep study on preferences (presented at ISMAR 2023, arxiv.org/pdf/2304.01405), and realism matters quite a lot for work scenarios. Most people don’t find a cartoon avatar acceptable…

23.03.2025 22:23 — 👍 2 🔁 0 💬 0 📌 0

I love how well the work on bringing avatars to video conferences as an alternative to camera on/off is aging.

Check out our work on avatars via virtual cameras for regular video conferences in our paper AllTogether: www.microsoft.com/en-us/resear...

From the knowledge acquired during the pandemic, we can all agree that a policy of letting the virus roam through the flocks will 1) make it harder to contain the spread and 2) increase mutations… and that can end badly. For birds and humans. But hey, I am not the secretary of health. So what do I know?

21.03.2025 09:33 — 👍 2 🔁 0 💬 0 📌 0

Me 2

16.03.2025 20:38 — 👍 2 🔁 0 💬 0 📌 0

@ben.roadtovr.com I know you liked LocomotionVault.github.io so you will probably like this one too

14.03.2025 21:53 — 👍 2 🔁 0 💬 0 📌 0

Welcome to the family of significant VR researchers @pedrolopes.org!! I hear the banquet this year was splendid :)

14.03.2025 16:28 — 👍 6 🔁 1 💬 1 📌 0

Mike spotted this before the rest of us. 🫡

Publications from 2025 are shared more on Bluesky than on X/Twitter.

Moreover... we put all of this in an interactive dataset for people to explore each of the techniques and geek out 😎 xrtexttrove.github.io. Some are wild!

13.03.2025 21:54 — 👍 4 🔁 0 💬 1 📌 0

Read more & dive into text input:

Arpit Bhatia, Moaaz Hudhud Mughrabi, Diar Abdlkarim, Massimiliano Di Luca, Mar Gonzalez-Franco, Karan Ahuja, Hasti Seifi (2025). "Text Entry for XR Trove (TEXT): Collecting and Analyzing Techniques for Text Input in XR." ACM CHI

PDF: drive.google.com/file/d/1a6TH...

I personally think the solution will in part become a motion retargeting problem as soon as we can provide good haptic feedback. For as long as the human has to move fingers from point A to point B, we will have physical limitations to typing…

13.03.2025 17:47 — 👍 1 🔁 0 💬 1 📌 0

3) There is a tendency to think custom hardware will close this gap, but so far it hasn’t. And the keyboard format still delivers the highest wpm.

13.03.2025 17:47 — 👍 1 🔁 0 💬 1 📌 0

2) External surfaces and fingertips/transparency of hands seem to be preferable ways to improve performance compared to midair gestures.

13.03.2025 17:47 — 👍 0 🔁 0 💬 1 📌 0