AISTATS will take place in beautiful Tangier, Morocco, on May 5th, 2026.

Your 4-page PDF may contain either new results or content from a relevant paper of yours published since 2025.

We have a fantastic lineup of speakers and panelists!

I am excited to announce our Workshop on Causality in the Age of AI Scaling at AISTATS 2026!

- Is scaling sufficient for intelligent systems?

- Can causal abilities emerge from scale?

- What can causal modeling bring that scale cannot?

causcale.github.io

Submission deadline is February 27th.

Happy to be a part of this cohort! Apply to the JHU CS PhD program to work together.

engineering.jhu.edu/admissions/g...

10 new CS professors! 🥳

@anandbhattad.bsky.social @uthsav.bsky.social @gligoric.bsky.social @murat-kocaoglu.bsky.social @tiziano.bsky.social

Thank you so much, Naftali! Hope all is well.

05.06.2025 16:52

Thank you!

03.06.2025 11:26

Bookmark our lab page and GitHub repo to follow our work:

muratkocaoglu.com/CausalML/

github.com/CausalML-Lab

CausalML Lab will continue to push the boundaries of fundamental causal inference and discovery research with an added focus on real-world applications and impact. If you are at Johns Hopkins @jhu.edu, or more generally on the East Coast, and are interested in collaborating, please reach out.

03.06.2025 08:42

I am also deeply grateful to Purdue University and @purdueece.bsky.social for their support during my first four years as a professor. I had the privilege of teaching enthusiastic undergrads and working with outstanding PhD students and great colleagues there. I learned a great deal from them.

03.06.2025 08:42

I am happy to share that I will be joining Johns Hopkins University's Computer Science Department @jhucompsci.bsky.social as an Assistant Professor in Fall 2025.

I am grateful to my mentors for their unwavering support and to my exceptional PhD students for advancing our lab's research vision.

Faithfulness can be relaxed significantly. Determinism (?): I'm not sure what this means, but I don't think it's equivalent to the causal Markov condition (CMC). The no-unmeasured-confounder assumption is not necessary. All you need is some sparsity, so that some independence pattern is observable; that lets you learn something, but not necessarily everything.

25.01.2025 03:23

They used to give champagne glasses? I was surprised to see something other than a mug this year.

28.12.2024 17:29

Teaching young researchers mathematical and scientific rigor is more important than ever, given the wider adoption of AI tools in research, since these tools tend to be overly optimistic. LLM-assisted false proofs risk flooding our already overloaded reviewing infrastructure.

24.12.2024 20:21

We will present this work at #NeurIPS2024 on Wednesday at 4:30pm local time in Vancouver. Poster #5107.

Led by my PhD students Zihan Zhou and Qasim Elahi.

Paper link:

openreview.net/forum?id=RfS...

Follow us for more updates from the #CausalML Lab!

Wienöbst et al.'s method for uniformly sampling from Markov equivalent DAGs allows us to answer other interesting questions. We focus on estimating the causal effect of non-manipulable variables: we can learn the edges adjacent to such a node (a graph cut) and then use adjustment on observational data.

10.12.2024 17:13
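To illustrate the final adjustment step, here is a minimal Python sketch. It assumes the parents of the non-manipulable variable X have already been identified from the learned cut, that there are no latent confounders, and that all variables are discrete. It uses the textbook parent-adjustment formula P(y | do(x)) = Σ_pa P(y | x, pa) P(pa); the function names and toy data are hypothetical, and this is not the paper's estimator.

```python
from collections import Counter


def adjust_by_parents(data, x, y, parents, x_val, y_val):
    """Estimate P(Y = y_val | do(X = x_val)) = sum_pa P(y_val | x_val, pa) * P(pa).

    `data` is a list of dicts mapping variable names to discrete values;
    `parents` lists the (already learned) parents of X.
    """
    def key(row):
        return tuple(row[p] for p in parents)

    n = len(data)
    pa_counts = Counter(key(row) for row in data)
    total = 0.0
    for pa, count in pa_counts.items():
        stratum = [row for row in data if key(row) == pa and row[x] == x_val]
        if not stratum:
            # Positivity fails: no samples with X = x_val in this parent stratum.
            raise ValueError(f"no samples with {x}={x_val} and parents={pa}")
        p_y = sum(row[y] == y_val for row in stratum) / len(stratum)
        total += p_y * count / n
    return total


def rows(z, x, n, n_y1):
    """Hypothetical toy data: n rows with Z=z, X=x, of which n_y1 have Y=1."""
    return [{"Z": z, "X": x, "Y": int(i < n_y1)} for i in range(n)]


# Z confounds X and Y (Z -> X, Z -> Y, X -> Y); by construction P(Y=1 | do(X=1)) = 0.7.
data = rows(0, 0, 80, 8) + rows(0, 1, 20, 10) + rows(1, 0, 20, 10) + rows(1, 1, 80, 72)
naive = sum(r["Y"] for r in data if r["X"] == 1) / sum(r["X"] == 1 for r in data)
adjusted = adjust_by_parents(data, "X", "Y", ["Z"], x_val=1, y_val=1)
print(f"naive P(Y=1|X=1) = {naive:.2f}, adjusted P(Y=1|do(X=1)) = {adjusted:.2f}")
# prints: naive P(Y=1|X=1) = 0.82, adjusted P(Y=1|do(X=1)) = 0.70
```

The naive conditional overstates the effect because Z pushes both X and Y up; adjusting for X's parents removes that bias.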

We then update the posterior over each graph cut; these posteriors quickly converge to the true cut configurations. This gives a sample-efficient, non-parametric way to learn causal graphs over discrete variables through interventions.

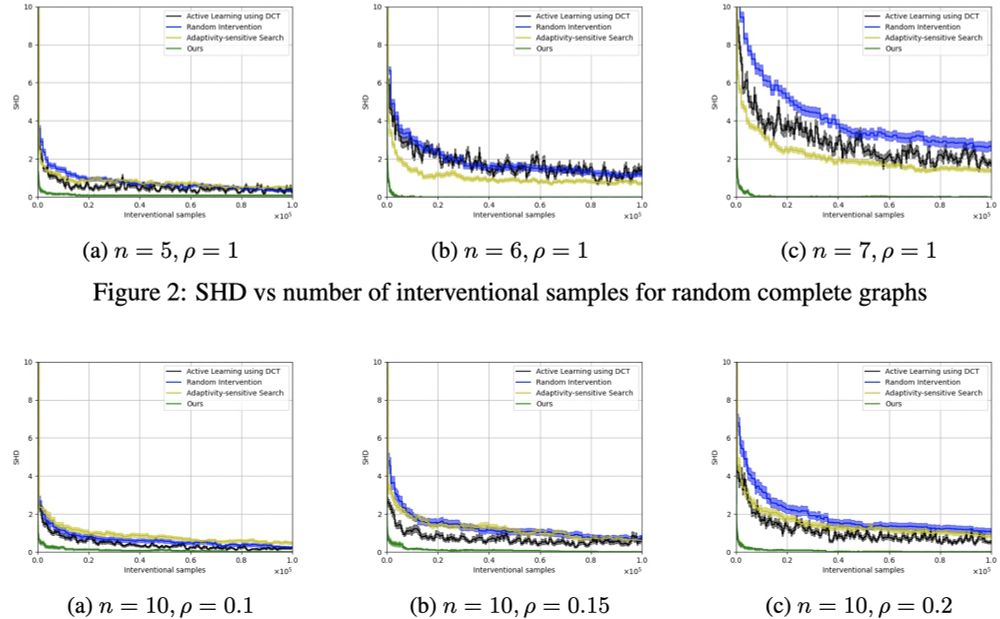

Ours is shown in green at the bottom, alongside several baselines.

Assuming we have enough observational data, we can compute the likelihood of our interventional samples given any graph cut, even though we don't know the graph. There are two ways to do this: uniformly sampling causal graphs in polynomial time, thanks to Wienöbst et al. 2023, or adjustment (Perkovic 2020).
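To make the likelihood computation concrete, here is a minimal Python sketch for the smallest possible case. It assumes two discrete variables X and Y joined by an edge of unknown direction, no latent confounders, and interventional samples drawn under do(X = x). The "cut" is then just the edge orientation: under X -> Y the interventional distribution equals P(Y | X), under Y -> X it equals P(Y), and both are estimated from observational data. The function names, add-one smoothing, and data are hypothetical; this illustrates the idea rather than the paper's algorithm.

```python
import math
from collections import Counter


def observational_tables(obs, domain):
    """Add-one-smoothed estimates of P(y) and P(y | x) from observational (x, y) pairs."""
    c_y = Counter(y for _, y in obs)
    c_x = Counter(x for x, _ in obs)
    c_xy = Counter(obs)
    k = len(domain)
    p_y = {y: (c_y[y] + 1) / (len(obs) + k) for y in domain}
    p_y_x = {(x, y): (c_xy[(x, y)] + 1) / (c_x[x] + k) for x in domain for y in domain}
    return p_y, p_y_x


def orientation_posterior(obs, intv, domain, prior=None):
    """Posterior over {X->Y, Y->X} given samples (x, y) drawn under do(X = x)."""
    prior = prior or {"X->Y": 0.5, "Y->X": 0.5}
    p_y, p_y_x = observational_tables(obs, domain)
    log_lik = {
        # X -> Y: intervening on X keeps the mechanism P(Y | X), so P(y | do(x)) = P(y | x).
        "X->Y": sum(math.log(p_y_x[(x, y)]) for x, y in intv),
        # Y -> X: intervening on X cannot affect its cause Y, so P(y | do(x)) = P(y).
        "Y->X": sum(math.log(p_y[y]) for _, y in intv),
    }
    log_post = {h: math.log(prior[h]) + log_lik[h] for h in prior}
    m = max(log_post.values())  # subtract the max before exponentiating, for stability
    z = sum(math.exp(v - m) for v in log_post.values())
    return {h: math.exp(v - m) / z for h, v in log_post.items()}


# Observational data are symmetric in X and Y, so they cannot reveal the direction.
obs = [(0, 0)] * 45 + [(0, 1)] * 5 + [(1, 1)] * 45 + [(1, 0)] * 5
# Under do(X = x), Y keeps tracking x: evidence for X -> Y.
intv = [(0, 0)] * 9 + [(0, 1)] + [(1, 1)] * 9 + [(1, 0)]
post = orientation_posterior(obs, intv, domain=[0, 1])
print(post)
```

Passing the returned posterior back in as `prior` for the next batch of interventional samples gives a sequential Bayesian update over the cut.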

10.12.2024 17:13

We leverage an idea from the 2010s on learning causal graphs with small interventions: an (n, k) separating system cuts every edge using interventions of size at most k. Each intervention gives information about one cut, so we keep track of the posteriors over the set of graph cuts induced by the (n, k) separating system.

10.12.2024 17:13
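For readers unfamiliar with separating systems, here is a minimal Python sketch. It assumes the weak notion in which every pair of nodes i != j must be split by some set containing exactly one of them, so intervening on that set cuts any edge between i and j. It uses a simple digit-labeling construction and then splits oversized sets into chunks of at most k nodes; it is not the size-optimal construction from the literature, and the function names are hypothetical.

```python
from itertools import combinations


def separating_system(n, k, base=2):
    """Sets of size <= k over nodes 0..n-1 such that every pair i != j is
    separated, i.e. some set contains exactly one of i and j."""
    digits = 1
    while base ** digits < n:  # enough digits to give every node a distinct label
        digits += 1
    system = []
    for d in range(digits):
        for v in range(base):
            # Nodes whose d-th base-`base` digit equals v. Two distinct labels differ
            # in some digit d, so the class of one node excludes the other.
            cls = [i for i in range(n) if (i // base ** d) % base == v]
            # Chunking keeps each intervention at size <= k without losing separation.
            for start in range(0, len(cls), k):
                chunk = cls[start:start + k]
                if len(chunk) < n:  # a set containing every node separates nothing
                    system.append(frozenset(chunk))
    return system


def is_separating(system, n):
    """Check that every pair of nodes is split by at least one set."""
    return all(any((i in s) != (j in s) for s in system)
               for i, j in combinations(range(n), 2))


interventions = separating_system(n=10, k=3)
print(len(interventions), max(len(s) for s in interventions), is_separating(interventions, 10))
```

Larger values of `base` give fewer digit positions but more classes per position; the chunking step is what enforces the size-k budget either way.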

Bayesian approaches are promising since they can incorporate the causal information contained in even a single interventional sample. However, they are computationally expensive to run on large graphs.

Instead of keeping track of all causal DAGs, can we keep track of a compact set of subgraphs?

You have causal questions. The first step is determining the cause-effect relations in your system, and causal graphs capture these compactly. To infer causal graphs, we usually need to run experiments and use their outcomes. But experiments are expensive and yield few samples.

#NeurIPS2024

I will present this work at #NeurIPS2024 next Thursday at 11am local time in Vancouver. Poster #5104.

Led by my PhD student Qasim Elahi. Joint work with my colleague Mahsa Ghasemi.

Paper link:

openreview.net/forum?id=uM3...

Follow us for more updates from the #CausalML Lab!

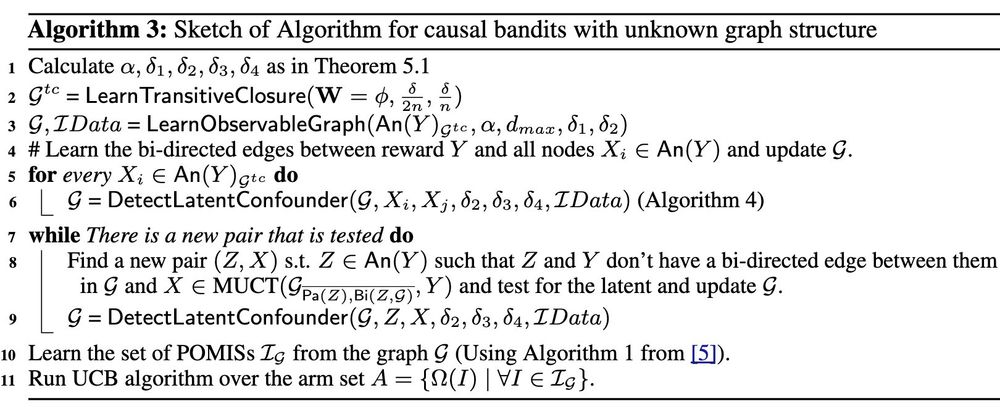

Finally, our bandit algorithm can operate in unknown environments by exploiting the fact that partial causal discovery is sufficient for achieving optimal regret. Pseudocode below:

08.12.2024 19:36

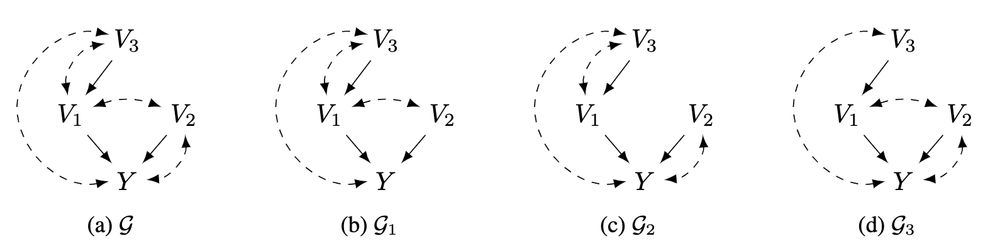

A toy example from the paper: missing the bidirected edge V1 <--> V3 does not change the possibly optimal minimal intervention sets (POMISes), but missing any other bidirected edge does. So, once the rest is learned, our causal bandit algorithm does not need to allocate rounds to learning this edge.

08.12.2024 19:36

We find that not all confounder locations are needed. You can skip learning some of them and still end up with the same POMIS set, which means you will never miss an optimal arm!

We propose an interventional causal discovery algorithm that takes advantage of this observation.

If you don't know the causal graph, you may try to learn it from data and/or experiments. We identify an interesting research question here:

Do we need to know all unobserved confounders to learn all POMISes? Or can we get away without knowing some?

This is not obvious.

A very nice idea is the POMIS, developed by Sanghack Lee and Elias Bareinboim in 2018. They use do-calculus to eliminate unnecessary actions that yield the same reward, and they propose a principled algorithm for doing so. They also show that if you keep fewer arms than the POMISes, you may miss the optimal arm.

08.12.2024 19:36

Most existing work focuses on how this action-space reduction can be done algorithmically when the causal structure is known. In a semi-Markovian model, this includes the location of every unobserved confounder, represented as a bidirected edge.

08.12.2024 19:36
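To see how bidirected edges enter the action-space reduction, here is a minimal Python sketch of two ingredients of Lee and Bareinboim's 2018 approach, under our reading of their definitions. The minimal unobserved-confounders' territory (MUCT) is computed in the subgraph of ancestors of the reward Y by alternately closing a set under c-components and descendants; the interventional border IB = Pa(MUCT) \ MUCT is one POMIS. Enumerating every POMIS requires their full recursive algorithm, which is not shown, and the graph encoding and function names are hypothetical.

```python
from collections import deque


def closure(start, adj):
    """All nodes reachable from `start` (inclusive) along the adjacency map `adj`."""
    seen, queue = set(start), deque(start)
    while queue:
        v = queue.popleft()
        for w in adj.get(v, ()):
            if w not in seen:
                seen.add(w)
                queue.append(w)
    return seen


def muct_and_ib(parents, bidirected, y):
    """Return (MUCT, IB) for reward node y.

    parents: dict node -> set of direct parents (directed edges).
    bidirected: iterable of 2-element frozensets (unobserved confounders).
    """
    an = closure({y}, parents)  # ancestors of y, including y
    children = {v: set() for v in an}
    for v in an:
        for p in parents.get(v, ()):
            children[p].add(v)  # p is an ancestor of y whenever v is
    siblings = {v: set() for v in an}
    for edge in bidirected:
        a, b = tuple(edge)
        if a in an and b in an:  # only confounders inside G[An(y)] matter
            siblings[a].add(b)
            siblings[b].add(a)
    t = {y}
    while True:
        # Close under c-components, then under descendants, until a fixed point.
        new_t = closure(closure(t, siblings), children)
        if new_t == t:
            break
        t = new_t
    ib = {p for v in t for p in parents.get(v, ())} - t
    return t, ib


parents = {"X2": {"X1"}, "Y": {"X2"}}  # chain X1 -> X2 -> Y
print(muct_and_ib(parents, [], "Y"))                         # MUCT = {Y}, IB = {X2}
print(muct_and_ib(parents, [frozenset({"X1", "X2"})], "Y"))  # X1 <-> X2: IB stays {X2}
print(muct_and_ib(parents, [frozenset({"X1", "Y"})], "Y"))   # X1 <-> Y: IB becomes empty
```

In this toy chain, the confounder X1 <-> X2 leaves the interventional border unchanged while X1 <-> Y changes it; this compares only the IB, not the full POMIS set.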

You want to optimize a reward in an unknown environment. Structural knowledge of cause-effect relations is known to significantly reduce the search space for bandit algorithms. But how much of the causal structure do you need to know to do this?

#NeurIPS2024

Not exactly sure what that means or how to do that. Any link with more info on this?

08.12.2024 01:35

We will present this work at #NeurIPS2024 next Thursday at 11am local time in Vancouver. Poster #5103.

Joint work led by my PhD student Md. Musfiqur Rahman and colleague Matt Jordan.

Paper link:

openreview.net/forum?id=vym...

Follow us for more updates from the #CausalML Lab!