#VisionScience community - please repost and share with trainees who might be interested in the job!

17.11.2023 00:22 — 👍 1 🔁 0 💬 0 📌 0

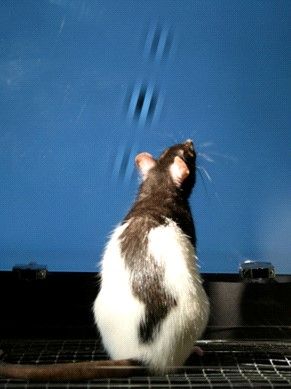

A rat views a visual stimulus in a psychophysical task.

Image credit: Philip Meier.

We are hiring! Opening for a lab manager/animal technician to support behavioral and neurophysiological studies of vision, decision-making and value-based choice in rats and mice. Learn more about the lab at www.ratrix.org, learn more about the job or apply at: employment.ucsd.edu/laboratory-t...

17.11.2023 00:20 — 👍 4 🔁 2 💬 1 📌 0

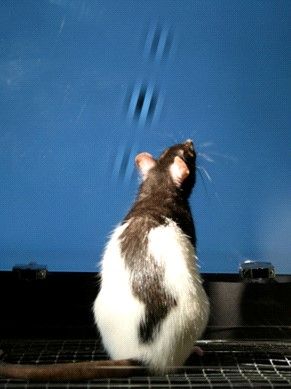

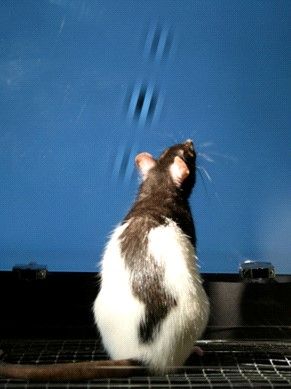

A rat views a visual stimulus in a psychophysical task.

Image credit: Philip Meier.

We are hiring! Opening for a lab manager/animal technician to support behavioral and neurophysiological studies of vision, decision-making and value-based choice in rats and mice. Learn more about the lab at www.ratrix.org, learn more about the job or apply at: employment.ucsd.edu/laboratory-t...

17.11.2023 00:20 — 👍 4 🔁 2 💬 1 📌 0

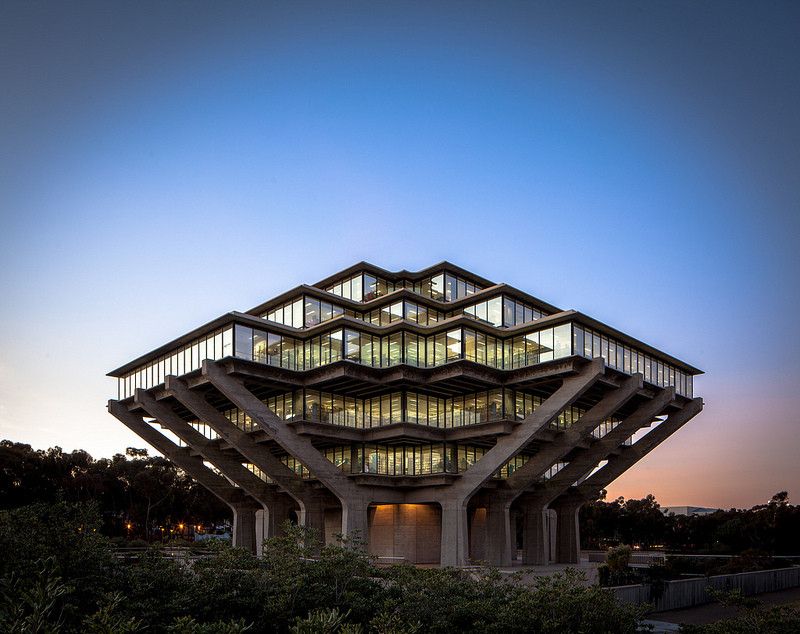

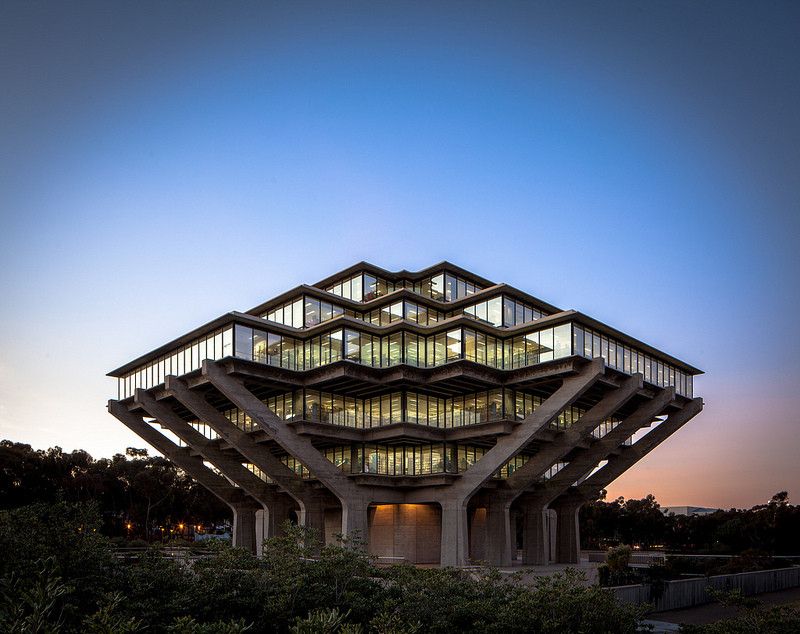

Geisel Library at UCSD

Opening for Postdoc in Data Science at UCSD.

Study multi-timescale correlations and non-stationarity in neural/behavioral data; assess implications for statistical inference; develop improved methods robust to these effects. Co-advisor Armin Schwartzman. Contact: preinagel@ucsd.edu, armins@ucsd.edu

09.11.2023 16:36 — 👍 1 🔁 0 💬 0 📌 0

Sure. Understanding the effects of each quantitatively and contextually aids interpretation of metascience data, e.g., the likely impact on past literature where a practice has been used; and helps focus attention and education on those practices that are actually responsible for the most harm.

02.11.2023 15:16 — 👍 1 🔁 0 💬 0 📌 0

I do mention sequential analysis, and cite your fine paper and others, for those who want to learn more. If my simulation reflects their existing practice, prespecifying what they already do may be their preferred choice, and is valid. Bonferroni kills power so it would be a particularly bad choice.

02.11.2023 15:11 — 👍 0 🔁 0 💬 1 📌 0
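Why Bonferroni "kills power" can be seen in a minimal sketch. It assumes a two-sided one-sample z-test with known σ = 1, a hypothetical standardized effect size `d` and sample size `n`, and splits α evenly over the number of looks. The function name and numbers are illustrative and do not come from the paper. The sketch also treats each look as a separate test, although in sequential designs the looks share data, which makes Bonferroni even more conservative there.

```python
from statistics import NormalDist

def z_test_power(d: float, n: int, alpha: float) -> float:
    """Power of a two-sided one-sample z-test (sigma = 1) when the true effect is d."""
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)   # rejection threshold for |z|
    shift = d * n ** 0.5                  # expected z statistic under the alternative
    return (1 - std.cdf(z_crit - shift)) + std.cdf(-z_crit - shift)

alpha, d, n = 0.05, 0.5, 30  # illustrative values only
for looks in (1, 2, 3, 5):
    # Bonferroni: each of `looks` tests is run at alpha / looks
    print(looks, round(z_test_power(d, n, alpha / looks), 3))
```

Power falls steadily as the per-look threshold shrinks, even though the data and the true effect are unchanged.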

Others may think "I had no idea reporting p values constrained me in such a way! I shouldn't be reporting p values." A difference in our perspective is, I work in fields where most work is not even intended to be confirmatory, people just give p values because they're told to, and don't know better.

02.11.2023 15:07 — 👍 0 🔁 0 💬 1 📌 0

Actually I think I have a higher opinion of my readers than you; I don't think they'll glibly walk away with a superficial take. Some will come away with: "I really care about controlling false positives, but cool, I could get more flexibility and statistical power with sequential analysis."

02.11.2023 15:04 — 👍 0 🔁 0 💬 1 📌 0

Important difference: this would be w = 19 in my simulations (my Fig. 1). I claim FP < α(1 + w/2), or < 0.525, which accords with their result. They got much lower than this because they limited the maximum sample size. I focused on the effect of limiting w (only adding data if p is near α), which I think reflects common practice.

02.11.2023 14:58 — 👍 1 🔁 0 💬 1 📌 0
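The bound quoted above is simple arithmetic. A minimal check, assuming α = 0.05 (not stated in the post, but the value that reproduces 0.525) and reading the bound literally as FP < α(1 + w/2):

```python
def fp_upper_bound(alpha: float, w: float) -> float:
    """Upper bound on the false-positive rate as quoted in the post: alpha * (1 + w/2)."""
    return alpha * (1 + w / 2)

# w = 19 is the setting the post associates with its Fig. 1
print(fp_upper_bound(0.05, 19))
```

With w = 0 (never adding data) the bound collapses to α itself, as it should.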

Bottom line: collecting more data to shore up a finding isn’t bad science, it’s just bad for p-values. My purpose is not to justify or encourage p-hacking, but rather to bring to light some poorly-appreciated facts, and enable more informed and transparent choices. Plus, it was a fun puzzle. [3/3]

01.11.2023 23:45 — 👍 5 🔁 1 💬 0 📌 0

With the goal of teaching the perils of N-hacking, I simulated a realistic lab situation. To my surprise, the increase in false positives was slight. Moreover, N-hacking increased the chance a result would be replicable (the PPV). This paper shows why and when this is the case. [2/3]

01.11.2023 23:42 — 👍 3 🔁 0 💬 1 📌 0
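To make the N-hacking procedure concrete, here is a minimal Monte Carlo sketch under the null hypothesis. It is not the simulation from the paper. It assumes a one-sample z-test with known σ = 1 and a hypothetical rule: if p < α, declare significance; if α ≤ p < w·α, add `n_add` observations and retest; otherwise stop. A cap `n_max` limits the total sample size. All parameter names and values are illustrative.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()

def z_test_p(samples: list[float]) -> float:
    """Two-sided p-value of a one-sample z-test of mean 0 with known sigma = 1."""
    n = len(samples)
    z = sum(samples) / n * n ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))

def false_positive_rate(alpha=0.05, w=1.0, n0=12, n_add=6, n_max=48,
                        n_sims=10_000, seed=1) -> float:
    """Fraction of null experiments declared significant under a hypothetical N-hacking rule."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        data = [rng.gauss(0.0, 1.0) for _ in range(n0)]  # the null is true: mean 0
        while True:
            p = z_test_p(data)
            if p < alpha:
                hits += 1  # a false positive
                break
            if p >= w * alpha or len(data) + n_add > n_max:
                break  # clearly non-significant, or the sample-size cap is reached
            data.extend(rng.gauss(0.0, 1.0) for _ in range(n_add))  # "a few more"
    return hits / n_sims

for w in (1, 2, 4, 19):
    print(w, false_positive_rate(w=w))
```

With w = 1 the rule never adds data, so the estimate should sit near α, which is a useful sanity check. Raising w inflates false positives, while the `n_max` cap keeps the inflation well below what unlimited resampling would produce.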

If a study finds an effect that is not significant, it is considered a "questionable research practice" to collect more data to reach significance. This kind of p-hacking is often cited as a cause of unreproducible results. This is troubling, as the practice is common in biology. [1/3]

01.11.2023 23:41 — 👍 2 🔁 0 💬 1 📌 0

"The Crow and the Pitcher" illustration by Kendra Shedenhelm

Pleased to announce that my paper is out on the consequences of collecting more data to shore up a non-significant result (N-hacking). TL;DR: although it's not correct practice, it's not always deleterious. (illustration by Kendra Shedenhelm) doi.org/10.1371/jour...

01.11.2023 23:39 — 👍 29 🔁 17 💬 3 📌 4

I think we have run up against the limitations of this medium. I actually have no idea what you meant by that post. But what I take away is we are interested in a related question, we appear to have different views and referents, and it would be worth digging into in some less staccato format.

23.10.2023 04:31 — 👍 1 🔁 0 💬 1 📌 0

Using methods of measurement whose mechanisms are well understood, thus whose assumptions and limitations are front-of-mind; also frequent use of triangulation: testing a causal model or theory by many distinct techniques that have different assumptions… I can give examples but not briefly. (2/2)

22.10.2023 21:09 — 👍 2 🔁 0 💬 2 📌 0

it’s hard to articulate examples in a few words, as a key characteristic is not thinking “science” can ever be a short, simple, one-shot thing. It takes a long series of purposeful observations, theory, highly constrained models that make specific predictions, many, many controlled experiments, (1/2)

22.10.2023 21:01 — 👍 2 🔁 0 💬 1 📌 0

looks interesting but can’t tell from a quick look if it’s the same methodology I had in mind, or one that includes both sides of the debate we were having, or something else entirely

22.10.2023 20:53 — 👍 0 🔁 0 💬 0 📌 0

There may be other reasons to study what scientists do in fields with poor track records, or fields with no track record.

But I think Phil of Sci aimed at improving practice can be advanced by studying what *good* or *great* scientists actually do, if you can identify who those are.

22.10.2023 17:22 — 👍 7 🔁 0 💬 0 📌 0

Of course there is a normative judgement there: these scientific fields have been incredibly successful at generating secure knowledge (discoveries that stood the test of time, replicated endlessly, generalized broadly, parsimoniously explained much, led to successful practical applications…)

22.10.2023 17:19 — 👍 2 🔁 0 💬 1 📌 0

Opposite perspective. As one trained in classical biochemistry, genetics and (early/foundational) molecular biology, I think scientists in these fields have/had some brilliant, innovative, rigorous and productive methods that are not yet well codified, nor yet understood in philosophy or statistics

22.10.2023 17:14 — 👍 11 🔁 0 💬 3 📌 0

P.S. examples: some biochemistry, molecular biology, basic research on experimental model organisms. E.g., almost no p values in the landmark papers of molecular biology (Meselson & Stahl, Hershey & Chase, Jacob & Monod, Nirenberg & Matthaei... not even the overtly statistical Luria & Delbrück).

25.09.2023 16:39 — 👍 1 🔁 0 💬 0 📌 0

the rigor and reliability of the research could be very high, because the p values were not the basis for the conclusions. I think eliminating the reporting of performative p values is very important. Otherwise people outside the field are misled about the epistemic basis and status of the claims.

25.09.2023 16:12 — 👍 1 🔁 0 💬 1 📌 0

If you ask them what the p value does tell them, they mostly think it is the PPV. This is what they want to know. So with better education they probably wouldn't use p values, and might use Bayesian statistics. In such subfields most p values in the literature are invalid (post hoc), and yet [5/6]

25.09.2023 16:06 — 👍 1 🔁 0 💬 1 📌 0
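Since the thread contrasts the p value with the PPV, a minimal sketch of the standard relationship may help: PPV = power·π / (power·π + α·(1 − π)), where π is the prior fraction of tested hypotheses that are actually true. The power and π values below are illustrative assumptions, not figures from the thread.

```python
def ppv(alpha: float, power: float, prior_true: float) -> float:
    """Probability that a significant result reflects a true effect."""
    true_pos = power * prior_true          # true effects that reach significance
    false_pos = alpha * (1 - prior_true)   # null effects that reach significance anyway
    return true_pos / (true_pos + false_pos)

# Same alpha, very different PPVs depending on power and prior plausibility
for power, prior in [(0.8, 0.5), (0.8, 0.1), (0.2, 0.1)]:
    print(power, prior, round(ppv(0.05, power, prior), 3))
```

The p threshold alone fixes only α; the PPV a reader actually cares about also depends on power and on how plausible the hypothesis was to begin with.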

Some scientists compute p values as an afterthought, after drawing conclusions, while writing papers. They do it because they think it is expected or required. But often they have strong justifications for their conclusions, which are given in the paper, and which are what sways their readers. [4/n]

25.09.2023 16:01 — 👍 1 🔁 0 💬 1 📌 0

I asked colleagues how much they rely on p values to decide if they believe something. The only ones who said p was important were trained in psychology (and they said it was the ONLY reason to believe something). Which is interesting. Psychology's excessive reliance may have caused their woes [3/n]

25.09.2023 15:51 — 👍 3 🔁 0 💬 1 📌 0

such as epidemiologists, field ecologists, pre-clinical/clinical researchers rely on p values, bc they study things with large variability relative to effects, substantial risk of chance associations, and for other reasons. Many other biologists only use p values if/when they are forced to. [2/n]

25.09.2023 15:47 — 👍 2 🔁 0 💬 1 📌 0

Can't resist this bait. If there's anything I've learned from wading into these waters it's that statements about "scientists" are senseless. Practice and norms differ wildly between disciplines. I used to argue "well, biologists ___..." but that's even too vast an umbrella. Some biologists [1/n]

25.09.2023 15:42 — 👍 2 🔁 0 💬 2 📌 0

Actually, on re-reading, I know that paper.

I will be surprised if we actually disagree, I think we’re more likely talking about different claims.

24.09.2023 15:23 — 👍 1 🔁 0 💬 1 📌 0

will definitely read!

24.09.2023 15:16 — 👍 1 🔁 0 💬 1 📌 0

I used to think that, but I was persuaded that NHST requires prospective design: you can only know the type I error rate of the null if the null is well defined, and post hoc you can’t know what procedure you might have followed if the data had been otherwise. Did you mean to challenge that view?

24.09.2023 15:12 — 👍 0 🔁 0 💬 2 📌 0

The Center for Philosophy of Science at the University of Pittsburgh. Here to provide you with up-to-date information on Center news, events, and all the rest!

neuroscientist studying vision at Univ of Oregon

nielllab.uoregon.edu

Philosopher of science and occasional scientist. Mainly philosophy of evolutionary biology, philosophy of probability, implications of modeling and statistical inference. Book: Evolution and the Machinery of Chance. https://marshallalmostsurely.com

Award-winning podcast on the history, philosophy & social studies of science brought to you by grad students from the University of Melbourne. Season 5 Out Now!

🎙️ New episodes weekly

🔗 www.hpsunimelb.org/the-hps-podcast

📣 By @tespiteri.bsky.social

History and Philosophy of Science, Cognitive Science, Experimental Philosophy, distinguished Prof at Pitt, Director of the Center for Philosophy of Science

Professor of History & Philosophy of Science (HPS) | Metascience | Improving Peer Review | Debates about statistical reform

Co-director of MetaMelb - an interdisciplinary metascience lab - at the University of Melbourne

pic: @replicats.bsky.social

Scientist interested in all aspects of brain and behavior. Loves to talk math and data. Prof of Biology at University of Washington, Seattle. Mom of 2 epsilons; paints in watercolor here, there, and anywhere.

Lab @ ESI Frankfurt. We believe the brain is noise-free. Or mostly-noise-free-ish. And that if we stare at behaving brains long enough, it will ALL MAKE SENSE.

Neuroscientist | Happy father |

PI at Weizmann Institute | FENS-Kavli Network Scholar | Brain-body interactions

https://www.weizmann.ac.il/brain-sciences/labs/livneh/

Professor of statistics, dean of the faculty of Behavioural & Social Sciences Groningen, @rug-gmw.bsky.social @unigroningen.bsky.social. I like LEGO and good music

Neurons, real and artificial. Visual neuroscientist currently working on ML/AI.

orcid: 0000-0002-6976-6237

Scientist studying learning, memory, and decision-making. Poet and Playwright.

Animal pose estimation software - open, free, often SOTA, and helping enable your science since 2018 💜 DeepLabCut.org | #deeplabcut | posts by dev team 👋

🏳️🌈🧠🔬🚴♀️ Neuroscientist. Associate Director of Data and Outreach at Allen Institute for Neural Dynamics. Focused on how we do Science in the Open. She/her.

Job: Neurobiology @ USC

Likes: Bikes, crypto, techno

What’s next?

Associate Professor @PrincetonNeuro and @PrincetonMolBio. Studying neuro-immune interactions in health and disease.

Visual perception and cognition scientist

(he/him)

My site: http://steveharoz.com

R guide: https://r-guide.steveharoz.com

StatCheck Simple: http://statcheck.steveharoz.com

Theoretical Neuroscientist, Columbia University

Metascience, statistics, psychology, philosophy of science. Eindhoven University of Technology, The Netherlands. Omnia probate. 🇪🇺