Go

I built a fully-functioning in-browser mobile Go Game for local play in a couple hours yesterday, or should I say, an AI Agent did.

chrispenner.ca/rugo

I'll summarize my experience with the tool:

10.08.2025 20:35 — 👍 5 🔁 1 💬 1 📌 0

Ultimately, the takeaway for me is:

* It's a great tool for getting a small artifact like this. It fulfills a need I had, and was very low investment.

* I didn't learn a damn thing. I don't know any more Rust, I don't understand WebGPU, I didn't learn how to structure a game.

10.08.2025 20:39 — 👍 6 🔁 2 💬 2 📌 0

just finished playing What Remains of Edith Finch

I have a strong urge to drop everything and move to the Pacific Northwest

09.08.2025 16:45 — 👍 5 🔁 0 💬 0 📌 0

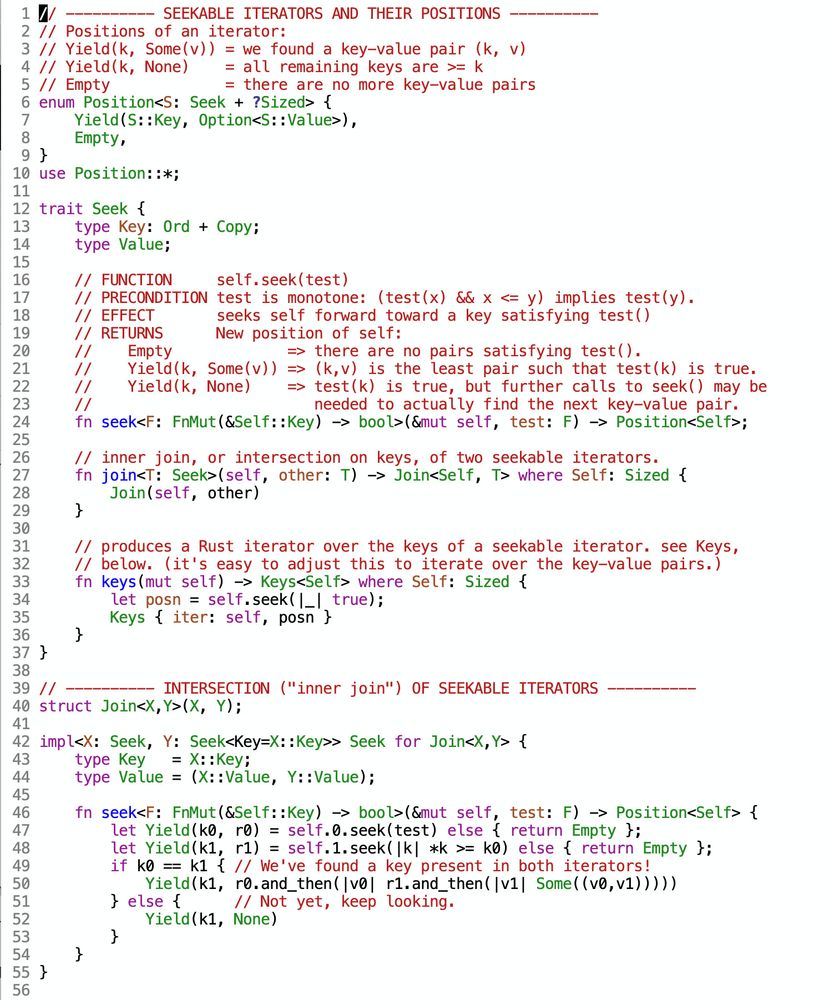

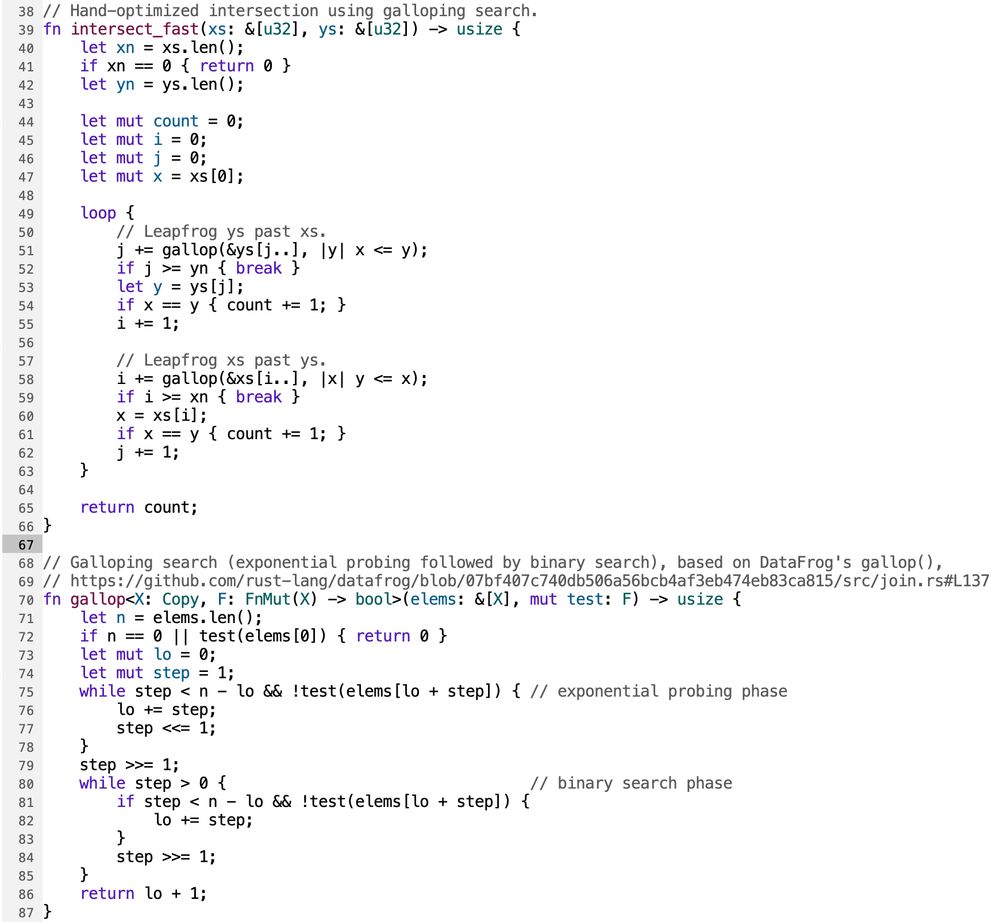

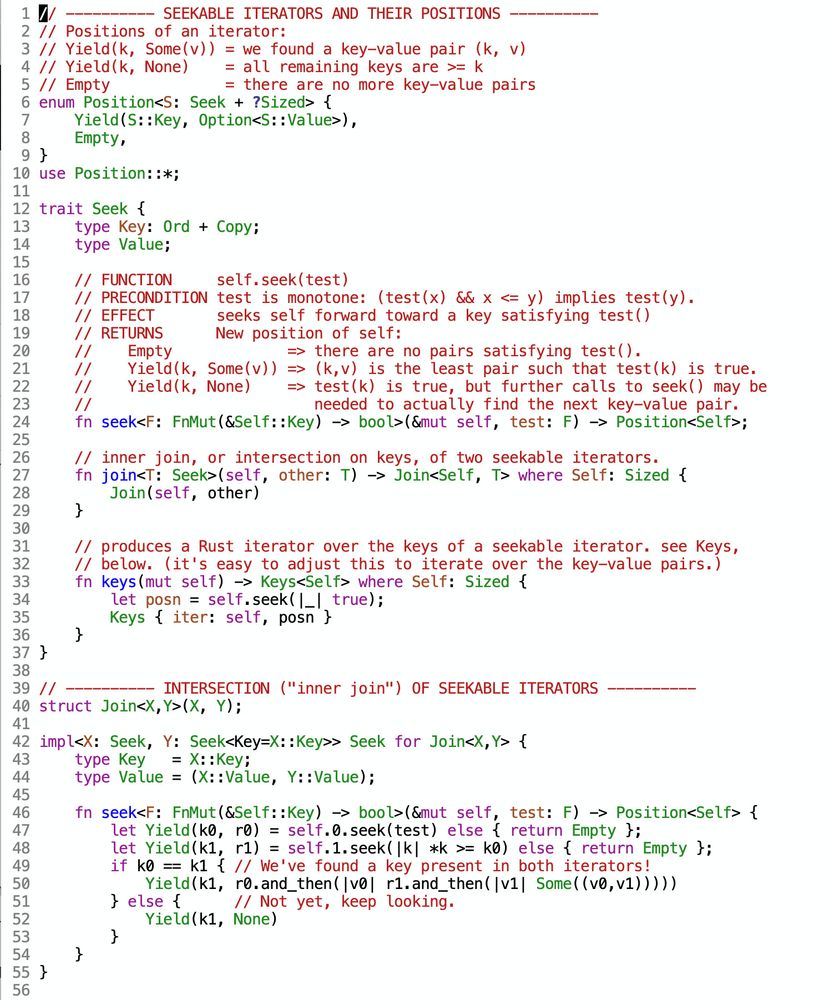

AIUI leapfrog-via-galloping-search is pretty close to the state-of-the-art way to do database joins on sorted data structures (eg BTrees). but, it doesn't parallelize. the divide-and-conquer algo seems very parallelizable. you could switch to leapfrog intersection once parallelism is exhausted.

09.08.2025 09:42 — 👍 0 🔁 0 💬 0 📌 0

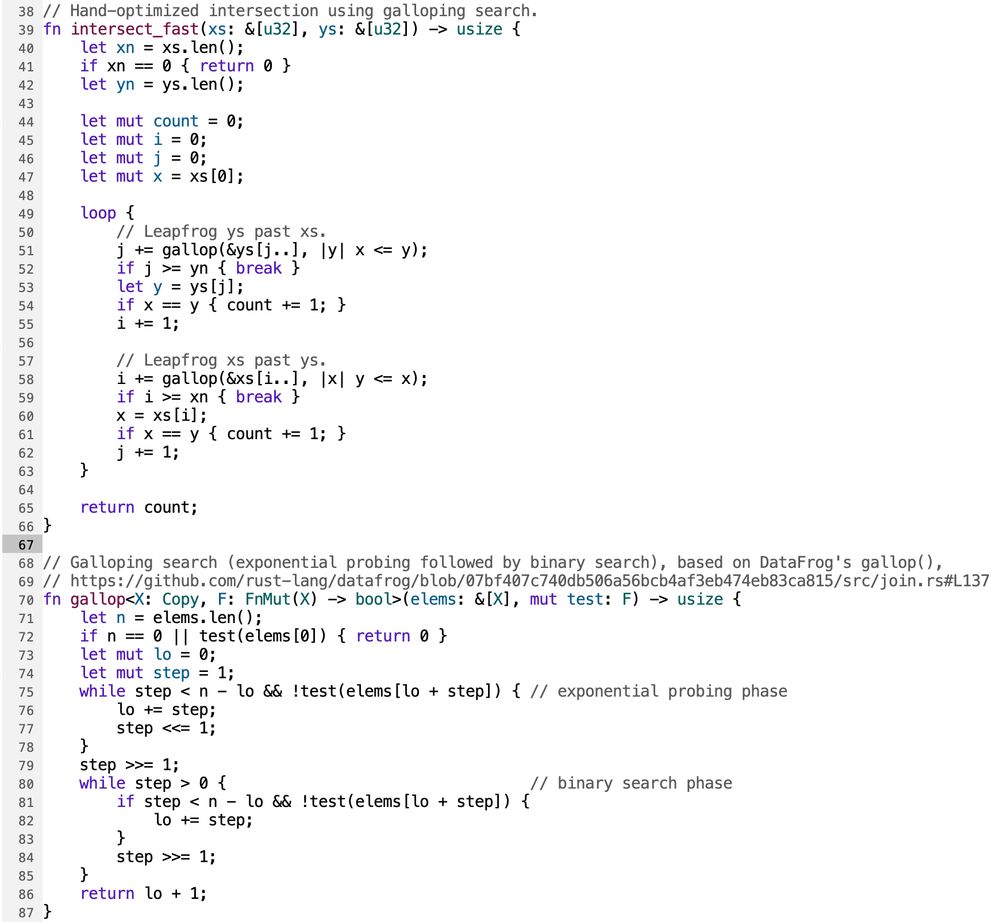

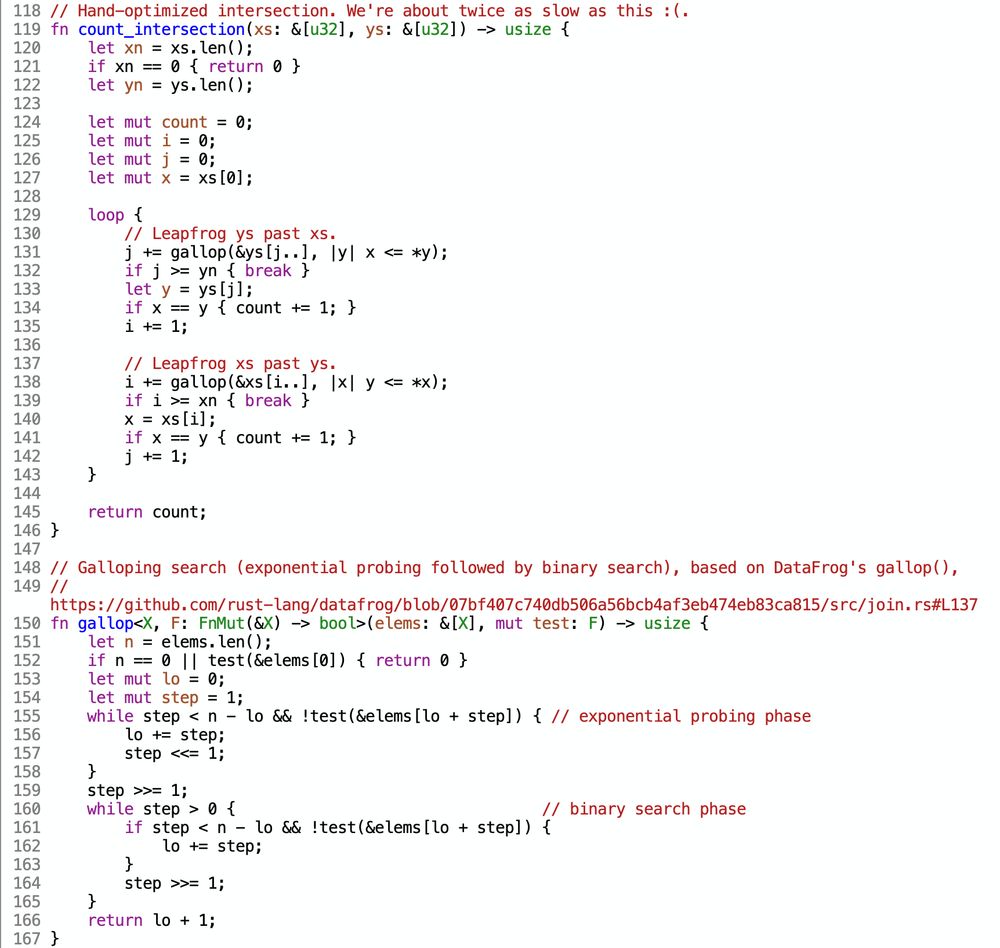

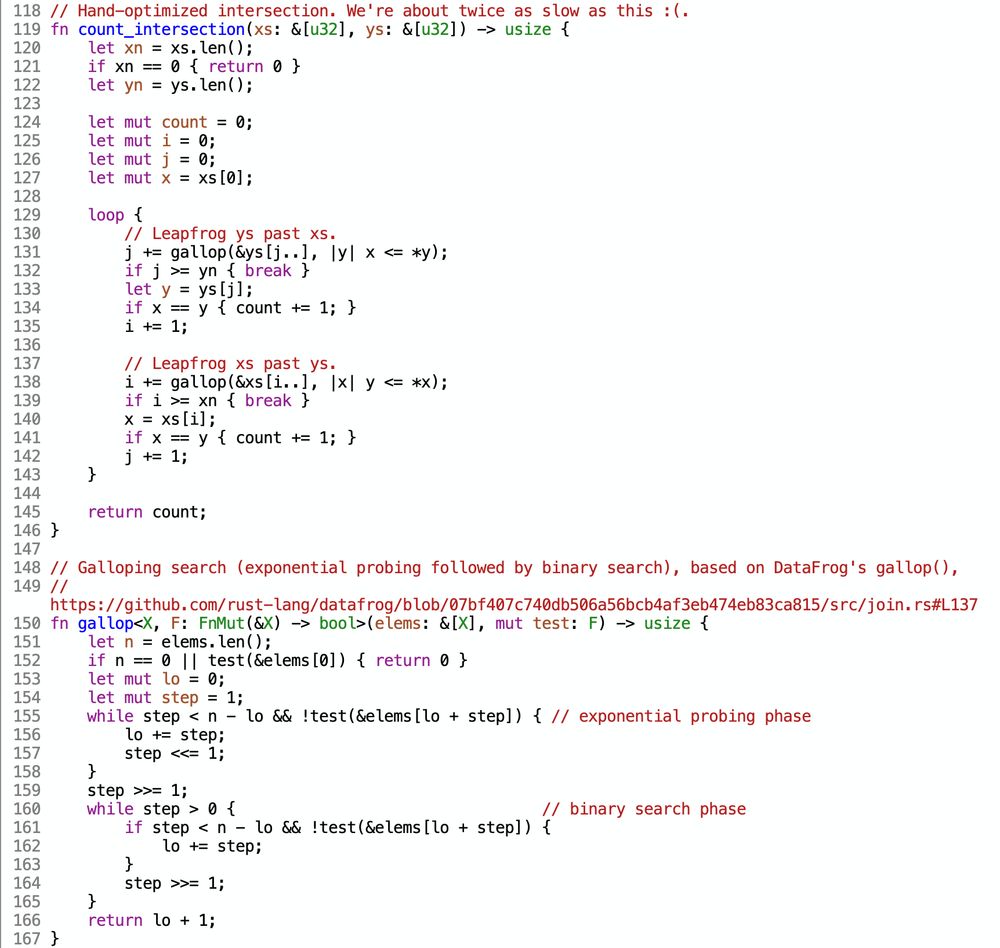

btw, the "better version" of the linear merge-intersection is leapfrog intersection: keep pointers into each list, starting at front. repeatedly "leapfrog" the pointer whose element is smaller by searching it forward toward the larger element. binary search will work, but galloping search is better.

09.08.2025 09:37 — 👍 1 🔁 0 💬 1 📌 0
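A minimal sketch of the leapfrog intersection described in the post above, assuming both inputs are sorted slices. The names `gallop` and `leapfrog_intersect` are made up for illustration and are not taken from the author's gist.

```rust
use std::cmp::Ordering;

/// Galloping (exponential) search: first index `i >= start` with
/// `list[i] >= *target`, or `list.len()` if no such index exists.
fn gallop<T: Ord>(list: &[T], start: usize, target: &T) -> usize {
    if start >= list.len() || list[start] >= *target {
        return start.min(list.len());
    }
    // Invariant: list[lo] < *target.
    let mut lo = start;
    let mut step = 1;
    // Double the stride until we overshoot the target or run off the end.
    while lo + step < list.len() && list[lo + step] < *target {
        lo += step;
        step *= 2;
    }
    let hi = (lo + step).min(list.len());
    // The answer lies in (lo, hi]; finish with a binary search.
    lo + 1 + list[lo + 1..hi].partition_point(|x| x < target)
}

/// Intersect two sorted slices by leapfrogging whichever pointer is behind.
fn leapfrog_intersect<T: Ord + Clone>(a: &[T], b: &[T]) -> Vec<T> {
    let (mut i, mut j) = (0, 0);
    let mut out = Vec::new();
    while i < a.len() && j < b.len() {
        match a[i].cmp(&b[j]) {
            Ordering::Equal => {
                out.push(a[i].clone());
                i += 1;
                j += 1;
            }
            // a is behind: jump it forward toward b[j].
            Ordering::Less => i = gallop(a, i, &b[j]),
            // b is behind: jump it forward toward a[i].
            Ordering::Greater => j = gallop(b, j, &a[i]),
        }
    }
    out
}
```

Galloping costs time logarithmic in the distance actually jumped, which is why it tends to beat a plain binary search over the whole remaining list when the next match is nearby.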

the linear-merge intersection can be inefficient if one list is small. eg [0..1000] intersect [1001..1016] is empty; divide-and-conquer discovers this in 4 splittings; linear merge takes 1000 steps. linear in the sum of the input sizes, but unnecessarily slow.

09.08.2025 09:34 — 👍 0 🔁 0 💬 1 📌 0
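To make the step count in the post above concrete, here is a rough sketch of the linear merge with a loop counter bolted on. It reads `[0..1000]` as the half-open range 0 to 999, which is an assumption; `merge_intersect` is an illustrative name.

```rust
use std::cmp::Ordering;

/// Linear-merge intersection of two sorted slices.
/// Returns the intersection plus the number of loop iterations taken.
fn merge_intersect(a: &[u32], b: &[u32]) -> (Vec<u32>, usize) {
    let (mut i, mut j, mut steps) = (0, 0, 0);
    let mut out = Vec::new();
    while i < a.len() && j < b.len() {
        steps += 1;
        match a[i].cmp(&b[j]) {
            // Advance whichever side holds the smaller element, one at a time.
            Ordering::Less => i += 1,
            Ordering::Greater => j += 1,
            Ordering::Equal => {
                out.push(a[i]);
                i += 1;
                j += 1;
            }
        }
    }
    (out, steps)
}
```

With `a` holding 0 to 999 and `b` holding 1001 to 1016, every iteration advances `i` by one, so the loop runs once per element of `a` before concluding the result is empty.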

Intersect 2 sorted lists A, B: Wlog |A| <= |B|. Let x =the median of A = A[|A|/2]. Binary search for x in B. This splits A, B each into two parts (below/above x). Recursively intersect A1,B1 and A2,B2.

Is this a well-known sorted list intersection algorithm? What is its worst-case complexity?

08.08.2025 17:28 — 👍 3 🔁 0 💬 1 📌 0
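One way the algorithm in the post above could be written down, as a sketch rather than a reference implementation. It assumes both slices are sorted and strictly increasing (no duplicates); `dc_intersect` and `dc_intersect_into` are hypothetical names.

```rust
/// Divide-and-conquer intersection of two sorted, duplicate-free slices.
fn dc_intersect<T: Ord + Clone>(a: &[T], b: &[T]) -> Vec<T> {
    let mut out = Vec::new();
    dc_intersect_into(a, b, &mut out);
    out
}

fn dc_intersect_into<T: Ord + Clone>(a: &[T], b: &[T], out: &mut Vec<T>) {
    // Wlog |A| <= |B|: swap so that `a` is the shorter list.
    if a.len() > b.len() {
        return dc_intersect_into(b, a, out);
    }
    if a.is_empty() {
        return;
    }
    // x = the median of A.
    let mid = a.len() / 2;
    let x = &a[mid];
    // Binary search for x in B; either way `k` splits B around x.
    match b.binary_search(x) {
        Ok(k) => {
            // x is in both lists: recurse below, emit x, recurse above.
            dc_intersect_into(&a[..mid], &b[..k], out);
            out.push(x.clone());
            dc_intersect_into(&a[mid + 1..], &b[k + 1..], out);
        }
        Err(k) => {
            // x is only in A: drop it and recurse on the two halves.
            dc_intersect_into(&a[..mid], &b[..k], out);
            dc_intersect_into(&a[mid + 1..], &b[k..], out);
        }
    }
}
```

The two recursive calls touch disjoint sub-slices, so they could be handed to separate threads, with results concatenated in order afterwards.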

someplace where the light is strong, the air is cool, and the nights are quiet

08.08.2025 10:32 — 👍 1 🔁 0 💬 0 📌 0

I haven't. what's the easiest way to do that?

I previously (although for different Q) tried godbolt / looking at asm, but was completely overwhelmed by quantity of asm generated and could not parse what was going on at all.

07.08.2025 21:01 — 👍 1 🔁 0 💬 1 📌 0

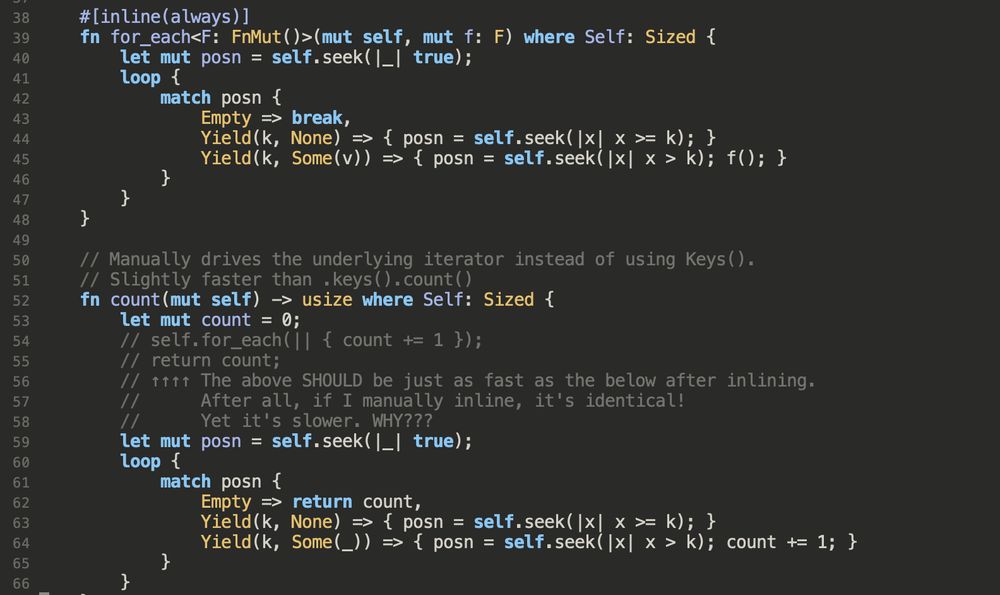

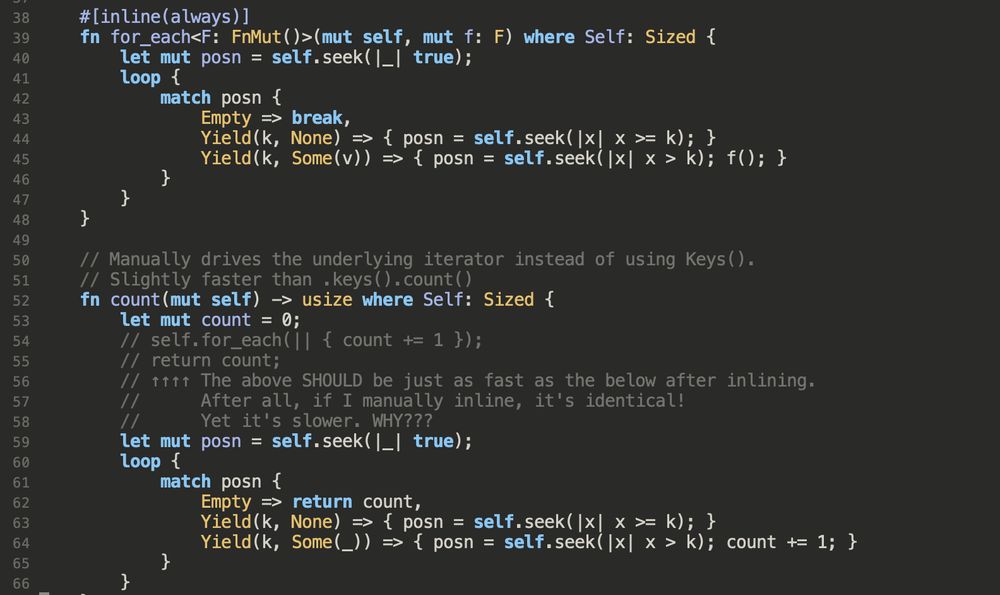

I do not understand inlining in Rust. Even with #[inline(always)], I get worse performance than if I inline manually. Wat?

07.08.2025 20:43 — 👍 1 🔁 0 💬 1 📌 0

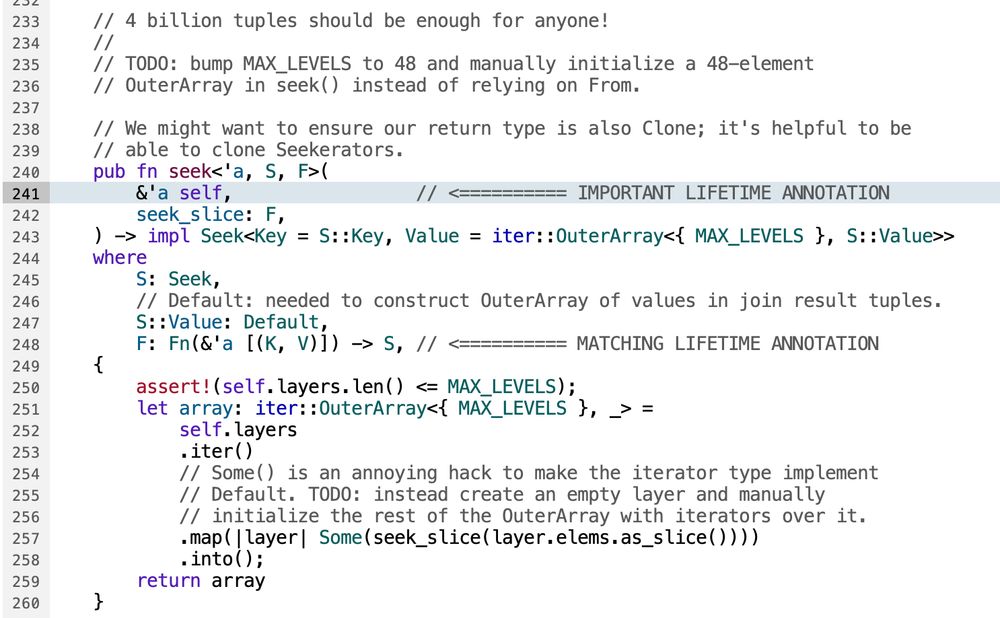

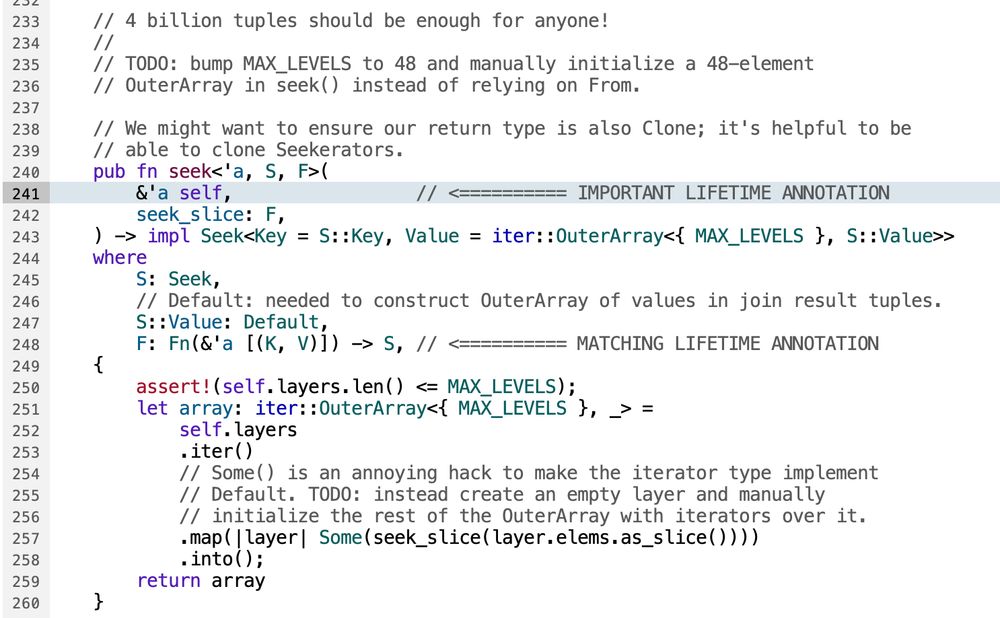

full code, including the seekable iterators with worst-case optimal/fair intersection, here: gist.github.com/rntz/9c10db3...

07.08.2025 16:18 — 👍 1 🔁 0 💬 0 📌 0

yeah, it turned out to be that an assert!(thing >= smaller_thing) was making a subsequent max(smaller_thing, thing) get optimized away. the assert! and max were in completely different functions, though; this only happened after inlining.

07.08.2025 15:55 — 👍 1 🔁 0 💬 0 📌 0
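A toy reconstruction of the shape described in the post above, not the actual code from the gist. All function names are invented, and whether the optimizer really drops the `max` depends on the compiler version and flags, so treat the comments as a plausible story rather than a guarantee.

```rust
/// Hypothetical helper living in "a completely different function":
/// clamps `thing` from below.
#[inline]
fn at_least(smaller_thing: usize, thing: usize) -> usize {
    std::cmp::max(smaller_thing, thing)
}

/// With the assert: once execution gets past it, the compiler is allowed
/// to assume `thing >= smaller_thing` for the rest of the function.
fn with_assert(smaller_thing: usize, thing: usize) -> usize {
    assert!(thing >= smaller_thing);
    // After inlining `at_least`, the max can fold to plain `thing`.
    at_least(smaller_thing, thing)
}

/// Without the assert: nothing relates the two values, so the
/// comparison inside `max` has to stay.
fn without_assert(smaller_thing: usize, thing: usize) -> usize {
    at_least(smaller_thing, thing)
}
```

Both versions return the same value whenever the assert would pass; the difference is only in what the optimizer is entitled to assume.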

I tried optimizing my worst-case optimal seekable iterators in Rust and thought I did pretty good. Then I tried hand-optimizing a little "count the intersection" loop and discovered it's 2x as fast as the iterators. Feh!

07.08.2025 15:54 — 👍 4 🔁 0 💬 1 📌 0

why is removing assert!s making my Rust code run _slower_?

07.08.2025 08:09 — 👍 5 🔁 0 💬 2 📌 0

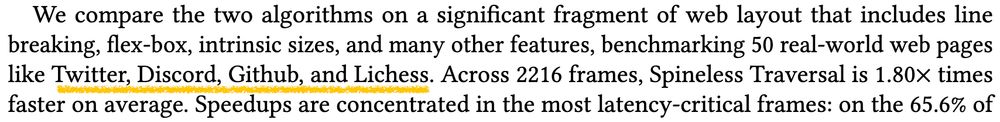

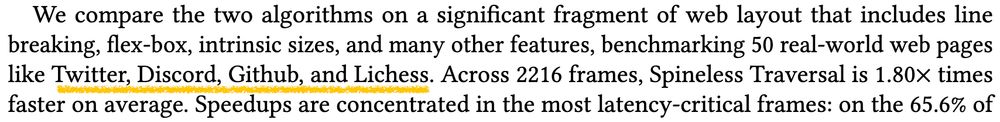

We compare the two algorithms on a significant fragment of web layout that includes line breaking, flex-box, intrinsic sizes, and many other features, benchmarking 50 real-world web pages like Twitter, Discord, Github, and Lichess. Across 2216 frames, Spineless Traversal is 1.80× times faster on average. Speedups are concentrated in the most latency-critical frames: on the 65.6% of

the four genders: Twitter, Discord, Github, Lichess

27.07.2025 15:27 — 👍 6 🔁 2 💬 0 📌 0

This should allow e.g. parallel or, (por bottom true == por true bottom == true).

How hard would it be to make a dependently typed language runtime do (1)?

16.07.2025 08:02 — 👍 1 🔁 0 💬 1 📌 0

I want a PL for "deterministic" "concurrency" where:

1. I can run two exprs in parallel, receiving whichever evaluates "first", first. I have to prove this nondeterminism doesn't affect the result. To make this feasible:

2. I can quotient types. I must prove functions respect the equalities I add.

16.07.2025 08:02 — 👍 1 🔁 0 💬 1 📌 0
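For a feel of what (1) looks like operationally, here is a rough sketch of parallel or using plain threads and a channel. It has none of the proof obligations the thread asks for; the fact that the race doesn't affect the result is just an informal argument in the comments. `por` and `bottom` are illustrative names, and `bottom` models divergence as a thread that parks forever.

```rust
use std::sync::mpsc;
use std::thread;

/// Stand-in for a diverging computation ("bottom"): never returns.
fn bottom() -> bool {
    loop {
        thread::park();
    }
}

/// Parallel or: runs both computations concurrently and answers `true`
/// as soon as either one does, even if the other never finishes.
fn por<F, G>(f: F, g: G) -> bool
where
    F: FnOnce() -> bool + Send + 'static,
    G: FnOnce() -> bool + Send + 'static,
{
    let (tx_f, rx) = mpsc::channel();
    let tx_g = tx_f.clone();
    thread::spawn(move || {
        let _ = tx_f.send(f());
    });
    thread::spawn(move || {
        let _ = tx_g.send(g());
    });
    // Whichever side finishes first is received first. If it says `true`,
    // the answer is `true` no matter which side it was. If it says `false`,
    // the answer is whatever the other side says, so we wait for it
    // (and block forever if that side diverges).
    let first = rx.recv().expect("both computations panicked");
    first || rx.recv().expect("remaining computation panicked")
}
```

The arrival order is nondeterministic, but the returned value is the same for either order, which is the property the hypothetical language would make you prove.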

uploading this classic clip for posterity buttondown.com/jaffray/arch...

12.07.2025 14:15 — 👍 8 🔁 2 💬 0 📌 0

you, a set-theoretic plebeian:

> data Bool = True | False

me, a domain-theoretic sophisticate:

> data Bool = True | Later Bool

09.07.2025 03:00 — 👍 19 🔁 1 💬 0 📌 0

gonna write a paper with exactly two (2) citations

07.07.2025 04:18 — 👍 1 🔁 0 💬 0 📌 0

White mountain ermine hopping in the snow... Enjoy #bluesky 😊

06.07.2025 08:57 — 👍 16389 🔁 2173 💬 302 📌 214

databases are about "and"

Prolog is about "or"

06.07.2025 21:04 — 👍 5 🔁 0 💬 0 📌 0

what if different delimiters determined whether space was application or pipeline?

[xs (filter even) (map square) sum]

brackets = pipeline/Forthish, parens = application/Lispish

30.06.2025 18:11 — 👍 3 🔁 0 💬 1 📌 0
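For comparison, the two readings of the example above map roughly onto method chaining versus nested calls in Rust. This is only an analogy for the proposed syntax; `even`, `square`, and the free functions `filter`, `map`, and `sum` are defined here for illustration.

```rust
fn even(x: &u32) -> bool {
    *x % 2 == 0
}

fn square(x: u32) -> u32 {
    x * x
}

/// Pipeline reading, like [xs (filter even) (map square) sum]:
/// data flows left to right through each stage.
fn pipeline(xs: Vec<u32>) -> u32 {
    xs.into_iter().filter(even).map(square).sum()
}

// Free-function versions so the same computation can be nested.
fn filter(p: fn(&u32) -> bool, xs: Vec<u32>) -> Vec<u32> {
    xs.into_iter().filter(p).collect()
}

fn map(f: fn(u32) -> u32, xs: Vec<u32>) -> Vec<u32> {
    xs.into_iter().map(f).collect()
}

fn sum(xs: Vec<u32>) -> u32 {
    xs.into_iter().sum()
}

/// Application reading, like (sum (map square (filter even xs))):
/// the same stages, written inside out.
fn application(xs: Vec<u32>) -> u32 {
    sum(map(square, filter(even, xs)))
}
```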

the right notion of equality on existential types should be something like bisimulation, right? is there a standard reference for this?

25.06.2025 04:58 — 👍 3 🔁 0 💬 0 📌 0

The Myth of RAM, part I

I'm more interested in the big-O of "memory access" than I am in hashing specifically.

also, someone on fediverse linked me to this blog post series, which not only suggests this holds empirically but also makes exactly your sqrt(n) via black holes argument! :) www.ilikebigbits.com/2014_04_21_m...

23.06.2025 05:40 — 👍 2 🔁 0 💬 0 📌 0

I've heard the argument made that hashtable lookup is only constant-time if memory access is O(1), but it's actually O(log n).

But isn't it actually O(∛n)? In a given time t, I can reach at most O(t³) space, limited by the speed of light.

23.06.2025 01:02 — 👍 4 🔁 0 💬 2 📌 0
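Spelling out the arithmetic behind the post above, under the assumptions that bits are stored at some bounded density and signals travel at most at speed c. The symbol ρ for the density is introduced here and is not from the post.

```latex
% Memory reachable by a round trip within time t fits in a ball of radius ct/2 <= ct:
n \;\le\; \rho \cdot \tfrac{4}{3}\pi (ct)^3
\quad\Longrightarrow\quad
t \;\ge\; \frac{1}{c}\left(\frac{3n}{4\pi\rho}\right)^{1/3}
\;=\; \Omega\!\left(\sqrt[3]{n}\right)
```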

🔥 take: Functional programming isn't really about mathematical functions, but input-output black-box procedures. It can't answer perfectly cogent questions like "for which x values is f(x) = 3"?

🌶️ take: Logic programming _can_ answer these questions. LP is the real FP.

18.06.2025 19:49 — 👍 3 🔁 0 💬 0 📌 0

rust gives me the same feeling as dependent types

"ooh! a fun puzzle: how do I convince the compiler my code is legit?"

(3 days of hyperfocus later)

"whew! Now that I've proven 1 + 1 = 2, I can get on to the thing I started out trying to do..."

17.06.2025 21:37 — 👍 4 🔁 0 💬 1 📌 0

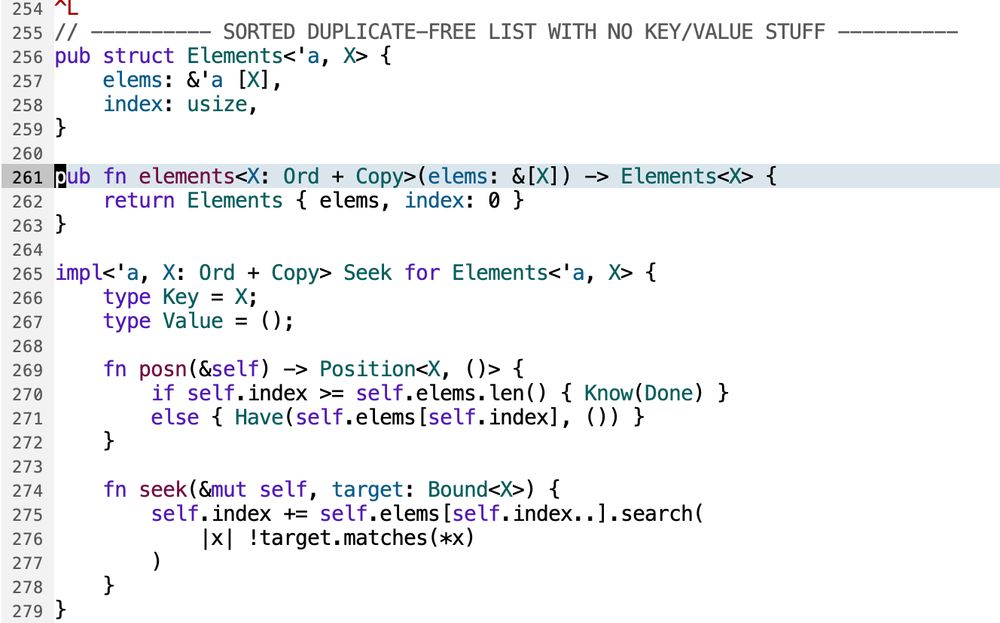

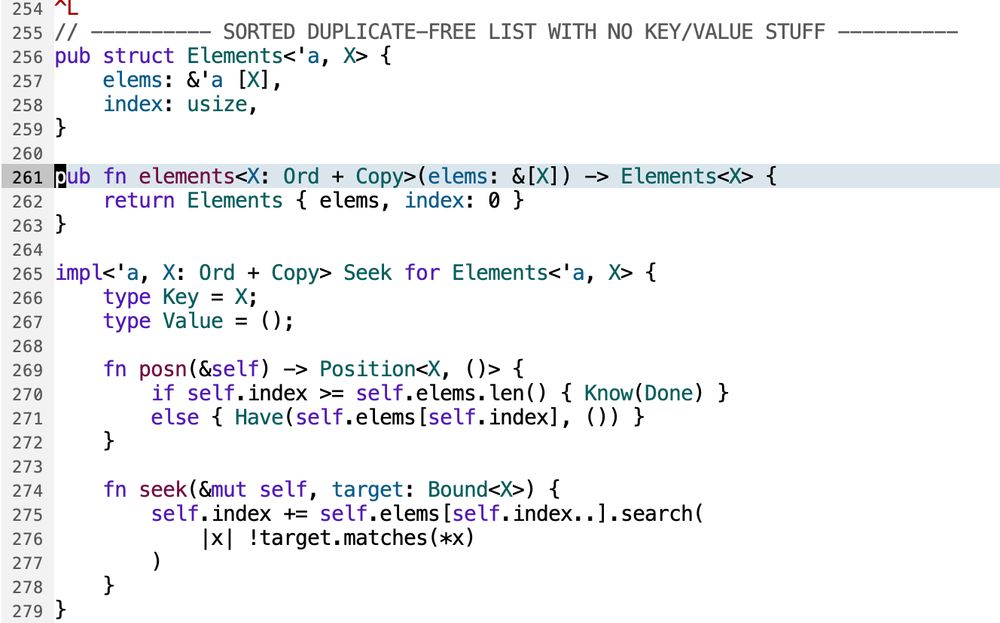

signature of elements for completeness:

fn elements<X: Ord + Copy>(elems: &[X]) -> Elements<X>

17.06.2025 00:41 — 👍 0 🔁 0 💬 0 📌 0

I figured it out by blindly stumbling around until I added the right lifetime annotations to seek().

17.06.2025 00:38 — 👍 0 🔁 0 💬 2 📌 0
