Left: 1942 "War Map" produced by Esso, now ExxonMobil, charting oil's role in transportation as "key to victory". Right: 2024 map produced by NVIDIA as part of an investor presentation slide deck describing its involvement in sovereign AI efforts globally. On the left panel: "Nations are awakening to the imperative to produce artificial intelligence using their own infrastructure, data, workforces and business networks."

The Commodification of AI Sovereignty: Lessons from the Fight for Sovereign Oil (with Kate E. Creasey, Taylor Lynn Curtis, and @geomblog.bsky.social) is out now on arXiv: www.arxiv.org/abs/2601.11763.

24.01.2026 06:27 —

👍 11

🔁 3

💬 3

📌 0

Making Sense of AI Policy Using Computational Tools | TechPolicy.Press

A new report examines how to use computational tools to evaluate policy, with AI policy as a case study.

We released a new report in partnership with the Center for Tech Responsibility at Brown University on how policymakers and researchers can better analyze AI legislation to protect our civil rights and liberties.

10.01.2026 22:36 —

👍 175

🔁 59

💬 4

📌 1

Briefing: Grok brings nonconsensual image abuse to the masses

Plus: a new feature in the Meta Ad Library and a new Telegram investigation tool.

Today on @indicator.media's free weekly briefing: The staggering impunity of xAI, which turned its abusive image generator on its own users in full view and has barely done anything to contain it.

09.01.2026 15:50 —

👍 9

🔁 7

💬 1

📌 2

@mantzarlis.com and folks at @indicator.media have done incredible reporting & investigation on the AI nudification ecosystem that I'm constantly citing in my research on AIG-NCII - appreciate all the work you do!

04.12.2025 20:45 —

👍 2

🔁 2

💬 0

📌 0

Very glad to be a part of a new paper detailing how developers and developer platforms can prevent AIG-NCII, a form of image-based sexual abuse that disproportionately harms women and girls. Thanks to all the collaborators and Max Kamachee & @scasper.bsky.social for leading this important project!

04.12.2025 20:38 —

👍 4

🔁 2

💬 0

📌 0

Thanks to collaborators! This was a really interesting paper for me to work on, and it took a special group of interdisciplinary people to get it done.

Max Kamachee

@r-jy.bsky.social

@michelleding.bsky.social

@ankareuel.bsky.social

@stellaathena.bsky.social

@dhadfieldmenell.bsky.social

04.12.2025 17:32 —

👍 2

🔁 2

💬 0

📌 0

Spotify thought i was 87 if that makes you feel better

04.12.2025 03:41 —

👍 2

🔁 0

💬 1

📌 0

ACM members (and computing researchers who should be members) interested in contributing should join the subcommittee's mailing list!

One of our goals here is to build policy coalitions across institutions so we can do more as a collective 💪 and balance special interest groups.

25.11.2025 17:43 —

👍 16

🔁 6

💬 1

📌 0

PSA: tips to protect yourself from scams on Signal.

Every major comms platform has to contend w phishing, impersonation, & scams. Sadly.

Signal is major, and as we've grown we've heard about more of these attacks--scammy people pretending to be something or someone to trick and abuse others. 1/

11.11.2025 18:13 —

👍 546

🔁 241

💬 3

📌 12

Who has the luxury to think?

Researchers are responsible for more than just papers.

Hi friends! After much thinking & doodling, I'm excited to share my new substack "Finding Peace in an AI-Everywhere World" 🌷 🌏

Here is the first article based on some reflections I had at COLM: michellelding.substack.com/p/who-has-th...

20.10.2025 15:35 —

👍 3

🔁 1

💬 1

📌 0

Technologies like synthetic data, evaluations, and red-teaming are often framed as enhancing AI privacy and safety. But what if their effects lie elsewhere?

In a new paper with @realbrianjudge.bsky.social at #EAAMO25, we pull back the curtain on AI safety's toolkit. (1/n)

arxiv.org/pdf/2509.22872

17.10.2025 21:09 —

👍 17

🔁 6

💬 1

📌 1

Grey background with CNTR logo and black text that says:

Testing LLMs in a sandbox isn’t responsible. Focusing on community uses and needs is.

Michelle L. Ding, Jo Gasior-Kavishe, Victor Ojewale, and Suresh Venkatasubramanian

Third Workshop on Socially Responsible Language Modelling Research (SoLaR) 2025

Full abstract available here bit.ly/solar-cntr

Photos of authors in order of names above

Brown Data Science Institute, Center for Technological Responsibility, Reimagination, and Redesign

11.10.2025 16:12 —

👍 2

🔁 0

💬 0

📌 0

Grey background with black text that says:

Slide 1: What can you do as a researcher?

Look into your local community - develop sustainable, long-term partnerships with city-level or state-level coalitions, networks, news agencies, or non-profit organizations that do on-the-ground work to discover harms

University researchers – look beyond your department into other disciplines in the humanities and social sciences to ground your research in interdisciplinary methods

Industry researchers – look towards trust & safety teams, policy teams, human rights teams and establish collaborations where research reflects real uses. Or advocate to (re)establish these teams.

Slide 2: Contribute to a crowdsourced, open source database: bit.ly/solar-cntr

Case studies are a great way for us to share, learn, teach, and reflect on methods together.

At SoLaR 2025, we hope to crowdsource a spreadsheet of community-driven LLM evaluation case studies that orient us towards contextualized, participatory, expertise-driven evaluations.

Please add any of your own or related work to our spreadsheet :) And let’s stay connected! We also have a tab for contact info!

More resources here: bit.ly/solar-cntr 🌱 Happy to chat with anyone interested in learning more!

11.10.2025 16:07 —

👍 3

🔁 0

💬 1

📌 0

Brown background with CNTR logo with white text that says:

Responsibility is not marginally improving LLMs to fit every use case.

Responsibility is knowing when to use them and when not to.

11.10.2025 16:04 —

👍 2

🔁 0

💬 1

📌 0

Brown background with CNTR logo with white text that says:

In LLM evaluations, we - researchers - are not the experts.

We are the facilitators…

…who enable communities to evaluate LLMs for themselves

When we allow their expertise and lived experiences to guide our methodology…

We will organically develop more meaningful scientific insights…

And escape the vicious cycle of abstract benchmarks that only serve to misdirect and confuse us.

11.10.2025 16:02 —

👍 1

🔁 0

💬 1

📌 0

Brown background and CNTR logo with white text that says:

Community-driven evaluations yield good scientific insights.

They strip away marketing, hype, and unnecessary abstractions

And reveal the contexts where LLMs might genuinely add value and where they do not.

This opens space for meaningful dialogue between stakeholders across academia, industry, government, and civil society

And empowers the public to engage with and assess for themselves whether the tools they are being presented with can be used responsibly.

Thank you everyone who came to our talk! Here are some highlights:

11.10.2025 16:01 —

👍 0

🔁 0

💬 1

📌 0

💡We kicked off the SoLaR workshop at #COLM2025 with a great opinion talk by @michelleding.bsky.social & Jo Gasior Kavishe (joint work with @victorojewale.bsky.social and @geomblog.bsky.social) on "Testing LLMs in a sandbox isn't responsible. Focusing on community use and needs is."

10.10.2025 14:31 —

👍 15

🔁 4

💬 1

📌 0

Agree to Disagree? A Meta-Evaluation of LLM Misgendering

Numerous methods have been proposed to measure LLM misgendering, including probability-based evaluations (e.g., automatically with templatic sentences) and generation-based evaluations (e.g., with aut...

Have you or a loved one been misgendered by an LLM? How can we evaluate LLMs for misgendering? Do different evaluation methods give consistent results?

Check out our preprint led by the newly minted Dr. @arjunsubgraph.bsky.social, and with Preethi Seshadri, Dietrich Klakow, Kai-Wei Chang, Yizhou Sun

11.06.2025 13:28 —

👍 15

🔁 4

💬 1

📌 3

Third Workshop on Socially Responsible Language Modelling Research (SoLaR) 2025

COLM 2025 in-person Workshop, October 10th at the Palais des Congrès in Montreal, Canada

Hi #COLM2025! 🇨🇦 I will be presenting a talk on the importance of community-driven LLM evaluations based on an opinion abstract I wrote with Jo Kavishe, @victorojewale.bsky.social and @geomblog.bsky.social tomorrow at 9:30am in 524b for solar-colm.github.io

Hope to see you there!

09.10.2025 19:32 —

👍 9

🔁 6

💬 1

📌 0

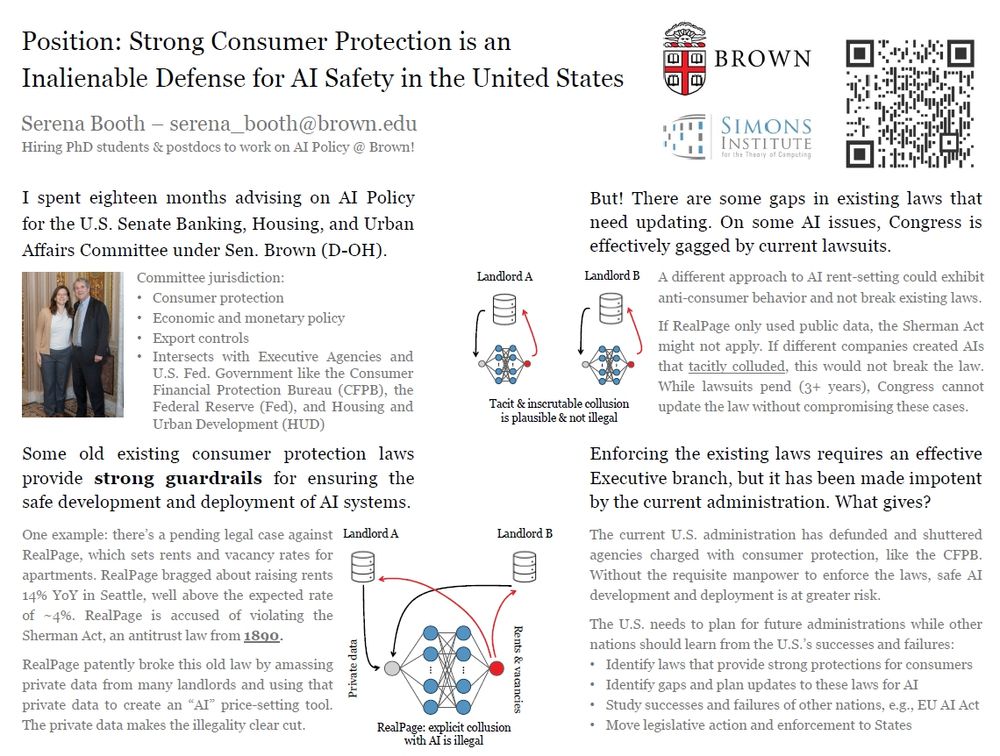

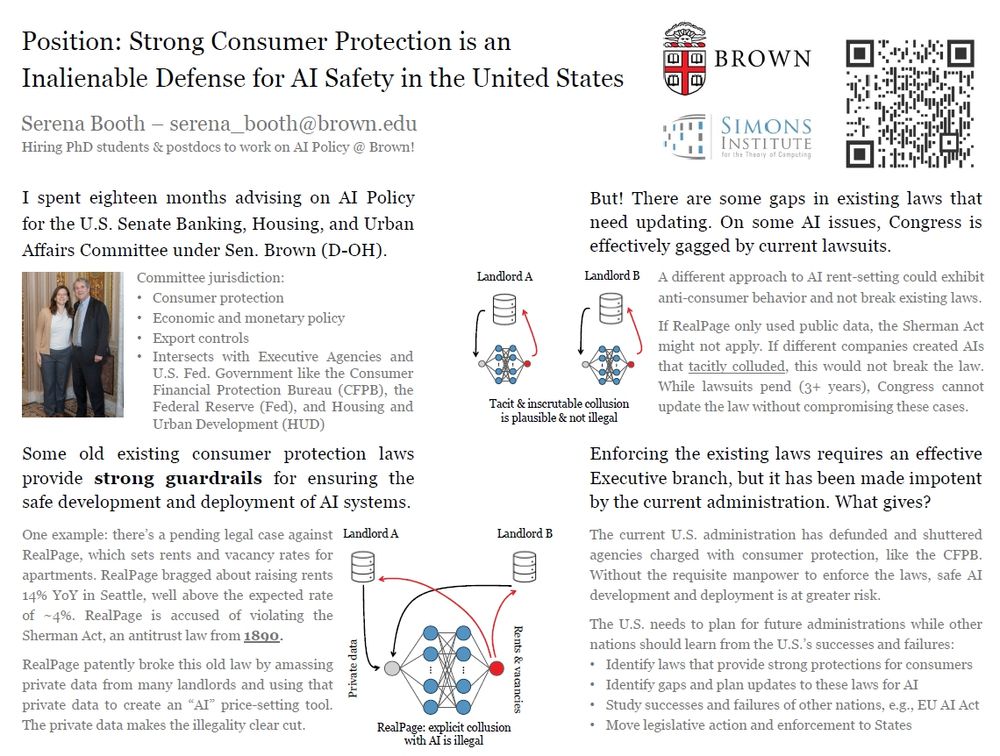

A poster for the paper "Position: Strong Consumer Protection is an Inalienable Defense for AI Safety in the United States"

I'll be presenting a position paper about consumer protection and AI in the US at ICML. I have a surprisingly optimistic take: our legal structures are stronger than I anticipated when I went to work on this issue in Congress.

Is everything broken rn? Yes. Will it stay broken? That's on us.

14.07.2025 13:01 —

👍 19

🔁 5

💬 1

📌 0

'Sovereignty' Myth-Making in the AI Race | TechPolicy.Press

Tech companies stand to gain by encouraging the illusion of a race for 'sovereign' AI, write Rui-Jie Yew, Kate Elizabeth Creasey, Suresh Venkatasubramanian.

With their 'Sovereignty as a Service' offerings, tech companies are encouraging the illusion of a race for sovereign control of AI while being the true powers behind the scenes, write Rui-Jie Yew, Kate Elizabeth Creasey, Suresh Venkatasubramanian.

07.07.2025 13:07 —

👍 15

🔁 11

💬 0

📌 6

'Sovereignty' Myth-Making in the AI Race | TechPolicy.Press

Tech companies stand to gain by encouraging the illusion of a race for 'sovereign' AI, write Rui-Jie Yew, Kate Elizabeth Creasey, Suresh Venkatasubramanian.

Very excited to see this piece out in @techpolicypress.bsky.social today. This was written together with @r-jy.bsky.social and Kate Elizabeth Creasey (a historian here at Brown), and calls out what we think is a scary and interesting rhetorical shift.

www.techpolicy.press/sovereignty-...

07.07.2025 13:50 —

👍 21

🔁 8

💬 0

📌 4